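Preface:

My machine ran CentOS 7.9 with 8 GB of memory and 50 GB of disk. The local deployment failed with insufficient-resource errors, so I did not continue with it.

Recommended minimum: 10 GB of memory and 70 GB of storage.

This was set up on site at the offline AI hands-on workshop (steps 1 to 5 were skipped).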

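1. Install [Python 3.9.5] and [pip]

The required version range appears to be greater than 3.9 and less than 4.0; I used 3.9.6. The official site was slow to download from, so I used a domestic mirror.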

wget https://mirrors.huaweicloud.com/python/3.9.5/Python-3.9.5.tgz

Install the build dependencies:

yum install zlib-devel bzip2-devel openssl-devel ncurses-devel sqlite-devel readline-devel tk-devel gcc make libffi-devel
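
Once the interpreter is built, it can help to confirm that it falls inside the expected range. The sketch below assumes the range is 3.9 up to but not including 4.0, which is only the guess stated above; `in_supported_range` is a hypothetical helper and not part of the workshop project.

```python
import sys


def in_supported_range(version, low=(3, 9), high=(4, 0)):
    """Return True if low <= version < high, comparing (major, minor) only."""
    major_minor = tuple(version[:2])
    return low <= major_minor < high


if __name__ == "__main__":
    ok = in_supported_range(sys.version_info)
    status = "inside" if ok else "outside"
    print(f"Python {sys.version.split()[0]} is {status} the assumed range")
```

Run it with the interpreter you intend to use for Poetry, for example `/usr/local/python3/bin/python3`.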

2. Install [Poetry]

export PATH="$PATH:/usr/local/python3/bin"

/usr/local/python3/bin/python3 -m pip install poetry

I also ran into a problem here:

raise ImportError(

ImportError: urllib3 v2 only supports OpenSSL 1.1.1+, currently the 'ssl' module is compiled with 'OpenSSL 1.0.2k-fips 26 Jan 2017'. See: https://github.com/urllib3/urllib3/issues/2168

The fix was to uninstall urllib3 and reinstall a 1.26.x release:

pip3 uninstall urllib3

pip3 install urllib3==1.26.15
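
The error comes from the OpenSSL version that Python's `ssl` module was compiled against, so it can be checked before installing anything. This is a minimal sketch that only compares the version triple against 1.1.1, the threshold named in the error message; `supports_urllib3_v2` is a hypothetical helper, and urllib3 itself may apply further checks.

```python
import ssl


def supports_urllib3_v2(openssl_version_info):
    """Compare the (major, minor, fix) triple against OpenSSL 1.1.1."""
    return tuple(openssl_version_info[:3]) >= (1, 1, 1)


if __name__ == "__main__":
    print(ssl.OPENSSL_VERSION)
    if supports_urllib3_v2(ssl.OPENSSL_VERSION_INFO):
        print("urllib3 v2 should import against this ssl module")
    else:
        print("pin urllib3 below 2, for example urllib3==1.26.15")
```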

The download was very slow for me. For a faster route, see the OceanBase community Q&A post "#AI 实战营 #使用OB搭建RAG 聊天机器人" (AI workshop: building a RAG chatbot with OB).

3. Clone the project

git clone https://gitee.com/oceanbase-devhub/ai-workshop-2024.git

4. Install Docker

yum install -y docker-ce

5. Deploy an OceanBase cluster

5.1 Start the Docker service

systemctl start docker

5.2 Start an OceanBase Docker container

docker run --ulimit stack=4294967296 --name=ob433 -e MODE=mini -e OB_MEMORY_LIMIT=8G -e OB_DATAFILE_SIZE=10G -e OB_CLUSTER_NAME=ailab2024 -p 127.0.0.1:2881:2881 -d quay.io/oceanbase/oceanbase-ce:4.3.3.1-101000012024102216

If the command succeeds, it prints the container ID, for example:

f7095ace669670874d67bf43c42bdbd046430d954e6d3dda9a708ee99d4bd607
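
A container ID only means the container started, not that the host has enough resources for it. The sketch below is one way to compare available memory and free disk against what the container will ask for. The helper names are hypothetical, the thresholds are parameters you choose yourself, and reading `/proc/meminfo` assumes a Linux host.

```python
import shutil


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field: value in KiB}."""
    fields = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            fields[name.strip()] = int(parts[0])
    return fields


def shortfalls(mem_available_kib, disk_free_bytes, need_mem_gib, need_disk_gib):
    """Return a list of problems; an empty list means the check passed."""
    gib = 1024 ** 3
    problems = []
    if mem_available_kib * 1024 < need_mem_gib * gib:
        problems.append(f"memory: need {need_mem_gib} GiB")
    if disk_free_bytes < need_disk_gib * gib:
        problems.append(f"disk: need {need_disk_gib} GiB")
    return problems


def check_host(need_mem_gib, need_disk_gib, path="/"):
    """Read the live values on a Linux host and report any shortfalls."""
    with open("/proc/meminfo") as handle:
        mem = parse_meminfo(handle.read())
    free = shutil.disk_usage(path).free
    return shortfalls(mem.get("MemAvailable", 0), free, need_mem_gib, need_disk_gib)
```

For the command above, `check_host(8, 19)` would be a reasonable call: 8 matches `OB_MEMORY_LIMIT=8G`, and 19 is the disk figure that the boot log in step 5.3 reports as needed.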

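5.3 Check whether OceanBase initialization has finished

After the container starts, you can check the initialization status of the OceanBase database with the following command: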

docker logs -f ob433

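Initialization takes about 2 to 3 minutes. It is complete once the log ends with boot success! (that final line is required).

On my machine it failed instead, because the host was under-provisioned: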

[root@localhost ~]# docker logs -f ob433

+--------------------------------------------------+

| Cluster List |

+------+-------------------------+-----------------+

| Name | Configuration Path | Status (Cached) |

+------+-------------------------+-----------------+

| demo | /root/.obd/cluster/demo | stopped |

+------+-------------------------+-----------------+

Trace ID: 715efe88-a87d-11ef-b400-0242ac110002

If you want to view detailed obd logs, please run: obd display-trace 715efe88-a87d-11ef-b400-0242ac110002

repository/

.....................................................

.....................................................

.....................................................

[WARN] OBD-1007: (172.17.0.2) The recommended number of stack size is unlimited (Current value: 4194304)

[WARN] OBD-1017: (172.17.0.2) The value of the "vm.max_map_count" must be within [327600, 1310720] (Current value: 65530, Recommended value: 655360)

[WARN] OBD-1017: (172.17.0.2) The value of the "fs.file-max" must be greater than 6573688 (Current value: 760168, Recommended value: 6573688)

[ERROR] OBD-2000: (172.17.0.2) not enough memory. (Free: 248M, Buff/Cache: 4G, Need: 8G), Please reduce the `memory_limit` or `memory_limit_percentage`

[WARN] OBD-1012: (172.17.0.2) clog and data use the same disk (/)

[ERROR] OBD-2003: (172.17.0.2) / not enough disk space. (Avail: 14G, Need: 19G), Please reduce the `datafile_size` or `datafile_disk_percentage`

See https://www.oceanbase.com/product/ob-deployer/error-codes .

Trace ID: 786cb5f8-a87d-11ef-a08d-0242ac110002

If you want to view detailed obd logs, please run: obd display-trace 786cb5f8-a87d-11ef-a08d-0242ac110002

boot failed!

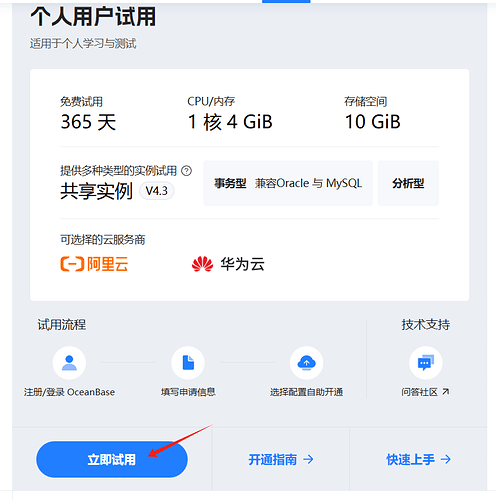
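
In a long boot log the two `[ERROR]` lines are easy to miss among the warnings. As a rough sketch, the lines can be filtered by level with a regular expression. The pattern is inferred only from the log lines shown above and is not an official obd format; `obd_findings` is a hypothetical helper.

```python
import re

# Matches lines such as "[ERROR] OBD-2000: (172.17.0.2) not enough memory. ..."
OBD_LINE = re.compile(r"^\[(ERROR|WARN)\] (OBD-\d+): \(([^)]+)\) (.*)$")


def obd_findings(log_text, level="ERROR"):
    """Return (code, host, message) tuples for log lines of the given level."""
    findings = []
    for line in log_text.splitlines():
        match = OBD_LINE.match(line.strip())
        if match and match.group(1) == level:
            findings.append((match.group(2), match.group(3), match.group(4)))
    return findings
```

Pass it the text captured from `docker logs ob433`. For the log above it would return the OBD-2000 (memory) and OBD-2003 (disk) entries, which are the two that caused boot failed!.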

6. Set up a personal OB Cloud instance

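- Use the free trial of the OB Cloud database. For registration and instance provisioning, see "OB Cloud 云数据库 365 天免费试用" (OB Cloud database 365-day free trial).

- Create a database and an account.

- Import the 25M of data.

- Configure the allowlist and obtain the connection string.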

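7. Register an Alibaba Cloud Bailian (阿里云百炼) account and get an API key

8. Set the environment variables in .env

The chatbot reads its settings from environment variables:

cp .env.example .env

# Update the values in .env, especially API_KEY and the database connection settings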

vi .env

API_KEY= # the API key from step 7

LLM_MODEL="qwen-turbo-2024-11-01"

LLM_BASE_URL="https://dashscope.aliyuncs.com/compatible-mode/v1"

HF_ENDPOINT=https://hf-mirror.com

BGE_MODEL_PATH=BAAI/bge-m3

OLLAMA_URL=

OLLAMA_TOKEN=

OPENAI_EMBEDDING_API_KEY= # the API key from step 7

OPENAI_EMBEDDING_BASE_URL="https://dashscope.aliyuncs.com/compatible-mode/v1/embeddings"

OPENAI_EMBEDDING_MODEL=text-embedding-v3

UI_LANG="zh"

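# If you are using an OB Cloud instance, update the variables below from the instance's connection details

DB_HOST="host (IP) from the step 6 connection string"

DB_PORT="port from the step 6 connection string"

DB_USER="user created in step 6"

DB_NAME="test"

DB_PASSWORD="password of the account from step 6"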
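
A blank value in .env tends to surface only later as a confusing connection or authentication error, so a quick check up front can save time. The sketch below is a deliberately simple KEY=VALUE parser, not a full dotenv implementation. The `REQUIRED` tuple is my assumption about which keys matter most, and `parse_env` and `missing_keys` are hypothetical helpers.

```python
def parse_env(text):
    """Parse simple KEY=VALUE lines; a sketch, not a full dotenv parser."""
    env = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        value = value.strip()
        if value[:1] in ('"', "'"):
            # Quoted value: keep everything up to the matching closing quote.
            end = value.find(value[0], 1)
            value = value[1:end] if end != -1 else value[1:]
        else:
            # Unquoted value: drop anything after "#", so quote values that contain "#".
            value = value.split("#", 1)[0].strip()
        env[key.strip()] = value
    return env


# Assumed list of keys that must not be empty; adjust to your setup.
REQUIRED = ("API_KEY", "DB_HOST", "DB_PORT", "DB_USER", "DB_NAME", "DB_PASSWORD")


def missing_keys(env, required=REQUIRED):
    """Return the required keys that are absent or empty."""
    return [key for key in required if not env.get(key)]
```

Calling `missing_keys(parse_env(open(".env").read()))` from the project directory lists whatever still needs filling in.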

9. Test the remote database connection

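# Test with the connection string from step 6 (the host, port, and user below match the local container from step 5; substitute your own values)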

mysql -h127.0.0.1 -P2881 -uroot@test -A -e "show databases"

bash utils/connect_db.sh

# If you land in a MySQL session, the environment variables are set correctly
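
To run the same test against the values in .env rather than hard-coded ones, the client arguments can be assembled from the DB_* settings. This is a minimal sketch under that assumption; `mysql_command` is a hypothetical helper and is not what `utils/connect_db.sh` necessarily does.

```python
import shlex


def mysql_command(env, statement="show databases"):
    """Build a mysql client argument list from .env-style values."""
    args = [
        "mysql",
        f"-h{env['DB_HOST']}",
        f"-P{env['DB_PORT']}",
        f"-u{env['DB_USER']}",
        "-A",
    ]
    if env.get("DB_PASSWORD"):
        # Note: a password passed this way is visible in the process list.
        args.append(f"-p{env['DB_PASSWORD']}")
    if env.get("DB_NAME"):
        args += ["-D", env["DB_NAME"]]
    args += ["-e", statement]
    return args


if __name__ == "__main__":
    example = {"DB_HOST": "127.0.0.1", "DB_PORT": "2881", "DB_USER": "root@test"}
    print(shlex.join(mysql_command(example)))
```

The returned list can be handed to `subprocess.run` directly, which avoids shell quoting issues with special characters in the password.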

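10. Prepare the document data

10.1 Clone the documentation repository

First, use git to clone the documentation locally. Of the observer and obd documentation, only the observer docs (oceanbase-doc) are cloned by the commands below.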

git clone --single-branch --branch V4.3.4 https://github.com/oceanbase/oceanbase-doc.git doc_repos/oceanbase-doc

# If GitHub is slow for you, clone the Gitee mirror instead

git clone --single-branch --branch V4.3.4 https://gitee.com/oceanbase-devhub/oceanbase-doc.git doc_repos/oceanbase-doc

10.2 Normalize the document format

# Convert the document headings to standard Markdown format

poetry run python convert_headings.py doc_repos/oceanbase-doc/zh-CN

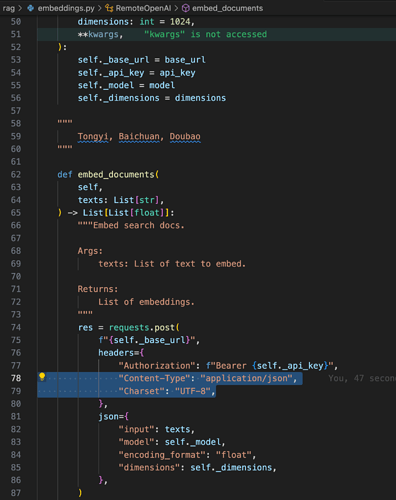

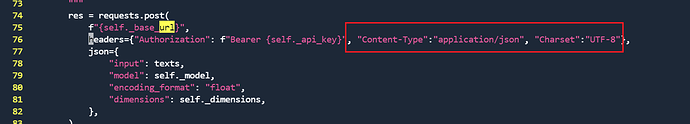
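
The script itself is not shown here, so the following is only an illustration of what heading normalization can look like. It assumes the non-standard headings are underlined ("setext") titles that should become `#` and `##` headings. `setext_to_atx` is a hypothetical function and not the project's `convert_headings.py`.

```python
import re

# A non-blank title line followed by a line made only of "=" or "-".
SETEXT = re.compile(
    r"^(?P<title>[^\n]*\S[^\n]*)\n(?P<rule>=+|-+)[ \t]*$",
    re.MULTILINE,
)


def setext_to_atx(markdown):
    """Rewrite underlined headings as '# Title' (=) or '## Title' (-).

    Limitation: YAML front matter and horizontal rules that directly
    follow a text line would also be rewritten; handle those separately.
    """
    def replace(match):
        level = "#" if match.group("rule").startswith("=") else "##"
        return f"{level} {match.group('title').strip()}"

    return SETEXT.sub(replace, markdown)
```

Normalizing to `#` headings keeps any later heading-based splitting simple, since only one heading syntax has to be recognized.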

10.3 Convert the documents to vectors and insert them into the OceanBase database

# Generate document vectors and metadata

poetry run python embed_docs.py --doc_base doc_repos/oceanbase-doc/zh-CN/640.ob-vector-search

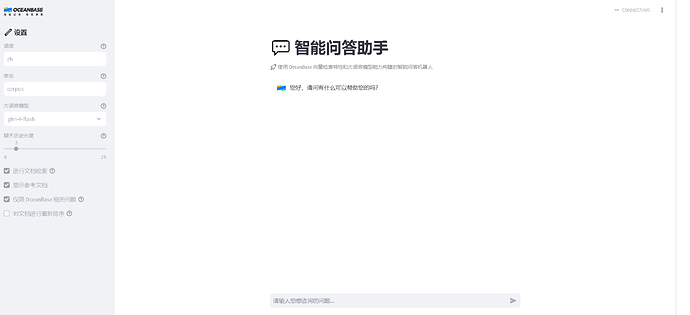
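
Before text can be embedded it is usually cut into chunks, each stored with metadata such as the source file and section title. The sketch below shows one common approach, splitting on Markdown headings. It is an assumption about the general technique rather than a description of `embed_docs.py`, the function names are hypothetical, and the embedding call itself is omitted because it depends on the model configured in .env.

```python
import re

HEADING = re.compile(r"^(#{1,6})\s+(.*\S)\s*$")


def _chunk(source, title, lines):
    """Build one chunk dict, or None if the section has no body text."""
    text = "\n".join(lines).strip()
    if not text:
        return None
    return {"source": source, "title": title, "text": text}


def split_by_headings(markdown, source):
    """Split Markdown into one chunk per heading, with simple metadata.

    Limitation: a line starting with "#" inside a fenced code block is
    also treated as a heading.
    """
    chunks, title, lines = [], "", []
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            chunks.append(_chunk(source, title, lines))
            title, lines = match.group(2), []
        else:
            lines.append(line)
    chunks.append(_chunk(source, title, lines))
    return [chunk for chunk in chunks if chunk is not None]
```

Each returned dict would then be passed to the embedding model, and the resulting vector stored alongside `source` and `title` so that answers can cite where they came from.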

11. Start the chat UI

Run the following command to start the chat UI:

poetry run streamlit run --server.runOnSave false chat_ui.py

Open the URL shown in the terminal to reach the chatbot application.

You can now view your Streamlit app in your browser.

Local URL: http://localhost:8501

Network URL: http://172.xxx.xxx.xxx:8501

External URL: http://xxx.xxx.xxx.xxx:8501 # This is the URL to open in your browser