obd display-trace 1ef13938-24a9-11f0-a7c8-005056b7a1e2

[2025-04-29 11:22:03.568] [DEBUG] - cmd: ['demo']

[2025-04-29 11:22:03.568] [DEBUG] - opts: {'servers': None, 'components': None, 'force_delete': None, 'strict_check': None, 'without_parameter': True}

[2025-04-29 11:22:03.569] [DEBUG] - mkdir /root/.obd/lock/

[2025-04-29 11:22:03.569] [DEBUG] - unknown lock mode

[2025-04-29 11:22:03.569] [DEBUG] - try to get share lock /root/.obd/lock/global

[2025-04-29 11:22:03.569] [DEBUG] - share lock /root/.obd/lock/global, count 1

[2025-04-29 11:22:03.569] [DEBUG] - Get Deploy by name

[2025-04-29 11:22:03.569] [DEBUG] - mkdir /root/.obd/cluster/

[2025-04-29 11:22:03.569] [DEBUG] - mkdir /root/.obd/config_parser/

[2025-04-29 11:22:03.570] [DEBUG] - try to get exclusive lock /root/.obd/lock/deploy_demo

[2025-04-29 11:22:03.570] [DEBUG] - exclusive lock /root/.obd/lock/deploy_demo, count 1

[2025-04-29 11:22:03.577] [DEBUG] - Deploy status judge

[2025-04-29 11:22:03.577] [INFO] Get local repositories

[2025-04-29 11:22:03.578] [DEBUG] - mkdir /root/.obd/repository

[2025-04-29 11:22:03.578] [DEBUG] - Get local repository obagent-4.2.2-19739a07a12eab736aff86ecf357b1ae660b554e

[2025-04-29 11:22:03.578] [DEBUG] - Search repository obagent version: 4.2.2, tag: 19739a07a12eab736aff86ecf357b1ae660b554e, release: None, package_hash: None

[2025-04-29 11:22:03.579] [DEBUG] - try to get share lock /root/.obd/lock/mirror_and_repo

[2025-04-29 11:22:03.579] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo, count 1

[2025-04-29 11:22:03.579] [DEBUG] - mkdir /root/.obd/repository/obagent

[2025-04-29 11:22:03.581] [DEBUG] - Found repository obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2025-04-29 11:22:03.581] [DEBUG] - Get local repository obproxy-ce-4.2.3.0-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2025-04-29 11:22:03.581] [DEBUG] - Search repository obproxy-ce version: 4.2.3.0, tag: 0490ebc04220def8d25cb9cac9ac61a4efa6d639, release: None, package_hash: None

[2025-04-29 11:22:03.581] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo, count 2

[2025-04-29 11:22:03.581] [DEBUG] - mkdir /root/.obd/repository/obproxy-ce

[2025-04-29 11:22:03.583] [DEBUG] - Found repository obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2025-04-29 11:22:03.583] [DEBUG] - Get local repository oceanbase-ce-4.3.0.1-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:03.583] [DEBUG] - Search repository oceanbase-ce version: 4.3.0.1, tag: c4a03c83614f50c99ddb1c37dda858fa5d9b14b7, release: None, package_hash: None

[2025-04-29 11:22:03.583] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo, count 3

[2025-04-29 11:22:03.583] [DEBUG] - mkdir /root/.obd/repository/oceanbase-ce

[2025-04-29 11:22:03.585] [DEBUG] - Found repository oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:03.585] [DEBUG] - Get local repository grafana-7.5.17-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2025-04-29 11:22:03.585] [DEBUG] - Search repository grafana version: 7.5.17, tag: 1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6, release: None, package_hash: None

[2025-04-29 11:22:03.585] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo, count 4

[2025-04-29 11:22:03.586] [DEBUG] - mkdir /root/.obd/repository/grafana

[2025-04-29 11:22:03.587] [DEBUG] - Found repository grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2025-04-29 11:22:03.588] [DEBUG] - Get local repository prometheus-2.37.1-58913c7606f05feb01bc1c6410346e5fc31cf263

[2025-04-29 11:22:03.588] [DEBUG] - Search repository prometheus version: 2.37.1, tag: 58913c7606f05feb01bc1c6410346e5fc31cf263, release: None, package_hash: None

[2025-04-29 11:22:03.588] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo, count 5

[2025-04-29 11:22:03.588] [DEBUG] - mkdir /root/.obd/repository/prometheus

[2025-04-29 11:22:03.590] [DEBUG] - Found repository prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2025-04-29 11:22:03.709] [DEBUG] - Get deploy config

[2025-04-29 11:22:03.727] [INFO] Search plugins

[2025-04-29 11:22:03.727] [DEBUG] - Searching start_check plugin for components …

[2025-04-29 11:22:03.727] [DEBUG] - Searching start_check plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2025-04-29 11:22:03.728] [DEBUG] - mkdir /root/.obd/plugins

[2025-04-29 11:22:03.728] [DEBUG] - Found for obagent-py_script_start_check-1.3.0 for obagent-4.2.2

[2025-04-29 11:22:03.729] [DEBUG] - Searching start_check plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2025-04-29 11:22:03.729] [DEBUG] - Found for obproxy-ce-py_script_start_check-4.2.3 for obproxy-ce-4.2.3.0

[2025-04-29 11:22:03.729] [DEBUG] - Searching start_check plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:03.729] [DEBUG] - Found for oceanbase-ce-py_script_start_check-4.2.2.0 for oceanbase-ce-4.3.0.1

[2025-04-29 11:22:03.729] [DEBUG] - Searching start_check plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2025-04-29 11:22:03.729] [DEBUG] - Found for grafana-py_script_start_check-7.5.17 for grafana-7.5.17

[2025-04-29 11:22:03.729] [DEBUG] - Searching start_check plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2025-04-29 11:22:03.729] [DEBUG] - Found for prometheus-py_script_start_check-2.37.1 for prometheus-2.37.1

[2025-04-29 11:22:03.730] [DEBUG] - Searching create_tenant plugin for components …

[2025-04-29 11:22:03.730] [DEBUG] - Searching create_tenant plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2025-04-29 11:22:03.730] [DEBUG] - No such create_tenant plugin for obagent-4.2.2

[2025-04-29 11:22:03.730] [DEBUG] - Searching create_tenant plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2025-04-29 11:22:03.730] [DEBUG] - No such create_tenant plugin for obproxy-ce-4.2.3.0

[2025-04-29 11:22:03.730] [DEBUG] - Searching create_tenant plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:03.730] [DEBUG] - Found for oceanbase-ce-py_script_create_tenant-4.3.0.0 for oceanbase-ce-4.3.0.1

[2025-04-29 11:22:03.730] [DEBUG] - Searching create_tenant plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2025-04-29 11:22:03.731] [DEBUG] - No such create_tenant plugin for grafana-7.5.17

[2025-04-29 11:22:03.731] [DEBUG] - Searching create_tenant plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2025-04-29 11:22:03.731] [DEBUG] - No such create_tenant plugin for prometheus-2.37.1

[2025-04-29 11:22:03.731] [DEBUG] - Searching tenant_optimize plugin for components …

[2025-04-29 11:22:03.731] [DEBUG] - Searching tenant_optimize plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2025-04-29 11:22:03.731] [DEBUG] - No such tenant_optimize plugin for obagent-4.2.2

[2025-04-29 11:22:03.731] [DEBUG] - Searching tenant_optimize plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2025-04-29 11:22:03.731] [DEBUG] - No such tenant_optimize plugin for obproxy-ce-4.2.3.0

[2025-04-29 11:22:03.731] [DEBUG] - Searching tenant_optimize plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:03.732] [DEBUG] - Found for oceanbase-ce-py_script_tenant_optimize-4.3.0.0 for oceanbase-ce-4.3.0.1

[2025-04-29 11:22:03.732] [DEBUG] - Searching tenant_optimize plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2025-04-29 11:22:03.732] [DEBUG] - No such tenant_optimize plugin for grafana-7.5.17

[2025-04-29 11:22:03.732] [DEBUG] - Searching tenant_optimize plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2025-04-29 11:22:03.732] [DEBUG] - No such tenant_optimize plugin for prometheus-2.37.1

[2025-04-29 11:22:03.732] [DEBUG] - Searching start plugin for components …

[2025-04-29 11:22:03.732] [DEBUG] - Searching start plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2025-04-29 11:22:03.732] [DEBUG] - Found for obagent-py_script_start-1.3.0 for obagent-4.2.2

[2025-04-29 11:22:03.732] [DEBUG] - Searching start plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2025-04-29 11:22:03.733] [DEBUG] - Found for obproxy-ce-py_script_start-4.2.3 for obproxy-ce-4.2.3.0

[2025-04-29 11:22:03.733] [DEBUG] - Searching start plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:03.733] [DEBUG] - Found for oceanbase-ce-py_script_start-4.3.0.0 for oceanbase-ce-4.3.0.1

[2025-04-29 11:22:03.733] [DEBUG] - Searching start plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2025-04-29 11:22:03.733] [DEBUG] - Found for grafana-py_script_start-7.5.17 for grafana-7.5.17

[2025-04-29 11:22:03.733] [DEBUG] - Searching start plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2025-04-29 11:22:03.733] [DEBUG] - Found for prometheus-py_script_start-2.37.1 for prometheus-2.37.1

[2025-04-29 11:22:03.734] [DEBUG] - Searching connect plugin for components …

[2025-04-29 11:22:03.734] [DEBUG] - Searching connect plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2025-04-29 11:22:03.734] [DEBUG] - Found for obagent-py_script_connect-1.3.0 for obagent-4.2.2

[2025-04-29 11:22:03.734] [DEBUG] - Searching connect plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2025-04-29 11:22:03.734] [DEBUG] - Found for obproxy-ce-py_script_connect-3.1.0 for obproxy-ce-4.2.3.0

[2025-04-29 11:22:03.734] [DEBUG] - Searching connect plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:03.734] [DEBUG] - Found for oceanbase-ce-py_script_connect-4.2.2.0 for oceanbase-ce-4.3.0.1

[2025-04-29 11:22:03.735] [DEBUG] - Searching connect plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2025-04-29 11:22:03.735] [DEBUG] - Found for grafana-py_script_connect-7.5.17 for grafana-7.5.17

[2025-04-29 11:22:03.735] [DEBUG] - Searching connect plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2025-04-29 11:22:03.735] [DEBUG] - Found for prometheus-py_script_connect-2.37.1 for prometheus-2.37.1

[2025-04-29 11:22:03.735] [DEBUG] - Searching bootstrap plugin for components …

[2025-04-29 11:22:03.735] [DEBUG] - Searching bootstrap plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2025-04-29 11:22:03.735] [DEBUG] - Found for obagent-py_script_bootstrap-0.1 for obagent-4.2.2

[2025-04-29 11:22:03.735] [DEBUG] - Searching bootstrap plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2025-04-29 11:22:03.736] [DEBUG] - Found for obproxy-ce-py_script_bootstrap-3.1.0 for obproxy-ce-4.2.3.0

[2025-04-29 11:22:03.736] [DEBUG] - Searching bootstrap plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:03.736] [DEBUG] - Found for oceanbase-ce-py_script_bootstrap-4.2.2.0 for oceanbase-ce-4.3.0.1

[2025-04-29 11:22:03.736] [DEBUG] - Searching bootstrap plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2025-04-29 11:22:03.736] [DEBUG] - Found for grafana-py_script_bootstrap-7.5.17 for grafana-7.5.17

[2025-04-29 11:22:03.736] [DEBUG] - Searching bootstrap plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2025-04-29 11:22:03.736] [DEBUG] - Found for prometheus-py_script_bootstrap-2.37.1 for prometheus-2.37.1

[2025-04-29 11:22:03.736] [DEBUG] - Searching display plugin for components …

[2025-04-29 11:22:03.736] [DEBUG] - Searching display plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2025-04-29 11:22:03.737] [DEBUG] - Found for obagent-py_script_display-1.3.0 for obagent-4.2.2

[2025-04-29 11:22:03.737] [DEBUG] - Searching display plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2025-04-29 11:22:03.737] [DEBUG] - Found for obproxy-ce-py_script_display-3.1.0 for obproxy-ce-4.2.3.0

[2025-04-29 11:22:03.737] [DEBUG] - Searching display plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:03.737] [DEBUG] - Found for oceanbase-ce-py_script_display-3.1.0 for oceanbase-ce-4.3.0.1

[2025-04-29 11:22:03.737] [DEBUG] - Searching display plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2025-04-29 11:22:03.737] [DEBUG] - Found for grafana-py_script_display-7.5.17 for grafana-7.5.17

[2025-04-29 11:22:03.737] [DEBUG] - Searching display plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2025-04-29 11:22:03.738] [DEBUG] - Found for prometheus-py_script_display-2.37.1 for prometheus-2.37.1

[2025-04-29 11:22:03.858] [INFO] Load cluster param plugin

[2025-04-29 11:22:03.859] [DEBUG] - Get local repository obagent-4.2.2-19739a07a12eab736aff86ecf357b1ae660b554e

[2025-04-29 11:22:03.859] [DEBUG] - Get local repository obproxy-ce-4.2.3.0-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2025-04-29 11:22:03.859] [DEBUG] - Get local repository oceanbase-ce-4.3.0.1-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:03.859] [DEBUG] - Get local repository grafana-7.5.17-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2025-04-29 11:22:03.859] [DEBUG] - Get local repository prometheus-2.37.1-58913c7606f05feb01bc1c6410346e5fc31cf263

[2025-04-29 11:22:03.859] [DEBUG] - Searching param plugin for components …

[2025-04-29 11:22:03.860] [DEBUG] - Search param plugin for obagent

[2025-04-29 11:22:03.860] [DEBUG] - Found for obagent-param-1.3.0 for obagent-4.2.2

[2025-04-29 11:22:03.860] [DEBUG] - Applying obagent-param-1.3.0 for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2025-04-29 11:22:03.927] [DEBUG] - Search param plugin for obproxy-ce

[2025-04-29 11:22:03.927] [DEBUG] - Found for obproxy-ce-param-4.2.3 for obproxy-ce-4.2.3.0

[2025-04-29 11:22:03.927] [DEBUG] - Applying obproxy-ce-param-4.2.3 for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2025-04-29 11:22:04.047] [DEBUG] - Search param plugin for oceanbase-ce

[2025-04-29 11:22:04.048] [DEBUG] - Found for oceanbase-ce-param-4.3.0.0 for oceanbase-ce-4.3.0.1

[2025-04-29 11:22:04.048] [DEBUG] - Applying oceanbase-ce-param-4.3.0.0 for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:04.540] [DEBUG] - Search param plugin for grafana

[2025-04-29 11:22:04.541] [DEBUG] - Found for grafana-param-7.5.17 for grafana-7.5.17

[2025-04-29 11:22:04.541] [DEBUG] - Applying grafana-param-7.5.17 for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2025-04-29 11:22:04.564] [DEBUG] - Search param plugin for prometheus

[2025-04-29 11:22:04.565] [DEBUG] - Found for prometheus-param-2.37.1 for prometheus-2.37.1

[2025-04-29 11:22:04.565] [DEBUG] - Applying prometheus-param-2.37.1 for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2025-04-29 11:22:04.695] [INFO] Open ssh connection

[2025-04-29 11:22:04.827] [DEBUG] - Call oceanbase-ce-py_script_start_check-4.2.2.0 for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:04.827] [DEBUG] - import start_check

[2025-04-29 11:22:04.834] [DEBUG] - add start_check ref count to 1

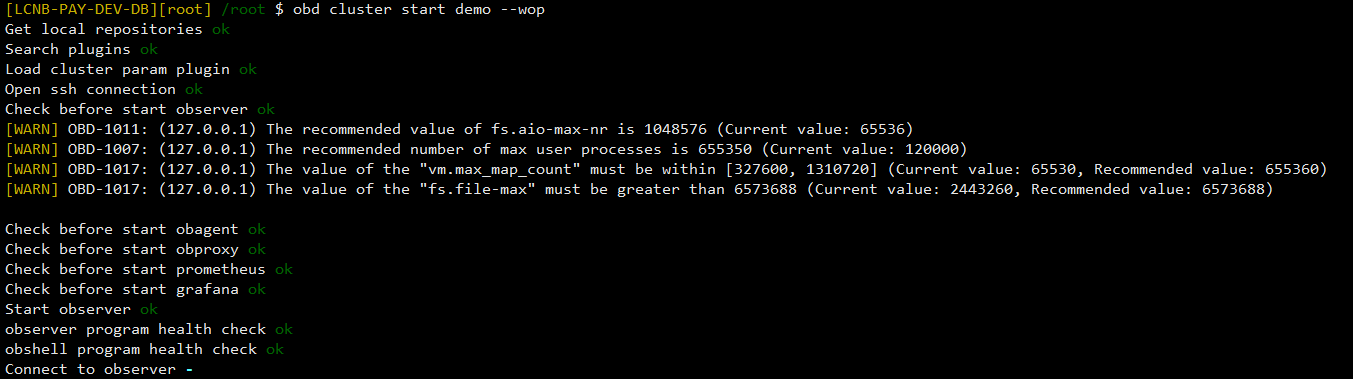

[2025-04-29 11:22:04.834] [INFO] Check before start observer

[2025-04-29 11:22:04.836] [DEBUG] -- local execute: ls /root/oceanbase-ce/store/clog/tenant_1/

[2025-04-29 11:22:04.841] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.842] [DEBUG] -- local execute: cat /root/oceanbase-ce/run/observer.pid

[2025-04-29 11:22:04.845] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.845] [DEBUG] -- local execute: ls /proc/3496

[2025-04-29 11:22:04.850] [DEBUG] -- exited code 2, error output:

[2025-04-29 11:22:04.850] [DEBUG] ls: cannot access /proc/3496: No such file or directory

[2025-04-29 11:22:04.850] [DEBUG]

[2025-04-29 11:22:04.850] [DEBUG] -- 127.0.0.1 port check

[2025-04-29 11:22:04.850] [DEBUG] -- local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':0B41' | awk -F' ' '{print $3}' | uniq

[2025-04-29 11:22:04.857] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.857] [DEBUG] -- local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':0B42' | awk -F' ' '{print $3}' | uniq

[2025-04-29 11:22:04.865] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.865] [DEBUG] -- local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':0B46' | awk -F' ' '{print $3}' | uniq

[2025-04-29 11:22:04.872] [DEBUG] -- exited code 0
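
A note on reading the port checks above: `/proc/net/tcp` prints ports in hexadecimal and uses state `0A` for listening sockets, so `:0B41`, `:0B42` and `:0B46` correspond to ports 2881, 2882 and 2886. Empty output means nothing is listening on that port yet. The same filter can be sketched in Python as below; this is only an illustration, the function name and the sample text are made up and are not taken from OBD.

```python
# Illustrative sketch only: mirrors the awk/grep filter from the log.
# SAMPLE is invented data in the /proc/net/tcp column layout.
SAMPLE = """\
  sl  local_address rem_address   st tx_queue rx_queue tr tm->when retrnsmt   uid  timeout inode
   0: 00000000:0B41 00000000:0000 0A 00000000:00000000 00:00000000 00000000     0        0 12345
   1: 0100007F:0B42 0100007F:C350 01 00000000:00000000 00:00000000 00000000     0        0 12346
"""


def listening_ports(proc_net_text):
    """Return the decimal ports of all sockets in state 0A (LISTEN)."""
    ports = set()
    for line in proc_net_text.splitlines():
        fields = line.split()
        # fields[1] is local_address (awk $2), fields[3] is the state (awk $4).
        if len(fields) < 4 or fields[3] != "0A":
            continue
        hex_port = fields[1].rsplit(":", 1)[1]
        ports.add(int(hex_port, 16))
    return ports


print(sorted(listening_ports(SAMPLE)))  # prints [2881]
```

In the sample, port 2882 (`0B42`) is skipped because its state is `01` (established), not `0A`.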

[2025-04-29 11:22:04.872] [DEBUG] -- local execute: ls /root/oceanbase-ce/store/sstable/block_file

[2025-04-29 11:22:04.877] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.877] [DEBUG] -- local execute: [ -w /tmp/ ] || [ -w /tmp/obshell ]

[2025-04-29 11:22:04.880] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.880] [DEBUG] -- local execute: cat /proc/sys/fs/aio-max-nr /proc/sys/fs/aio-nr

[2025-04-29 11:22:04.883] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.883] [DEBUG] -- local execute: ulimit -a

[2025-04-29 11:22:04.886] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.887] [WARNING] OBD-1007: (127.0.0.1) The recommended number of max user processes is 655350 (Current value: 120000)

[2025-04-29 11:22:04.887] [DEBUG] -- local execute: sysctl -a

[2025-04-29 11:22:04.922] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.926] [WARNING] OBD-1017: (127.0.0.1) The value of the "vm.max_map_count" must be within [327600, 1310720] (Current value: 65530, Recommended value: 655360)

[2025-04-29 11:22:04.926] [DEBUG] -- local execute: cat /proc/meminfo

[2025-04-29 11:22:04.930] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.930] [DEBUG] -- local execute: df --block-size=1024

[2025-04-29 11:22:04.934] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.935] [DEBUG] -- get disk info for path /dev, total: 13069365248 avail: 13069365248

[2025-04-29 11:22:04.935] [DEBUG] -- get disk info for path /dev/shm, total: 13081649152 avail: 13081649152

[2025-04-29 11:22:04.935] [DEBUG] -- get disk info for path /run, total: 13081649152 avail: 13047009280

[2025-04-29 11:22:04.935] [DEBUG] -- get disk info for path /sys/fs/cgroup, total: 13081649152 avail: 13081649152

[2025-04-29 11:22:04.935] [DEBUG] -- get disk info for path /, total: 992102842368 avail: 528803721216

[2025-04-29 11:22:04.935] [DEBUG] -- get disk info for path /boot, total: 1063256064 avail: 905920512

[2025-04-29 11:22:04.935] [DEBUG] -- get disk info for path /home, total: 90146082816 avail: 90112217088

[2025-04-29 11:22:04.935] [DEBUG] -- get disk info for path /run/user/0, total: 2616332288 avail: 2616332288

[2025-04-29 11:22:04.935] [DEBUG] -- get disk info for path /run/user/1003, total: 2616332288 avail: 2616332288

[2025-04-29 11:22:04.935] [DEBUG] -- disk: {'/dev': {'total': 13069365248, 'avail': 13069365248, 'need': 0}, '/dev/shm': {'total': 13081649152, 'avail': 13081649152, 'need': 0}, '/run': {'total': 13081649152, 'avail': 13047009280, 'need': 0}, '/sys/fs/cgroup': {'total': 13081649152, 'avail': 13081649152, 'need': 0}, '/': {'total': 992102842368, 'avail': 528803721216, 'need': 0}, '/boot': {'total': 1063256064, 'avail': 905920512, 'need': 0}, '/home': {'total': 90146082816, 'avail': 90112217088, 'need': 0}, '/run/user/0': {'total': 2616332288, 'avail': 2616332288, 'need': 0}, '/run/user/1003': {'total': 2616332288, 'avail': 2616332288, 'need': 0}}

[2025-04-29 11:22:04.936] [DEBUG] -- local execute: date +%s%N

[2025-04-29 11:22:04.939] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.939] [DEBUG] -- 127.0.0.1 time delta -0.41455078125

[2025-04-29 11:22:04.967] [INFO] [WARN] OBD-1007: (127.0.0.1) The recommended number of max user processes is 655350 (Current value: 120000)

[2025-04-29 11:22:04.967] [INFO] [WARN] OBD-1017: (127.0.0.1) The value of the "vm.max_map_count" must be within [327600, 1310720] (Current value: 65530, Recommended value: 655360)

[2025-04-29 11:22:04.967] [INFO]
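
The two warnings above compare current system values with thresholds: max user processes is 120000 against a recommended 655350, and `vm.max_map_count` is 65530 against the allowed range [327600, 1310720]. They are logged as warnings and the start continues after them, so this trace alone does not show whether they are related to the later failure. The comparison can be sketched in Python as below. The thresholds are copied from the messages; the function names are made up for illustration and this is not OBD's own check.

```python
from pathlib import Path

# Thresholds copied from the OBD-1007 and OBD-1017 messages in this trace.
RECOMMENDED_NPROC = 655350
MAX_MAP_COUNT_RANGE = (327600, 1310720)


def check_limits(nproc, max_map_count):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if nproc < RECOMMENDED_NPROC:
        problems.append(
            f"max user processes {nproc} is below the recommended {RECOMMENDED_NPROC}"
        )
    low, high = MAX_MAP_COUNT_RANGE
    if not low <= max_map_count <= high:
        problems.append(
            f"vm.max_map_count {max_map_count} is outside [{low}, {high}]"
        )
    return problems


def read_current_limits():
    """Read the live values on Linux (hypothetical helper, not called below)."""
    import resource  # Unix-only module, imported lazily

    soft_nproc, _hard = resource.getrlimit(resource.RLIMIT_NPROC)
    max_map_count = int(Path("/proc/sys/vm/max_map_count").read_text())
    return soft_nproc, max_map_count


# Values reported in this trace.
for problem in check_limits(120000, 65530):
    print(problem)
```

With the values from this trace, both checks report a problem.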

[2025-04-29 11:22:04.967] [DEBUG] - sub start_check ref count to 0

[2025-04-29 11:22:04.967] [DEBUG] - export start_check

[2025-04-29 11:22:04.967] [DEBUG] - Call obagent-py_script_start_check-1.3.0 for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2025-04-29 11:22:04.968] [DEBUG] - import start_check

[2025-04-29 11:22:04.970] [DEBUG] - add start_check ref count to 1

[2025-04-29 11:22:04.970] [INFO] Check before start obagent

[2025-04-29 11:22:04.972] [DEBUG] -- local execute: cat /root/obagent/run/ob_agentd.pid

[2025-04-29 11:22:04.976] [DEBUG] -- exited code 1, error output:

[2025-04-29 11:22:04.976] [DEBUG] cat: /root/obagent/run/ob_agentd.pid: No such file or directory

[2025-04-29 11:22:04.976] [DEBUG]

[2025-04-29 11:22:04.976] [DEBUG] -- 127.0.0.1 port check

[2025-04-29 11:22:04.976] [DEBUG] -- local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':1F99' | awk -F' ' '{print $3}' | uniq

[2025-04-29 11:22:04.983] [DEBUG] -- exited code 0

[2025-04-29 11:22:04.983] [DEBUG] -- local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':1F98' | awk -F' ' '{print $3}' | uniq

[2025-04-29 11:22:04.990] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.103] [DEBUG] - sub start_check ref count to 0

[2025-04-29 11:22:05.103] [DEBUG] - export start_check

[2025-04-29 11:22:05.103] [DEBUG] - Call obproxy-ce-py_script_start_check-4.2.3 for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2025-04-29 11:22:05.103] [DEBUG] - import start_check

[2025-04-29 11:22:05.106] [DEBUG] - add start_check ref count to 1

[2025-04-29 11:22:05.107] [INFO] Check before start obproxy

[2025-04-29 11:22:05.108] [DEBUG] -- local execute: cat /root/obproxy-ce/run/obproxy-127.0.0.1-2883.pid

[2025-04-29 11:22:05.113] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.113] [DEBUG] -- local execute: ls /proc/11868/fd

[2025-04-29 11:22:05.118] [DEBUG] -- exited code 2, error output:

[2025-04-29 11:22:05.119] [DEBUG] ls: cannot access /proc/11868/fd: No such file or directory

[2025-04-29 11:22:05.119] [DEBUG]

[2025-04-29 11:22:05.119] [DEBUG] -- 127.0.0.1 port check

[2025-04-29 11:22:05.119] [DEBUG] -- local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':0B43' | awk -F' ' '{print $3}' | uniq

[2025-04-29 11:22:05.127] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.127] [DEBUG] -- local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':0B44' | awk -F' ' '{print $3}' | uniq

[2025-04-29 11:22:05.135] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.239] [DEBUG] - sub start_check ref count to 0

[2025-04-29 11:22:05.239] [DEBUG] - export start_check

[2025-04-29 11:22:05.239] [DEBUG] - Call prometheus-py_script_start_check-2.37.1 for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2025-04-29 11:22:05.239] [DEBUG] - import start_check

[2025-04-29 11:22:05.241] [DEBUG] - add start_check ref count to 1

[2025-04-29 11:22:05.242] [INFO] Check before start prometheus

[2025-04-29 11:22:05.243] [DEBUG] -- local execute: cat /root/prometheus/run/prometheus.pid

[2025-04-29 11:22:05.247] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.247] [DEBUG] -- 127.0.0.1 port check

[2025-04-29 11:22:05.247] [DEBUG] -- local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':2382' | awk -F' ' '{print $3}' | uniq

[2025-04-29 11:22:05.255] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.374] [DEBUG] - sub start_check ref count to 0

[2025-04-29 11:22:05.374] [DEBUG] - export start_check

[2025-04-29 11:22:05.374] [DEBUG] - Call grafana-py_script_start_check-7.5.17 for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2025-04-29 11:22:05.374] [DEBUG] - import start_check

[2025-04-29 11:22:05.376] [DEBUG] - add start_check ref count to 1

[2025-04-29 11:22:05.377] [INFO] Check before start grafana

[2025-04-29 11:22:05.377] [DEBUG] -- local execute: cat /root/grafana/run/grafana.pid

[2025-04-29 11:22:05.381] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.382] [DEBUG] -- local execute: ls /proc/12002

[2025-04-29 11:22:05.386] [DEBUG] -- exited code 2, error output:

[2025-04-29 11:22:05.387] [DEBUG] ls: cannot access /proc/12002: No such file or directory

[2025-04-29 11:22:05.387] [DEBUG]

[2025-04-29 11:22:05.387] [DEBUG] -- 127.0.0.1 port check

[2025-04-29 11:22:05.387] [DEBUG] -- local execute: bash -c 'cat /proc/net/{udp*,tcp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':0BB8' | awk -F' ' '{print $3}' | uniq

[2025-04-29 11:22:05.394] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.508] [DEBUG] - sub start_check ref count to 0

[2025-04-29 11:22:05.508] [DEBUG] - export start_check

[2025-04-29 11:22:05.509] [DEBUG] - Call oceanbase-ce-py_script_start-4.3.0.0 for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2025-04-29 11:22:05.509] [DEBUG] - import start

[2025-04-29 11:22:05.512] [DEBUG] - add start ref count to 1

[2025-04-29 11:22:05.513] [INFO] Start observer

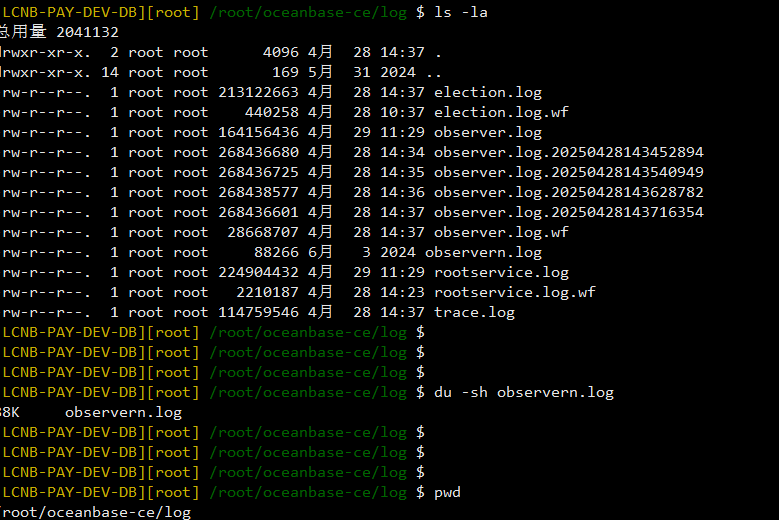

[2025-04-29 11:22:05.514] [DEBUG] -- local execute: ls /root/oceanbase-ce/store/clog/tenant_1/

[2025-04-29 11:22:05.519] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.519] [DEBUG] -- local execute: cat /root/oceanbase-ce/run/observer.pid

[2025-04-29 11:22:05.522] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.523] [DEBUG] -- local execute: ls /proc/3496

[2025-04-29 11:22:05.527] [DEBUG] -- exited code 2, error output:

[2025-04-29 11:22:05.527] [DEBUG] ls: cannot access /proc/3496: No such file or directory

[2025-04-29 11:22:05.527] [DEBUG]

[2025-04-29 11:22:05.527] [DEBUG] -- 127.0.0.1 start command construction

[2025-04-29 11:22:05.528] [DEBUG] -- local execute: ls /root/oceanbase-ce/etc/observer.config.bin

[2025-04-29 11:22:05.532] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.532] [DEBUG] -- starting 127.0.0.1 observer

[2025-04-29 11:22:05.533] [DEBUG] -- root@127.0.0.1 set env LD_LIBRARY_PATH to '/root/oceanbase-ce/lib:'

[2025-04-29 11:22:05.533] [DEBUG] -- local execute: cd /root/oceanbase-ce; /root/oceanbase-ce/bin/observer -p 2881

[2025-04-29 11:22:05.580] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.581] [DEBUG] -- root@127.0.0.1 delete env LD_LIBRARY_PATH

[2025-04-29 11:22:05.645] [DEBUG] -- start_obshell: True

[2025-04-29 11:22:05.645] [DEBUG] -- local execute: cat /root/oceanbase-ce/run/obshell.pid

[2025-04-29 11:22:05.649] [DEBUG] -- exited code 0

[2025-04-29 11:22:05.650] [DEBUG] -- local execute: ls /proc/3565

[2025-04-29 11:22:05.655] [DEBUG] -- exited code 2, error output:

[2025-04-29 11:22:05.655] [DEBUG] ls: cannot access /proc/3565: No such file or directory

[2025-04-29 11:22:05.655] [DEBUG]

[2025-04-29 11:22:05.655] [DEBUG] -- root@127.0.0.1 set env OB_ROOT_PASSWORD to ''

[2025-04-29 11:22:05.655] [DEBUG] -- start obshell: cd /root/oceanbase-ce; /root/oceanbase-ce/bin/obshell admin start --ip 127.0.0.1 --port 2886

[2025-04-29 11:22:05.655] [DEBUG] -- local execute: cd /root/oceanbase-ce; /root/oceanbase-ce/bin/obshell admin start --ip 127.0.0.1 --port 2886

[2025-04-29 11:22:07.714] [DEBUG] -- exited code 0

[2025-04-29 11:22:07.714] [INFO] observer program health check

[2025-04-29 11:22:10.718] [DEBUG] -- 127.0.0.1 program health check

[2025-04-29 11:22:10.718] [DEBUG] -- local execute: cat /root/oceanbase-ce/run/observer.pid

[2025-04-29 11:22:10.723] [DEBUG] -- exited code 0

[2025-04-29 11:22:10.723] [DEBUG] -- local execute: ls /proc/14248

[2025-04-29 11:22:10.728] [DEBUG] -- exited code 2, error output:

[2025-04-29 11:22:10.728] [DEBUG] ls: cannot access /proc/14248: No such file or directory

[2025-04-29 11:22:10.728] [DEBUG]
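
What the health check above does: it reads the PID from `observer.pid` and then looks for `/proc/<pid>`. The observer start command returned exit code 0 at 11:22:05.580, but `/proc/14248` no longer exists at 11:22:10.723, which suggests the observer process exited within a few seconds of being launched. The trace does not show why; the observer's own log would be the place to look (assuming the default layout, probably under `/root/oceanbase-ce/log/`, which is an assumption and not shown in this trace). The same liveness test can be sketched in Python as below. The function name is made up, and a temporary directory stands in for `/proc` so the example runs anywhere.

```python
import tempfile
from pathlib import Path


def pid_is_alive(pid_file, proc_root="/proc"):
    """Return True if pid_file holds a PID with a directory under proc_root."""
    try:
        pid = Path(pid_file).read_text().strip()
    except FileNotFoundError:
        # Same situation as `cat <pid file>` exiting with code 1.
        return False
    # Same situation as `ls /proc/<pid>` succeeding or exiting with code 2.
    return pid.isdigit() and (Path(proc_root) / pid).is_dir()


# Demonstration with a fake proc directory (illustrative data only).
with tempfile.TemporaryDirectory() as tmp:
    fake_proc = Path(tmp) / "proc"
    (fake_proc / "100").mkdir(parents=True)   # pretend PID 100 is running
    pid_file = Path(tmp) / "observer.pid"

    pid_file.write_text("14248\n")            # stale PID, as in the trace
    print(pid_is_alive(pid_file, fake_proc))  # prints False

    pid_file.write_text("100\n")
    print(pid_is_alive(pid_file, fake_proc))  # prints True
```

On a real host the second argument can be left at its default so that the check looks in `/proc`.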

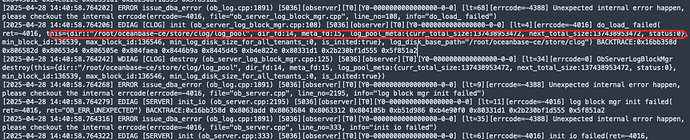

[2025-04-29 11:22:10.842] [WARNING] OBD-2002: Failed to start 127.0.0.1 observer

[2025-04-29 11:22:10.842] [DEBUG] - sub start ref count to 0

[2025-04-29 11:22:10.843] [DEBUG] - export start

[2025-04-29 11:22:10.843] [ERROR] oceanbase-ce start failed

[2025-04-29 11:22:10.846] [INFO] See https://www.oceanbase.com/product/ob-deployer/error-codes .

[2025-04-29 11:22:10.846] [INFO] Trace ID: 1ef13938-24a9-11f0-a7c8-005056b7a1e2

[2025-04-29 11:22:10.846] [INFO] If you want to view detailed obd logs, please run: obd display-trace 1ef13938-24a9-11f0-a7c8-005056b7a1e2

[2025-04-29 11:22:10.846] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo release, count 4

[2025-04-29 11:22:10.846] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo release, count 3

[2025-04-29 11:22:10.846] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo release, count 2

[2025-04-29 11:22:10.846] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo release, count 1

[2025-04-29 11:22:10.846] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo release, count 0

[2025-04-29 11:22:10.846] [DEBUG] - unlock /root/.obd/lock/mirror_and_repo

[2025-04-29 11:22:10.847] [DEBUG] - exclusive lock /root/.obd/lock/deploy_demo release, count 0

[2025-04-29 11:22:10.847] [DEBUG] - unlock /root/.obd/lock/deploy_demo

[2025-04-29 11:22:10.847] [DEBUG] - share lock /root/.obd/lock/global release, count 0

[2025-04-29 11:22:10.847] [DEBUG] - unlock /root/.obd/lock/global