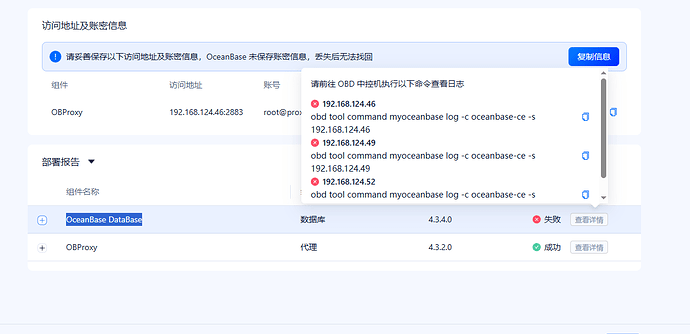

[Environment] Production, openEuler 24.03, x86

[OB or other components] OceanBase Database, OBProxy

[Version] 4.3.4.0

[Problem description] Deploying OceanBase Database through the OBD web GUI fails.

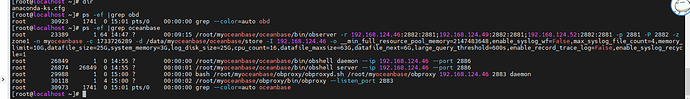

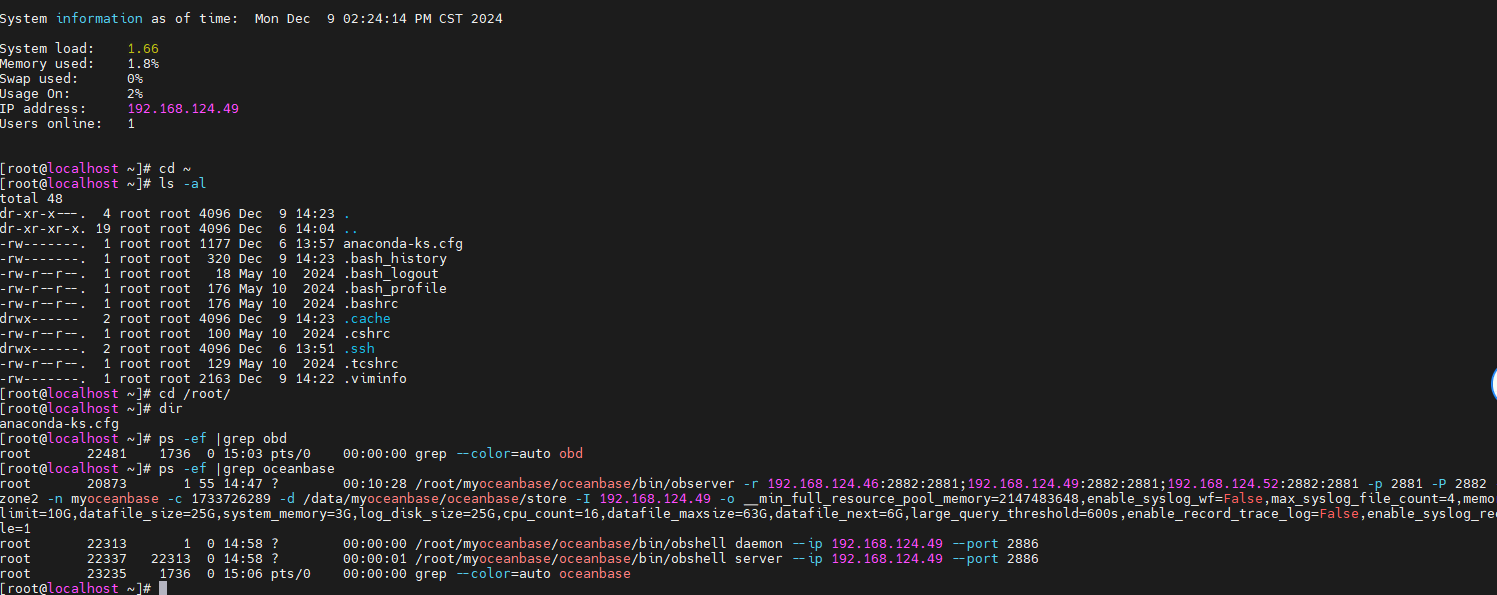

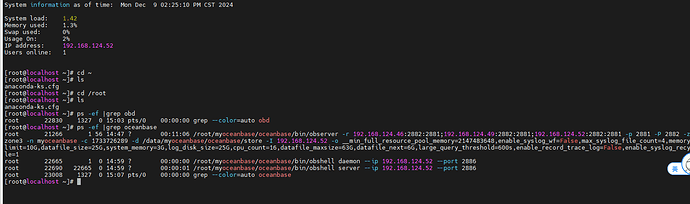

The error log follows. I checked the actual directory on the servers, and the file /root/myoceanbase/oceanbase/run/obshell.pid does exist.
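A pid file that exists does not by itself show that obshell is still running. Below is a minimal sketch, to be run on each server, that tells a live process apart from a stale pid file. The helper name `pid_file_status` is hypothetical; the path is the one from this deployment.

```python
import os
from pathlib import Path


def pid_file_status(pid_file):
    """Classify a pid file as 'missing', 'invalid', 'stale' or 'running'."""
    path = Path(pid_file)
    if not path.is_file():
        return "missing"
    text = path.read_text().strip()
    if not text.isdigit() or int(text) <= 0:
        return "invalid"
    try:
        # Signal 0 performs the existence check without sending a signal.
        os.kill(int(text), 0)
    except ProcessLookupError:
        return "stale"      # pid file left behind, process is gone
    except PermissionError:
        return "running"    # process exists but belongs to another user
    return "running"


if __name__ == "__main__":
    print(pid_file_status("/root/myoceanbase/oceanbase/run/obshell.pid"))
```

If this reports `stale`, the file is left over from an obshell process that has already exited.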

[2024-12-06 16:40:35.450] [INFO] [WARN] OBD-1012: (192.168.124.46) clog and data use the same disk (/)

[2024-12-06 16:40:35.450] [INFO] [WARN] OBD-1012: (192.168.124.49) clog and data use the same disk (/)

[2024-12-06 16:40:35.450] [INFO] [WARN] OBD-1012: (192.168.124.32) clog and data use the same disk (/)

[2024-12-06 16:40:35.450] [INFO]

[2024-12-06 16:40:35.451] [DEBUG] - plugin oceanbase-ce-py_script_start_check-4.3.0.0 result: True

[2024-12-06 16:40:35.451] [DEBUG] - Call oceanbase-ce-py_script_start-4.3.0.0 for oceanbase-ce-4.3.4.0-100000162024110717.el7-5d59e837a0ecff1a6baa20f72747c343ac7c8dce

[2024-12-06 16:40:35.451] [DEBUG] - import start

[2024-12-06 16:40:35.456] [DEBUG] - add start ref count to 1

[2024-12-06 16:40:35.457] [INFO] cluster scenario: htap

[2024-12-06 16:40:35.457] [INFO] Start observer

[2024-12-06 16:40:35.457] [DEBUG] -- root@192.168.124.46 execute: ls /root/myoceanbase/oceanbase/store/clog/tenant_1/

[2024-12-06 16:40:35.521] [DEBUG] -- exited code 2, error output:

[2024-12-06 16:40:35.522] [DEBUG] ls: cannot access '/root/myoceanbase/oceanbase/store/clog/tenant_1/': No such file or directory

[2024-12-06 16:40:35.522] [DEBUG]

[2024-12-06 16:40:35.522] [DEBUG] -- root@192.168.124.46 execute: cat /root/myoceanbase/oceanbase/run/observer.pid

[2024-12-06 16:40:35.625] [DEBUG] -- exited code 1, error output:

[2024-12-06 16:40:35.626] [DEBUG] cat: /root/myoceanbase/oceanbase/run/observer.pid: No such file or directory

[2024-12-06 16:40:35.626] [DEBUG]

[2024-12-06 16:40:35.626] [DEBUG] -- 192.168.124.46 start command construction

[2024-12-06 16:40:35.626] [DEBUG] -- update large_query_threshold to 600s because of scenario

[2024-12-06 16:40:35.626] [DEBUG] -- update enable_record_trace_log to False because of scenario

[2024-12-06 16:40:35.626] [DEBUG] -- update enable_syslog_recycle to 1 because of scenario

[2024-12-06 16:40:35.626] [DEBUG] -- update max_syslog_file_count to 300 because of scenario

[2024-12-06 16:40:35.627] [DEBUG] -- root@192.168.124.49 execute: ls /root/myoceanbase/oceanbase/store/clog/tenant_1/

[2024-12-06 16:40:35.690] [DEBUG] -- exited code 2, error output:

[2024-12-06 16:40:35.691] [DEBUG] ls: cannot access '/root/myoceanbase/oceanbase/store/clog/tenant_1/': No such file or directory

[2024-12-06 16:40:35.691] [DEBUG]

[2024-12-06 16:40:35.691] [DEBUG] -- root@192.168.124.49 execute: cat /root/myoceanbase/oceanbase/run/observer.pid

[2024-12-06 16:40:35.793] [DEBUG] -- exited code 1, error output:

[2024-12-06 16:40:35.794] [DEBUG] cat: /root/myoceanbase/oceanbase/run/observer.pid: No such file or directory

[2024-12-06 16:40:35.794] [DEBUG]

[2024-12-06 16:40:35.794] [DEBUG] -- 192.168.124.49 start command construction

[2024-12-06 16:40:35.794] [DEBUG] -- update large_query_threshold to 600s because of scenario

[2024-12-06 16:40:35.794] [DEBUG] -- update enable_record_trace_log to False because of scenario

[2024-12-06 16:40:35.794] [DEBUG] -- update enable_syslog_recycle to 1 because of scenario

[2024-12-06 16:40:35.794] [DEBUG] -- update max_syslog_file_count to 300 because of scenario

[2024-12-06 16:40:35.794] [DEBUG] -- root@192.168.124.32 execute: ls /root/myoceanbase/oceanbase/store/clog/tenant_1/

[2024-12-06 16:40:35.859] [DEBUG] -- exited code 2, error output:

[2024-12-06 16:40:35.860] [DEBUG] ls: cannot access '/root/myoceanbase/oceanbase/store/clog/tenant_1/': No such file or directory

[2024-12-06 16:40:35.860] [DEBUG]

[2024-12-06 16:40:35.860] [DEBUG] -- root@192.168.124.32 execute: cat /root/myoceanbase/oceanbase/run/observer.pid

[2024-12-06 16:40:35.963] [DEBUG] -- exited code 1, error output:

[2024-12-06 16:40:35.963] [DEBUG] cat: /root/myoceanbase/oceanbase/run/observer.pid: No such file or directory

[2024-12-06 16:40:35.963] [DEBUG]

[2024-12-06 16:40:35.963] [DEBUG] -- 192.168.124.32 start command construction

[2024-12-06 16:40:35.963] [DEBUG] -- update large_query_threshold to 600s because of scenario

[2024-12-06 16:40:35.964] [DEBUG] -- update enable_record_trace_log to False because of scenario

[2024-12-06 16:40:35.964] [DEBUG] -- update enable_syslog_recycle to 1 because of scenario

[2024-12-06 16:40:35.964] [DEBUG] -- update max_syslog_file_count to 300 because of scenario

[2024-12-06 16:40:35.964] [DEBUG] -- starting 192.168.124.46 observer

[2024-12-06 16:40:35.964] [DEBUG] -- root@192.168.124.46 export LD_LIBRARY_PATH='/root/myoceanbase/oceanbase/lib:'

[2024-12-06 16:40:35.964] [DEBUG] -- root@192.168.124.46 execute: cd /root/myoceanbase/oceanbase; /root/myoceanbase/oceanbase/bin/observer -r '192.168.124.46:2882:2881;192.168.124.49:2882:2881;192.168.124.32:2882:2881' -p 2881 -P 2882 -z 'zone1' -n 'myoceanbase' -c 1733473891 -d '/root/myoceanbase/oceanbase/store' -I '192.168.124.46' -o __min_full_resource_pool_memory=2147483648,enable_syslog_wf=False,max_syslog_file_count=4,memory_limit='10G',datafile_size='25G',system_memory='3G',log_disk_size='25G',cpu_count=16,datafile_maxsize='64G',datafile_next='6G',large_query_threshold='600s',enable_record_trace_log=False,enable_syslog_recycle=1

[2024-12-06 16:40:36.077] [DEBUG] -- exited code 0

[2024-12-06 16:40:36.078] [DEBUG] -- root@192.168.124.46 export LD_LIBRARY_PATH=''

[2024-12-06 16:40:36.078] [DEBUG] -- starting 192.168.124.49 observer

[2024-12-06 16:40:36.079] [DEBUG] -- root@192.168.124.49 export LD_LIBRARY_PATH='/root/myoceanbase/oceanbase/lib:'

[2024-12-06 16:40:36.079] [DEBUG] -- root@192.168.124.49 execute: cd /root/myoceanbase/oceanbase; /root/myoceanbase/oceanbase/bin/observer -r '192.168.124.46:2882:2881;192.168.124.49:2882:2881;192.168.124.32:2882:2881' -p 2881 -P 2882 -z 'zone2' -n 'myoceanbase' -c 1733473891 -d '/root/myoceanbase/oceanbase/store' -I '192.168.124.49' -o __min_full_resource_pool_memory=2147483648,enable_syslog_wf=False,max_syslog_file_count=4,memory_limit='10G',datafile_size='25G',system_memory='3G',log_disk_size='25G',cpu_count=16,datafile_maxsize='64G',datafile_next='6G',large_query_threshold='600s',enable_record_trace_log=False,enable_syslog_recycle=1

[2024-12-06 16:40:36.197] [DEBUG] -- exited code 0

[2024-12-06 16:40:36.197] [DEBUG] -- root@192.168.124.49 export LD_LIBRARY_PATH=''

[2024-12-06 16:40:36.197] [DEBUG] -- starting 192.168.124.32 observer

[2024-12-06 16:40:36.198] [DEBUG] -- root@192.168.124.32 export LD_LIBRARY_PATH='/root/myoceanbase/oceanbase/lib:'

[2024-12-06 16:40:36.198] [DEBUG] -- root@192.168.124.32 execute: cd /root/myoceanbase/oceanbase; /root/myoceanbase/oceanbase/bin/observer -r '192.168.124.46:2882:2881;192.168.124.49:2882:2881;192.168.124.32:2882:2881' -p 2881 -P 2882 -z 'zone3' -n 'myoceanbase' -c 1733473891 -d '/root/myoceanbase/oceanbase/store' -I '192.168.124.32' -o __min_full_resource_pool_memory=2147483648,enable_syslog_wf=False,max_syslog_file_count=4,memory_limit='10G',datafile_size='25G',system_memory='3G',log_disk_size='25G',cpu_count=16,datafile_maxsize='26G',datafile_next='3G',large_query_threshold='600s',enable_record_trace_log=False,enable_syslog_recycle=1

[2024-12-06 16:40:36.318] [DEBUG] -- exited code 0

[2024-12-06 16:40:36.319] [DEBUG] -- root@192.168.124.32 export LD_LIBRARY_PATH=''

[2024-12-06 16:40:36.319] [DEBUG] -- start_obshell: False

[2024-12-06 16:40:36.320] [INFO] observer program health check

[2024-12-06 16:40:39.323] [DEBUG] -- 192.168.124.46 program health check

[2024-12-06 16:40:39.323] [DEBUG] -- root@192.168.124.46 execute: cat /root/myoceanbase/oceanbase/run/observer.pid

[2024-12-06 16:40:39.390] [DEBUG] -- exited code 0

[2024-12-06 16:40:39.391] [DEBUG] -- root@192.168.124.46 execute: ls /proc/39638

[2024-12-06 16:40:39.508] [DEBUG] -- exited code 0

[2024-12-06 16:40:39.509] [DEBUG] -- 192.168.124.46 observer[pid: 39638] started

[2024-12-06 16:40:39.509] [DEBUG] -- 192.168.124.49 program health check

[2024-12-06 16:40:39.509] [DEBUG] -- root@192.168.124.49 execute: cat /root/myoceanbase/oceanbase/run/observer.pid

[2024-12-06 16:40:39.593] [DEBUG] -- exited code 0

[2024-12-06 16:40:39.593] [DEBUG] -- root@192.168.124.49 execute: ls /proc/31146

[2024-12-06 16:40:39.710] [DEBUG] -- exited code 0

[2024-12-06 16:40:39.710] [DEBUG] -- 192.168.124.49 observer[pid: 31146] started

[2024-12-06 16:40:39.711] [DEBUG] -- 192.168.124.32 program health check

[2024-12-06 16:40:39.711] [DEBUG] -- root@192.168.124.32 execute: cat /root/myoceanbase/oceanbase/run/observer.pid

[2024-12-06 16:40:39.780] [DEBUG] -- exited code 0

[2024-12-06 16:40:39.780] [DEBUG] -- root@192.168.124.32 execute: ls /proc/23050

[2024-12-06 16:40:39.889] [DEBUG] -- exited code 0

[2024-12-06 16:40:39.890] [DEBUG] -- 192.168.124.32 observer[pid: 23050] started

[2024-12-06 16:40:39.890] [DEBUG] -- need_bootstrap: True

[2024-12-06 16:40:39.890] [DEBUG] - sub start ref count to 0

[2024-12-06 16:40:39.891] [DEBUG] - export start

[2024-12-06 16:40:39.891] [DEBUG] - plugin oceanbase-ce-py_script_start-4.3.0.0 result: True

[2024-12-06 16:40:39.891] [DEBUG] - Call oceanbase-ce-py_script_connect-4.2.2.0 for oceanbase-ce-4.3.4.0-100000162024110717.el7-5d59e837a0ecff1a6baa20f72747c343ac7c8dce

[2024-12-06 16:40:39.891] [DEBUG] - import connect

[2024-12-06 16:40:39.901] [DEBUG] - add connect ref count to 1

[2024-12-06 16:40:39.902] [DEBUG] -- connect obshell (192.168.124.46:2886)

[2024-12-06 16:40:39.902] [DEBUG] -- connect obshell (192.168.124.49:2886)

[2024-12-06 16:40:39.902] [DEBUG] -- connect obshell (192.168.124.32:2886)

[2024-12-06 16:40:39.903] [INFO] Connect to observer

[2024-12-06 16:40:39.903] [DEBUG] -- connect 192.168.124.46 -P2881 -uroot -p******

[2024-12-06 16:40:39.907] [DEBUG] -- connect 192.168.124.49 -P2881 -uroot -p******

[2024-12-06 16:40:39.911] [DEBUG] -- connect 192.168.124.32 -P2881 -uroot -p******

[2024-12-06 16:41:55.196] [DEBUG] -- execute sql: select 1. args: None

[2024-12-06 16:41:55.200] [DEBUG] - sub connect ref count to 0

[2024-12-06 16:41:55.200] [DEBUG] - export connect

[2024-12-06 16:41:55.200] [DEBUG] - plugin oceanbase-ce-py_script_connect-4.2.2.0 result: True

[2024-12-06 16:41:55.200] [INFO] Initialize oceanbase-ce

[2024-12-06 16:41:55.201] [DEBUG] - Call oceanbase-ce-py_script_bootstrap-4.2.2.0 for oceanbase-ce-4.3.4.0-100000162024110717.el7-5d59e837a0ecff1a6baa20f72747c343ac7c8dce

[2024-12-06 16:41:55.201] [DEBUG] - import bootstrap

[2024-12-06 16:41:55.204] [DEBUG] - add bootstrap ref count to 1

[2024-12-06 16:41:55.205] [DEBUG] -- bootstrap for components: dict_keys(['oceanbase-ce', 'obproxy-ce'])

[2024-12-06 16:41:55.205] [DEBUG] -- execute sql: set session ob_query_timeout=1000000000

[2024-12-06 16:41:55.205] [DEBUG] -- execute sql: set session ob_query_timeout=1000000000. args: None

[2024-12-06 16:41:55.208] [DEBUG] -- execute sql: alter system bootstrap REGION "sys_region" ZONE "zone1" SERVER "192.168.124.46:2882",REGION "sys_region" ZONE "zone2" SERVER "192.168.124.49:2882",REGION "sys_region" ZONE "zone3" SERVER "192.168.124.32:2882". args: None

[2024-12-06 16:47:35.948] [DEBUG] -- execute sql: alter user "root" IDENTIFIED BY %s. args: ['******']

[2024-12-06 16:47:38.055] [DEBUG] -- execute sql: select * from oceanbase.__all_server. args: None

[2024-12-06 16:47:38.083] [DEBUG] -- root@192.168.124.46 execute: ls /root/myoceanbase/oceanbase/.meta

[2024-12-06 16:47:38.175] [DEBUG] -- exited code 2, error output:

[2024-12-06 16:47:38.176] [DEBUG] ls: cannot access '/root/myoceanbase/oceanbase/.meta': No such file or directory

[2024-12-06 16:47:38.176] [DEBUG]

[2024-12-06 16:47:38.176] [DEBUG] --

[2024-12-06 16:47:38.176] [DEBUG] -- ls: cannot access '/root/myoceanbase/oceanbase/.meta': No such file or directory

[2024-12-06 16:47:38.176] [DEBUG]

[2024-12-06 16:47:38.176] [DEBUG] -- root@192.168.124.46 execute: cat /root/myoceanbase/oceanbase/run/obshell.pid

[2024-12-06 16:47:38.306] [DEBUG] -- exited code 1, error output:

[2024-12-06 16:47:38.306] [DEBUG] cat: /root/myoceanbase/oceanbase/run/obshell.pid: No such file or directory

[2024-12-06 16:47:38.306] [DEBUG]

[2024-12-06 16:47:38.307] [DEBUG] -- root@192.168.124.46 execute: strings /root/myoceanbase/oceanbase/etc/observer.conf.bin

[2024-12-06 16:47:38.428] [DEBUG] -- exited code 127, error output:

[2024-12-06 16:47:38.428] [DEBUG] bash: line 1: strings: command not found

[2024-12-06 16:47:38.428] [DEBUG]

[2024-12-06 16:47:38.428] [DEBUG] --

[2024-12-06 16:47:38.428] [DEBUG] -- bash: line 1: strings: command not found

[2024-12-06 16:47:38.428] [DEBUG]

[2024-12-06 16:47:38.429] [DEBUG] -- root@192.168.124.49 execute: ls /root/myoceanbase/oceanbase/.meta

[2024-12-06 16:47:38.523] [DEBUG] -- exited code 2, error output:

[2024-12-06 16:47:38.523] [DEBUG] ls: cannot access '/root/myoceanbase/oceanbase/.meta': No such file or directory

[2024-12-06 16:47:38.523] [DEBUG]

[2024-12-06 16:47:38.523] [DEBUG] --

[2024-12-06 16:47:38.523] [DEBUG] -- ls: cannot access '/root/myoceanbase/oceanbase/.meta': No such file or directory

[2024-12-06 16:47:38.523] [DEBUG]

[2024-12-06 16:47:38.523] [DEBUG] -- root@192.168.124.49 execute: cat /root/myoceanbase/oceanbase/run/obshell.pid

[2024-12-06 16:47:38.654] [DEBUG] -- exited code 1, error output:

[2024-12-06 16:47:38.655] [DEBUG] cat: /root/myoceanbase/oceanbase/run/obshell.pid: No such file or directory

[2024-12-06 16:47:38.655] [DEBUG]

[2024-12-06 16:47:38.655] [DEBUG] -- root@192.168.124.49 execute: strings /root/myoceanbase/oceanbase/etc/observer.conf.bin

[2024-12-06 16:47:38.781] [DEBUG] -- exited code 127, error output:

[2024-12-06 16:47:38.782] [DEBUG] bash: line 1: strings: command not found

[2024-12-06 16:47:38.782] [DEBUG]

[2024-12-06 16:47:38.782] [DEBUG] --

[2024-12-06 16:47:38.782] [DEBUG] -- bash: line 1: strings: command not found

[2024-12-06 16:47:38.782] [DEBUG]

[2024-12-06 16:47:38.782] [DEBUG] -- root@192.168.124.32 execute: ls /root/myoceanbase/oceanbase/.meta

[2024-12-06 16:47:38.866] [DEBUG] -- exited code 2, error output:

[2024-12-06 16:47:38.867] [DEBUG] ls: cannot access '/root/myoceanbase/oceanbase/.meta': No such file or directory

[2024-12-06 16:47:38.867] [DEBUG]

[2024-12-06 16:47:38.867] [DEBUG] --

[2024-12-06 16:47:38.867] [DEBUG] -- ls: cannot access '/root/myoceanbase/oceanbase/.meta': No such file or directory

[2024-12-06 16:47:38.867] [DEBUG]

[2024-12-06 16:47:38.867] [DEBUG] -- root@192.168.124.32 execute: cat /root/myoceanbase/oceanbase/run/obshell.pid

[2024-12-06 16:47:38.984] [DEBUG] -- exited code 1, error output:

[2024-12-06 16:47:38.985] [DEBUG] cat: /root/myoceanbase/oceanbase/run/obshell.pid: No such file or directory

[2024-12-06 16:47:38.985] [DEBUG]

[2024-12-06 16:47:38.985] [DEBUG] -- root@192.168.124.32 execute: strings /root/myoceanbase/oceanbase/etc/observer.conf.bin

[2024-12-06 16:47:39.100] [DEBUG] -- exited code 127, error output:

[2024-12-06 16:47:39.101] [DEBUG] bash: line 1: strings: command not found

[2024-12-06 16:47:39.101] [DEBUG]

[2024-12-06 16:47:39.101] [DEBUG] --

[2024-12-06 16:47:39.101] [DEBUG] -- bash: line 1: strings: command not found

[2024-12-06 16:47:39.101] [DEBUG]

[2024-12-06 16:47:39.102] [DEBUG] -- root@192.168.124.46 execute: cat /root/myoceanbase/oceanbase/run/obshell.pid

[2024-12-06 16:47:39.180] [DEBUG] -- exited code 1, error output:

[2024-12-06 16:47:39.180] [DEBUG] cat: /root/myoceanbase/oceanbase/run/obshell.pid: No such file or directory

[2024-12-06 16:47:39.180] [DEBUG]

[2024-12-06 16:47:39.181] [DEBUG] -- root@192.168.124.46 export OB_ROOT_PASSWORD=''******''

[2024-12-06 16:47:39.181] [DEBUG] -- start obshell: cd /root/myoceanbase/oceanbase; /root/myoceanbase/oceanbase/bin/obshell admin start --ip 192.168.124.46 --port 2886

[2024-12-06 16:47:39.181] [DEBUG] -- root@192.168.124.46 execute: cd /root/myoceanbase/oceanbase; /root/myoceanbase/oceanbase/bin/obshell admin start --ip 192.168.124.46 --port 2886

[2024-12-06 16:49:58.531] [DEBUG] -- exited code 0

[2024-12-06 16:49:58.531] [DEBUG] -- root@192.168.124.49 execute: cat /root/myoceanbase/oceanbase/run/obshell.pid

[2024-12-06 16:49:58.629] [DEBUG] -- exited code 1, error output:

[2024-12-06 16:49:58.629] [DEBUG] cat: /root/myoceanbase/oceanbase/run/obshell.pid: No such file or directory

[2024-12-06 16:49:58.629] [DEBUG]

[2024-12-06 16:49:58.629] [DEBUG] -- root@192.168.124.49 export OB_ROOT_PASSWORD=''******''

[2024-12-06 16:49:58.630] [DEBUG] -- start obshell: cd /root/myoceanbase/oceanbase; /root/myoceanbase/oceanbase/bin/obshell admin start --ip 192.168.124.49 --port 2886

[2024-12-06 16:49:58.630] [DEBUG] -- root@192.168.124.49 execute: cd /root/myoceanbase/oceanbase; /root/myoceanbase/oceanbase/bin/obshell admin start --ip 192.168.124.49 --port 2886

[2024-12-06 16:50:44.920] [DEBUG] -- exited code 0

[2024-12-06 16:50:44.920] [DEBUG] -- root@192.168.124.32 execute: cat /root/myoceanbase/oceanbase/run/obshell.pid

[2024-12-06 16:50:45.018] [DEBUG] -- exited code 1, error output:

[2024-12-06 16:50:45.019] [DEBUG] cat: /root/myoceanbase/oceanbase/run/obshell.pid: No such file or directory

[2024-12-06 16:50:45.019] [DEBUG]

[2024-12-06 16:50:45.019] [DEBUG] -- root@192.168.124.32 export OB_ROOT_PASSWORD=''******''

[2024-12-06 16:50:45.020] [DEBUG] -- start obshell: cd /root/myoceanbase/oceanbase; /root/myoceanbase/oceanbase/bin/obshell admin start --ip 192.168.124.32 --port 2886

[2024-12-06 16:50:45.020] [DEBUG] -- root@192.168.124.32 execute: cd /root/myoceanbase/oceanbase; /root/myoceanbase/oceanbase/bin/obshell admin start --ip 192.168.124.32 --port 2886

[2024-12-06 16:51:33.795] [DEBUG] -- exited code 0

[2024-12-06 16:51:36.799] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/status, data: {}, headers: None, params: None

[2024-12-06 16:51:36.815] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/status, data: {}, headers: None, params: None

[2024-12-06 16:51:36.830] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/status, data: {}, headers: None, params: None

[2024-12-06 16:51:39.991] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/task/dag/maintain/agent, data: rKXi5FNjZytmLV4SvfX7vQ==, headers: None, params: None

[2024-12-06 16:51:39.992] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/secret, data: {}, headers: None, params: None

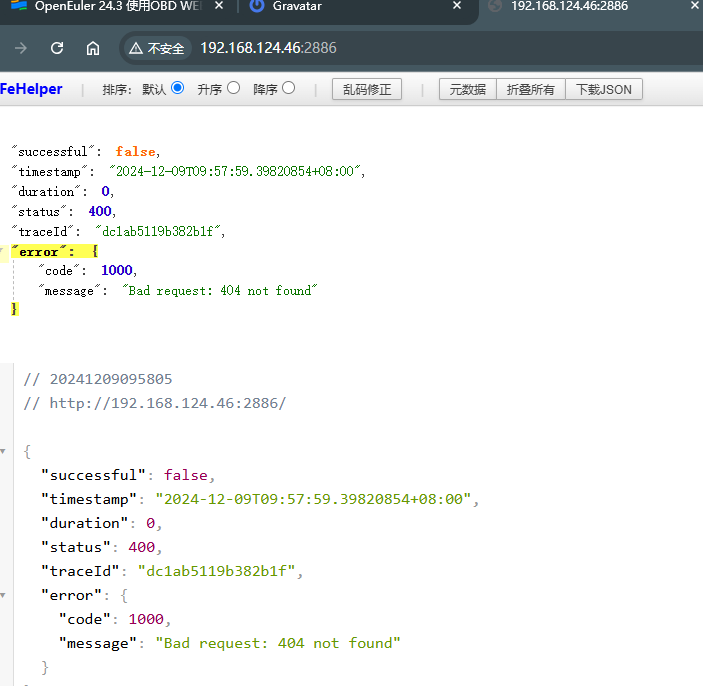

[2024-12-06 16:51:40.001] [DEBUG] -- request obshell failed: <Response [404]>

[2024-12-06 16:51:40.016] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/task/dag/maintain/agent, data: lttgDDGmPPxGWFvlj6hlDA==, headers: None, params: None

[2024-12-06 16:51:40.017] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/secret, data: {}, headers: None, params: None

[2024-12-06 16:51:40.026] [DEBUG] -- request obshell failed: <Response [404]>

[2024-12-06 16:51:40.035] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/task/dag/maintain/agent, data: kqAO8qDCrS+QI2/Bb0BZbA==, headers: None, params: None

[2024-12-06 16:51:40.035] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/secret, data: {}, headers: None, params: None

[2024-12-06 16:51:40.159] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/task/dag/23232267296028861, data: kqAO8qDCrS+QI2/Bb0BZbA==, headers: None, params: None

[2024-12-06 16:51:40.159] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/secret, data: {}, headers: None, params: None

[2024-12-06 16:51:41.171] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/task/dag/23232267296028861, data: kqAO8qDCrS+QI2/Bb0BZbA==, headers: None, params: None

[2024-12-06 16:51:41.171] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/secret, data: {}, headers: None, params: None

[2024-12-06 16:51:45.724] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/task/dag/23232267296028861, data: kqAO8qDCrS+QI2/Bb0BZbA==, headers: None, params: None

[2024-12-06 16:51:45.725] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/secret, data: {}, headers: None, params: None

[2024-12-06 16:51:47.877] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/task/dag/23232267296028861, data: kqAO8qDCrS+QI2/Bb0BZbA==, headers: None, params: None

[2024-12-06 16:51:47.878] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/secret, data: {}, headers: None, params: None

[2024-12-06 16:51:51.226] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/task/dag/23232267296028861, data: kqAO8qDCrS+QI2/Bb0BZbA==, headers: None, params: None

[2024-12-06 16:51:51.226] [DEBUG] -- send request to obshell: method: GET, url: /api/v1/secret, data: {}, headers: None, params: None

[2024-12-06 16:51:56.249] [DEBUG] -- request obshell failed: <Response [404]>

[2024-12-06 16:51:56.249] [DEBUG] -- find take over dag failed, count: 600

[2024-12-06 16:51:57.253] [ERROR] obshell take over failed

[2024-12-06 16:51:57.253] [DEBUG] - sub bootstrap ref count to 0

[2024-12-06 16:51:57.253] [DEBUG] - export bootstrap

[2024-12-06 16:51:57.253] [DEBUG] - plugin oceanbase-ce-py_script_bootstrap-4.2.2.0 result: False

[2024-12-06 16:51:57.253] [INFO] [ERROR] obshell take over failed

[2024-12-06 16:51:57.253] [INFO]

[2024-12-06 16:51:57.253] [ERROR] Cluster init failed
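Several steps in the log above are slow: by the timestamps, `alter system bootstrap` takes about 5 min 40 s, and each `obshell admin start` takes between roughly 46 s and 2 min 19 s. Below is a minimal sketch for finding such gaps automatically in an OBD log. The function names and the 30-second threshold are my own assumptions, and the sample lines are copied from the log above.

```python
import re
from datetime import datetime

# Matches "[2024-12-06 16:40:35.450] [DEBUG] message"
LINE_RE = re.compile(
    r"^\[(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3})\] \[(\w+)\] ?(.*)$"
)


def parse_obd_log(lines):
    """Return (timestamp, level, message) tuples for lines that match."""
    entries = []
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m:
            ts = datetime.strptime(m.group(1), "%Y-%m-%d %H:%M:%S.%f")
            entries.append((ts, m.group(2), m.group(3)))
    return entries


def slow_steps(entries, threshold_s=30.0):
    """Return (gap_seconds, message) for entries followed by a long pause."""
    result = []
    for (t0, _, msg), (t1, _, _) in zip(entries, entries[1:]):
        gap = (t1 - t0).total_seconds()
        if gap >= threshold_s:
            result.append((gap, msg))
    return result


SAMPLE = [
    "[2024-12-06 16:47:39.181] [DEBUG] -- root@192.168.124.46 execute: cd /root/myoceanbase/oceanbase; /root/myoceanbase/oceanbase/bin/obshell admin start --ip 192.168.124.46 --port 2886",
    "[2024-12-06 16:49:58.531] [DEBUG] -- exited code 0",
    "[2024-12-06 16:49:58.531] [DEBUG] -- root@192.168.124.49 execute: cat /root/myoceanbase/oceanbase/run/obshell.pid",
]

if __name__ == "__main__":
    for gap, msg in slow_steps(parse_obd_log(SAMPLE)):
        print(f"{gap:8.2f}s  {msg}")
```

To run it on the full log, pass the lines of the OBD log file to `parse_obd_log` instead of `SAMPLE`.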

[Steps to reproduce] The config is as follows:

user:
  username: root
  password: *****
  port: 22
oceanbase-ce:
  version: 4.3.4.0
  release: 100000162024110717.el7
  package_hash: 5d59e837a0ecff1a6baa20f72747c343ac7c8dce
  192.168.124.46:
    zone: zone1
    datafile_maxsize: 64G
    datafile_next: 6G
  192.168.124.49:
    zone: zone2
    datafile_maxsize: 64G
    datafile_next: 6G
  192.168.124.32:
    zone: zone3
    datafile_maxsize: 26G
    datafile_next: 3G
  servers:
    - 192.168.124.46
    - 192.168.124.49
    - 192.168.124.32
  global:
    appname: myoceanbase
    root_password: Yanfa2023@
    mysql_port: 2881
    rpc_port: 2882
    home_path: /root/myoceanbase/oceanbase
    scenario: htap
    cluster_id: 1733473891
    proxyro_password: iMoFmDyt3U
    enable_syslog_wf: false
    max_syslog_file_count: 4
    memory_limit: 10G
    datafile_size: 25G
    system_memory: 3G
    log_disk_size: 25G
    cpu_count: 16
    production_mode: false

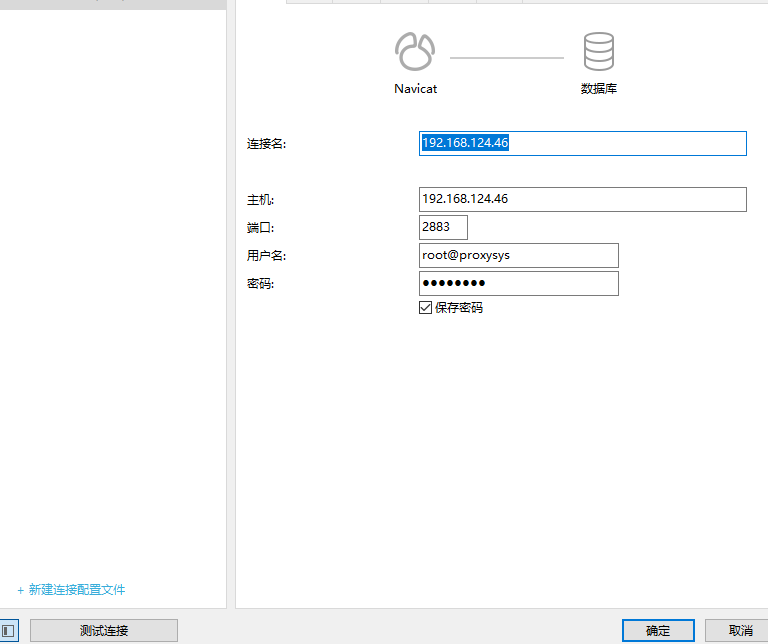

obproxy-ce:
  version: 4.3.2.0
  package_hash: fd779e401be448715254165b1a4f7205c4c1bda5
  release: 26.el7
  servers:
    - 192.168.124.46
  global:
    prometheus_listen_port: 2884
    listen_port: 2883
    rpc_listen_port: 2885
    home_path: /root/myoceanbase/obproxy
    obproxy_sys_password: Bjn@W21dzSW~JP
    skip_proxy_sys_private_check: true
    enable_strict_kernel_release: false
    enable_cluster_checkout: false
  192.168.124.46:
    proxy_id: 4135
    client_session_id_version: 2
  depends:
    - oceanbase-ce
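The OBD-1012 warning in the log says clog and data share the same disk (/) on all three servers, so `datafile_maxsize` and `log_disk_size` draw on the same filesystem. Below is a rough sketch of the worst-case space each server may need under this config. It assumes the `G` suffix means GiB and ignores logs and other files; the helper names are hypothetical.

```python
import shutil

UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def to_bytes(size):
    """Convert a size string such as '25G' to bytes (assumes binary units)."""
    size = size.strip().upper().rstrip("B")
    return int(float(size[:-1]) * UNITS[size[-1]])


def disk_needed(datafile_maxsize, log_disk_size):
    """Worst-case bytes used by the data file plus the clog on one disk."""
    return to_bytes(datafile_maxsize) + to_bytes(log_disk_size)


def free_bytes(path="/"):
    """Free space on the filesystem holding `path`, on the local machine."""
    return shutil.disk_usage(path).free


# Values copied from the config above; log_disk_size comes from `global`.
LOG_DISK_SIZE = "25G"
DATAFILE_MAXSIZE = {
    "192.168.124.46": "64G",
    "192.168.124.49": "64G",
    "192.168.124.32": "26G",
}

if __name__ == "__main__":
    for ip, maxsize in DATAFILE_MAXSIZE.items():
        need = disk_needed(maxsize, LOG_DISK_SIZE)
        print(f"{ip}: up to {need / 1024**3:.0f} GiB for data + clog")
```

Running `free_bytes("/")` on each server and comparing it with the figure for that server shows whether the root filesystem has enough headroom.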
