【Environment】Production or test environment

【OB or other component】

【Version】

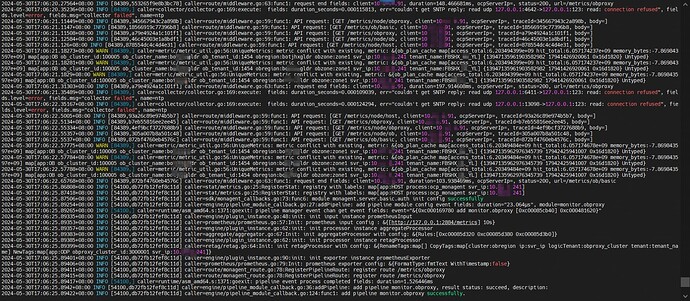

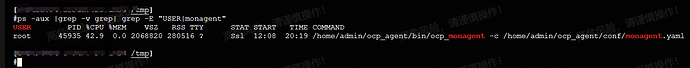

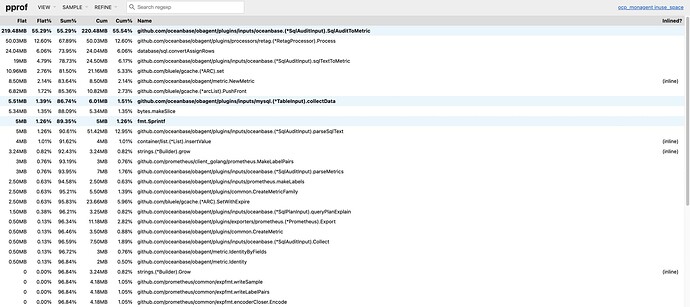

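【Problem description】ocp_monagent restarted, and I suspect the restart was triggered by exceeding the memory limit. What caused memory usage to exceed the limit is unclear. According to the log, the restart happened at 【2024-05-30T17:06:25.73613】. Before the restart, the monagent log contains "WARN: metric conflict with existing" entries. Could the restart be related to them?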
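To pin down the restart boundary in the log, the number at the start of the square brackets in each line can be compared from one line to the next. The following is a minimal sketch, assuming that the first number in `[84389,...]` is the process ID (an assumption based on how it changes in the excerpt, not something confirmed by the documentation). The function name `find_pid_changes` is hypothetical, and the two sample lines are abridged from the log attached to this post.

```python
import re

# Matches the leading "<timestamp> <LEVEL> [<number>,<trace id>]" part of a log line.
# Assumption: the first number inside the brackets is the process ID.
LINE_RE = re.compile(r"^(\S+)\s+(\w+)\s+\[(\d+),([0-9a-f]*)\]")


def find_pid_changes(lines):
    """Return one tuple per point where the bracketed number changes.

    Each tuple is (last timestamp of old PID, old PID,
                   first timestamp of new PID, new PID).
    """
    changes = []
    prev_ts, prev_pid = None, None
    for line in lines:
        m = LINE_RE.match(line)
        if not m:
            continue  # skip blank lines and anything that is not a log entry
        ts, pid = m.group(1), m.group(3)
        if prev_pid is not None and pid != prev_pid:
            changes.append((prev_ts, prev_pid, ts, pid))
        prev_ts, prev_pid = ts, pid
    return changes


# Two lines abridged from the attached log.
sample = [
    "2024-05-30T17:06:22.65768+08:00 INFO [84389,872bf4760eb4576c] "
    "caller=route/middleware.go:63:func1: request end fields: status=200",
    "2024-05-30T17:06:25.86808+08:00 INFO [54100,db72fb12fef8c11d] "
    "caller=stat/metrics.go:25:RegisterStat: registry with labels",
]

for change in find_pid_changes(sample):
    print(change)
```

Run over the whole log file, this would list every point where the bracketed number changes, which can then be compared with the suspected restart time.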

【Steps to reproduce】Operations performed before and after the problem occurred

【Attachments and logs】

monagent.log

grep "2024-05-30T17:06:2" monagent-2024-05-30T17-42-27.827.log|more

2024-05-30T17:06:20.00549+08:00 INFO [84389,26de405bc9187578] caller=oceanbase/slow_sql.go:258:collectByTenant: slow sql pipeline collect tenant 1438 sql_audit data round 20, collect time:2024-05-30 17:06:19 +0800 CST, start_request_id:2079224598, min_request_id:2079224599, max_request_id:2079228598

2024-05-30T17:06:20.02227+08:00 INFO [84389,26de405bc9187578] caller=oceanbase/slow_sql.go:258:collectByTenant: slow sql pipeline collect tenant 1438 sql_audit data round 21, collect time:2024-05-30 17:06:19 +0800 CST, start_request_id:2079228598, min_request_id:2079228599, max_request_id:2079232598

2024-05-30T17:06:20.03913+08:00 INFO [84389,26de405bc9187578] caller=oceanbase/slow_sql.go:258:collectByTenant: slow sql pipeline collect tenant 1438 sql_audit data round 22, collect time:2024-05-30 17:06:19 +0800 CST, start_request_id:2079232598, min_request_id:2079232599, max_request_id:2079236038

2024-05-30T17:06:20.05199+08:00 INFO [84389,26de405bc9187578] caller=oceanbase/slow_sql.go:255:collectByTenant: slow sql pipeline collect tenant 1438 sql_audit data round 23 complete, collect time:2024-05-30 17:06:19 +0800 CST, start_request_id:2079236038

2024-05-30T17:06:20.05205+08:00 INFO [84389,26de405bc9187578] caller=oceanbase/slow_sql.go:171:Collect: slow sql pipeline collect data complete, collect start time:2024-05-30 17:06:19 +0800 CST, end time:2024-05-30 17:06:20 +0800 CST, Metrics number 25

2024-05-30T17:06:20.05216+08:00 INFO [84389,26de405bc9187578] caller=engine/pipeline.go:134:pipelinePush: push pipeline ob_slow_sql metrics count 25

2024-05-30T17:06:20.11161+08:00 INFO [84389,872c837e51a11273] caller=route/middleware.go:59:func1: API request: [GET /metrics/node/host, client=10...91, ocpServerIp=, traceId=872c837e51a11273, body=]

2024-05-30T17:06:20.12692+08:00 INFO [84389,6b6fc1b5bde59262] caller=route/middleware.go:59:func1: API request: [GET /metrics/node/obproxy, client=10...91, ocpServerIp=, traceId=6b6fc1b5bde59262, body=]

2024-05-30T17:06:20.12713+08:00 INFO [84389,553265f9e8b3bc78] caller=route/middleware.go:59:func1: API request: [GET /metrics/obproxy, client=10...91, ocpServerIp=, traceId=553265f9e8b3bc78, body=]

2024-05-30T17:06:20.14618+08:00 INFO [84389,13c768d980dbd955] caller=route/middleware.go:59:func1: API request: [GET /metrics/ob/basic, client=10...91, ocpServerIp=, traceId=13c768d980dbd955, body=]

2024-05-30T17:06:20.14649+08:00 INFO [84389,a4a6d5f5f1e62264] caller=route/middleware.go:59:func1: API request: [GET /metrics/node/ob, client=10...91, ocpServerIp=, traceId=a4a6d5f5f1e62264, body=]

2024-05-30T17:06:20.1626+08:00 WARN [84389,] caller=metric/metric_util.go:56:UniqueMetrics: metric conflict with existing, metric: &{ob_plan_cache map[access_total:6.203494027e+09 hit_total:6.057173865e+09 memory_bytes:-7.869843597e+09] map[app:OB ob_cluster_id:100005 ob_cluster_name:boldr ob_tenant_id:1454 obregion:boldr obzone:zone1 svr_ip:10...241 tenant_name:FB**_YL] {13947135960844202203 17940441281102 0x16d1820} Untyped}

2024-05-30T17:06:20.16267+08:00 WARN [84389,] caller=metric/metric_util.go:56:UniqueMetrics: metric conflict with existing, metric: &{ob_plan_cache map[access_total:6.203494027e+09 hit_total:6.057173865e+09 memory_bytes:-7.869843597e+09] map[app:OB ob_cluster_id:100005 ob_cluster_name:boldr ob_tenant_id:1454 obregion:boldr obzone:zone1 svr_ip:10..*.241 tenant_name:FB**_YL] {13947135960844202203 17940441281102 0x16d1820} Untyped}

2024-05-30T17:06:20.16272+08:00 WARN [84389,] caller=metric/metric_util.go:56:UniqueMetrics: metric conflict with existing, metric: &{ob_plan_cache map[access_total:6.203494027e+09 hit_total:6.057173865e+09 memory_bytes:-7.869843597e+09] map[app:OB ob_cluster_id:100005 ob_cluster_name:boldr ob_tenant_id:1454 obregion:boldr obzone:zone1 svr_ip:10...241 tenant_name:FB**_YL] {13947135960844202203 17940441281102 0x16d1820} Untyped}

2024-05-30T17:06:20.27564+08:00 INFO [84389,553265f9e8b3bc78] caller=route/middleware.go:63:func1: request end fields: client=10...91, duration=148.466681ms, ocpServerIp=, status=200, url=/metrics/obproxy

2024-05-30T17:06:20.35236+08:00 INFO [84389,] caller=collector/collector.go:169:execute: fields: duration_seconds=0.000115813, err="couldn't get SNTP reply: read udp 127.0.0.1:4642->127.0.0.1:123: read: connection refused", fields.level=error, fields.msg="collector failed", name=ntp

2024-05-30T17:06:21.11449+08:00 INFO [84389,345667943c2a898b] caller=route/middleware.go:59:func1: API request: [GET /metrics/node/obproxy, client=10...91, ocpServerIp=, traceId=345667943c2a898b, body=]

2024-05-30T17:06:21.1147+08:00 INFO [84389,18566919c77396b8] caller=route/middleware.go:59:func1: API request: [GET /metrics/ob/basic, client=10...91, ocpServerIp=, traceId=18566919c77396b8, body=]

2024-05-30T17:06:21.11508+08:00 INFO [84389,a79e4924a1c101f1] caller=route/middleware.go:59:func1: API request: [GET /metrics/obproxy, client=10...91, ocpServerIp=, traceId=a79e4924a1c101f1, body=]

2024-05-30T17:06:21.12584+08:00 INFO [84389,46c45003e1a8bdf1] caller=route/middleware.go:59:func1: API request: [GET /metrics/node/ob, client=10...91, ocpServerIp=, traceId=46c45003e1a8bdf1, body=]

2024-05-30T17:06:21.126+08:00 INFO [84389,878554dc4c4d4e31] caller=route/middleware.go:59:func1: API request: [GET /metrics/node/host, client=10...91, ocpServerIp=, traceId=878554dc4c4d4e31, body=]

2024-05-30T17:06:21.18273+08:00 WARN [84389,] caller=metric/metric_util.go:56:UniqueMetrics: metric conflict with existing, metric: &{ob_plan_cache map[access_total:6.203494399e+09 hit_total:6.057174237e+09 memory_bytes:-7.869843597e+09] map[app:OB ob_cluster_id:100005 ob_cluster_name:boldr ob_tenant_id:1454 obregion:boldr obzone:zone1 svr_ip:10...241 tenant_name:FB**_YL] {13947135961903582982 17941426920061 0x16d1820} Untyped}

2024-05-30T17:06:21.18281+08:00 WARN [84389,] caller=metric/metric_util.go:56:UniqueMetrics: metric conflict with existing, metric: &{ob_plan_cache map[access_total:6.203494399e+09 hit_total:6.057174237e+09 memory_bytes:-7.869843597e+09] map[app:OB ob_cluster_id:100005 ob_cluster_name:boldr ob_tenant_id:1454 obregion:boldr obzone:zone1 svr_ip:10...241 tenant_name:FB**_YL] {13947135961903582982 17941426920061 0x16d1820} Untyped}

2024-05-30T17:06:21.1829+08:00 WARN [84389,] caller=metric/metric_util.go:56:UniqueMetrics: metric conflict with existing, metric: &{ob_plan_cache map[access_total:6.203494399e+09 hit_total:6.057174237e+09 memory_bytes:-7.869843597e+09] map[app:OB ob_cluster_id:100005 ob_cluster_name:boldr ob_tenant_id:1454 obregion:boldr obzone:zone1 svr_ip:10...241 tenant_name:FB**_YL] {13947135961903582982 17941426920061 0x16d1820} Untyped}

2024-05-30T17:06:21.31303+08:00 INFO [84389,a79e4924a1c101f1] caller=route/middleware.go:63:func1: request end fields: client=10...91, duration=197.914608ms, ocpServerIp=, status=200, url=/metrics/obproxy

2024-05-30T17:06:21.35489+08:00 INFO [84389,] caller=collector/collector.go:169:execute: fields: duration_seconds=0.000109039, err="couldn't get SNTP reply: read udp 127.0.0.1:6411->127.0.0.1:123: read: connection refused", fields.level=error, fields.msg="collector failed", name=ntp

2024-05-30T17:06:22.35167+08:00 INFO [84389,] caller=collector/collector.go:169:execute: fields: duration_seconds=0.000124294, err="couldn't get SNTP reply: read udp 127.0.0.1:13098->127.0.0.1:123: read: connection refused", fields.level=error, fields.msg="collector failed", name=ntp

2024-05-30T17:06:22.5005+08:00 INFO [84389,93a26c89e974b5b7] caller=route/middleware.go:59:func1: API request: [GET /metrics/node/host, client=10...91, ocpServerIp=, traceId=93a26c89e974b5b7, body=]

2024-05-30T17:06:22.5134+08:00 INFO [84389,b7eb55816ee2ee45] caller=route/middleware.go:59:func1: API request: [GET /metrics/obproxy, client=10...91, ocpServerIp=, traceId=b7eb55816ee2ee45, body=]

2024-05-30T17:06:22.55334+08:00 INFO [84389,4ef9bcf3727688b9] caller=route/middleware.go:59:func1: API request: [GET /metrics/node/obproxy, client=10...91, ocpServerIp=, traceId=4ef9bcf3727688b9, body=]

2024-05-30T17:06:22.55357+08:00 INFO [84389,305a007b8a501c48] caller=route/middleware.go:59:func1: API request: [GET /metrics/node/ob, client=10...91, ocpServerIp=, traceId=305a007b8a501c48, body=]

2024-05-30T17:06:22.5537+08:00 INFO [84389,872bf4760eb4576c] caller=route/middleware.go:59:func1: API request: [GET /metrics/ob/basic, client=10...91, ocpServerIp=, traceId=872bf4760eb4576c, body=]

2024-05-30T17:06:22.57775+08:00 WARN [84389,] caller=metric/metric_util.go:56:UniqueMetrics: metric conflict with existing, metric: &{ob_plan_cache map[access_total:6.20349484e+09 hit_total:6.057174678e+09 memory_bytes:-7.869843597e+09] map[app:OB ob_cluster_id:100005 ob_cluster_name:boldr ob_tenant_id:1454 obregion:boldr obzone:zone1 svr_ip:10...241 tenant_name:FB**_YL] {13947135962976345739 17942425941007 0x16d1820} Untyped}

2024-05-30T17:06:22.57784+08:00 WARN [84389,] caller=metric/metric_util.go:56:UniqueMetrics: metric conflict with existing, metric: &{ob_plan_cache map[access_total:6.20349484e+09 hit_total:6.057174678e+09 memory_bytes:-7.869843597e+09] map[app:OB ob_cluster_id:100005 ob_cluster_name:boldr ob_tenant_id:1454 obregion:boldr obzone:zone1 svr_ip:10...241 tenant_name:FB**_YL] {13947135962976345739 17942425941007 0x16d1820} Untyped}

2024-05-30T17:06:22.57788+08:00 WARN [84389,] caller=metric/metric_util.go:56:UniqueMetrics: metric conflict with existing, metric: &{ob_plan_cache map[access_total:6.20349484e+09 hit_total:6.057174678e+09 memory_bytes:-7.869843597e+09] map[app:OB ob_cluster_id:100005 ob_cluster_name:boldr ob_tenant_id:1454 obregion:boldr obzone:zone1 svr_ip:10...241 tenant_name:FB**__YL] {13947135962976345739 17942425941007 0x16d1820} Untyped}

2024-05-30T17:06:22.65768+08:00 INFO [84389,872bf4760eb4576c] caller=route/middleware.go:63:func1: request end fields: client=10...91, duration=103.938469ms, ocpServerIp=, status=200, url=/metrics/ob/basic

2024-05-30T17:06:25.86808+08:00 INFO [54100,db72fb12fef8c11d] caller=stat/metrics.go:25:RegisterStat: registry with labels: map[app:HOST process:ocp_monagent svr_ip:10...241]

2024-05-30T17:06:25.87418+08:00 INFO [54100,db72fb12fef8c11d] caller=stat/metrics.go:25:RegisterStat: registry with labels: map[app:HOST process:ocp_monagent svr_ip:10...241]

2024-05-30T17:06:25.87506+08:00 INFO [54100,db72fb12fef8c11d] caller=sdk/monagent_callbacks.go:73:func6: module monagent.server.basic.auth init config successfully

2024-05-30T17:06:25.89254+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/pipeline_module_callback.go:27:addPipeline: add pipeline module config event fields: duration="23.064µs", module=monitor.obproxy

2024-05-30T17:06:25.89265+08:00 INFO [54100,] caller=runtime/asm_amd64.s:1371:goexit: pipeline manager event chan get event fields: event="&{0xc000169780 add monitor.obproxy [0xc00085cb40] 0xc000481620}"

2024-05-30T17:06:25.89335+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/plugin_instance.go:48:init: init input instance prometheusInput

2024-05-30T17:06:25.89353+08:00 INFO [54100,db72fb12fef8c11d] caller=prometheus/prometheus.go:57:Init: prometheus input config : &{[http://127.0.0.1:2884/metrics] 10s}

2024-05-30T17:06:25.89357+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/plugin_instance.go:62:init: init processor instance aggregateProcessor

2024-05-30T17:06:25.89383+08:00 INFO [54100,db72fb12fef8c11d] caller=aggregate/aggregator.go:67:Init: init aggregateProcessor with config: &{Rules:[0xc00085d320 0xc00085d380 0xc00085d3b0]}

2024-05-30T17:06:25.89385+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/plugin_instance.go:62:init: init processor instance retagProcessor

2024-05-30T17:06:25.89399+08:00 INFO [54100,db72fb12fef8c11d] caller=retag/retag.go:64:Init: init retagProcessor with config: &{RenameTags:map[] CopyTags:map[cluster:obregion ip:svr_ip logicTenant:obproxy_cluster tenant:tenant_name] NewTags:map[app:ODP obproxy_cluster_id:3000001 svr_ip:10...241]}

2024-05-30T17:06:25.89401+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/plugin_instance.go:90:init: init exporter instance prometheusExporter

2024-05-30T17:06:25.89406+08:00 INFO [54100,db72fb12fef8c11d] caller=prometheus/prometheus.go:79:Init: prometheus exporter config: &{FormatType:fmtText WithTimestamp:false}

2024-05-30T17:06:25.89411+08:00 INFO [54100,db72fb12fef8c11d] caller=route/monagent_route.go:78:RegisterPipelineRoute: register route /metrics/obproxy

2024-05-30T17:06:25.89415+08:00 INFO [54100,db72fb12fef8c11d] caller=route/monagent_route.go:78:RegisterPipelineRoute: register route /metrics/obproxy

2024-05-30T17:06:25.89417+08:00 INFO [54100,] caller=runtime/asm_amd64.s:1371:goexit: pipeline event process completed fields: duration=1.526446ms

2024-05-30T17:06:25.8942+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/pipeline_module_callback.go:36:addPipeline: add pipeline monitor.obproxy, result status: succeed, description:

2024-05-30T17:06:25.89422+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/pipeline_module_callback.go:124:func1: add pipeline monitor.obproxy successfully.

2024-05-30T17:06:25.89427+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/pipeline_module_callback.go:27:addPipeline: add pipeline module config event fields: duration="13.834µs", module=monitor.node.host

2024-05-30T17:06:25.8943+08:00 INFO [54100,] caller=runtime/asm_amd64.s:1371:goexit: pipeline manager event chan get event fields: event="&{0xc0001df580 add monitor.node.host [0xc0005a6ae0] 0xc0001a8660}"

2024-05-30T17:06:25.89432+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/plugin_instance.go:48:init: init input instance nodeExporterInput

2024-05-30T17:06:25.89478+08:00 INFO [54100,] caller=collector/filesystem_common.go:110:NewFilesystemCollector: fields: collector=filesystem, fields.level=info, fields.msg="Parsed flag --collector.filesystem.mount-points-exclude", flag="^/(dev|proc|sys|var/lib/docker/.+)($|/)"

2024-05-30T17:06:25.89607+08:00 INFO [54100,] caller=collector/filesystem_common.go:112:NewFilesystemCollector: fields: collector=filesystem, fields.level=info, fields.msg="Parsed flag --collector.filesystem.fs-types-exclude", flag="^(autofs|binfmt_misc|bpf|cgroup2?|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|iso9660|mqueue|nsfs|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|selinuxfs|squashfs|sysfs|tracefs)$"

2024-05-30T17:06:25.89627+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/plugin_instance.go:62:init: init processor instance aggregateProcessor

2024-05-30T17:06:25.89637+08:00 INFO [54100,db72fb12fef8c11d] caller=aggregate/aggregator.go:67:Init: init aggregateProcessor with config: &{Rules:[0xc0005a7ec0]}

2024-05-30T17:06:25.89639+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/plugin_instance.go:62:init: init processor instance excludeFilterProcessor

2024-05-30T17:06:25.89657+08:00 INFO [54100,db72fb12fef8c11d] caller=filter/exclude_filter.go:69:Init: init excludeFilterProcessor with config: &{Conditions:[0xc0005a4a08 0xc0005a4a20 0xc0005a4a38]}

2024-05-30T17:06:25.89658+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/plugin_instance.go:62:init: init processor instance retagProcessor

2024-05-30T17:06:25.89673+08:00 INFO [54100,db72fb12fef8c11d] caller=retag/retag.go:64:Init: init retagProcessor with config: &{RenameTags:map[] CopyTags:map[mountpoint:mount_point] NewTags:map[app:HOST ob_cluster_id:100005 ob_cluster_name:boldr obproxy_cluster:boldrproxy obproxy_cluster_id:3000001 obregion:bo*ldr obzone:zone1 svr_ip:10...241]}

2024-05-30T17:06:25.89674+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/plugin_instance.go:62:init: init processor instance mountLabelProcessor

2024-05-30T17:06:25.89685+08:00 INFO [54100,db72fb12fef8c11d] caller=label/mount_label.go:65:Init: init mountLabelProcessor with config: &{LabelTags:map[checkReadonly:/ dataDiskPath:/data/1 installPath:/home/admin/oceanbase logDiskPath:/data/log1 obMountPath:/home/admin]}

2024-05-30T17:06:25.90818+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/plugin_instance.go:90:init: init exporter instance prometheusExporter

2024-05-30T17:06:25.9083+08:00 INFO [54100,db72fb12fef8c11d] caller=prometheus/prometheus.go:79:Init: prometheus exporter config: &{FormatType:fmtText WithTimestamp:true}

2024-05-30T17:06:25.90833+08:00 INFO [54100,db72fb12fef8c11d] caller=route/monagent_route.go:78:RegisterPipelineRoute: register route /metrics/node/host

2024-05-30T17:06:25.90837+08:00 INFO [54100,db72fb12fef8c11d] caller=route/monagent_route.go:78:RegisterPipelineRoute: register route /metrics/node/host

2024-05-30T17:06:25.9084+08:00 INFO [54100,] caller=runtime/asm_amd64.s:1371:goexit: pipeline event process completed fields: duration=14.100367ms

2024-05-30T17:06:25.90842+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/pipeline_module_callback.go:36:addPipeline: add pipeline monitor.node.host, result status: succeed, description:

2024-05-30T17:06:25.90845+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/pipeline_module_callback.go:124:func1: add pipeline monitor.node.host successfully.

2024-05-30T17:06:25.90849+08:00 INFO [54100,db72fb12fef8c11d] caller=engine/pipeline_module_callback.go:27:addPipeline: add pipeline module config event fields: duration="13.103µs", module=obproxy.custom

2024-05-30T17:06:25.90853+08:00 INFO [54100,] caller=runtime/asm_amd64.s:1371:goexit: pipeline manager event chan get event fields: event="&{0xc0001df900 add obproxy.custom [0xc000032f60] 0xc0001a9e00}"
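To get an overview of the "metric conflict with existing" warnings instead of reading them one by one, they can be counted per second and per metric name. The sketch below does that and also flags any field with a negative value, since `memory_bytes` is negative in every warning in the excerpt above. Whether either observation is related to the restart is not established here. The function name `summarize_conflicts` is hypothetical, and the regular expression is an assumption based only on the line layout seen in this excerpt. The sample lines are abridged (labels and trailing parts dropped).

```python
import re
from collections import Counter

# Assumption: layout inferred from the WARN lines in this excerpt only.
# Captures the timestamp, the metric name, and the first map[...] (the fields).
CONFLICT_RE = re.compile(
    r"^(\S+)\s+WARN\s+\[\d+,[0-9a-f]*\].*"
    r"metric conflict with existing, metric: &\{(\S+) map\[([^\]]*)\]"
)


def summarize_conflicts(lines):
    """Count conflict warnings per (second, metric) and collect negative fields."""
    per_second = Counter()
    negative_fields = set()
    for line in lines:
        m = CONFLICT_RE.match(line)
        if not m:
            continue
        ts, name, fields = m.groups()
        per_second[(ts[:19], name)] += 1  # ts[:19] keeps YYYY-MM-DDTHH:MM:SS
        for pair in fields.split():
            key, _, value = pair.partition(":")
            try:
                if float(value) < 0:
                    negative_fields.add((name, key))
            except ValueError:
                pass  # ignore values that are not plain numbers
    return per_second, negative_fields


# Lines abridged from the attached log.
sample = [
    "2024-05-30T17:06:20.1626+08:00 WARN [84389,] caller=metric/metric_util.go:56:"
    "UniqueMetrics: metric conflict with existing, metric: &{ob_plan_cache "
    "map[access_total:6.203494027e+09 hit_total:6.057173865e+09 "
    "memory_bytes:-7.869843597e+09]",
    "2024-05-30T17:06:20.16267+08:00 WARN [84389,] caller=metric/metric_util.go:56:"
    "UniqueMetrics: metric conflict with existing, metric: &{ob_plan_cache "
    "map[access_total:6.203494027e+09 hit_total:6.057173865e+09 "
    "memory_bytes:-7.869843597e+09]",
    "2024-05-30T17:06:21.18273+08:00 WARN [84389,] caller=metric/metric_util.go:56:"
    "UniqueMetrics: metric conflict with existing, metric: &{ob_plan_cache "
    "map[access_total:6.203494399e+09 hit_total:6.057174237e+09 "
    "memory_bytes:-7.869843597e+09]",
]

counts, negatives = summarize_conflicts(sample)
for (second, metric), n in sorted(counts.items()):
    print(second, metric, n)
print("negative fields:", sorted(negatives))
```

If the per-second counts stay flat over a long period before the restart, the warnings are less likely to be the trigger; a steady increase would be worth reporting together with the agent's memory usage.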
