熊利宏

#1

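【Environment】 Test environment

【OB or other component】

【Version】

【Problem description】 Describe the problem clearly and precisely

【Steps to reproduce】 Operations performed before and after the problem occurred

【Attachments and logs】 We recommend collecting diagnostic information with obdiag, the OceanBase agile diagnostic tool. For details, see:

【SOP Series 22】: Troubleshooting Step 1 (Self-Diagnosis and Collecting Diagnostic Information)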

Running `kubectl get pod -n oceanbase` shows the pods in the `Running` state.

However, `kubectl get obclusters.oceanbase.oceanbase.com metadb -n oceanbase` returns:

```
NAME     STATUS   AGE
metadb   new      57m
```

The STATUS never changes to `running`.

Where is the problem likely to be?
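A `Running` pod phase only says the containers are up; the OBCluster `STATUS` column is set separately by ob-operator as it works through its operations. The sketch below polls that field instead of re-running the command by hand. It assumes bash, and the helper names `get_obcluster_status` and `wait_for_status` are hypothetical, not part of ob-operator.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of ob-operator) for watching an OBCluster's
# top-level .status.status field until it reaches a target value.

# Print the OBCluster status, e.g. "new" or "running".
get_obcluster_status() {
  kubectl get obclusters.oceanbase.oceanbase.com "$1" -n "$2" \
    -o jsonpath='{.status.status}'
}

# wait_for_status FETCH_FN TARGET TRIES INTERVAL_SECONDS [FETCH_ARGS...]
# Calls FETCH_FN with FETCH_ARGS up to TRIES times; returns 0 as soon as the
# printed value equals TARGET, or 1 if it never does.
wait_for_status() {
  local fetch="$1" target="$2" tries="$3" interval="$4"
  shift 4
  local i current
  for ((i = 1; i <= tries; i++)); do
    current="$("$fetch" "$@")"
    if [[ "$current" == "$target" ]]; then
      echo "reached $target after $i check(s)"
      return 0
    fi
    echo "check $i: status=${current:-<empty>}" >&2
    # Skip the pointless sleep after the final attempt.
    if ((i < tries)); then
      sleep "$interval"
    fi
  done
  return 1
}
```

For example, `wait_for_status get_obcluster_status running 60 10 metadb oceanbase` checks every 10 seconds for up to 10 minutes. Passing the fetch function as an argument keeps the loop independent of kubectl, so it can be reused for other resources.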

王利博

#3

Could you post screenshots of `kubectl get crds` and `kubectl get pods -n oceanbase-system`?

Please also confirm which ob-operator image version you are using, and post the obcluster YAML configuration file as well.

Running `kubectl get obclusters.oceanbase.oceanbase.com metadb -n oceanbase -o yaml` prints more complete information.
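To gather everything requested above in one go, a minimal sketch, assuming bash; `collect_ob_diag` is a hypothetical helper, and reading the operator image from the pod spec is one way to answer the version question without guessing the Deployment name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: save the requested outputs into one directory so they
# can be attached to the thread. The operator namespace (oceanbase-system)
# is the one used in this thread.

# collect_ob_diag OUTDIR CLUSTER NAMESPACE
collect_ob_diag() {
  local outdir="$1" cluster="$2" ns="$3"
  mkdir -p "$outdir" || return 1
  kubectl get crds > "$outdir/crds.txt" 2>&1
  kubectl get pods -n oceanbase-system > "$outdir/operator-pods.txt" 2>&1
  # Pod name plus container images in the operator namespace; the image tag
  # shows which ob-operator version is actually running.
  kubectl get pods -n oceanbase-system \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[*].image}{"\n"}{end}' \
    > "$outdir/operator-images.txt" 2>&1
  kubectl get obclusters.oceanbase.oceanbase.com "$cluster" -n "$ns" -o yaml \
    > "$outdir/obcluster-$cluster.yaml" 2>&1
  echo "$outdir"
}
```

Usage: `collect_ob_diag ./ob-diag metadb oceanbase`, then attach the files in `./ob-diag`.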

熊利宏

#6

This is the output of `kubectl get obclusters.oceanbase.oceanbase.com metadb -n oceanbase -o yaml`:

```yaml
apiVersion: oceanbase.oceanbase.com/v1alpha1
kind: OBCluster
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"oceanbase.oceanbase.com/v1alpha1","kind":"OBCluster","metadata":{"annotations":{},"name":"metadb","namespace":"oceanbase"},"spec":{"clusterId":1,"clusterName":"metadb","monitor":{"image":"oceanbase/obagent:4.2.1-100000092023101717","resource":{"cpu":1,"memory":"1Gi"}},"observer":{"image":"oceanbase/oceanbase-cloud-native:4.2.1.1-101010012023111012","resource":{"cpu":2,"memory":"10Gi"},"storage":{"dataStorage":{"size":"50Gi","storageClass":"local-path"},"logStorage":{"size":"20Gi","storageClass":"local-path"},"redoLogStorage":{"size":"50Gi","storageClass":"local-path"}}},"parameters":[{"name":"system_memory","value":"2G"},{"name":"obconfig_url","value":"http://svc-ob-configserver.oceanbase.svc:8080/services?Action=ObRootServiceInfo\u0026ObCluster=metadb"}],"topology":[{"replica":1,"zone":"zone1"},{"replica":1,"zone":"zone2"},{"replica":1,"zone":"zone3"}],"userSecrets":{"monitor":"sc-sys-monitor","operator":"sc-sys-operator","proxyro":"sc-sys-proxyro","root":"sc-sys-root"}}}
  creationTimestamp: "2024-02-28T05:34:58Z"
  generation: 1
  name: metadb
  namespace: oceanbase
  resourceVersion: "51581"
  uid: 1c39b24c-01f0-4807-81eb-e9d5aad10507
spec:
  clusterId: 1
  clusterName: metadb
  monitor:
    image: oceanbase/obagent:4.2.1-100000092023101717
    resource:
      cpu: "1"
      memory: 1Gi
  observer:
    image: oceanbase/oceanbase-cloud-native:4.2.1.1-101010012023111012
    resource:
      cpu: "2"
      memory: 10Gi
    storage:
      dataStorage:
        size: 50Gi
        storageClass: local-path
      logStorage:
        size: 20Gi
        storageClass: local-path
      redoLogStorage:
        size: 50Gi
        storageClass: local-path
  parameters:
  - name: system_memory
    value: 2G
  - name: obconfig_url
    value: http://svc-ob-configserver.oceanbase.svc:8080/services?Action=ObRootServiceInfo&ObCluster=metadb
  topology:
  - replica: 1
    zone: zone1
  - replica: 1
    zone: zone2
  - replica: 1
    zone: zone3
  userSecrets:
    monitor: sc-sys-monitor
    operator: sc-sys-operator
    proxyro: sc-sys-proxyro
    root: sc-sys-root
status:
  image: oceanbase/oceanbase-cloud-native:4.2.1.1-101010012023111012
  obzones:
  - status: running
    zone: metadb-1-zone1
  - status: running
    zone: metadb-1-zone2
  - status: running
    zone: metadb-1-zone3
  operationContext:
    failureRule:
      failureStatus: new
      failureStrategy: retry over
      retryCount: 2
    idx: 2
    name: bootstrap obcluster
    targetStatus: bootstrapped
    task: wait obzone bootstrap ready
    taskId: 9fc9fd92-f4b2-459f-a107-bb4dab63bc11
    taskStatus: running
    tasks:
    - create obzone
    - wait obzone bootstrap ready
    - bootstrap
  parameters: []
  status: new
```
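In this status, the `bootstrap obcluster` operation is sitting on the `wait obzone bootstrap ready` task with `taskStatus: running`, so the child resources are the natural next thing to inspect. A sketch, assuming bash and assuming ob-operator serves its OBZone and OBServer CRDs under the plural names `obzones` and `observers` (confirm with `kubectl get crds`); `show_bootstrap_progress` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the current operation/task of an OBCluster, then
# list the OBZone and OBServer resources that the bootstrap is waiting on.
# Assumption: the CRD plurals are "obzones" and "observers".

# show_bootstrap_progress CLUSTER NAMESPACE
show_bootstrap_progress() {
  local cluster="$1" ns="$2"
  kubectl get obclusters.oceanbase.oceanbase.com "$cluster" -n "$ns" \
    -o jsonpath='{.status.operationContext.name}{" / "}{.status.operationContext.task}{" / "}{.status.operationContext.taskStatus}{"\n"}'
  kubectl get obzones -n "$ns"
  kubectl get observers -n "$ns"
}
```

Usage: `show_bootstrap_progress metadb oceanbase`. If an observer stays in an early state, its pod's observer log is where the actual bootstrap error would show up.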

熊利宏

#7

熊利宏

#8

The ob-operator version is 2.1.0_release.

熊利宏

#9

The obcluster YAML configuration file:

```yaml
apiVersion: oceanbase.oceanbase.com/v1alpha1
kind: OBCluster
metadata:
  name: metadb
  namespace: oceanbase
spec:
  clusterName: metadb
  clusterId: 1
  userSecrets:
    root: sc-sys-root
    proxyro: sc-sys-proxyro
    monitor: sc-sys-monitor
    operator: sc-sys-operator
  topology:
    - zone: zone1
      replica: 1
    - zone: zone2
      replica: 1
    - zone: zone3
      replica: 1
  observer:
    image: oceanbase/oceanbase-cloud-native:4.2.1.1-101010012023111012
    resource:
      cpu: 2
      memory: 10Gi
    storage:
      dataStorage:
        storageClass: local-path
        size: 50Gi
      redoLogStorage:
        storageClass: local-path
        size: 50Gi
      logStorage:
        storageClass: local-path
        size: 20Gi
  monitor:
    image: oceanbase/obagent:4.2.1-100000092023101717
    resource:
      cpu: 1
      memory: 1Gi
  parameters:
    - name: system_memory
      value: 2G
    - name: obconfig_url
      value: 'http://svc-ob-configserver.oceanbase.svc:8080/services?Action=ObRootServiceInfo&ObCluster=meta
```

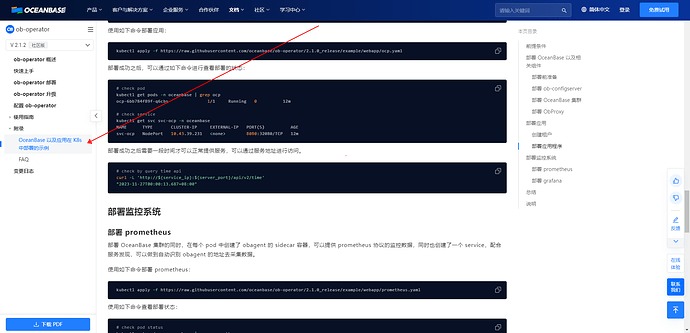

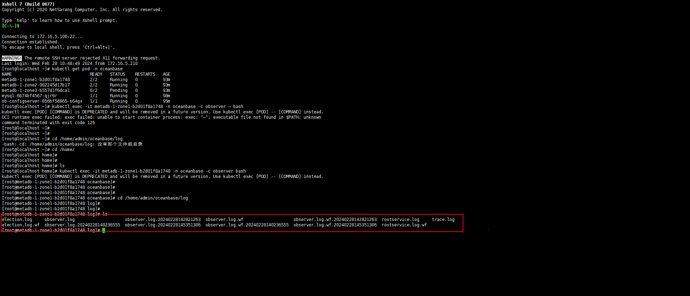

OK. We have now released ob-operator 2.1.2; if possible, please switch to the new version. Please also send the logs:

- ob-operator log: `kubectl logs oceanbase-controller-manager-xxx -n oceanbase-system -c manager > ob-operator.log`
- observer logs: log in to the observer container with `kubectl exec -it metadb-1-zone1-xxx -n oceanbase -c observer -- bash`; the logs are under `/home/admin/oceanbase/log`.
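The commands in this reply use `xxx` for the generated part of the pod names. A sketch that resolves the name by prefix instead of copying it by hand, assuming bash; `find_pod`, `save_operator_log` and `list_observer_logs` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: look up a pod by name prefix, then run the log
# commands from the reply against it.

# find_pod PREFIX NAMESPACE: print the first pod whose name starts with PREFIX.
find_pod() {
  local prefix="$1" ns="$2" name
  # "-o name" prints one "pod/<name>" per line; pod names contain no spaces.
  for name in $(kubectl get pods -n "$ns" -o name); do
    name="${name#pod/}"
    if [[ "$name" == "$prefix"* ]]; then
      echo "$name"
      return 0
    fi
  done
  return 1
}

# Save the ob-operator manager container log to ob-operator.log.
save_operator_log() {
  local pod
  pod="$(find_pod oceanbase-controller-manager oceanbase-system)" || return 1
  kubectl logs "$pod" -n oceanbase-system -c manager > ob-operator.log
}

# list_observer_logs ZONE_POD_PREFIX, e.g. metadb-1-zone1
list_observer_logs() {
  local pod
  pod="$(find_pod "$1" oceanbase)" || return 1
  kubectl exec "$pod" -n oceanbase -c observer -- ls -l /home/admin/oceanbase/log
}
```

Usage: `save_operator_log`, then `list_observer_logs metadb-1-zone1` to see which observer log files exist before copying them out.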

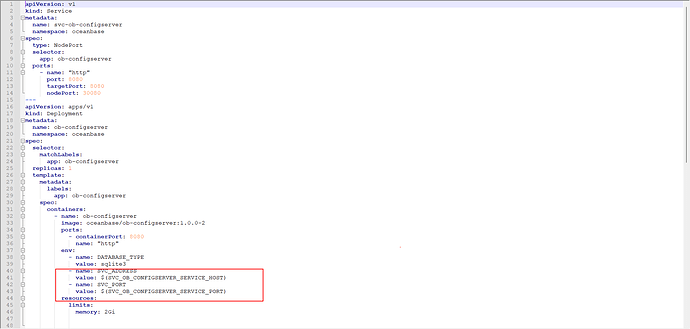

I see the configuration file sets a configserver address. Have you already deployed configserver?
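One quick check is whether the `obconfig_url` from the cluster parameters answers at all. A sketch, assuming bash and curl; it rebuilds the URL from the service name, namespace and cluster name using the port and query string from this thread's YAML. `build_obconfig_url` and `probe_configserver` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: rebuild the obconfig_url used in the OBCluster
# parameters and probe it. Port 8080 and the query string follow the value
# in this thread's YAML.

# build_obconfig_url SERVICE NAMESPACE CLUSTER_NAME
build_obconfig_url() {
  local svc="$1" ns="$2" cluster="$3"
  echo "http://${svc}.${ns}.svc:8080/services?Action=ObRootServiceInfo&ObCluster=${cluster}"
}

# The *.svc name only resolves inside the cluster, so run this from a pod.
probe_configserver() {
  curl -sS --max-time 5 "$(build_obconfig_url "$@")"
}
```

Usage (from a pod that has curl): `probe_configserver svc-ob-configserver oceanbase metadb`. A connection error here would suggest the configserver is not reachable from inside the cluster.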

熊利宏

#13

configserver has not been deployed successfully; its status is still `new`, not `running`.
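A Service forwards traffic only to pods that are Ready, so an empty endpoints list means nothing behind `svc-ob-configserver` is serving yet. A sketch, assuming bash and the service name and namespace taken from the `obconfig_url` in this thread; `configserver_endpoints` and `configserver_ready` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: report whether the configserver Service has any ready
# endpoints. Defaults come from the obconfig_url host in this thread.

configserver_endpoints() {
  kubectl get endpoints "${1:-svc-ob-configserver}" -n "${2:-oceanbase}" \
    -o jsonpath='{.subsets[*].addresses[*].ip}'
}

# configserver_ready [SERVICE] [NAMESPACE]: succeed only if some pod IP is ready.
configserver_ready() {
  local ips
  ips="$(configserver_endpoints "$@")"
  if [[ -n "$ips" ]]; then
    echo "ready endpoints: $ips"
  else
    echo "no ready endpoints" >&2
    return 1
  fi
}
```

If `configserver_ready` fails, `kubectl describe pod` on the configserver pod usually shows why it is not Ready.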

熊利宏

#15

The log from `kubectl logs oceanbase-controller-manager-xxx -n oceanbase-system -c manager > ob-operator.log` is empty.
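When `kubectl logs` comes back empty, two common causes are a container name that does not match `-c` and a container that restarted, which leaves the useful output in the previous instance. A sketch that checks both, assuming bash; `debug_empty_log` is a hypothetical helper, while `--all-containers`, `--previous` and `--tail` are standard `kubectl logs` flags.

```shell
#!/usr/bin/env bash
# Hypothetical helper for an empty `kubectl logs` result.

# debug_empty_log POD [NAMESPACE]
debug_empty_log() {
  local pod="$1" ns="${2:-oceanbase-system}"
  # Which containers exist (is "manager" really one of them?) and how often
  # each has restarted.
  echo "containers: $(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.spec.containers[*].name}')"
  echo "restarts:   $(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.status.containerStatuses[*].restartCount}')"
  # Current output of every container in the pod.
  kubectl logs "$pod" -n "$ns" --all-containers=true --tail=200
  # Output of the previous manager instance, if it crashed and restarted.
  kubectl logs "$pod" -n "$ns" -c manager --previous --tail=200 2>/dev/null \
    || echo "(no previous manager container)"
}
```

Usage: `debug_empty_log oceanbase-controller-manager-xxx`, substituting the real pod name.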

熊利宏

#16

I have now switched ob-operator to version 2.1.2.
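To confirm the switch actually took effect, the image tags of the running operator pods can be read back. A sketch, assuming bash and assuming the operator image is tagged with the release version (for example `...:2.1.2`); `image_tag` and `operator_image_tags` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: show the tag of every container image running in the
# operator namespace.

# image_tag IMAGE_REF: print the tag, or "latest" when none is given.
image_tag() {
  local ref="${1%@*}"      # drop an optional @sha256:... digest
  local last="${ref##*/}"  # last path segment, e.g. ob-operator:2.1.2
  if [[ "$last" == *:* ]]; then
    echo "${last##*:}"
  else
    echo "latest"
  fi
}

operator_image_tags() {
  local img
  for img in $(kubectl get pods -n oceanbase-system \
      -o jsonpath='{.items[*].spec.containers[*].image}'); do
    echo "$img -> $(image_tag "$img")"
  done
}
```

Taking the tag from the last path segment avoids mistaking a registry port (as in `localhost:5000/...`) for a tag.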

熊利宏

#21

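I'm using MySQL as the metadata store for ob-configserver. Do I need to create the database in my MySQL instance beforehand?

And once it is created, where do I configure ob-configserver so that it knows which database I'm using?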
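MySQL rejects a connection that names a database which does not exist (error 1049, "Unknown database"), so creating the database first is the safe default. A sketch, assuming bash and assuming ob-configserver takes its MySQL connection as a go-sql-driver/mysql style DSN (`user:password@tcp(host:port)/dbname?...`); where that DSN is supplied (the configserver config file or an environment variable) is an assumption to verify against the ob-configserver documentation. `mysql_dsn`, `create_db_sql`, the `parseTime=true` option and the database name `ob_configserver` are illustrative, not taken from ob-configserver.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for using MySQL as ob-configserver's metadata store.
# The DSN layout is the go-sql-driver/mysql format; whether ob-configserver
# reads it from its config file or from an environment variable is an
# assumption to check in the ob-configserver documentation.

# mysql_dsn USER PASSWORD HOST PORT DB
mysql_dsn() {
  printf '%s:%s@tcp(%s:%s)/%s?parseTime=true\n' "$1" "$2" "$3" "$4" "$5"
}

# create_db_sql DB: SQL that creates the database only if it is missing.
create_db_sql() {
  printf 'CREATE DATABASE IF NOT EXISTS `%s` DEFAULT CHARACTER SET utf8mb4;\n' "$1"
}
```

Usage, with placeholder host and user: `mysql -h 10.0.0.10 -P 3306 -u root -p -e "$(create_db_sql ob_configserver)"`, then put the output of `mysql_dsn` wherever ob-configserver expects its connection string.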