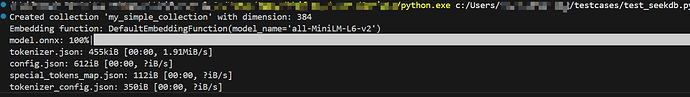

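I set up a client/server architecture, and seekdb installed on the server without problems. When I run the client (the example program from the official website), one thing puzzles me: the client (Windows) downloaded the all-MiniLM-L6-v2 model. Am I right to conclude that, because of this model's limits, my chunks can only be up to 256 in size?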
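For context on the 256 figure: as far as I know, all-MiniLM-L6-v2 is configured with a maximum sequence length of 256 word-piece tokens (not characters), and longer input is truncated rather than rejected. A minimal stdlib-only chunking sketch under that assumption is below. It uses whitespace-separated words as a rough proxy for subword tokens, so the default `max_words=180` and `overlap=30` are hypothetical values chosen to leave headroom under 256; `chunk_words` is an illustrative helper, not part of pyseekdb.

```python
from typing import List


def chunk_words(text: str, max_words: int = 180, overlap: int = 30) -> List[str]:
    """Split text into overlapping chunks of at most max_words words.

    Word count only approximates the model's subword-token count; the
    defaults are hypothetical, conservative values meant to stay under a
    256-token limit for typical English text.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")

    words = text.split()
    chunks: List[str] = []
    step = max_words - overlap  # how far each new chunk advances
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks
```

The resulting chunks could then be passed as `documents=` to `collection.add(...)` with one id per chunk, the same way the example below adds its five documents.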

Also, since my seekdb is installed on the server (Linux), why can't the embedding be done on the server side, which has more computing power?
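One possible way to move embedding to the Linux server, sketched under several assumptions: run an embedding service on the server and give the client a small callable that forwards documents to it. Whether `client.create_collection(...)` accepts such an object through an `embedding_function=` argument, and whether pyseekdb expects exactly this interface (a `__call__` that takes a list of strings and returns a list of vectors, plus a `dimension` attribute), are assumptions to verify against the pyseekdb documentation. The endpoint URL and the `{"input": [...]}` / `{"embeddings": [...]}` JSON shapes are hypothetical, and `RemoteEmbeddingFunction` is an illustrative name.

```python
import json
import urllib.request
from typing import Callable, List, Optional

# Hypothetical transport: sends a JSON payload, returns the decoded JSON reply.
PostFn = Callable[[dict], dict]


class RemoteEmbeddingFunction:
    """Sketch: delegate embedding to a (hypothetical) HTTP service on the server."""

    def __init__(self, url: str, dimension: int, post: Optional[PostFn] = None):
        self.url = url
        self.dimension = dimension
        # Allow injecting a transport (e.g. for tests); default is plain HTTP POST.
        self._post = post or self._http_post

    def _http_post(self, payload: dict) -> dict:
        req = urllib.request.Request(
            self.url,
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(req, timeout=30) as resp:
            return json.loads(resp.read().decode("utf-8"))

    # `input` shadows the builtin; the name follows the Chroma-style convention.
    def __call__(self, input: List[str]) -> List[List[float]]:
        if not input:
            return []
        vectors = self._post({"input": list(input)})["embeddings"]
        if len(vectors) != len(input):
            raise ValueError("service returned a different number of vectors than inputs")
        for v in vectors:
            if len(v) != self.dimension:
                raise ValueError(f"expected dimension {self.dimension}, got {len(v)}")
        return vectors
```

If pyseekdb does accept it, usage would look something like `client.create_collection(name=collection_name, embedding_function=RemoteEmbeddingFunction("http://__my_server_ip__:8000/embed", dimension=768))`, where the port, path and dimension are placeholders for whatever model the server runs.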

```python
import pyseekdb

# Alternative: Server mode (connecting to remote SeekDB server)
client = pyseekdb.Client(
    host="__my_server_ip__",
    port=2881,
    database="test",
    user="root",
    password=""
)

# ==================== Step 2: Create a Collection with Embedding Function ====================
# A collection is like a table that stores documents with vector embeddings
collection_name = "my_simple_collection"

# Create collection with default embedding function
# The embedding function will automatically convert documents to embeddings
# (in this setup the default model, all-MiniLM-L6-v2, was downloaded and run on the Windows client)
collection = client.create_collection(
    name=collection_name,
)

print(f"Created collection '{collection_name}' with dimension: {collection.dimension}")
print(f"Embedding function: {collection.embedding_function}")

# ==================== Step 3: Add Data to Collection ====================
# With embedding function, you can add documents directly without providing embeddings
# The embedding function will automatically generate embeddings from documents
documents = [
    "Machine learning is a subset of artificial intelligence",
    "Python is a popular programming language",
    "Vector databases enable semantic search",
    "Neural networks are inspired by the human brain",
    "Natural language processing helps computers understand text"
]
ids = ["id1", "id2", "id3", "id4", "id5"]

# Add data with documents only - embeddings will be auto-generated by embedding function
collection.add(
    ids=ids,
    documents=documents,  # embeddings will be automatically generated
    metadatas=[
        {"category": "AI", "index": 0},
        {"category": "Programming", "index": 1},
        {"category": "Database", "index": 2},
        {"category": "AI", "index": 3},
        {"category": "NLP", "index": 4}
    ]
)
```

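When I run the program, I can clearly see that the client (running on Windows) uses all-MiniLM-L6-v2, and the vectors are only 384-dimensional. It feels like this drags down performance.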