OceanBase version: 4.2.1.8-ce

mysql> select * from oceanbase.dba_ob_zones;

+-------+----------------------------+----------------------------+--------+------+----------+-----------+

| ZONE | CREATE_TIME | MODIFY_TIME | STATUS | IDC | REGION | TYPE |

+-------+----------------------------+----------------------------+--------+------+----------+-----------+

| zone1 | 2025-08-01 10:36:50.097351 | 2025-08-08 18:28:31.286667 | ACTIVE | idc1 | HANGZHOU | ReadWrite |

| zone2 | 2025-08-01 10:52:07.041147 | 2025-08-08 18:28:31.289832 | ACTIVE | idc2 | HANGZHOU | ReadWrite |

| zone3 | 2025-08-01 10:52:10.070665 | 2025-08-08 18:28:31.291942 | ACTIVE | idc3 | SHANGHAI | ReadWrite |

+-------+----------------------------+----------------------------+--------+------+----------+-----------+

3 rows in set (0.04 sec)

mysql> SELECT SVR_IP,SVR_PORT,ZONE,status,START_SERVICE_TIME,STOP_TIME,WITH_ROOTSERVER FROM oceanbase.DBA_OB_servers;

+--------------+----------+-------+--------+----------------------------+-----------+-----------------+

| SVR_IP | SVR_PORT | ZONE | status | START_SERVICE_TIME | STOP_TIME | WITH_ROOTSERVER |

+--------------+----------+-------+--------+----------------------------+-----------+-----------------+

| 10.xx.xx.30 | 2882 | zone1 | ACTIVE | 2025-08-01 10:40:13.834674 | NULL | YES |

| 10.xx.xx.31 | 2882 | zone3 | ACTIVE | 2025-08-01 10:57:06.405188 | NULL | NO |

| 10.xx.xx.202 | 2882 | zone2 | ACTIVE | 2025-08-01 14:47:28.505417 | NULL | NO |

+--------------+----------+-------+--------+----------------------------+-----------+-----------------+

3 rows in set (0.00 sec)

mysql> select * from oceanbase.dba_ob_tablegroups;

+-----------------+-------------------+----------------------+-----------------+------------------------+------------------------+---------------------------+----------+

| TABLEGROUP_NAME | PARTITIONING_TYPE | SUBPARTITIONING_TYPE | PARTITION_COUNT | DEF_SUBPARTITION_COUNT | PARTITIONING_KEY_COUNT | SUBPARTITIONING_KEY_COUNT | SHARDING |

+-----------------+-------------------+----------------------+-----------------+------------------------+------------------------+---------------------------+----------+

| oceanbase | NONE | NONE | NULL | NULL | NULL | NULL | ADAPTIVE |

+-----------------+-------------------+----------------------+-----------------+------------------------+------------------------+---------------------------+----------+

1 row in set (0.01 sec)

mysql> show create table t \G

*************************** 1. row ***************************

Table: t

Create Table: CREATE TABLE `t` (

`C1` int(11) DEFAULT NULL,

`C2` int(11) DEFAULT NULL,

KEY `INDEX1` (`C2`) BLOCK_SIZE 16384 GLOBAL

) DEFAULT CHARSET = utf8mb4 ROW_FORMAT = DYNAMIC COMPRESSION = 'zstd_1.3.8' REPLICA_NUM = 3 BLOCK_SIZE = 16384 USE_BLOOM_FILTER = FALSE TABLET_SIZE = 134217728 PCTFREE = 0

partition by hash(c1)

(partition `p0`,

partition `p1`,

partition `p2`,

partition `p3`,

partition `p4`)

1 row in set (0.01 sec)

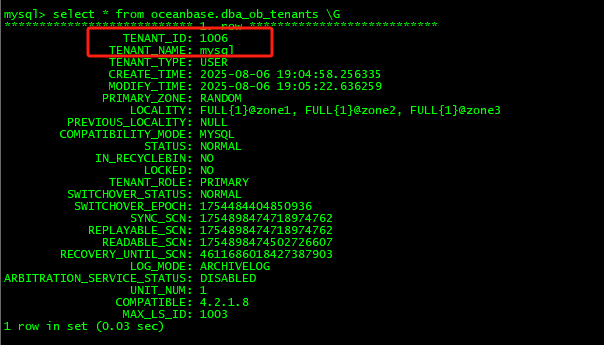

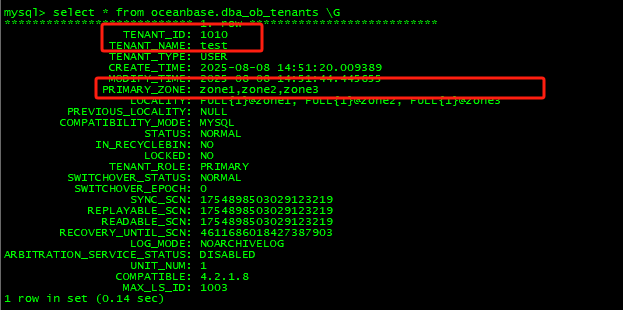

Tenant information:

mysql> select * from oceanbase.dba_ob_tenants \G

*************************** 1. row ***************************

TENANT_ID: 1010

TENANT_NAME: test

TENANT_TYPE: USER

CREATE_TIME: 2025-08-08 14:51:20.009389

MODIFY_TIME: 2025-08-08 14:51:44.445655

PRIMARY_ZONE: zone1,zone2,zone3

LOCALITY: FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3

PREVIOUS_LOCALITY: NULL

COMPATIBILITY_MODE: MYSQL

STATUS: NORMAL

IN_RECYCLEBIN: NO

LOCKED: NO

TENANT_ROLE: PRIMARY

SWITCHOVER_STATUS: NORMAL

SWITCHOVER_EPOCH: 0

SYNC_SCN: 1754708039700464060

REPLAYABLE_SCN: 1754708039700464060

READABLE_SCN: 1754708039700464060

RECOVERY_UNTIL_SCN: 4611686018427387903

LOG_MODE: NOARCHIVELOG

ARBITRATION_SERVICE_STATUS: DISABLED

UNIT_NUM: 1

COMPATIBLE: 4.2.1.8

MAX_LS_ID: 1003

1 row in set (0.05 sec)

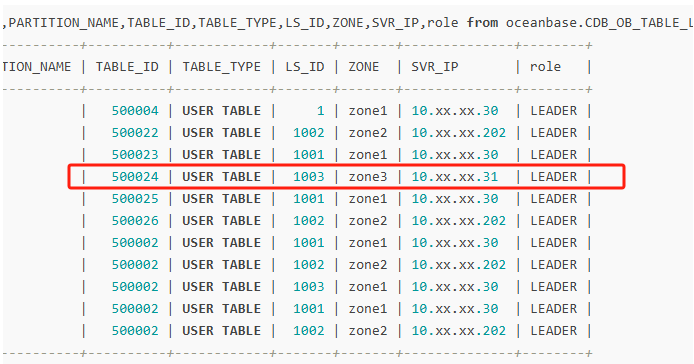

mysql> select TENANT_ID,DATABASE_NAME,TABLE_NAME,PARTITION_NAME,TABLE_ID,TABLE_TYPE,LS_ID,ZONE,SVR_IP,role from oceanbase.CDB_OB_TABLE_LOCATIONS where table_name='t' and role='leader';

+-----------+---------------+------------+----------------+----------+------------+-------+-------+--------------+--------+

| TENANT_ID | DATABASE_NAME | TABLE_NAME | PARTITION_NAME | TABLE_ID | TABLE_TYPE | LS_ID | ZONE | SVR_IP | role |

+-----------+---------------+------------+----------------+----------+------------+-------+-------+--------------+--------+

| 1010 | test | t | p0 | 500002 | USER TABLE | 1001 | zone1 | 10.xx.xx.30 | LEADER |

| 1010 | test | t | p1 | 500002 | USER TABLE | 1002 | zone2 | 10.xx.xx.202 | LEADER |

| 1010 | test | t | p2 | 500002 | USER TABLE | 1003 | zone1 | 10.xx.xx.30 | LEADER |

| 1010 | test | t | p3 | 500002 | USER TABLE | 1001 | zone1 | 10.xx.xx.30 | LEADER |

| 1010 | test | t | p4 | 500002 | USER TABLE | 1002 | zone2 | 10.xx.xx.202 | LEADER |

+-----------+---------------+------------+----------------+----------+------------+-------+-------+--------------+--------+

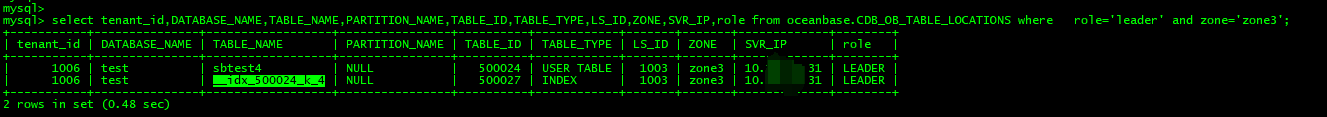

The leaders of the partitioned table are located only on zone1 and zone2. Why are they not distributed evenly across all three zones?
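To make the imbalance easier to see, the leader rows can be counted per zone. The query below is only a sketch: it reuses the same view, columns, and filter values already shown above (`oceanbase.CDB_OB_TABLE_LOCATIONS`, tenant 1010, table `t`), and the alias `leader_count` is just an illustrative name.

```sql
-- Count the leader partitions of table t per zone and server for tenant 1010.
-- Uses only the view and columns that appear in the query output above.
SELECT ZONE,
       SVR_IP,
       COUNT(*) AS leader_count   -- illustrative alias, not an existing column
FROM oceanbase.CDB_OB_TABLE_LOCATIONS
WHERE TENANT_ID = 1010
  AND TABLE_NAME = 't'
  AND ROLE = 'LEADER'
GROUP BY ZONE, SVR_IP
ORDER BY ZONE;
```

Based on the five rows shown above, this should come to 3 leaders on zone1 and 2 on zone2, with no row for zone3.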