result.txt (182.6 KB)

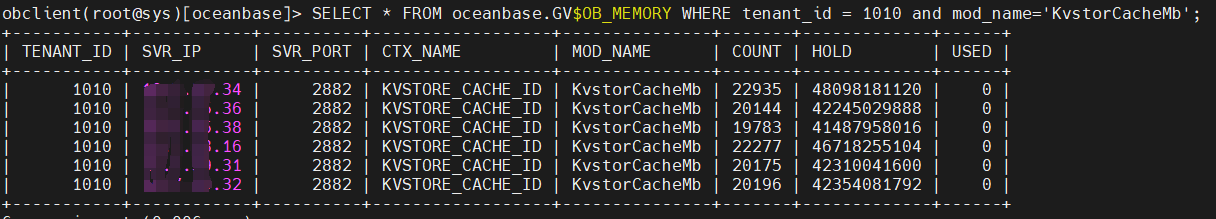

KvstorCacheMb is taking up a large amount of memory.

In addition, observer.log keeps printing the entries below at a very high rate, and the log files are rotated very frequently:

[2025-06-18 11:14:31.528641] INFO [COMMON] print_io_status (ob_io_manager.cpp:2761) [38433][IO_TUNING0][T0][Y0-0000000000000000-0-0] [lt=14] [IO STATUS TENANT](tenant_id=500, ref_cnt=1, io_config={group_configs_cnt:3, memory_limit_:536870912, callback_thread_count_:0, unit_config_:{min_iops:10000, max_iops:50000, weight:10000, max_net_bandwidth:9223372036854775807, net_bandwidth_weight:100}, enable_io_tracer:false, object_storage_io_timeout_ms:20000, group_configs:[group_id = 0:{mode:0, deleted:false, cleared:false, min_percent:100, max_percent:100, weight_percent:100}, group_id = 0:{mode:1, deleted:false, cleared:false, min_percent:100, max_percent:100, weight_percent:100}, group_id = 0:{mode:2, deleted:false, cleared:false, min_percent:100, max_percent:100, weight_percent:100}]}, hold_mem=31368256, free_req_cnt=50000, free_result_cnt=50000, callback_queues=[0], [FAILED]: fail_ips=0, fail_ops=0, fail_iops=0, fail_ibw=0, fail_obw=0, [SUCC]: ips=0, ops=0, iops=0, ibw=0, obw=0, iops_limit=50000, ibw_limit=9223372036854775807, obw_limit=9223372036854775807)

[2025-06-18 11:14:31.528683] INFO [COMMON] print_io_function_status (ob_io_manager.cpp:2841) [38433][IO_TUNING0][T0][Y0-0000000000000000-0-0] [lt=38] [IO STATUS FUNCTION](tenant_id=500, io_status=function_name:OTHER_GROUPS, mode:LOCAL READ, avg_size:4096, avg_iops:1, avg_bw:4090, [delay/us]: prepare:0, schedule:0, submit:600000000, device:191, total:200)

[2025-06-18 11:14:31.528707] INFO [COMMON] print_io_status (ob_io_manager.cpp:2783) [38433][IO_TUNING0][T0][Y0-0000000000000000-0-0] [lt=11] [IO STATUS CALLBACK](tenant_id=500, runners=[{is_inited_:true, queue_.get_total():0, tg_id_:285, idx_:0, tid_:38432}], io_callback_status=runner 0: thread_id=38432, queue_count=0, )

[2025-06-18 11:14:31.528726] INFO [COMMON] print_status (ob_io_struct.cpp:2860) [38433][IO_TUNING0][T0][Y0-0000000000000000-0-0] [lt=13] [IO STATUS CHANNEL] device_id=0x146a87dad550, req_in_channel, async_total=0, sync_total=0, async_cnt= 0 0 0 0 0 0 0 0, sync_cnt= 0

[2025-06-18 11:14:31.528741] INFO [COMMON] print_status (ob_io_struct.cpp:2860) [38433][IO_TUNING0][T0][Y0-0000000000000000-0-0] [lt=11] [IO STATUS CHANNEL] device_id=0x146a87dac750, req_in_channel, async_total=0, sync_total=0, async_cnt= 0 0 0 0 0 0 0 0, sync_cnt= 0

[2025-06-18 11:14:31.528755] INFO [COMMON] print_server_status (ob_io_manager.cpp:424) [38433][IO_TUNING0][T0][Y0-0000000000000000-0-0] [lt=10] [IO STATUS SERVER] net_in=385kB/s, net_out=136kB/s, bucket_in=0kB/s, bucket_out=0kB/s, failed_bucket_in=0kB/s, failed_bucket_out=0kB/s, limit=1280000kB/s

[2025-06-18 11:14:31.528774] INFO [STORAGE.BLKMGR] alloc_block (ob_block_manager.cpp:336) [38433][IO_TUNING0][T0][Y0-0000000000000000-0-0] [lt=10] successfully alloc block(macro_id={[ver=1,mode=0,seq=3673622][2nd=256150]})

[2025-06-18 11:14:31.536789] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=20][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.539031] WDIAG [STORAGE.TRANS] run1 (ob_standby_timestamp_service.cpp:141) [26577][T1010_STSWorker][T1010][Y0-0000000000000000-0-0] [lt=26][errcode=-4076] query and update last id fail(ret=-4076, ret=“OB_NEED_WAIT”)

[2025-06-18 11:14:31.540364] INFO [STORAGE.REDO] notify_flush (ob_storage_log_writer.cpp:539) [26563][T1010_OB_SLOG][T1010][Y0-0000000000000000-0-0] [lt=18] Successfully flush(log_item={start_cursor:ObLogCursor{file_id=9, log_id=4418768, offset=52154228}, end_cursor:ObLogCursor{file_id=9, log_id=4418769, offset=52154537}, is_inited:true, is_local:false, buf_size:8192, buf:0x146a6476c050, len:4236, log_data_len:309, seq:4418768, flush_finish:false, flush_ret:0})

[2025-06-18 11:14:31.540515] INFO [SERVER] get_ls_replayed_scn (ob_service.cpp:3764) [9295][T1010_L0_G18][T1010][YB420A07271F-000637AD625E2B41-0-0] [lt=27] start get_ls_replayed_scn(arg={tenant_id:1010, ls_id:{id:1004}, all_replica:false})

[2025-06-18 11:14:31.540545] INFO [SERVER] get_ls_replayed_scn (ob_service.cpp:3847) [9295][T1010_L0_G18][T1010][YB420A07271F-000637AD625E2B41-0-0] [lt=16] finish get_ls_replayed_scn(ret=0, ret=“OB_SUCCESS”, cur_readable_scn={val:1750216471012630004, v:0}, arg={tenant_id:1010, ls_id:{id:1004}, all_replica:false}, result={tenant_id:1010, ls_id:{id:1004}, cur_readable_scn:{val:1750216471012630004, v:0}, offline_scn:{val:18446744073709551615, v:3}, self_addr_:“10.7.39.31:2882”}, offline_scn={val:18446744073709551615, v:3})

[2025-06-18 11:14:31.542092] INFO [SERVER] get_ls_replayed_scn (ob_service.cpp:3764) [9295][T1010_L0_G18][T1010][YB420A072720-000637AE0FFFE058-0-0] [lt=22] start get_ls_replayed_scn(arg={tenant_id:1010, ls_id:{id:1005}, all_replica:false})

[2025-06-18 11:14:31.542110] INFO [SERVER] get_ls_replayed_scn (ob_service.cpp:3847) [9295][T1010_L0_G18][T1010][YB420A072720-000637AE0FFFE058-0-0] [lt=13] finish get_ls_replayed_scn(ret=0, ret=“OB_SUCCESS”, cur_readable_scn={val:1750216471075870003, v:0}, arg={tenant_id:1010, ls_id:{id:1005}, all_replica:false}, result={tenant_id:1010, ls_id:{id:1005}, cur_readable_scn:{val:1750216471075870003, v:0}, offline_scn:{val:18446744073709551615, v:3}, self_addr_:“10.7.39.31:2882”}, offline_scn={val:18446744073709551615, v:3})

[2025-06-18 11:14:31.542445] INFO [STORAGE.TRANS] get_gts (ob_gts_source.cpp:257) [26504][T1010_TeRec][T1009][YB420A07271F-000637AD625E2B41-0-0] [lt=17] push gts task success(*task={:""})

[2025-06-18 11:14:31.543286] INFO [STORAGE] runTimerTask (ob_checkpoint_service.cpp:289) [38593][T1_TimerWK3_CKClogDisk][T1][Y0-0000000000000000-0-0] [lt=19] ====== check clog disk timer task ======

[2025-06-18 11:14:31.543303] INFO [PALF] get_disk_usage (palf_env_impl.cpp:902) [38593][T1_TimerWK3_CKClogDisk][T1][Y0-0000000000000000-0-0] [lt=14] get_disk_usage(ret=0, capacity(MB):=6144, used(MB):=2427)

[2025-06-18 11:14:31.543965] INFO [SERVER] runTimerTask (ob_eliminate_task.cpp:225) [26538][T1010_TimerWK2_ReqMemEvict][T1010][Y0-0000000000000000-0-0] [lt=10] sql audit evict task end(request_manager_->get_tenant_id()=1010, evict_high_mem_level=1855425871, evict_high_size_level=1297612, evict_batch_count=0, elapse_time=0, size_used=917504, mem_used=1801058304)

[2025-06-18 11:14:31.544007] INFO [SERVER] prepare_alloc_queue (ob_dl_queue.cpp:92) [26537][T1010_TimerWK1_ReqMemEvict][T1010][Y0-0000000000000000-0-0] [lt=50] Construct Queue Num(construct_num=0, get_push_idx()=74, get_cur_idx()=66, get_pop_idx()=52)

[2025-06-18 11:14:31.544370] WDIAG [RPC.OBMYSQL] serialize (ob_mysql_row.cpp:54) [38733][T1_L0_G0][T1][YB420A07271F-000637AC526DB4D6-0-0] [lt=22][errcode=-4019] failed to encode cell(ret=-4019, cell_idx=2, len=75, pos=48)

[2025-06-18 11:14:31.544892] INFO [LIB] stat (utility.h:1211) [26586][T1010_TxLoopWor][T1010][Y0-0000000000000000-0-0] [lt=32] [PALF STAT PUSH LOG TO FOLLOWERS RPC POST COST TIME](cur_stat_count=10, stat_interval=1000000, avg (count/cost)=32, this=0x14676e3b5470, extra_info="")

[2025-06-18 11:14:31.544924] INFO [PALF] submit_log (palf_handle_impl.cpp:446) [26586][T1010_TxLoopWor][T1010][Y0-0000000000000000-0-0] [lt=21] [PALF STAT APPEND DATA SIZE](this={palf_id:1004, self:“10.7.39.31:2882”, has_set_deleted:false}, append size=1220)

[2025-06-18 11:14:31.544948] INFO [LIB] stat (utility.h:1211) [26586][T1010_TxLoopWor][T1010][Y0-0000000000000000-0-0] [lt=23] [PALF STAT APPEND COST TIME](cur_stat_count=10, stat_interval=1000000, avg (count/cost)=0, this=0x1465383a8050, extra_info=“id:1004”)

[2025-06-18 11:14:31.545054] INFO [PALF] try_advance_committed_lsn_ (log_sliding_window.cpp:1758) [26546][T1010_LogIOCb0][T1010][Y0-0000000000000000-0-0] [lt=21] [PALF STAT COMMITTED LOG SIZE](palf_id=1004, self=“10.7.39.31:2882”, committed size=1220)

[2025-06-18 11:14:31.545148] WDIAG [RPC.OBMYSQL] serialize (ob_mysql_row.cpp:54) [38733][T1_L0_G0][T1][YB420A07271F-000637AC526DB4D6-0-0] [lt=19][errcode=-4019] failed to encode cell(ret=-4019, cell_idx=75, len=2017, pos=315)

[2025-06-18 11:14:31.545276] INFO [PALF] read (log_cache.cpp:101) [26552][T1010_LogShared][T1010][Y0-0000000000000000-0-0] [lt=23] [PALF STAT HOT CACHE HIT RATE](palf_id=1004, read_size=1220, hit_cnt=10, read_cnt=10, hit rate=1.000000000000000000e+00)

[2025-06-18 11:14:31.545356] INFO [ARCHIVE] gc_stale_ls_task_ (ob_ls_mgr.cpp:572) [26579][T1010_LSArchive][T1010][YB420A07271F-000637AD626CEA15-0-0] [lt=16] gc stale ls task succ

[2025-06-18 11:14:31.546882] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=14][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.547017] INFO [STORAGE.TRANS] statistics_ (ob_trans_rpc.cpp:674) [26504][T1010_TeRec][T1009][YB420A07271F-000637AD625E2B41-0-0] [lt=19] rpc statistics(total_trans_msg_count=1, total_batch_msg_count=0)

[2025-06-18 11:14:31.547701] INFO [STORAGE.TRANS] process (ob_trans_rpc.cpp:146) [26751][T1009_L0_G0][T1009][YB420A072322-000637AD832D4720-0-0] [lt=19] transaction rpc statistics(total_rt=132, total_process=10, avg_rt=12)

[2025-06-18 11:14:31.547949] INFO [STORAGE.REDO] notify_flush (ob_storage_log_writer.cpp:539) [26563][T1010_OB_SLOG][T1010][Y0-0000000000000000-0-0] [lt=32] Successfully flush(log_item={start_cursor:ObLogCursor{file_id=9, log_id=4418769, offset=52154537}, end_cursor:ObLogCursor{file_id=9, log_id=4418770, offset=52154834}, is_inited:true, is_local:false, buf_size:8192, buf:0x146a64764050, len:3927, log_data_len:297, seq:4418769, flush_finish:false, flush_ret:0})

[2025-06-18 11:14:31.548079] INFO [STORAGE.REDO] notify_flush (ob_storage_log_writer.cpp:539) [26563][T1010_OB_SLOG][T1010][Y0-0000000000000000-0-0] [lt=21] Successfully flush(log_item={start_cursor:ObLogCursor{file_id=9, log_id=4418770, offset=52154834}, end_cursor:ObLogCursor{file_id=9, log_id=4418771, offset=52155139}, is_inited:true, is_local:false, buf_size:8192, buf:0x146a6478c050, len:3630, log_data_len:305, seq:4418770, flush_finish:false, flush_ret:0})

[2025-06-18 11:14:31.548141] INFO [PALF] next (palf_iterator.h:183) [26597][T1010_ReplaySrv][T1010][Y0-0000000000000000-0-0] [lt=22] [PALF STAT ITERATOR INFO](io_ctx={user:“REPLAY”, palf_id:1005, iterator_info:{allow_filling_cache:true, hot_cache_stat:{hit_cnt:1221146, miss_cnt:0, cache_read_size:346193586, hit_ratio:“1.000000000000000000e+00”}, cold_cache_stat:{hit_cnt:0, miss_cnt:0, cache_read_size:0, hit_ratio:“0.000000000000000000e+00”}, read_io_cnt:0, read_io_size:0, read_disk_cost_ts:0, start_lsn:{lsn:0}}})

[2025-06-18 11:14:31.548143] INFO [PALF] next (palf_iterator.h:183) [26598][T1010_ReplaySrv][T1010][Y0-0000000000000000-0-0] [lt=33] [PALF STAT ITERATOR INFO](io_ctx={user:“REPLAY”, palf_id:1006, iterator_info:{allow_filling_cache:true, hot_cache_stat:{hit_cnt:1123728, miss_cnt:0, cache_read_size:342387963, hit_ratio:“1.000000000000000000e+00”}, cold_cache_stat:{hit_cnt:0, miss_cnt:0, cache_read_size:0, hit_ratio:“0.000000000000000000e+00”}, read_io_cnt:0, read_io_size:0, read_disk_cost_ts:0, start_lsn:{lsn:0}}})

[2025-06-18 11:14:31.556987] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=14][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.567088] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=14][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.569086] INFO [PALF] inner_append_log (palf_handle_impl.cpp:2239) [26549][T1010_IOWorker][T1010][Y0-0000000000000000-0-0] [lt=12] [PALF STAT INNER APPEND LOG SIZE](this={palf_id:1005, self:“10.7.39.31:2882”, has_set_deleted:false}, accum_size=1220)

[2025-06-18 11:14:31.569248] INFO [COMMON] dump_dag_status (ob_tenant_dag_scheduler.cpp:2926) [26680][T1009_DagSchedu][T1009][Y0-0000000000000000-0-0] [lt=25] dump_dag_status(priority=“PRIO_COMPACTION_HIGH”, limits=6, running_task=0, adaptive_task_limit=6, ready_dag_count=0, waiting_dag_count=0, rank_dag_count=0)

[2025-06-18 11:14:31.569271] INFO [COMMON] dump_dag_status (ob_tenant_dag_scheduler.cpp:2926) [26680][T1009_DagSchedu][T1009][Y0-0000000000000000-0-0] [lt=23] dump_dag_status(priority=“PRIO_HA_HIGH”, limits=8, running_task=0, adaptive_task_limit=8, ready_dag_count=0, waiting_dag_count=0, rank_dag_count=0)

[2025-06-18 11:14:31.569286] INFO [COMMON] dump_dag_status (ob_tenant_dag_scheduler.cpp:2926) [26680][T1009_DagSchedu][T1009][Y0-0000000000000000-0-0] [lt=15] dump_dag_status(priority=“PRIO_COMPACTION_MID”, limits=6, running_task=0, adaptive_task_limit=6, ready_dag_count=0, waiting_dag_count=0, rank_dag_count=0)

[2025-06-18 11:14:31.569303] INFO [COMMON] dump_dag_status (ob_tenant_dag_scheduler.cpp:2926) [26680][T1009_DagSchedu][T1009][Y0-0000000000000000-0-0] [lt=17] dump_dag_status(priority=“PRIO_HA_MID”, limits=5, running_task=0, adaptive_task_limit=5, ready_dag_count=0, waiting_dag_count=0, rank_dag_count=0)

[2025-06-18 11:14:31.569319] INFO [COMMON] dump_dag_status (ob_tenant_dag_scheduler.cpp:2926) [26680][T1009_DagSchedu][T1009][Y0-0000000000000000-0-0] [lt=15] dump_dag_status(priority=“PRIO_COMPACTION_LOW”, limits=6, running_task=0, adaptive_task_limit=6, ready_dag_count=0, waiting_dag_count=0, rank_dag_count=0)

[2025-06-18 11:14:31.569308] INFO [COMMON] compute_tenant_wash_size (ob_kvcache_store.cpp:1135) [38204][TimerWK1_KVCacheWash][T0][Y0-0000000000000000-0-0] [lt=15] Wash compute wash size(is_wash_valid=false, sys_total_wash_size=-26067943424, global_cache_size=45235896320, tenant_max_wash_size=0, tenant_min_wash_size=0, tenant_ids_=[cnt:6, 500, 508, 509, 1, 1009, 1010])

[2025-06-18 11:14:31.569335] INFO [COMMON] dump_dag_status (ob_tenant_dag_scheduler.cpp:2926) [26680][T1009_DagSchedu][T1009][Y0-0000000000000000-0-0] [lt=16] dump_dag_status(priority=“PRIO_HA_LOW”, limits=2, running_task=0, adaptive_task_limit=2, ready_dag_count=0, waiting_dag_count=0, rank_dag_count=0)

[2025-06-18 11:14:31.569320] INFO [PALF] read (log_cache.cpp:101) [26601][T1010_ReplaySrv][T1010][Y0-0000000000000000-0-0] [lt=34] [PALF STAT HOT CACHE HIT RATE](palf_id=1005, read_size=1220, hit_cnt=10, read_cnt=10, hit rate=1.000000000000000000e+00)

[2025-06-18 11:14:31.569350] INFO [COMMON] dump_dag_status (ob_tenant_dag_scheduler.cpp:2926) [26680][T1009_DagSchedu][T1009][Y0-0000000000000000-0-0] [lt=15] dump_dag_status(priority=“PRIO_DDL”, limits=2, running_task=0, adaptive_task_limit=2, ready_dag_count=0, waiting_dag_count=0, rank_dag_count=0)

[2025-06-18 11:14:31.569367] INFO [COMMON] dump_dag_status (ob_tenant_dag_scheduler.cpp:2926) [26680][T1009_DagSchedu][T1009][Y0-0000000000000000-0-0] [lt=16] dump_dag_status(priority=“PRIO_DDL_HIGH”, limits=6, running_task=0, adaptive_task_limit=6, ready_dag_count=0, waiting_dag_count=0, rank_dag_count=0)

[2025-06-18 11:14:31.569383] INFO [COMMON] dump_dag_status (ob_tenant_dag_scheduler.cpp:2926) [26680][T1009_DagSchedu][T1009][Y0-0000000000000000-0-0] [lt=15] dump_dag_status(priority=“PRIO_TTL”, limits=2, running_task=0, adaptive_task_limit=2, ready_dag_count=0, waiting_dag_count=0, rank_dag_count=0)

[2025-06-18 11:14:31.569403] INFO [COMMON] dump_dag_status (ob_tenant_dag_scheduler.cpp:3745) [26680][T1009_DagSchedu][T1009][Y0-0000000000000000-0-0] [lt=16] dump_dag_status[DAG_NET](running_dag_net_map_size=0, blocking_dag_net_list_size=0, running_dag_net_list_size=0)

[2025-06-18 11:14:31.569418] INFO [COMMON] dump_dag_status (ob_tenant_dag_scheduler.cpp:4493) [26680][T1009_DagSchedu][T1009][Y0-0000000000000000-0-0] [lt=14] dump_dag_status(dag_cnt=0, total_worker_cnt_=0, total_running_task_cnt=0, work_thread_num_=43, scheduled_task_cnt=0)

[2025-06-18 11:14:31.577190] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=13][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.587289] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=13][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.597385] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=12][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.607478] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=11][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.617574] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=13][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.627661] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=12][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.637755] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=12][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.639058] WDIAG [STORAGE.TRANS] run1 (ob_standby_timestamp_service.cpp:141) [26577][T1010_STSWorker][T1010][Y0-0000000000000000-0-0] [lt=8][errcode=-4076] query and update last id fail(ret=-4076, ret=“OB_NEED_WAIT”)

[2025-06-18 11:14:31.645067] INFO [LIB] stat (utility.h:1211) [26548][T1010_IOWorker][T1010][Y0-0000000000000000-0-0] [lt=24] [PALF STAT WRITE LOG COST TIME](cur_stat_count=10, stat_interval=1000000, avg (count/cost)=130, this=0x14676e3bfdb0, extra_info=“palf_id:1004”)

[2025-06-18 11:14:31.645091] INFO [LIB] stat (utility.h:1228) [26548][T1010_IOWorker][T1010][Y0-0000000000000000-0-0] [lt=20] [PALF STAT IO TASK IN QUEUE TIME](cur_stat_count=10, stat_interval=1000000, avg (count/cost)=9, this=0x146378ff4c70, extra_info="")

[2025-06-18 11:14:31.645102] INFO [LIB] stat (utility.h:1211) [26546][T1010_LogIOCb0][T1010][Y0-0000000000000000-0-0] [lt=20] [PALF STAT FLUSH CB COST TIME](cur_stat_count=10, stat_interval=1000000, avg (count/cost)=9, this=0x14676e3bffe0, extra_info=“palf_id:1004”)

[2025-06-18 11:14:31.645320] INFO [LIB] stat (utility.h:1211) [9297][T1010_L0_G1][T1010][YB420A072720-000637AEE59FA146-0-0] [lt=18] [PALF STAT FS CB EXCUTE COST TIME](cur_stat_count=10, stat_interval=1000000, avg (count/cost)=5, this=0x14676e3b56a0, extra_info=“palf_id:1004”)

[2025-06-18 11:14:31.645341] INFO [LIB] stat (utility.h:1211) [9297][T1010_L0_G1][T1010][YB420A072720-000637AEE59FA146-0-0] [lt=20] [PALF STAT LOG LIFE TIME](cur_stat_count=10, stat_interval=1000000, avg (count/cost)=435, this=0x14676e3b58d0, extra_info=“palf_id:1004”)

[2025-06-18 11:14:31.647826] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=11][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.657921] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=13][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.660509] INFO eloop_run (eloop.c:145) [38287][pnio2][T0][Y0-0000000000000000-0-0] [lt=25] PNIO [ratelimit] time: 1750216471660507, bytes: 0, bw: 0.000000 MB/s, add_ts: 1007616, add_bytes: 0

[2025-06-18 11:14:31.664427] INFO [SERVER] runTimerTask (ob_eliminate_task.cpp:225) [38590][T1_TimerWK0_ReqMemEvict][T1][Y0-0000000000000000-0-0] [lt=22] sql audit evict task end(request_manager_->get_tenant_id()=1, evict_high_mem_level=103079214, evict_high_size_level=530841, evict_batch_count=0, elapse_time=0, size_used=65536, mem_used=93588480)

[2025-06-18 11:14:31.664464] INFO [SERVER] prepare_alloc_queue (ob_dl_queue.cpp:92) [38591][T1_TimerWK1_ReqMemEvict][T1][Y0-0000000000000000-0-0] [lt=42] Construct Queue Num(construct_num=0, get_push_idx()=70, get_cur_idx()=62, get_pop_idx()=61)

[2025-06-18 11:14:31.668015] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=12][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)

[2025-06-18 11:14:31.678112] WDIAG [SERVER.OMT] check_cgroup_root_dir (ob_cgroup_ctrl.cpp:212) [38495][MultiTenant][T0][Y0-0000000000000000-0-0] [lt=13][errcode=-4027] dir not exist(OBSERVER_ROOT_CGROUP_DIR=“cgroup”, ret=-4027)
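
To see which messages account for most of the log volume, the lines can be tallied by level, module, and function. The snippet below is only a rough sketch: the regular expression assumes the line layout seen in the excerpt above (`[timestamp] LEVEL [MODULE] function (file:line) ...`, with the module tag optional), and the helper name `tally` is made up for illustration. In the excerpt, the `check_cgroup_root_dir` WDIAG line repeats about every 10 ms, so it would be expected to rank near the top.

```python
import re
from collections import Counter

# Assumed layout, inferred from the excerpt above:
#   [timestamp] LEVEL [MODULE] function (file:line) ...
# The [MODULE] tag is optional (the PNIO eloop_run line has none).
LINE_RE = re.compile(
    r"^\[(?P<ts>[^\]]+)\]\s+(?P<level>[A-Z]+)\s+"
    r"(?:\[(?P<module>[^\]]+)\]\s+)?"
    r"(?P<func>\S+)\s+\((?P<src>[^)]+)\)"
)


def tally(lines):
    """Count log lines per (level, module, function).

    `lines` is any iterable of strings, e.g. an open file object.
    Lines that do not match the assumed layout are skipped.
    """
    counts = Counter()
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m:
            key = (m["level"], m["module"] or "-", m["func"])
            counts[key] += 1
    return counts
```

For example, `tally(open("observer.log", errors="replace")).most_common(10)` returns the ten most frequent sources, which should make it clearer whether the rapid log rotation comes from one repeated diagnostic or from the periodic INFO statistics as a whole.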