【 Environment 】Production

【 OB or other component 】OceanBase

【 Version 】OceanBase-CE-4.2.1-bp8

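【Problem description】Disk usage on our OB cluster is high: 87% on the fullest node and 56% on the least full. We used OCP to scale out the cluster by adding nodes, and then increased the tenant's UNIT_NUM to scale out the tenant. After both operations completed, we monitored disk usage on the newly added nodes to confirm it was growing as expected.

Disk usage on the new nodes never rose, and the cluster's overall disk pressure was not relieved.

The load-balancing tables show the following: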

MySQL [oceanbase]> select * from cdb_ob_balance_tasks limit 5\G;

*************************** 1. row ***************************

TENANT_ID: 1002

TASK_ID: 85348527502

CREATE_TIME: 2025-05-29 15:32:50.249740

MODIFY_TIME: 2025-05-29 16:00:14.606135

TASK_TYPE: LS_SPLIT

SRC_LS: 1043

DEST_LS: 4932

PART_LIST: 557950:558567

FINISHED_PART_LIST:

PART_COUNT: 1

FINISHED_PART_COUNT: 0

LS_GROUP_ID: 1015

STATUS: TRANSFER

PARENT_LIST: NULL

CHILD_LIST: 85348527503

CURRENT_TRANSFER_TASK_ID: 3441226

JOB_ID: 85348527500

COMMENT:

*************************** 2. row ***************************

TENANT_ID: 1002

TASK_ID: 85348527503

CREATE_TIME: 2025-05-29 15:32:50.249740

MODIFY_TIME: 2025-05-29 15:32:50.249740

TASK_TYPE: LS_ALTER

SRC_LS: 4932

DEST_LS: 4932

PART_LIST: NULL

FINISHED_PART_LIST: NULL

PART_COUNT: 0

FINISHED_PART_COUNT: 0

LS_GROUP_ID: 1021

STATUS: INIT

PARENT_LIST: 85348527502

CHILD_LIST: 85348527504

CURRENT_TRANSFER_TASK_ID: -1

JOB_ID: 85348527500

COMMENT: NULL

*************************** 3. row ***************************

TENANT_ID: 1002

TASK_ID: 85348527504

CREATE_TIME: 2025-05-29 15:32:50.249740

MODIFY_TIME: 2025-05-29 15:32:50.249740

TASK_TYPE: LS_MERGE

SRC_LS: 4932

DEST_LS: 4632

PART_LIST: NULL

FINISHED_PART_LIST: NULL

PART_COUNT: 0

FINISHED_PART_COUNT: 0

LS_GROUP_ID: 1021

STATUS: INIT

PARENT_LIST: 85348527503

CHILD_LIST: NULL

CURRENT_TRANSFER_TASK_ID: -1

JOB_ID: 85348527500

COMMENT: NULL

3 rows in set (0.03 sec)

MySQL [oceanbase]> select * from cdb_OB_TRANSFER_TASKS limit 5\G;

*************************** 1. row ***************************

TENANT_ID: 1002

TASK_ID: 3441226

CREATE_TIME: 2025-05-29 16:00:14.606135

MODIFY_TIME: 2025-05-29 16:00:14.608232

SRC_LS: 1043

DEST_LS: 4932

PART_LIST: 557950:558567

PART_COUNT: 1

NOT_EXIST_PART_LIST: NULL

LOCK_CONFLICT_PART_LIST: NULL

TABLE_LOCK_TABLET_LIST: NULL

TABLET_LIST: NULL

TABLET_COUNT: 0

START_SCN: 0

FINISH_SCN: 0

STATUS: INIT

TRACE_ID: YB421372120C-0006351B1D8D7F10-0-0

RESULT: -1

BALANCE_TASK_ID: 85348527502

TABLE_LOCK_OWNER_ID: -1

COMMENT: Wait to retry due to the last failure

1 row in set (0.04 sec)

MySQL [oceanbase]> select * from cdb_OB_TRANSFER_TASK_history order by FINISH_TIME desc limit 5\G;

*************************** 1. row ***************************

TENANT_ID: 1002

TASK_ID: 3441227

CREATE_TIME: 2025-05-29 16:01:14.797374

FINISH_TIME: 2025-05-29 16:02:14.749683

SRC_LS: 1043

DEST_LS: 4932

PART_LIST: 557950:558567

PART_COUNT: 1

NOT_EXIST_PART_LIST: NULL

LOCK_CONFLICT_PART_LIST: NULL

TABLE_LOCK_TABLET_LIST: 208838

TABLET_LIST: 208838:0,1152921504606893484:0,1152921504606894144:0,1152921504606894804:0,1152921504606895464:0

TABLET_COUNT: 5

START_SCN: 0

FINISH_SCN: 0

STATUS: FAILED

TRACE_ID: YB421372120C-0006351B1D8D7F4D-0-0

RESULT: -7114

BALANCE_TASK_ID: 85348527502

TABLE_LOCK_OWNER_ID: 85370831571

COMMENT:

*************************** 2. row ***************************

TENANT_ID: 1002

TASK_ID: 3441226

CREATE_TIME: 2025-05-29 16:00:14.606135

FINISH_TIME: 2025-05-29 16:01:14.619607

SRC_LS: 1043

DEST_LS: 4932

PART_LIST: 557950:558567

PART_COUNT: 1

NOT_EXIST_PART_LIST: NULL

LOCK_CONFLICT_PART_LIST: NULL

TABLE_LOCK_TABLET_LIST: 208838

TABLET_LIST: 208838:0,1152921504606893484:0,1152921504606894144:0,1152921504606894804:0,1152921504606895464:0

TABLET_COUNT: 5

START_SCN: 0

FINISH_SCN: 0

STATUS: FAILED

TRACE_ID: YB421372120C-0006351B1D8D7F10-0-0

RESULT: -7114

BALANCE_TASK_ID: 85348527502

TABLE_LOCK_OWNER_ID: 85366559553

COMMENT:

*************************** 3. row ***************************

TENANT_ID: 1002

TASK_ID: 3441225

CREATE_TIME: 2025-05-29 15:59:14.419215

FINISH_TIME: 2025-05-29 16:00:14.445245

SRC_LS: 1043

DEST_LS: 4932

PART_LIST: 557950:558567

PART_COUNT: 1

NOT_EXIST_PART_LIST: NULL

LOCK_CONFLICT_PART_LIST: NULL

TABLE_LOCK_TABLET_LIST: 208838

TABLET_LIST: 208838:0,1152921504606893484:0,1152921504606894144:0,1152921504606894804:0,1152921504606895464:0

TABLET_COUNT: 5

START_SCN: 0

FINISH_SCN: 0

STATUS: FAILED

TRACE_ID: YB421372120C-0006351B1D8D7ED3-0-0

RESULT: -7114

BALANCE_TASK_ID: 85348527502

TABLE_LOCK_OWNER_ID: 85366557326

COMMENT:

MySQL [oceanbase]> select count(*) from __all_virtual_trans_stat where tenant_id=1002 and ls_id=1043;

+----------+

| count(*) |

+----------+

| 248 |

+----------+

1 row in set (0.01 sec)

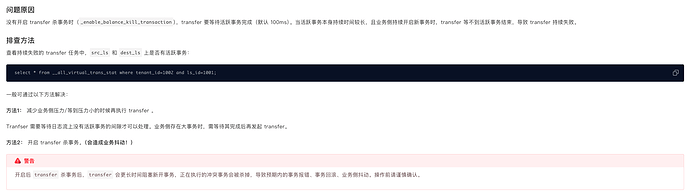

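Putting the query results above together:

- After the cluster and tenant scale-out, the rebalance job was triggered as expected. It first splits a log stream on the node with the highest disk usage, creating a temporary log stream (LS_SPLIT: 1043 → 4932), and then merges that temporary log stream with a log stream on the newly added node (LS_ALTER moves 4932 into LS group 1021, then LS_MERGE merges 4932 into 4632).

- The transfer task was also created as expected, but its status stays at INIT, with the comment "Wait to retry due to the last failure".

- cdb_OB_TRANSFER_TASK_history shows that the transfer keeps failing with error code -7114: a new attempt is created about once a minute, and each attempt fails after roughly 60 seconds.

- Looking up the error code gives this explanation: "When transfer is not allowed to kill transactions (_enable_balance_kill_transaction), transfer has to wait for active transactions to finish (100ms by default). If the active transactions are themselves long-running and the application keeps opening new ones, transfer never sees the active transactions end, so it keeps failing."

- Checking the relevant parameters confirmed that _enable_balance_kill_transaction is not enabled on this cluster. The cluster also runs under heavy load 24/7; the query above returns 248 rows from __all_virtual_trans_stat for log stream 1043 of tenant 1002.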
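To track these symptoms without re-reading the full \G output, queries along the following lines may help. This is a sketch under stated assumptions. Tenant ID 1002 and balance task ID 85348527502 are taken from the output above, and the history columns are the ones that output shows. The queries assume they are run from the sys tenant. The parameter lookup further assumes that hidden (underscore-prefixed) parameters are returned by GV$OB_PARAMETERS when filtered by exact name on 4.2.1, which is worth confirming first.

```sql
-- Sketch: run from the sys tenant; IDs are the ones in the output above.
-- One row per transfer attempt for the stuck balance task, with how long each attempt ran.
SELECT task_id,
       create_time,
       finish_time,
       TIMESTAMPDIFF(SECOND, create_time, finish_time) AS duration_s,
       status,
       result
FROM oceanbase.CDB_OB_TRANSFER_TASK_HISTORY
WHERE tenant_id = 1002
  AND balance_task_id = 85348527502
ORDER BY finish_time DESC
LIMIT 10;

-- Current value of the kill switch on every server and tenant.
-- Assumption: hidden parameters are visible in GV$OB_PARAMETERS when filtered by exact name.
SELECT svr_ip, tenant_id, name, value
FROM oceanbase.GV$OB_PARAMETERS
WHERE name = '_enable_balance_kill_transaction';
```

A run of attempts that each end with result -7114 after about 60 seconds, as in the history rows above, matches the pattern described in the error-code explanation.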

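Questions:

- Why is the rebalance job designed to first split a log stream on the node that data is being moved off, and then merge it with a log stream on the destination node?

- Load balancing behaves very differently in 4.x compared with 3.x. On 3.x, under similar load, disk usage evened out fairly quickly after nodes were added. On this cluster, one week after the scale-out, disk usage on the new nodes had only risen to 7%. In practice, partition-level balancing in 3.x performed better than log-stream-level balancing in 4.x.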

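Proposed solution:

For now, the only option seems to be enabling _enable_balance_kill_transaction and setting _balance_wait_killing_transaction_end_threshold to a reasonable value, so that the transfer can complete once the active transactions have ended. In the worst case, the application would have to stop sending load, which would have a major impact on the business.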
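A minimal sketch of the change described above. It assumes the statements are run from the sys tenant and that both hidden parameters are tenant-level on 4.2.1; check both assumptions against the documentation for this version before touching production. 'tenant_name' is a hypothetical placeholder for the name of tenant 1002. The post gives no value for _balance_wait_killing_transaction_end_threshold, so that statement is left commented out with a placeholder rather than a guessed number.

```sql
-- Sketch only: 'tenant_name' is a placeholder for the name of tenant 1002.
-- Assumption: _enable_balance_kill_transaction is a tenant-level hidden parameter on 4.2.1.
ALTER SYSTEM SET _enable_balance_kill_transaction = true TENANT = 'tenant_name';

-- Fill in a threshold suited to the workload before uncommenting; no value is implied here.
-- ALTER SYSTEM SET _balance_wait_killing_transaction_end_threshold = <value> TENANT = 'tenant_name';

-- Afterwards, watch whether the pending transfer leaves INIT.
SELECT task_id, status, result, `comment`
FROM oceanbase.CDB_OB_TRANSFER_TASKS
WHERE tenant_id = 1002;

-- Then watch whether the split/alter/merge chain advances.
SELECT task_id, task_type, src_ls, dest_ls, status
FROM oceanbase.CDB_OB_BALANCE_TASKS
WHERE tenant_id = 1002;
```

Killing transactions means that some in-flight application transactions will be aborted. That is the trade-off against the alternative of stopping the application's load entirely.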