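[Environment] Test environment

[OB or other component]

[Version] 4.3.5

[Problem description]

Following the official tutorial, I completed the pre-installation configuration.

Then I followed the GUI (web-based) deployment tutorial to build a single-zone, multi-replica cluster.

I have tried many times, and it always reports that the installation failed.

Could someone point me in the right direction? Do I need to create the cluster name first, or is something else going on?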


Following this thread to learn.

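Could you post screenshots of the first few steps of the GUI deployment?

Please also share the machine configuration so we can look at the details.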

These are the steps from the official website:

1. Configure the Java environment on the control machine

2. Extract and install the all-in-one package:

tar -xzf oceanbase-all-in-one-*.tar.gz

cd oceanbase-all-in-one/bin/

./install.sh

source ~/.oceanbase-all-in-one/bin/env.sh

3. Start the GUI deployment:

obd web

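All 3 VMs have the same configuration.

Earlier, a 1-1-1 test cluster somehow deployed successfully.

I have deleted the VMs and will rebuild them to try again.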

1. Create the admin user (control machine + observer nodes)

# useradd -U admin -d /home/admin -s /bin/bash

# mkdir -p /home/admin

# sudo chown -R admin:admin /home/admin

# passwd admin

# Password: choose your own

# Grant sudo privileges to the admin user

# vim /etc/sudoers

# Add the following line:

# admin ALL=(ALL) NOPASSWD: ALL

# mkdir /data

# mkdir /redo

# chown -R admin:admin /data

# chown -R admin:admin /redo

# su - admin

# mkdir -p /home/admin/oceanbase/store/obcluster /data/obcluster/{sstable,etc3} /redo/obcluster/{clog,ilog,slog,etc2}

# for f in {clog,ilog,slog,etc2}; do ln -s /redo/obcluster/$f /home/admin/oceanbase/store/obcluster/$f ; done

# for f in {sstable,etc3}; do ln -s /data/obcluster/$f /home/admin/oceanbase/store/obcluster/$f; done
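Before starting obd web, it can help to confirm that the directory layout created above is actually in place. The sketch below is a read-only check, not an OBD feature: check_store_links is a hypothetical helper, and it only verifies that the six entries created by the ln -s loops are symlinks resolving to existing directories.

```shell
#!/usr/bin/env bash
# Sketch only: check_store_links is a hypothetical helper, not an OBD command.
# It checks that each store entry created by the ln -s loops above is a
# symlink that resolves to an existing directory.

check_store_links() {
  local store_dir=$1 name target rc=0
  for name in clog ilog slog etc2 sstable etc3; do
    if [ ! -L "$store_dir/$name" ]; then
      echo "missing symlink: $store_dir/$name" >&2
      rc=1
      continue
    fi
    target=$(readlink -f "$store_dir/$name")
    if [ ! -d "$target" ]; then
      echo "broken symlink: $store_dir/$name -> $target" >&2
      rc=1
    else
      echo "ok: $store_dir/$name -> $target"
    fi
  done
  return "$rc"
}
```

Run it as admin on each observer node, for example: check_store_links /home/admin/oceanbase/store/obcluster && echo OK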

2. Check the operating system time configuration; the clocks must be synchronized (control machine + observer nodes)
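A minimal sketch for spotting clock skew from the control machine. Assumptions not in the original post: every node accepts passwordless SSH as admin, and the 1-second tolerance (MAX_SKEW_SECONDS) is an illustrative value, not an OceanBase requirement. Because the remote timestamp includes SSH latency and has 1-second resolution, this only catches coarse drift.

```shell
#!/usr/bin/env bash
# Sketch only. Assumptions: passwordless SSH as "admin" to each node, and an
# illustrative tolerance of MAX_SKEW_SECONDS (default 1), not an official limit.

# Succeeds if two epoch timestamps differ by at most max seconds.
clock_skew_ok() {
  local a=$1 b=$2 max=$3
  local diff=$(( a > b ? a - b : b - a ))
  [ "$diff" -le "$max" ]
}

# Compares each host's clock with the local clock; returns non-zero on any problem.
check_cluster_clocks() {
  local max=${MAX_SKEW_SECONDS:-1} host local_ts remote_ts rc=0
  for host in "$@"; do
    local_ts=$(date +%s)
    remote_ts=$(ssh -o BatchMode=yes "admin@$host" date +%s) || { echo "$host: ssh failed" >&2; rc=1; continue; }
    if clock_skew_ok "$local_ts" "$remote_ts" "$max"; then
      echo "$host: OK (local=$local_ts remote=$remote_ts)"
    else
      echo "$host: skew exceeds ${max}s (local=$local_ts remote=$remote_ts)" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Example: check_cluster_clocks 166.166.166.198 166.166.166.199 166.166.166.201. To see whether time synchronization is actually running on a node, timedatectl status (or chronyc tracking, if chrony is used) is more informative than a one-off comparison.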

3. Configure limits.conf (control machine + observer nodes)

# sudo vim /etc/security/limits.conf

# Add or modify the following entries

root soft nofile 655350

root hard nofile 655350

* soft nofile 655350

* hard nofile 655350

* soft stack 20480

* hard stack 20480

* soft nproc 655360

* hard nproc 655360

* soft core unlimited

* hard core unlimited
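limits.conf is applied by pam_limits when a session logs in, so the new values are only visible in a fresh login (for example after su - admin). The sketch below compares the current shell's limits with the values configured above; limit_at_least and check_limits are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch only: run in a fresh login session of the deployment user.
# Expected values are the ones from limits.conf above (stack is in KB,
# which matches the unit reported by `ulimit -s`).

# Succeeds if actual >= expected; "unlimited" satisfies any expectation.
limit_at_least() {
  local actual=$1 expected=$2
  [ "$actual" = "unlimited" ] && return 0
  [ "$expected" = "unlimited" ] && return 1
  [ "$actual" -ge "$expected" ]
}

check_limits() {
  local rc=0
  limit_at_least "$(ulimit -n)" 655350    || { echo "nofile too low: $(ulimit -n)" >&2; rc=1; }
  limit_at_least "$(ulimit -s)" 20480     || { echo "stack too low: $(ulimit -s)" >&2; rc=1; }
  limit_at_least "$(ulimit -u)" 655360    || { echo "nproc too low: $(ulimit -u)" >&2; rc=1; }
  limit_at_least "$(ulimit -c)" unlimited || { echo "core not unlimited: $(ulimit -c)" >&2; rc=1; }
  return "$rc"
}
```

If nproc still reports a lower value after logging in again, check whether a file under /etc/security/limits.d/ overrides it on your distribution.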

4. Configure /etc/sysctl.conf (control machine + observer nodes)

sudo vim /etc/sysctl.conf

# Add or modify the following entries

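# Raise the kernel's asynchronous I/O limit
fs.aio-max-nr = 1048576

# Maximum length of the socket listen queue; increase it when connections are opened frequently
net.core.somaxconn = 2048

# Length of the protocol stack's input queue; too small a value can cause packet loss
net.core.netdev_max_backlog = 10000

# Default receive buffer size
net.core.rmem_default = 16777216

# Default send buffer size
net.core.wmem_default = 16777216

# Maximum receive buffer size
net.core.rmem_max = 536870912

# Maximum send buffer size
net.core.wmem_max = 16777216

# Socket receive buffer sizes: minimum, default, maximum
net.ipv4.tcp_rmem = 4096 87380 16777216

# Socket send buffer sizes: minimum, default, maximum
net.ipv4.tcp_wmem = 4096 65536 16777216

# Maximum number of connections in the SYN_RECV state
net.ipv4.tcp_max_syn_backlog = 16384

# How long a socket stays in FIN-WAIT-2 after an active close
net.ipv4.tcp_fin_timeout = 15

# Allow reuse of sockets in the TIME_WAIT state
net.ipv4.tcp_tw_reuse = 1

# tcp_tw_recycle was removed in Linux 4.12; on newer kernels omit this line, otherwise sysctl -p reports an error for it
net.ipv4.tcp_tw_recycle = 1

# Disable TCP slow start after idle, which lowers network latency in some cases
net.ipv4.tcp_slow_start_after_idle = 0

# Prefer physical memory over swap
vm.swappiness = 0

vm.min_free_kbytes = 2097152

vm.max_map_count = 655360

fs.file-max = 6573688

# Directory for kernel core dumps; adjust the location to your setup
kernel.core_pattern = /home/admin/core-dump/core-%e-%p-%t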
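Editing sysctl.conf changes nothing until the file is loaded with sudo sysctl -p (or the machine reboots). The sketch below reads the live values back from /proc/sys to confirm each one was applied. sysctl_value and check_sysctl are hypothetical helpers; the PROC_SYS override exists only so the functions can be tested against a fake directory tree.

```shell
#!/usr/bin/env bash
# Sketch only: verify live kernel parameters after `sudo sysctl -p`.
# PROC_SYS defaults to /proc/sys and is overridable for testing.

# Prints the value of a dotted key, with tabs/spaces collapsed to single spaces.
sysctl_value() {
  local path
  path="${PROC_SYS:-/proc/sys}/$(printf '%s' "$1" | tr . /)"
  [ -r "$path" ] || return 1
  tr -s '[:space:]' ' ' < "$path" | sed 's/ $//'
}

# Usage: check_sysctl key1 expected1 [key2 expected2 ...]
check_sysctl() {
  local rc=0 key expected actual
  while [ "$#" -ge 2 ]; do
    key=$1 expected=$2
    shift 2
    if ! actual=$(sysctl_value "$key"); then
      echo "$key: not present on this kernel" >&2
      rc=1
    elif [ "$actual" = "$expected" ]; then
      echo "$key = $actual"
    else
      echo "$key: expected '$expected', got '$actual'" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Example: sudo sysctl -p, then check_sysctl fs.aio-max-nr 1048576 vm.swappiness 0 vm.max_map_count 655360 net.ipv4.tcp_rmem "4096 87380 16777216". Also note that the directory named in kernel.core_pattern must exist (mkdir -p /home/admin/core-dump) for core files to be written there.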

5. Disable the firewall and SELinux (control machine + observer nodes)

# Disable the firewall

sudo systemctl status firewalld

sudo systemctl disable firewalld

sudo systemctl stop firewalld

# Disable SELinux

sudo vim /etc/selinux/config

# Change the following line to disabled

SELINUX=disabled

# Check SELinux status

sudo sestatus
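The change in /etc/selinux/config only takes effect after a reboot; sudo setenforce 0 switches the running system to permissive mode immediately. The sketch below reports the firewall state, the running SELinux mode, and the configured mode side by side. selinux_config_mode and check_fw_selinux are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch only: report firewalld and SELinux state on one node.

# Prints the value of the last SELINUX= line in the config file,
# ignoring comment lines and the separate SELINUXTYPE= setting.
selinux_config_mode() {
  local file=${1:-/etc/selinux/config}
  sed -n 's/^[[:space:]]*SELINUX=\([^[:space:]#]*\).*/\1/p' "$file" | tail -n 1
}

check_fw_selinux() {
  echo "firewalld:         $(systemctl is-active firewalld 2>/dev/null)"
  echo "SELinux (running): $(getenforce 2>/dev/null || echo unknown)"
  echo "SELinux (config):  $(selinux_config_mode)"
}
```

Expected result on each node after the steps above and a reboot: firewalld inactive, and SELinux Disabled in both lines.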

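6. Manually disable transparent huge pages (run as root; with sudo, the > redirection would run in the unprivileged shell)

# Most current kernels use this path; RHEL 6 era kernels use /sys/kernel/mm/redhat_transparent_hugepage/enabled instead

echo never > /sys/kernel/mm/transparent_hugepage/enabled

# Reboot (needed for the SELinux change; the echo above is not persistent and must be reapplied after the reboot)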
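Because the THP control file lives in a different place depending on the kernel, and because a value written with echo is lost on reboot, a small helper that finds the file and reports the active mode can save guesswork. This is a sketch; thp_file, thp_mode and disable_thp are hypothetical names, and SYS_MM is an override used only for testing.

```shell
#!/usr/bin/env bash
# Sketch only: locate the THP control file, show the active mode, disable THP.
# SYS_MM defaults to /sys/kernel/mm and is overridable for testing.

thp_file() {
  local mm=${SYS_MM:-/sys/kernel/mm} d
  for d in transparent_hugepage redhat_transparent_hugepage; do
    if [ -e "$mm/$d/enabled" ]; then echo "$mm/$d/enabled"; return 0; fi
  done
  return 1
}

# The file reads like "always madvise [never]"; the bracketed word is active.
thp_mode() {
  sed -n 's/.*\[\([a-z]*\)\].*/\1/p' "$1"
}

disable_thp() {
  local f
  f=$(thp_file) || { echo "no THP control file found" >&2; return 1; }
  # Use tee so the write itself runs with root privileges.
  echo never | sudo tee "$f" > /dev/null
  echo "$f: $(thp_mode "$f")"
}
```

After each reboot, run disable_thp again (or check with thp_mode "$(thp_file)"). One common way to make the setting persistent is the kernel boot parameter transparent_hugepage=never.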

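7. Install Java on the control machine

java -version  # check whether Java is already installed

yum clean all && yum makecache

yum list | grep jdk

# To install OpenJDK 1.8 (answer y when prompted):

# yum install java-1.8.0-openjdk

# To install OpenJDK 11 (answer y when prompted):

# yum install java-11-openjdk

Configure the Java environment variables by adding the following to /etc/profile. The JAVA_HOME path differs across OS versions and CPU architectures, so adjust it to match your own Java installation (as shown in the guide's figure).

Taking JDK 1.8 as an example, the configuration is: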

# vim /etc/profile

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.422.b05-0.up2.uel20.01.x86_64/

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

Run source /etc/profile, or reboot (safer), for the variables to take effect. After all of this, nothing changed.
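Since the JAVA_HOME above is a hard-coded, version-specific directory, it is worth confirming that it matches the java binary actually on PATH. The sketch below derives a JAVA_HOME candidate from that binary; java_home_from_binary is a hypothetical helper and assumes the usual OpenJDK layouts (.../bin/java for JDK 11, .../jre/bin/java for JDK 8).

```shell
#!/usr/bin/env bash
# Sketch only: compare the configured JAVA_HOME with the java found on PATH.

# Strips /bin/java, then a trailing /jre (JDK 8 layout), to get the JDK root.
java_home_from_binary() {
  local bin=$1
  bin=${bin%/bin/java}
  bin=${bin%/jre}
  echo "$bin"
}

show_java_env() {
  local bin
  bin=$(command -v java) || { echo "java not found on PATH" >&2; return 1; }
  bin=$(readlink -f "$bin")
  java -version
  echo "JAVA_HOME (configured): ${JAVA_HOME:-<unset>}"
  echo "JAVA_HOME (from PATH):  $(java_home_from_binary "$bin")"
}
```

If the two JAVA_HOME lines differ (ignoring a trailing slash), update /etc/profile to the value derived from PATH and open a new login shell.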

I created new VMs with 24 GB of memory.

I redeployed with the obd web GUI, but the installation still shows the following errors:

[WARN] OBD-1012: (166.166.166.198) clog and data use the same disk (/)

[WARN] OBD-1012: (166.166.166.199) clog and data use the same disk (/)

[WARN] OBD-2000: (166.166.166.201) not enough memory. (Free: 9G, Need: 13G)

[WARN] OBD-1012: (166.166.166.201) clog and data use the same disk (/)

[ERROR] oceanbase-ce-py_script_environment_check-4.2.0.0 RuntimeError: '166.166.166.198'

Load cluster param plugin ok

start obproxy ok

obproxy program health check ok

Connect to obproxy ok

Connect to obproxy ok

+---------------------------------------------------------------------+
|                             obproxy-ce                              |
+-----------------+------+-----------------+-----------------+--------+
| ip              | port | prometheus_port | rpc_listen_port | status |
+-----------------+------+-----------------+-----------------+--------+
| 166.166.166.198 | 2883 | 2884            | 2885            | active |
| 166.166.166.199 | 2883 | 2884            | 2885            | active |
| 166.166.166.201 | 2883 | 2884            | 2885            | active |
+-----------------+------+-----------------+-----------------+--------+

obclient -h166.166.166.198 -P2883 -uroot@proxysys -p'******' -Doceanbase -A

succeed

Load cluster param plugin ok

Check before start obagent ok

Start obagent ok

obagent program health check ok

Connect to Obagent ok

+--------------------------------------------------------------------+
|                              obagent                               |
+-----------------+--------------------+--------------------+--------+
| ip              | mgragent_http_port | monagent_http_port | status |
+-----------------+--------------------+--------------------+--------+
| 166.166.166.198 | 8089               | 8088               | active |
| 166.166.166.199 | 8089               | 8088               | active |
| 166.166.166.201 | 8089               | 8088               | active |
+-----------------+--------------------+--------------------+--------+

succeed

Load cluster param plugin ok

Check before start ocp-express ok

[WARN] OBD-4302: (166.166.166.200) not enough memory. (Free: 225M, Need: 4G)

Connect to observer

This is the one:

[ERROR] oceanbase-ce-py_script_environment_check-4.2.0.0 RuntimeError: '166.166.166.198'

And this is the error I get when I try to view the logs:

[root@200 ~]# obd tool command obdev1 log -c oceanbase-ce -s 166.166.166.198

[ERROR] No such deploy: obdev1.

See https://www.oceanbase.com/product/ob-deployer/error-codes .

Trace ID: f39450b2-ec8c-11ef-8ea2-000c2942a3ac

If you want to view detailed obd logs, please run: obd display-trace f39450b2-ec8c-11ef-8ea2-000c2942a3ac

[root@200 ~]# obd display-trace f39450b2-ec8c-11ef-8ea2-000c2942a3ac

[2025-02-17 01:39:19.794] [DEBUG] - cmd: ['obdev1', 'log']

[2025-02-17 01:39:19.794] [DEBUG] - opts: {'components': 'oceanbase-ce', 'servers': '166.166.166.198'}

[2025-02-17 01:39:19.794] [DEBUG] - mkdir /root/.obd/lock/

[2025-02-17 01:39:19.794] [DEBUG] - set lock mode to NO_LOCK(0)

[2025-02-17 01:39:19.794] [DEBUG] - Get Deploy by name

[2025-02-17 01:39:19.794] [DEBUG] - mkdir /root/.obd/cluster/

[2025-02-17 01:39:19.794] [DEBUG] - mkdir /root/.obd/config_parser/

[2025-02-17 01:39:19.795] [ERROR] No such deploy: obdev1.

[2025-02-17 01:39:19.795] [INFO] See https://www.oceanbase.com/product/ob-deployer/error-codes .

[2025-02-17 01:39:19.795] [INFO] Trace ID: f39450b2-ec8c-11ef-8ea2-000c2942a3ac

[2025-02-17 01:39:19.795] [INFO] If you want to view detailed obd logs, please run: obd display-trace f39450b2-ec8c-11ef-8ea2-000c2942a3ac

Could anyone help?

Insufficient memory warnings:

166.166.166.201 does not have enough memory (only 9G free, 13G needed), and memory on 166.166.166.200 is severely insufficient (only 225M free, 4G needed).
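To see how much memory each host really has available before retrying, a quick check from the control machine can help. This is a sketch under stated assumptions: passwordless SSH as admin to every host, and a procps-ng free whose Mem: line has the "available" column as its 7th field. OBD's own "Free" figure may be computed differently, so treat this as a rough indicator only. available_gb_from_free and check_memory are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch only. Assumptions: passwordless SSH as "admin"; procps-ng `free`
# with "available" as the 7th field of the Mem: line.

# Reads `free -g` output on stdin and prints the "available" value (GiB).
available_gb_from_free() {
  awk '/^Mem:/ { print $7 }'
}

# Usage: check_memory NEED_GB host1 [host2 ...]
check_memory() {
  local need=$1 host avail
  shift
  for host in "$@"; do
    avail=$(ssh -o BatchMode=yes "admin@$host" free -g | available_gb_from_free)
    if [ -z "$avail" ]; then
      echo "$host: could not read memory" >&2
    elif [ "$avail" -ge "$need" ]; then
      echo "$host: ${avail}G available (need ${need}G) OK"
    else
      echo "$host: only ${avail}G available, need ${need}G" >&2
    fi
  done
}
```

With the numbers from the warnings: check_memory 13 166.166.166.198 166.166.166.199 166.166.166.201 for the observer nodes, and check_memory 4 166.166.166.200 for the control machine that hosts ocp-express.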

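Error:

You tried to view the logs with obd tool command obdev1 log, but got the error "No such deploy: obdev1".

[ERROR] No such deploy: obdev1.

This error means OBD cannot find a deployment named obdev1.

Check whether the deployment exists; if it does not, redeploy under a new cluster name.

1. Check the cluster status to see whether obdev1 or another cluster exists:

obd cluster list

2. Destroy the cluster (replace obdev1 with the cluster name you found):

obd cluster destroy obdev1 -f

3. Redeploy using the GUI.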

OK, all VMs have now been restored to their pre-deployment snapshots.

It still fails:

[root@200 ~]# obd tool command obtest log -c oceanbase-ce -s 166.166.166.200

[ERROR] No such deploy: obtest.

See https://www.oceanbase.com/product/ob-deployer/error-codes .

Trace ID: 4e8efa62-ed3c-11ef-baeb-000c2942a3ac

If you want to view detailed obd logs, please run: obd display-trace 4e8efa62-ed3c-11ef-baeb-000c2942a3ac

[root@200 ~]# obd display-trace 4e8efa62-ed3c-11ef-baeb-000c2942a3ac

[2025-02-17 22:34:34.359] [DEBUG] - cmd: ['obtest', 'log']

[2025-02-17 22:34:34.359] [DEBUG] - opts: {'components': 'oceanbase-ce', 'servers': '166.166.166.200'}

[2025-02-17 22:34:34.360] [DEBUG] - mkdir /root/.obd/lock/

[2025-02-17 22:34:34.360] [DEBUG] - set lock mode to NO_LOCK(0)

[2025-02-17 22:34:34.360] [DEBUG] - Get Deploy by name

[2025-02-17 22:34:34.360] [DEBUG] - mkdir /root/.obd/cluster/

[2025-02-17 22:34:34.361] [DEBUG] - mkdir /root/.obd/config_parser/

[2025-02-17 22:34:34.361] [ERROR] No such deploy: obtest.

[2025-02-17 22:34:34.361] [INFO] See https://www.oceanbase.com/product/ob-deployer/error-codes .

[2025-02-17 22:34:34.361] [INFO] Trace ID: 4e8efa62-ed3c-11ef-baeb-000c2942a3ac

[2025-02-17 22:34:34.361] [INFO] If you want to view detailed obd logs, please run: obd display-trace 4e8efa62-ed3c-11ef-baeb-000c2942a3ac

[root@200 ~]# obd cluster list

Local deploy is empty

Trace ID: 60571860-ed3c-11ef-a8b4-000c2942a3ac

If you want to view detailed obd logs, please run: obd display-trace 60571860-ed3c-11ef-a8b4-000c2942a3ac

[root@200 ~]# obd display-trace 60571860-ed3c-11ef-a8b4-000c2942a3ac

[2025-02-17 22:35:04.192] [DEBUG] - cmd: []

[2025-02-17 22:35:04.192] [DEBUG] - opts: {}

[2025-02-17 22:35:04.192] [DEBUG] - mkdir /root/.obd/lock/

[2025-02-17 22:35:04.192] [DEBUG] - set lock mode to NO_LOCK(0)

[2025-02-17 22:35:04.192] [DEBUG] - Get deploy list

[2025-02-17 22:35:04.193] [DEBUG] - mkdir /root/.obd/cluster/

[2025-02-17 22:35:04.193] [DEBUG] - mkdir /root/.obd/config_parser/

[2025-02-17 22:35:04.193] [INFO] Local deploy is empty

[2025-02-17 22:35:04.193] [INFO] Trace ID: 60571860-ed3c-11ef-a8b4-000c2942a3ac

[2025-02-17 22:35:04.193] [INFO] If you want to view detailed obd logs, please run: obd display-trace 60571860-ed3c-11ef-a8b4-000c2942a3ac

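I noticed this message during the GUI deployment:

oceanbase-ce-py_script_environment_check-4.2.0.0 RuntimeError: '166.166.166.198'

What is wrong with the environment here?

After the failure, I ran obd cluster list on the control machine: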

[root@200 ~]# obd cluster list

Local deploy is empty

Trace ID: c117e9ec-ed49-11ef-84a4-000c2942a3ac

If you want to view detailed obd logs, please run: obd display-trace c117e9ec-ed49-11ef-84a4-000c2942a3ac

然后再次obd web

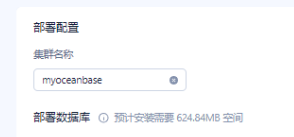

白屏部署输入集群名称报错

再次查询obd

[root@200 ~]# obd cluster destroy obtest -f

[ERROR] No such deploy: obtest.

See https://www.oceanbase.com/product/ob-deployer/error-codes .

Trace ID: ece67bc4-ed49-11ef-ab27-000c2942a3ac

If you want to view detailed obd logs, please run: obd display-trace ece67bc4-ed49-11ef-ab27-000c2942a3ac

[root@200 ~]# obd display-trace c117e9ec-ed49-11ef-84a4-000c2942a3ac

[2025-02-18 00:10:49.974] [DEBUG] - cmd: []

[2025-02-18 00:10:49.975] [DEBUG] - opts: {}

[2025-02-18 00:10:49.975] [DEBUG] - mkdir /root/.obd/lock/

[2025-02-18 00:10:49.975] [DEBUG] - set lock mode to NO_LOCK(0)

[2025-02-18 00:10:49.975] [DEBUG] - Get deploy list

[2025-02-18 00:10:49.975] [DEBUG] - mkdir /root/.obd/cluster/

[2025-02-18 00:10:49.975] [DEBUG] - mkdir /root/.obd/config_parser/

[2025-02-18 00:10:49.975] [INFO] Local deploy is empty

[2025-02-18 00:10:49.975] [INFO] Trace ID: c117e9ec-ed49-11ef-84a4-000c2942a3ac

[2025-02-18 00:10:49.975] [INFO] If you want to view detailed obd logs, please run: obd display-trace c117e9ec-ed49-11ef-84a4-000c2942a3ac

Why does this deployment keep failing?