【Environment】Test environment

【OB or other component】OCP

【Version】

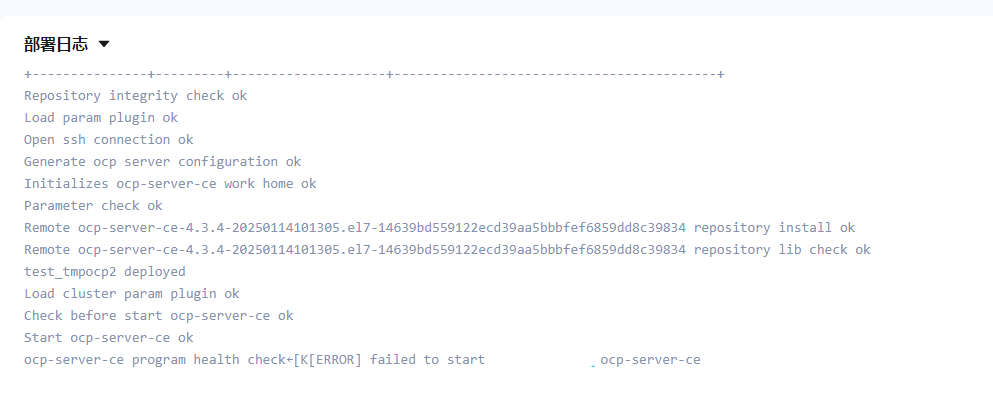

【Problem description】Deploying OCP through the web page fails with ERROR failed.

Please provide the detailed obd log (~/.obd/log) and the YAML parameter file (~/.obd/cluster/xxxx/).

user:
  username: admin
  password:
  port: 22
ocp-server-ce:
  version: 4.3.4
  package_hash: 14639bd559122ecd39aa5bbbfef6859dd8c39834
  release: 20250114101305.el7
  servers:
    - xxxxxx
  global:
    home_path: /home/admin/ocp
    soft_dir: /home/admin/software
    log_dir: /home/admin/logs
    ocp_site_url: http://xxxxx:8080
    port: 8080
    admin_password: xxx
    memory_size: 4G
    manage_info:
      machine: 10
    jdbc_url: jdbc:oceanbase://xxx:xxx/meta_database
    jdbc_username: root@sys#xxxx
    jdbc_password: xxx
    ocp_meta_tenant:
      tenant_name: tmp_ocpmeta_test
      max_cpu: 5.0
      memory_size: 6G
    ocp_meta_username: root
    ocp_meta_password: xxx
    ocp_meta_db: meta_database
    ocp_monitor_tenant:
      tenant_name: tmp_ocpmonitor_test
      max_cpu: 5.0
      memory_size: 10G
    ocp_monitor_username: root
    ocp_monitor_password: xxx
    ocp_monitor_db: monitor_database

obd cluster start xxx

[ERROR] Another app is currently holding the obd lock.

Trace ID: c8d386c0-e527-11ef-92c2-30c6d7959d22

If you want to view detailed obd logs, please run: obd display-trace c8d386c0-e527-11ef-92c2-30c6d7959d22

What exactly should I do here? Neither stop nor start can be run manually.

obd display-trace e25e5390-e527-11ef-8060-30c6d7959d22

[2025-02-07 15:48:13.610] [DEBUG] - cmd: ['test_tmpocp2']

[2025-02-07 15:48:13.610] [DEBUG] - opts: {'servers': None, 'components': None, 'force_delete': None, 'strict_check': None, 'without_parameter': None}

[2025-02-07 15:48:13.610] [DEBUG] - mkdir /root/.obd/lock/

[2025-02-07 15:48:13.610] [DEBUG] - unknown lock mode

[2025-02-07 15:48:13.610] [DEBUG] - try to get share lock /root/.obd/lock/global

[2025-02-07 15:48:13.610] [DEBUG] - share lock /root/.obd/lock/global, count 1

[2025-02-07 15:48:13.610] [DEBUG] - Get Deploy by name

[2025-02-07 15:48:13.610] [DEBUG] - mkdir /root/.obd/cluster/

[2025-02-07 15:48:13.611] [DEBUG] - mkdir /root/.obd/config_parser/

[2025-02-07 15:48:13.611] [DEBUG] - try to get exclusive lock /root/.obd/lock/deploy_test_tmpocp2

[2025-02-07 15:48:13.611] [ERROR] Another app is currently holding the obd lock.

[2025-02-07 15:48:13.611] [ERROR] Traceback (most recent call last):

[2025-02-07 15:48:13.611] [ERROR] File "_lock.py", line 59, in _ex_lock

[2025-02-07 15:48:13.611] [ERROR] File "tool.py", line 496, in exclusive_lock_obj

[2025-02-07 15:48:13.611] [ERROR] BlockingIOError: [Errno 11] Resource temporarily unavailable

[2025-02-07 15:48:13.611] [ERROR]

[2025-02-07 15:48:13.611] [ERROR] During handling of the above exception, another exception occurred:

[2025-02-07 15:48:13.611] [ERROR]

[2025-02-07 15:48:13.611] [ERROR] Traceback (most recent call last):

[2025-02-07 15:48:13.611] [ERROR] File "_lock.py", line 80, in ex_lock

[2025-02-07 15:48:13.611] [ERROR] File "_lock.py", line 61, in _ex_lock

[2025-02-07 15:48:13.611] [ERROR] _errno.LockError: [Errno 11] Resource temporarily unavailable

[2025-02-07 15:48:13.611] [ERROR]

[2025-02-07 15:48:13.611] [ERROR] During handling of the above exception, another exception occurred:

[2025-02-07 15:48:13.611] [ERROR]

[2025-02-07 15:48:13.612] [ERROR] Traceback (most recent call last):

[2025-02-07 15:48:13.612] [ERROR] File "obd.py", line 286, in do_command

[2025-02-07 15:48:13.612] [ERROR] File "obd.py", line 976, in _do_command

[2025-02-07 15:48:13.612] [ERROR] File "core.py", line 2065, in start_cluster

[2025-02-07 15:48:13.612] [ERROR] File "_deploy.py", line 1880, in get_deploy_config

[2025-02-07 15:48:13.612] [ERROR] File "_deploy.py", line 1867, in _lock

[2025-02-07 15:48:13.612] [ERROR] File "_lock.py", line 278, in deploy_ex_lock

[2025-02-07 15:48:13.612] [ERROR] File "_lock.py", line 257, in _ex_lock

[2025-02-07 15:48:13.612] [ERROR] File "_lock.py", line 249, in _lock

[2025-02-07 15:48:13.612] [ERROR] File "_lock.py", line 180, in lock

[2025-02-07 15:48:13.612] [ERROR] File "_lock.py", line 85, in ex_lock

[2025-02-07 15:48:13.612] [ERROR] _errno.LockError: [Errno 11] Resource temporarily unavailable

[2025-02-07 15:48:13.612] [ERROR]

[2025-02-07 15:48:13.612] [DEBUG] - share lock /root/.obd/lock/global release, count 0

[2025-02-07 15:48:13.612] [DEBUG] - unlock /root/.obd/lock/global

[2025-02-07 15:48:13.612] [INFO] Trace ID: e25e5390-e527-11ef-8060-30c6d7959d22

[2025-02-07 15:48:13.612] [INFO] If you want to view detailed obd logs, please run: obd display-trace e25e5390-e527-11ef-8060-30c6d7959d22

[2025-02-07 15:48:13.612] [DEBUG] - unlock /root/.obd/lock/deploy_test_tmpocp2

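Your YAML file only contains the ocp component. Are you planning to deploy OCP on an existing OB cluster? If so, make sure the OB cluster has enough memory.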
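For a rough sense of scale, the two tenants in the YAML above request 6G and 10G. The following is a minimal sketch that adds them up; the helper names are hypothetical, and treating the sum as the minimum free memory is an assumption, since the existing cluster's own overhead is not shown in this thread.

```python
import re

# Tenant sizes copied from the YAML in this thread. Treating their sum as
# the minimum free memory the existing cluster needs is an assumption.
TENANT_MEMORY = {
    "ocp_meta_tenant": "6G",
    "ocp_monitor_tenant": "10G",
}

_UNITS_IN_MIB = {"M": 1, "G": 1024}


def to_mib(size):
    """Convert a size string such as '6G' or '512M' to MiB."""
    match = re.fullmatch(r"(\d+)([MG])", size.strip().upper())
    if match is None:
        raise ValueError(f"unsupported size: {size!r}")
    return int(match.group(1)) * _UNITS_IN_MIB[match.group(2)]


def required_tenant_memory_mib(tenants):
    """Sum the memory_size values of all tenants, in MiB."""
    return sum(to_mib(value) for value in tenants.values())


if __name__ == "__main__":
    total = required_tenant_memory_mib(TENANT_MEMORY)
    print(f"tenants request {total // 1024}G in total")
```

The 4G memory_size under global is for the OCP server process on its own host, so it is left out of the tenant total here.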

Stop the obd web process first, then run the start command again.
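The BlockingIOError: [Errno 11] in the trace is what a non-blocking exclusive file lock raises when another process already holds it, which fits obd web still holding the deploy lock. Here is a minimal Linux-only sketch of that behavior using Python's fcntl.flock; it is an illustration under that assumption, not obd's actual code, and the lock path and function name are made up.

```python
import fcntl
import os
import tempfile


def try_exclusive_lock(path):
    """Return an open file holding an exclusive lock, or None if it is taken."""
    handle = open(path, "a")
    try:
        # LOCK_NB makes flock fail immediately instead of waiting.
        fcntl.flock(handle, fcntl.LOCK_EX | fcntl.LOCK_NB)
    except BlockingIOError:  # errno 11 (EAGAIN) on Linux
        handle.close()
        return None
    return handle


if __name__ == "__main__":
    # Hypothetical lock file; obd keeps its own under ~/.obd/lock/.
    lock_path = os.path.join(tempfile.mkdtemp(), "deploy_demo")

    first = try_exclusive_lock(lock_path)   # plays the role of obd web
    second = try_exclusive_lock(lock_path)  # plays the role of obd cluster start
    print("first holder got the lock:", first is not None)
    print("second holder got the lock:", second is not None)

    first.close()  # closing the file releases the lock
    third = try_exclusive_lock(lock_path)
    print("after release:", third is not None)
    third.close()
```

Once the first holder exits or closes its file, the lock becomes available again, which is why stopping obd web lets the start command proceed.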

obd web has exited.

obd cluster start test_tmpocp2

Get local repositories ok

Load cluster param plugin ok

Open ssh connection ok

Check before start ocp-server-ce ok

Start ocp-server-ce ok

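ocp-server-ce program health check /

It is still stuck here. And yes, the tenants have already been created automatically.

Wait for it to report an error, then collect the detailed obd log again.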

obd cluster start test_tmpocp2

Get local repositories ok

Load cluster param plugin ok

Open ssh connection ok

Check before start ocp-server-ce ok

Start ocp-server-ce ok

[ERROR] failed to start xxxx ocp-server-ce

See https://www.oceanbase.com/product/ob-deployer/error-codes .

Trace ID: 5c339642-e52a-11ef-a8fc-30c6d7959d22

If you want to view detailed obd logs, please run: obd display-trace 5c339642-e52a-11ef-a8fc-30c6d7959d22

After the error, I ran obd display-trace 5c339642-e52a-11ef-a8fc-30c6d7959d22

…

[2025-02-07 16:36:18.574] [DEBUG] - admin@xx.xx.xx.xx execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{print $2,$10}' | grep '00000000:1F90' | awk -F' ' '{print $2}' | uniq

[2025-02-07 16:36:18.724] [DEBUG] - exited code 0

[2025-02-07 16:36:18.834] [ERROR] failed to start xxxxx ocp-server-ce

[2025-02-07 16:36:18.834] [DEBUG] - sub health_check ref count to 0

[2025-02-07 16:36:18.834] [DEBUG] - export health_check

[2025-02-07 16:36:18.834] [DEBUG] - plugin ocp-server-ce-py_script_health_check-4.2.1 result: False

[2025-02-07 16:36:18.837] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo release, count 1

[2025-02-07 16:36:18.837] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo release, count 0

[2025-02-07 16:36:18.837] [DEBUG] - unlock /root/.obd/lock/mirror_and_repo

[2025-02-07 16:36:18.837] [DEBUG] - exclusive lock /root/.obd/lock/deploy_test_tmpocp2 release, count 0

[2025-02-07 16:36:18.837] [DEBUG] - unlock /root/.obd/lock/deploy_test_tmpocp2

[2025-02-07 16:36:18.837] [DEBUG] - share lock /root/.obd/lock/global release, count 0

[2025-02-07 16:36:18.837] [DEBUG] - unlock /root/.obd/lock/global

[2025-02-07 16:36:18.838] [INFO] See https://www.oceanbase.com/product/ob-deployer/error-codes .
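The health check in this trace greps /proc/net for 00000000:1F90. Decoded, that is 0.0.0.0:8080, the port set in the YAML, so the check appears to be waiting for a listener on that port. The sketch below shows the decoding; the function name is hypothetical, and it assumes a little-endian host such as x86.

```python
import socket
import struct


def decode_proc_net_address(field):
    """Decode an IPv4 local_address field from /proc/net/tcp.

    Example input: '00000000:1F90'. Assumes a little-endian host, where the
    kernel prints the address bytes in reversed order.
    """
    ip_hex, port_hex = field.split(":")
    ip = socket.inet_ntoa(struct.pack("<I", int(ip_hex, 16)))
    return ip, int(port_hex, 16)


if __name__ == "__main__":
    print(decode_proc_net_address("00000000:1F90"))
```

If nothing is listening on that address when the check times out, the useful evidence is in the OCP server's own log rather than in the obd trace.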

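What version is your OB cluster?

Judging from the log, it looks like a timeout. You could try starting just that component with obd cluster start xxxx -c ocp-server-ce.

Please grab the OCP logs. Run ps -ef|grep ocp to confirm the startup paths of OCP and the agent. The agent log is in the log directory next to its bin directory; the server log is in the log directory under the ocp directory, which here should be /home/admin/ocp/log/ocp-server.log.

obd version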

OceanBase Deploy: 3.1.1

REVISION: 94b5853a18ad1ad09d5afc30d9edb44643dd488c

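cat /home/admin/ocp/log/bootstrap.log is empty; OCP has not generated any logs yet.

What is the observer database version? The OCP log has to be checked on the host (IP) where you created the OCP service. Please take another look.

Is this related to the metadata tenant? OB is 4.3.2.1. (The metadata tenant name can be customized, right?)

cat /home/admin/ocp/log/bootstrap.log (empty). The OCP service did not come up, so there is no log. This is on the node where the OCP service is started.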

log.zip (119.3 KB)

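I don't think the tenant names can be customized.

The names of the ocp_meta and ocp_monitor tenants should not be changed.

I tried leaving the tenant names unchanged and it still failed.

Please post the detailed obd log, and check again whether an ocp-server log has been generated.

Did you run it as root? Check under /root to see whether there is an ocp directory.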

It works with the recommended approach.

Connecting through the existing obproxy (config URL) failed; connecting directly to the existing metadb solved it.
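The thread does not show the final working configuration. As an illustration only: the jdbc_username in the original YAML, root@sys#xxxx, uses the user@tenant#cluster form that a proxy needs for routing, while a direct connection to an observer normally uses just user@tenant. Here is a small sketch of that difference, with hypothetical helper names:

```python
from typing import NamedTuple, Optional


class ObLogin(NamedTuple):
    user: str
    tenant: Optional[str]
    cluster: Optional[str]


def parse_ob_username(value):
    """Split 'user@tenant#cluster' into parts; tenant and cluster are optional."""
    rest, _, cluster = value.partition("#")
    user, _, tenant = rest.partition("@")
    return ObLogin(user, tenant or None, cluster or None)


def direct_username(value):
    """Drop the '#cluster' suffix, keeping the form used for a direct connection."""
    login = parse_ob_username(value)
    return f"{login.user}@{login.tenant}" if login.tenant else login.user


if __name__ == "__main__":
    print(parse_ob_username("root@sys#xxxx"))
    print(direct_username("root@sys#xxxx"))
```

Whether the username alone was the cause here is not confirmed in the thread; the host and port in jdbc_url would also have to point at the observer instead of the proxy.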