When deploying the cluster, the test-check-fs-hvvqdd-tdzf69-2c899 pod ends up in ERROR status:

Failed to check storage,

operation not supported

operation not supported

operation not supported

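Judging from the message, something is wrong with the storage volume. Could you post more details, such as how you deployed, what the configuration looks like, and what kind of storage volume you are using?

I'm using a local StorageClass with manually created PVs. I won't paste the other PVs since they all look similar.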

----StorageClass configuration

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ob-local-ssd-redolog
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer

----PV configuration

apiVersion: v1
kind: PersistentVolume
metadata:
  name: ob-pv-redolog1
spec:
  capacity:
    storage: 30Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: {{ .Release.Name }}-local-ssd-redolog
  hostPath:
    path: /middleware/ob/ob/redolog

Actual deployment state:

----kubectl get pvc -n ob

NAME                          STATUS   VOLUME           CAPACITY   ACCESS MODES   STORAGECLASS           AGE
testcheck-clog-claim-l2kljt   Bound    ob-pv-redolog1   30Gi       RWO            ob-local-ssd-redolog   6s

----kubectl describe pvc -n ob

Name:          testcheck-clog-claim-l2kljt
Namespace:     ob
StorageClass:  ob-local-ssd-redolog
Status:        Terminating (lasts 2m16s)
Volume:        ob-pv-redolog1
Labels:        <none>
Annotations:   pv.kubernetes.io/bind-completed: yes
               pv.kubernetes.io/bound-by-controller: yes
Finalizers:    [kubernetes.io/pvc-protection]
Capacity:      30Gi
Access Modes:  RWO
VolumeMode:    Filesystem
Used By:       test-check-fs-f5zzh8-jrgdbz-m64g7
Events:
  Type    Reason                Age    From                         Message
  Normal  WaitForFirstConsumer  2m23s  persistentvolume-controller  waiting for first consumer to be created before binding

root@crms-10-10-186-49[/opt/chart]#

I built a chart from the official obcluster.yaml. Here are all the files:

apiVersion: v1
kind: Secret
metadata:
  name: root-password
type: Opaque
data:
  password: UFBwUmhrWFdUSw==

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: {{ .Release.Name }}-local-ssd-data
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: {{ .Release.Name }}-local-ssd-redolog
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: {{ .Release.Name }}-local-ssd-log
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: {{ .Release.Name }}-pv-redolog0
spec:
  capacity:
    storage: 30Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: {{ .Release.Name }}-local-ssd-redolog
  hostPath:
    path: {{ printf "%s/%s" "/middleware/ob" .Release.Name }}/redolog
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: {{ .Release.Name }}-pv-data
spec:
  capacity:
    storage: 30Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: {{ .Release.Name }}-local-ssd-data
  hostPath:
    path: {{ printf "%s/%s" "/middleware/ob" .Release.Name }}/data
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: {{ .Release.Name }}-pv-redolog1
spec:
  capacity:
    storage: 30Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: {{ .Release.Name }}-local-ssd-redolog
  hostPath:
    path: {{ printf "%s/%s" "/middleware/ob" .Release.Name }}/redolog
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: {{ .Release.Name }}-pv-log
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: {{ .Release.Name }}-local-ssd-log
  hostPath:
    path: {{ printf "%s/%s" "/middleware/ob" .Release.Name }}/log

---
apiVersion: oceanbase.oceanbase.com/v1alpha1
kind: OBCluster
metadata:
  name: test
  namespace: {{ .Release.Namespace }}
spec:
  clusterName: obcluster
  clusterId: 1
  serviceAccount: "default"
  userSecrets:
    root: root-password
  topology:
    - zone: zone1
      replica: 1
  observer:
    image: xxxx/quay.io/oceanbase/oceanbase-cloud-native:4.2.1.6-106000012024042515
    resource:
      cpu: 2
      memory: 8Gi
    storage:
      dataStorage:
        storageClass: {{ .Release.Name }}-local-ssd-data
        size: 30Gi
      redoLogStorage:
        storageClass: {{ .Release.Name }}-local-ssd-redolog
        size: 30Gi
      logStorage:
        storageClass: {{ .Release.Name }}-local-ssd-log
        size: 10Gi
  parameters:
    - name: system_memory
      value: 1G
    - name: "__min_full_resource_pool_memory"
      value: "2147483648" # 2G

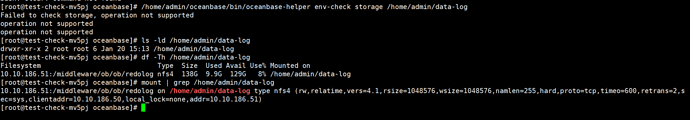

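The path backing the PV is an NFS export point; both worker nodes have NFS installed and share this storage path. Would that affect the execution of the command /home/admin/oceanbase/bin/oceanbase-helper env-check storage /home/admin/data-log? After I switched to a different PV path, everything worked.

Earlier I configured the StorageClass with automatic NFS provisioning and ran into problems as well. Are NFS-backed mount paths not supported here?

This feature does not exist in older image versions, so even with this error the deployment should still proceed. That said, I still recommend using a newer observer image.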

https://hub.docker.com/r/oceanbase/oceanbase-cloud-native/tags

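The image tags are listed at the link above.

I tried the latest image and the result is about the same.

When the folder backing the PV is a local NFS folder (worker node B exports /middleware, and worker node A mounts /middleware from worker node B, so files under /middleware are shared between the two nodes), the obcheck job fails with: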

Failed to check storage, operation not supported

operation not supported

operation not supported

When the folder backing the PV is an ordinary local folder, obcheck passes.

https://github.com/oceanbase/ob-operator

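Earlier I misread this as the image failing while running the job. This check was indeed added in one of our later versions. With NFS it probably won't work; take a look at the storage compatibility section of the project. We have tested a number of storage systems, and NFS does have some issues.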
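Since the check fails on NFS-backed paths, it can help to confirm which filesystem a PV's host directory actually lives on before deploying. The following is a minimal sketch, not part of ob-operator: the helper names fs_type_for and is_nfs_path are made up for illustration, and it assumes a Linux node where /proc/mounts is readable and mount points contain no spaces.

```shell
#!/bin/sh
# Hypothetical helpers (not part of ob-operator or kubectl).

# Print the filesystem type of the mount that contains the given path.
# $1: path to inspect
# $2: mounts table in /proc/mounts format (defaults to /proc/mounts)
fs_type_for() {
    path=$1
    mounts=${2:-/proc/mounts}
    awk -v p="$path" '
        {
            mp = $2
            # A mount point matches if it is "/", equals the path,
            # or is a parent directory of the path.
            if (mp == "/" || p == mp || index(p, mp "/") == 1) {
                # Keep the longest match; on ties the later entry wins,
                # because a later mount shadows an earlier one.
                if (length(mp) >= best) { best = length(mp); type = $3 }
            }
        }
        END { print type }
    ' "$mounts"
}

# Succeed (exit status 0) if the path sits on an NFS mount (nfs, nfs4, ...).
is_nfs_path() {
    case "$(fs_type_for "$@")" in
        nfs*) return 0 ;;
        *)    return 1 ;;
    esac
}
```

Run on the worker node itself (not inside a pod), a call such as is_nfs_path /middleware/ob/ob/redolog && echo "NFS-backed" would flag the directory from the PV above. On systems with GNU coreutils, stat -f -c %T followed by the path reports the filesystem type more directly.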

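OK. I have since deployed the OB cluster using local-path, and that works. However, if I uninstall the OB cluster and then deploy it again, startup fails because the original local-path directory already contains some data; if I manually delete the stored data under local-path, the deployment succeeds. Is there a way to handle this? If I have to switch to a new storage location every time I take the cluster down and redeploy, the data is lost, which amounts to deploying a brand-new cluster.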
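To see ahead of a redeploy which storage directories still hold data from the previous install, a small pre-flight check along these lines could be run on the node. This is only a sketch of the manual inspection described above, not an ob-operator feature; the function names are hypothetical, and the directories passed in must be replaced with whatever paths your PVs actually use. It only reports and never deletes anything.

```shell
#!/bin/sh
# Hypothetical pre-flight helpers (not part of ob-operator).

# Succeed (exit status 0) if the directory exists and contains at least
# one entry, hidden files included.
dir_has_data() {
    [ -d "$1" ] && [ -n "$(ls -A "$1" 2>/dev/null)" ]
}

# Print one line for every directory in the argument list that still
# holds data; print nothing for empty or missing directories.
report_leftovers() {
    for dir in "$@"; do
        if dir_has_data "$dir"; then
            echo "leftover data: $dir"
        fi
    done
}
```

For example, report_leftovers /middleware/ob/ob/data /middleware/ob/ob/redolog /middleware/ob/ob/log lists the directories that are not empty, which are the ones that made the redeploy fail in the scenario above.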