【 OB or other component 】obd

【 Version used 】3.0.1

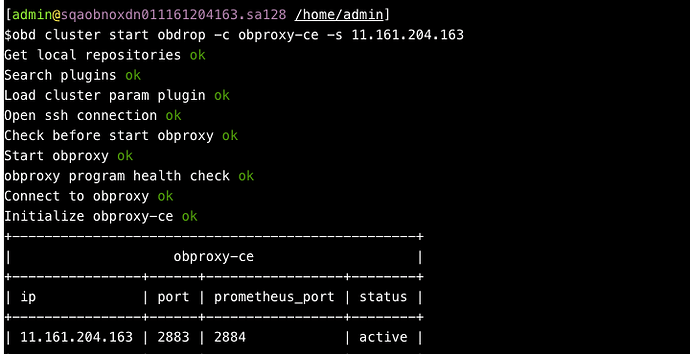

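【Problem description】Suspected obd bug: `obd cluster start <deploy name> -c <component name> -s <server>` is given a specific component and server IP, but obd restarts every component on every node of the cluster instead. In the log below, the command targets only obproxy-ce on 192.168.41.45, yet obd also runs start steps for observer, obshell, and ocp-server-ce.

【Log】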

```
[root@ocp-01 ~]# obd cluster start ocp_cluster -c obproxy-ce -s 192.168.41.45
Get local repositories ok
Load cluster param plugin ok
Cluster status check ok
Check before start ocp-server-ce ok
cluster scenario: None
Start observer ok
observer program health check ok
Connect to observer 192.168.41.43:2881 ok
obshell start ok
obshell program health check ok
start obproxy ok
obproxy program health check ok
Connect to obproxy ok
Connect to observer 192.168.41.43:2881 ok
Start ocp-server-ce ok
ocp-server-ce program health check ok
Stop ocp-server-ce ok
Start ocp-server-ce ok
ocp-server-ce program health check ok
Connect to ocp-server-ce ok
Connect to ocp-server-ce ok
+-------------------------------------------------------------------+
|                           ocp-server-ce                           |
+---------------------------+----------+-------------------+--------+
| url                       | username | password          | status |
+---------------------------+----------+-------------------+--------+
| http://192.168.41.74:8080 | admin    | OCP2024[eastsoft] | active |
| http://192.168.41.75:8080 | admin    | OCP2024[eastsoft] | active |
| http://192.168.41.76:8080 | admin    | OCP2024[eastsoft] | active |
+---------------------------+----------+-------------------+--------+
Connect to observer 192.168.41.43:2881 ok
Wait for observer init ok
+-------------------------------------------------+
|                  oceanbase-ce                   |
+---------------+---------+------+-------+--------+
| ip            | version | port | zone  | status |
+---------------+---------+------+-------+--------+
| 192.168.41.43 | 4.2.1.8 | 2881 | zone1 | ACTIVE |
| 192.168.41.44 | 4.2.1.8 | 2881 | zone2 | ACTIVE |
| 192.168.41.45 | 4.2.1.8 | 2881 | zone3 | ACTIVE |
+---------------+---------+------+-------+--------+
obclient -h192.168.41.43 -P2881 -uroot -p'g2p4Zc!UXJ/' -Doceanbase -A
cluster unique id: 401c5499-d7b7-595e-9c99-b4660bc71a02-193e343dcd2-08010204
Connect to obproxy ok
+-------------------------------------------------------------------+
|                            obproxy-ce                             |
+---------------+------+-----------------+-----------------+--------+
| ip            | port | prometheus_port | rpc_listen_port | status |
+---------------+------+-----------------+-----------------+--------+
| 192.168.41.43 | 2883 | 2884            | 2885            | active |
| 192.168.41.44 | 2883 | 2884            | 2885            | active |
| 192.168.41.45 | 2883 | 2884            | 2885            | active |
+---------------+------+-----------------+-----------------+--------+
obclient -h192.168.41.43 -P2883 -uroot@proxysys -p'P5fiaIH17k' -Doceanbase -A
succeed
Trace ID: 39fd11a0-c26d-11ef-ad1b-52540087cf30
If you want to view detailed obd logs, please run: obd display-trace 39fd11a0-c26d-11ef-ad1b-52540087cf30
```
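To make the reported behavior easy to check on other runs, here is a minimal Python sketch (not part of obd) that scans obd console output for start steps and lists any service started outside the expected scope. The regex assumes the step wording seen in the obd 3.0.1 log above ("Start observer ok", "obshell start ok", "start obproxy ok"); other obd versions may word these lines differently. The helper names `started_services` and `out_of_scope` are hypothetical.

```python
import re

# Assumption: matches start-step lines worded as in the obd 3.0.1 log above,
# e.g. "Start observer ok", "start obproxy ok", "obshell start ok".
START_LINE = re.compile(r"(?i)start (\S+) ok|(\S+) start ok")


def started_services(log_text: str) -> list[str]:
    """Return services obd reports starting, in first-seen order, without duplicates."""
    seen: dict[str, None] = {}  # dict keeps insertion order, acts as an ordered set
    for raw in log_text.splitlines():
        m = START_LINE.fullmatch(raw.strip())
        if m:
            seen[m.group(1) or m.group(2)] = None
    return list(seen)


def out_of_scope(log_text: str, expected: set[str]) -> list[str]:
    """Services that were started although they are not in the expected set."""
    return [s for s in started_services(log_text) if s not in expected]


if __name__ == "__main__":
    sample = """\
Check before start ocp-server-ce ok
Start observer ok
obshell start ok
start obproxy ok
Start ocp-server-ce ok
Stop ocp-server-ce ok
Start ocp-server-ce ok
"""
    # With `-c obproxy-ce`, only the obproxy start step would be expected.
    print(out_of_scope(sample, {"obproxy"}))
```

On this sample (lines copied from the log above) it prints `['observer', 'obshell', 'ocp-server-ce']`. Lines such as "Check before start ocp-server-ce ok" are deliberately not counted, because `fullmatch` requires the whole line to be a start step.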