【Environment】Test environment

【OB or other component】OB

【Version】4.3.4

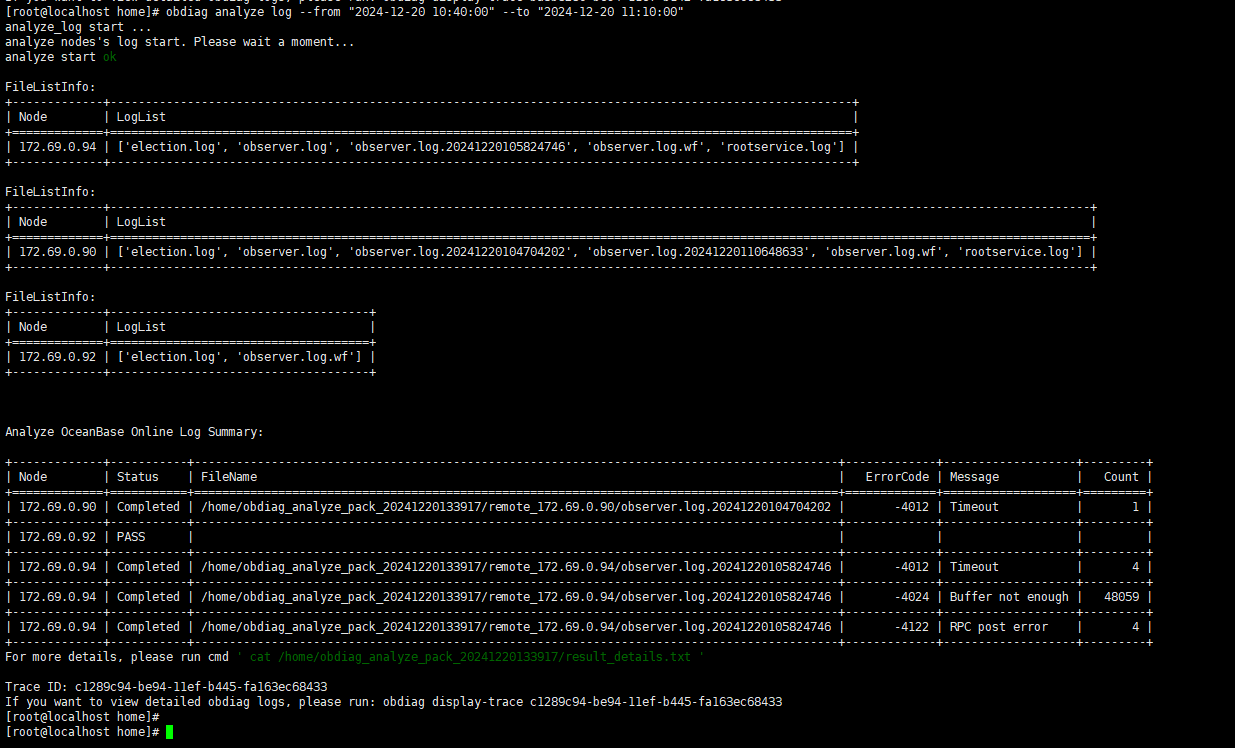

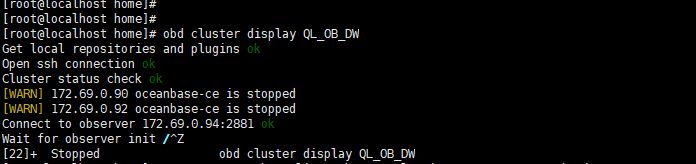

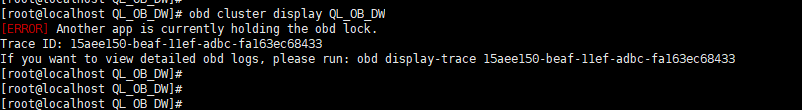

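【Problem description】After the cluster was deployed, the observer processes on the nodes stopped one after another. Running obdiag check reports the output below. What is causing this?

【Attachments and logs】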

[root@localhost home]# obdiag check

check start …

[WARN] ./check_report/ not exists. mkdir it!

[WARN] step_base ResultFalseException:ip:172.69.0.90 ,data_dir and log_dir_disk are on the same disk.

[WARN] step_base ResultFalseException:ip:172.69.0.92 ,data_dir and log_dir_disk are on the same disk.

[WARN] step_base ResultFalseException:ip:172.69.0.94 ,data_dir and log_dir_disk are on the same disk.

[WARN] TaskBase execute StepResultFailException: ip:172.69.0.90 ,data_dir and log_dir_disk are on the same disk.

[WARN] TaskBase execute StepResultFailException: ip:172.69.0.92 ,data_dir and log_dir_disk are on the same disk.

[WARN] TaskBase execute StepResultFailException: ip:172.69.0.94 ,data_dir and log_dir_disk are on the same disk.

[WARN] step_base ResultFalseException:There is 1 not_ACTIVE observer, please check as soon as possible.

[WARN] TaskBase execute StepResultFailException: There is 1 not_ACTIVE observer, please check as soon as possible.

[WARN] step_base ResultFalseException:There is 1 not_ACTIVE observer, please check as soon as possible.

[WARN] step_base ResultFalseException:There is 1 not_ACTIVE observer, please check as soon as possible.

[WARN] TaskBase execute StepResultFailException: There is 1 not_ACTIVE observer, please check as soon as possible.

[WARN] TaskBase execute StepResultFailException: There is 1 not_ACTIVE observer, please check as soon as possible.

[WARN] step_base ResultFalseException:The collection of statistical information related to tenants has issues… Please check the tenant_ids: 1

[WARN] TaskBase execute StepResultFailException: The collection of statistical information related to tenants has issues… Please check the tenant_ids: 1

[WARN] step_base ResultFalseException:The collection of statistical information related to tenants has issues… Please check the tenant_ids: 1

[WARN] TaskBase execute StepResultFailException: The collection of statistical information related to tenants has issues… Please check the tenant_ids: 1

[WARN] step_base ResultFalseException:The collection of statistical information related to tenants has issues… Please check the tenant_ids: 1

[WARN] TaskBase execute StepResultFailException: The collection of statistical information related to tenants has issues… Please check the tenant_ids: 1

[WARN] step_base ResultFalseException:tsar is not installed. we can not check tcp retransmission.

[WARN] step_base ResultFalseException:tsar is not installed. we can not check tcp retransmission.

[WARN] step_base ResultFalseException:tsar is not installed. we can not check tcp retransmission.

[WARN] TaskBase execute StepResultFailException: tsar is not installed. we can not check tcp retransmission.

[WARN] TaskBase execute StepResultFailException: tsar is not installed. we can not check tcp retransmission.

[WARN] TaskBase execute StepResultFailException: tsar is not installed. we can not check tcp retransmission.

[WARN] step_base ResultFalseException:ip_local_port_range_min : 32768. recommended: 3500

[WARN] step_base ResultFalseException:ip_local_port_range_min : 32768. recommended: 3500

[WARN] step_base ResultFalseException:ip_local_port_range_min : 32768. recommended: 3500

[WARN] TaskBase execute StepResultFalseException: ip_local_port_range_min : 32768. recommended: 3500 .

[WARN] TaskBase execute StepResultFalseException: ip_local_port_range_min : 32768. recommended: 3500 .

[WARN] step_base ResultFalseException:ip_local_port_range_max : 60999. recommended: 65535

[WARN] step_base ResultFalseException:ip_local_port_range_max : 60999. recommended: 65535

[WARN] TaskBase execute StepResultFalseException: ip_local_port_range_min : 32768. recommended: 3500 .

[WARN] step_base ResultFalseException:ip_local_port_range_max : 60999. recommended: 65535

[WARN] TaskBase execute StepResultFalseException: ip_local_port_range_max : 60999. recommended: 65535 .

[WARN] TaskBase execute StepResultFalseException: ip_local_port_range_max : 60999. recommended: 65535 .

[WARN] TaskBase execute StepResultFalseException: ip_local_port_range_max : 60999. recommended: 65535 .

[WARN] step_base ResultFalseException:On ip : 172.69.0.92, ulimit -u is 655350 . recommended: 655360.

[WARN] TaskBase execute StepResultFalseException: On ip : 172.69.0.92, ulimit -u is 655350 . recommended: 655360. .

Check obproxy finished. For more details, please run cmd ' cat ./check_report/obdiag_check_report_obproxy_2024-12-20-11-03-20.table '

Check observer finished. For more details, please run cmd ' cat ./check_report/obdiag_check_report_observer_2024-12-20-11-03-22.table '

Trace ID: f6c57c3e-be7e-11ef-8f92-fa163ec68433

If you want to view detailed obdiag logs, please run: obdiag display-trace f6c57c3e-be7e-11ef-8f92-fa163ec68433
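
Since most of the warnings above are repeated once per node and again by the TaskBase wrapper, a small script can collapse them into distinct messages, which makes the output easier to read. This is only a rough sketch: `summarize_warnings` and `WARN_RE` are names made up for illustration, and the pattern is inferred from the log lines pasted above, not from any documented obdiag output format.

```python
import re
from collections import Counter

# Pattern inferred from the pasted log lines (an assumption, not an obdiag spec):
# "[WARN] step_base ResultFalseException:<message>"
# "[WARN] TaskBase execute StepResultFailException: <message>"
WARN_RE = re.compile(
    r"^\[WARN\]\s+(?:step_base|TaskBase execute)\s+\w+Exception:\s*(.*)$"
)


def summarize_warnings(text):
    """Count distinct warning messages, ignoring which layer reported them."""
    counts = Counter()
    for line in text.splitlines():
        match = WARN_RE.match(line.strip())
        if match:
            # Drop trailing spaces and periods so the step_base and
            # TaskBase variants of the same message collapse into one key.
            message = match.group(1).strip().rstrip(" .")
            counts[message] += 1
    return counts
```

To use it, save the console output to a file, pass the file contents to `summarize_warnings`, and print the resulting counter. Lines that do not match the pattern, such as the `./check_report/ not exists` notice, are skipped.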