【 Environment 】 Production

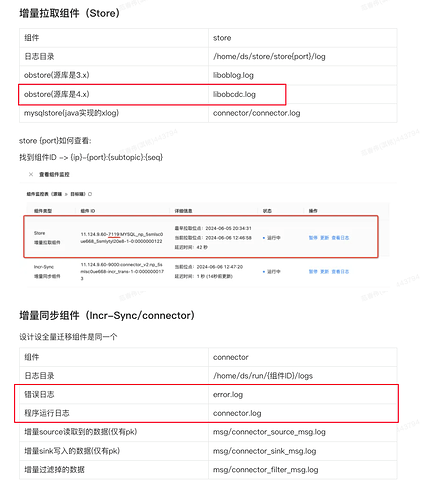

【 OB or other component 】 Incremental synchronization component: store

【 Version 】

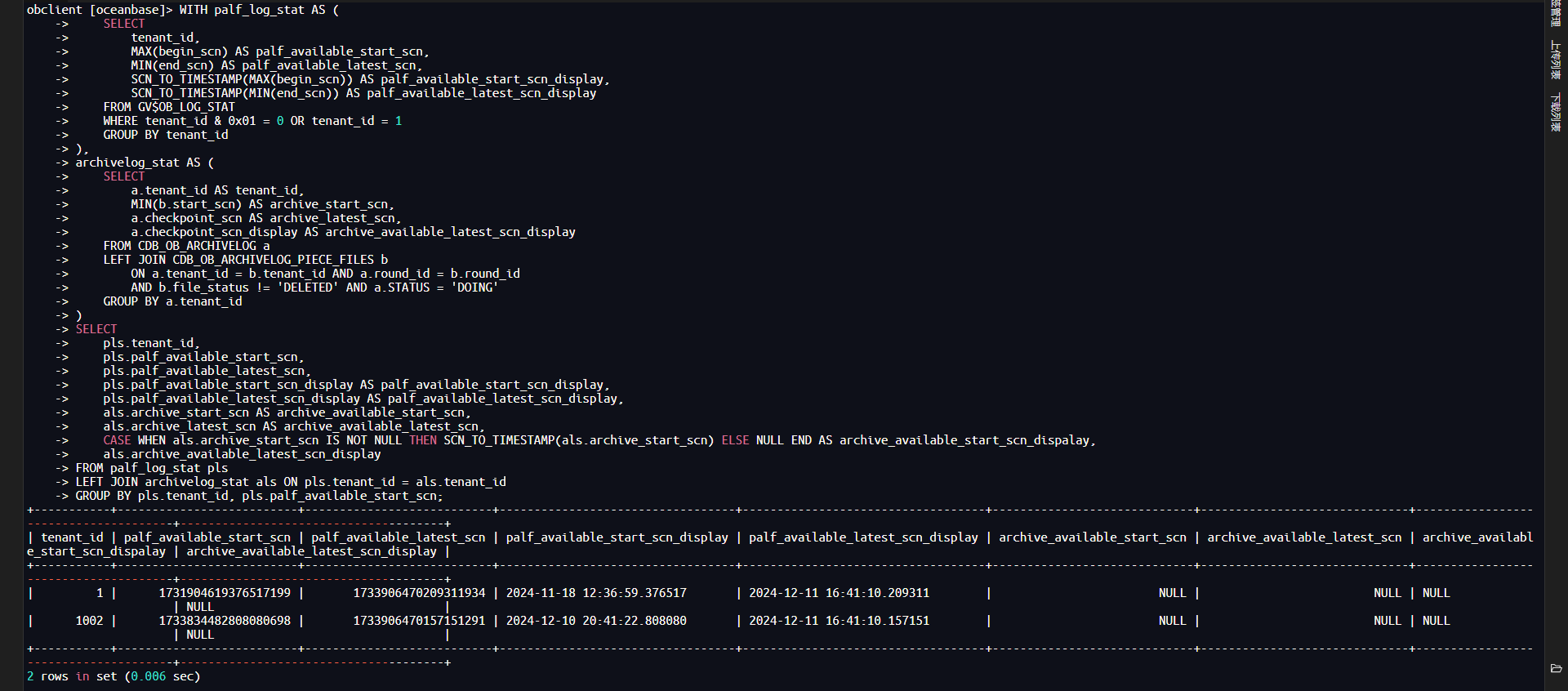

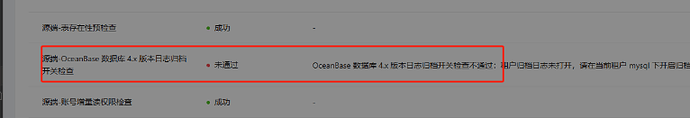

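【Problem description】 The current fetch position (checkpoint) of store, the incremental synchronization component of OceanBase Migration Service, suddenly stopped updating. Apart from pushing data, no other operations were performed on OB or on the sync task. Incremental synchronization had been running normally before, fetching logs and synchronizing data. Today I found that the latency keeps growing, the sync traffic is flat, and the store fetch position no longer advances.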

【Reproduction steps】 No operations were performed; the problem appeared suddenly.

【Attachments and logs】

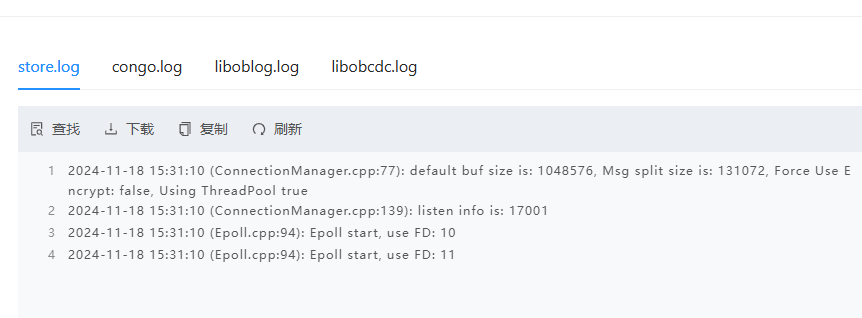

store.log

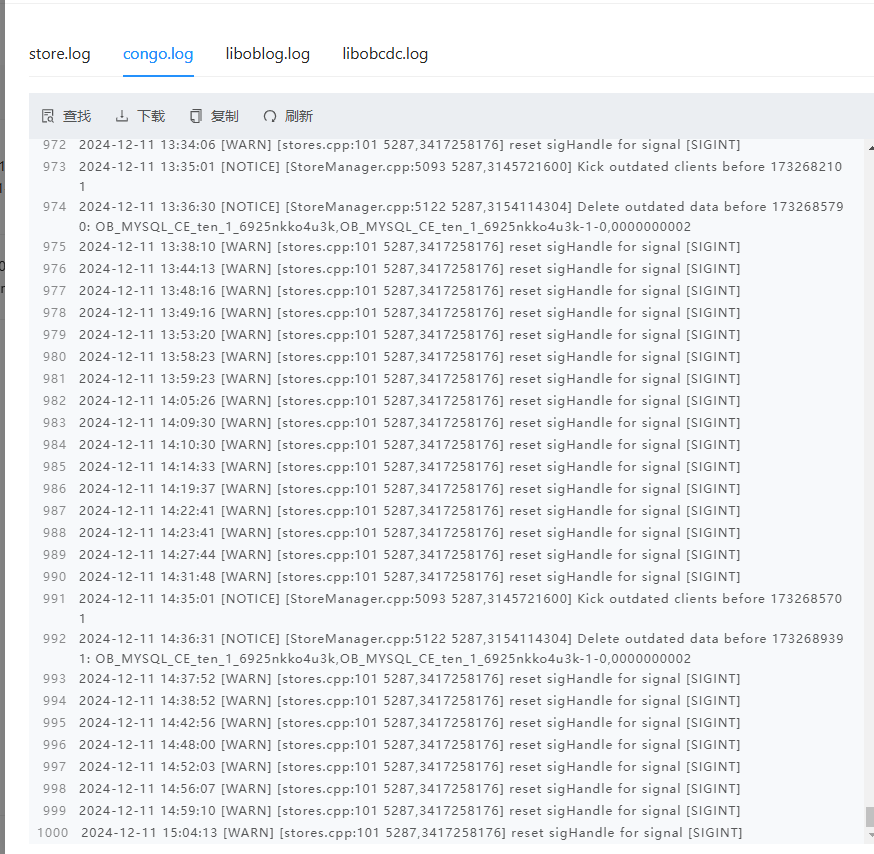

congo.log

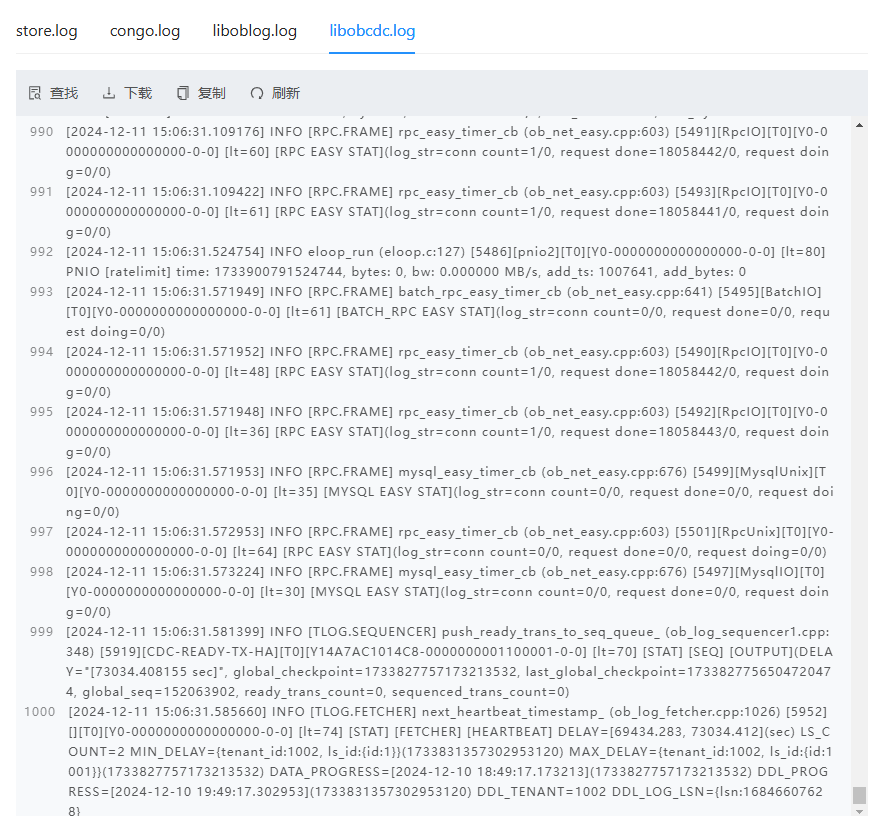

libobcdc.log

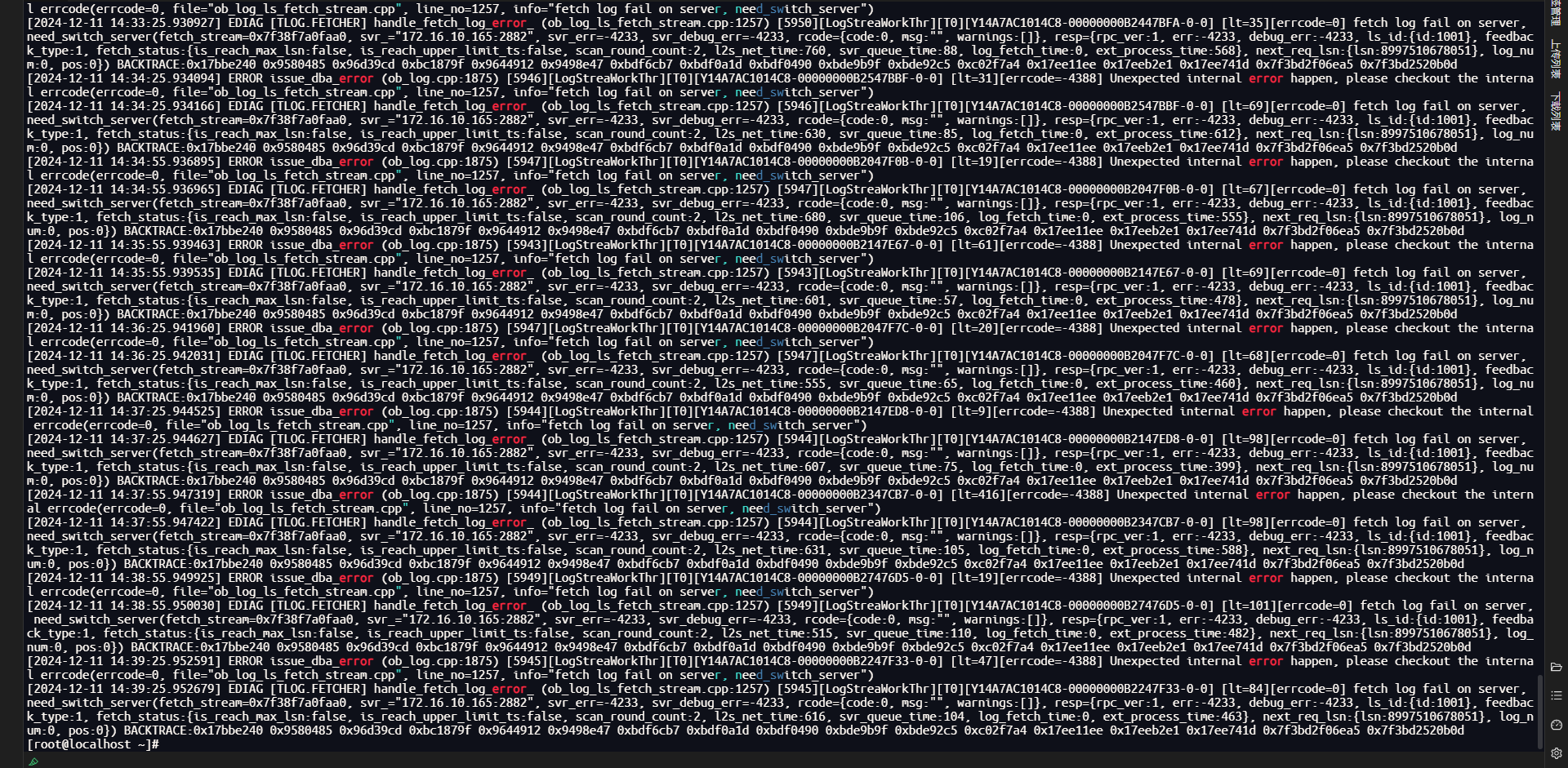

Server component log:

[2024-12-11 14:37:25.944627] EDIAG [TLOG.FETCHER] handle_fetch_log_error_ (ob_log_ls_fetch_stream.cpp:1257) [5944][LogStreaWorkThr][T0][Y14A7AC1014C8-00000000B2147ED8-0-0] [lt=98][errcode=0] fetch log fail on server, need_switch_server(fetch_stream=0x7f38f7a0faa0, svr_="172.16.10.165:2882", svr_err=-4233, svr_debug_err=-4233, rcode={code:0, msg:"", warnings:[]}, resp={rpc_ver:1, err:-4233, debug_err:-4233, ls_id:{id:1001}, feedback_type:1, fetch_status:{is_reach_max_lsn:false, is_reach_upper_limit_ts:false, scan_round_count:2, l2s_net_time:607, svr_queue_time:75, log_fetch_time:0, ext_process_time:399}, next_req_lsn:{lsn:8997510678051}, log_num:0, pos:0}) BACKTRACE:0x17bbe240 0x9580485 0x96d39cd 0xbc1879f 0x9644912 0x9498e47 0xbdf6cb7 0xbdf0a1d 0xbdf0490 0xbde9b9f 0xbde92c5 0xc02f7a4 0x17ee11ee 0x17eeb2e1 0x17ee741d 0x7f3bd2f06ea5 0x7f3bd2520b0d

[2024-12-11 14:37:55.947319] ERROR issue_dba_error (ob_log.cpp:1875) [5944][LogStreaWorkThr][T0][Y14A7AC1014C8-00000000B2347CB7-0-0] [lt=416][errcode=-4388] Unexpected internal error happen, please checkout the internal errcode(errcode=0, file="ob_log_ls_fetch_stream.cpp", line_no=1257, info="fetch log fail on server, need_switch_server")

[2024-12-11 14:37:55.947422] EDIAG [TLOG.FETCHER] handle_fetch_log_error_ (ob_log_ls_fetch_stream.cpp:1257) [5944][LogStreaWorkThr][T0][Y14A7AC1014C8-00000000B2347CB7-0-0] [lt=98][errcode=0] fetch log fail on server, need_switch_server(fetch_stream=0x7f38f7a0faa0, svr_="172.16.10.165:2882", svr_err=-4233, svr_debug_err=-4233, rcode={code:0, msg:"", warnings:[]}, resp={rpc_ver:1, err:-4233, debug_err:-4233, ls_id:{id:1001}, feedback_type:1, fetch_status:{is_reach_max_lsn:false, is_reach_upper_limit_ts:false, scan_round_count:2, l2s_net_time:631, svr_queue_time:105, log_fetch_time:0, ext_process_time:588}, next_req_lsn:{lsn:8997510678051}, log_num:0, pos:0}) BACKTRACE:0x17bbe240 0x9580485 0x96d39cd 0xbc1879f 0x9644912 0x9498e47 0xbdf6cb7 0xbdf0a1d 0xbdf0490 0xbde9b9f 0xbde92c5 0xc02f7a4 0x17ee11ee 0x17eeb2e1 0x17ee741d 0x7f3bd2f06ea5 0x7f3
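Both fetch failures above come from the same server (172.16.10.165:2882) and log stream (ls_id 1001), return svr_err=-4233, and request the same next_req_lsn (8997510678051) 30 seconds apart, which matches the fetch position no longer advancing. To check this over the whole log rather than a few lines, here is a minimal sketch. It assumes libobcdc.log writes one entry per line in the format quoted above; the regex and the `summarize` helper are hypothetical illustrations, not an OceanBase or OMS tool, and the script does not interpret what -4233 means.

```python
import re
import sys
from collections import Counter

# Hypothetical pattern (an assumption based only on the entries quoted above, not an
# official format spec) for a libobcdc "need_switch_server(...)" entry. Straight and
# curly quotes are both accepted around svr_ because forum copies may convert them.
ENTRY_RE = re.compile(
    r'^\[(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d+)\]'
    r'.*?need_switch_server\('
    r'.*?svr_=["\u201c](?P<svr>[^"\u201d]+)["\u201d]'
    r'.*?svr_err=(?P<err>-?\d+)'
    r'.*?ls_id:\{id:(?P<ls>\d+)\}'
    r'.*?next_req_lsn:\{lsn:(?P<lsn>\d+)\}'
)


def summarize(lines):
    """Group need_switch_server entries by (server, ls_id).

    Records the failure count, the error codes seen, the distinct next_req_lsn
    values and the first/last timestamps. The ERROR issue_dba_error line is
    skipped because its "need_switch_server" text is not followed by "(".
    """
    summary = {}
    for line in lines:
        m = ENTRY_RE.search(line)
        if not m:
            continue
        key = (m["svr"], int(m["ls"]))
        s = summary.setdefault(key, {"count": 0, "errs": Counter(), "lsns": set(),
                                     "first": m["ts"], "last": m["ts"]})
        s["count"] += 1
        s["errs"][int(m["err"])] += 1
        s["lsns"].add(int(m["lsn"]))
        s["last"] = m["ts"]
    return summary


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical): python summarize_fetch_errors.py libobcdc.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        for (svr, ls), s in summarize(f).items():
            # A single distinct LSN across repeated failures means the fetch is not advancing.
            stalled = s["count"] > 1 and len(s["lsns"]) == 1
            print(f"{svr} ls_id={ls}: {s['count']} failures {s['first']} .. {s['last']}, "
                  f"errs={dict(s['errs'])}, next_req_lsn={sorted(s['lsns'])}"
                  + (" (unchanged)" if stalled else ""))
```

If the output shows one (server, ls_id) pair with a single unchanged next_req_lsn, the fetcher is retrying the same position rather than progressing, which narrows the question to why that server keeps rejecting the request for that LSN.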