【Environment】Test environment

【OB or other components】observer only, version 4.3.3, 3-node deployment

【Version】4.3.3

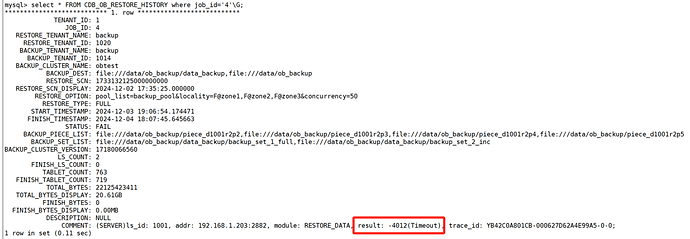

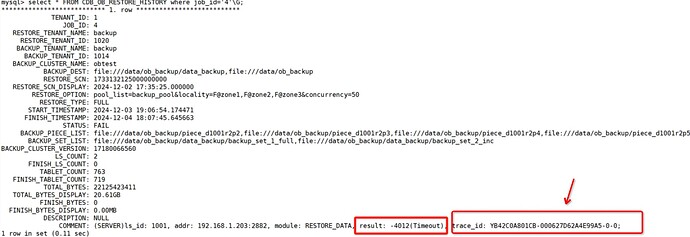

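【Problem description】I am restoring a tenant named backup to a specified point in time. After running for a day, the restore still failed with the error: result: -4012(Timeout)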

【Steps to reproduce】

Restore command: ALTER SYSTEM RESTORE backup FROM 'file:///data/ob_backup/data_backup,file:///data/ob_backup' UNTIL TIME='2024-12-02 17:35:25' WITH 'pool_list=backup_pool&locality=F@zone1,F@zone2,F@zone3&concurrency=50';


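Because the log retention period is too short, the logs were overwritten, and no logs related to this trace_id can be found any more. Only the following few other log entries remain: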

rootservice.log.20241204184732969:[2024-12-04 18:07:45.632135] WDIAG [RS.RESTORE] do_work (ob_restore_scheduler.cpp:117) [31604][T1_REST_SER][T1][YB42C0A801CB-0006272E633FA2B9-0-0] [lt=136][errcode=-4000] failed to process sys restore job(ret=-4000, ret=“OB_ERROR”, job_info={restore_key:{tenant_id:1, job_id:4}, initiator_job_id:0, initiator_tenant_id:1, tenant_id:1020, backup_tenant_id:1014, restore_type:{restore_type:“FULL”}, status:9, comment:"", restore_start_ts:1733224014174471, restoring_start_ts:0, restore_scn:{val:1733132125000000000, v:0}, consistent_scn:{val:1732877774627316000, v:0}, post_data_version:0, source_cluster_version:17180066560, source_data_version:17180066560, restore_option:“pool_list=backup_pool&locality=F@zone1,F@zone2,F@zone3&concurrency=50”, backup_dest:“file:///data/ob_backup/data_backup,file:///data/ob_backup”, description:"", tenant_name:“backup”, pool_list:“backup_pool”, locality:“F@zone1,F@zone2,F@zone3”, primary_zone:"", compat_mode:0, compatible:0, kms_info:"", kms_encrypt:false, concurrency:50, passwd_array:"", multi_restore_path_list:{backup_set_path_list:[“file:///data/ob_backup/data_backup/backup_set_1_full”, “file:///data/ob_backup/data_backup/backup_set_2_inc”], backup_piece_path_list:[“file:///data/ob_backup/piece_d1001r2p2”, “file:///data/ob_backup/piece_d1001r2p3”, “file:///data/ob_backup/piece_d1001r2p4”, “file:///data/ob_backup/piece_d1001r2p5”], log_path_list:[“file:///data/ob_backup”]}, white_list:{table_items:[]}, recover_table:false, using_complement_log:false, backup_compatible:4})

rootservice.log.20241204184732969:[2024-12-04 18:07:45.944628] INFO [RS.RESTORE] tenant_restore_finish (ob_restore_scheduler.cpp:762) [31604][T1_REST_SER][T1][YB42C0A801CB-0006272E633FA2BC-0-0] [lt=109] [RESTORE] restore tenant finish(ret=0, job_info={restore_key:{tenant_id:1, job_id:4}, initiator_job_id:0, initiator_tenant_id:1, tenant_id:1020, backup_tenant_id:1014, restore_type:{restore_type:“FULL”}, status:8, comment:“ROOTSERVICE : OB_ERROR(-4000) on “192.168.1.203:2882” with traceid YB42C0A801CB-0006272E633FA2B9-0-0”, restore_start_ts:1733224014174471, restoring_start_ts:0, restore_scn:{val:1733132125000000000, v:0}, consistent_scn:{val:1732877774627316000, v:0}, post_data_version:0, source_cluster_version:17180066560, source_data_version:17180066560, restore_option:“pool_list=backup_pool&locality=F@zone1,F@zone2,F@zone3&concurrency=50”, backup_dest:“file:///data/ob_backup/data_backup,file:///data/ob_backup”, description:"", tenant_name:“backup”, pool_list:“backup_pool”, locality:“F@zone1,F@zone2,F@zone3”, primary_zone:"", compat_mode:0, compatible:0, kms_info:"", kms_encrypt:false, concurrency:50, passwd_array:"", multi_restore_path_list:{backup_set_path_list:[“file:///data/ob_backup/data_backup/backup_set_1_full”, “file:///data/ob_backup/data_backup/backup_set_2_inc”], backup_piece_path_list:[“file:///data/ob_backup/piece_d1001r2p2”, “file:///data/ob_backup/piece_d1001r2p3”, “file:///data/ob_backup/piece_d1001r2p4”, “file:///data/ob_backup/piece_d1001r2p5”], log_path_list:[“file:///data/ob_backup”]}, white_list:{table_items:[]}, recover_table:false, using_complement_log:false, backup_compatible:4})

rootservice.log.20241204184732969:[2024-12-04 18:07:45.944942] INFO [RS.RESTORE] process_sys_restore_job (ob_restore_scheduler.cpp:165) [31604][T1_REST_SER][T1][YB42C0A801CB-0006272E633FA2BC-0-0] [lt=41] [RESTORE] doing restore(ret=0, job={restore_key:{tenant_id:1, job_id:4}, initiator_job_id:0, initiator_tenant_id:1, tenant_id:1020, backup_tenant_id:1014, restore_type:{restore_type:“FULL”}, status:8, comment:“ROOTSERVICE : OB_ERROR(-4000) on “192.168.1.203:2882” with traceid YB42C0A801CB-0006272E633FA2B9-0-0”, restore_start_ts:1733224014174471, restoring_start_ts:0, restore_scn:{val:1733132125000000000, v:0}, consistent_scn:{val:1732877774627316000, v:0}, post_data_version:0, source_cluster_version:17180066560, source_data_version:17180066560, restore_option:“pool_list=backup_pool&locality=F@zone1,F@zone2,F@zone3&concurrency=50”, backup_dest:“file:///data/ob_backup/data_backup,file:///data/ob_backup”, description:"", tenant_name:“backup”, pool_list:“backup_pool”, locality:“F@zone1,F@zone2,F@zone3”, primary_zone:"", compat_mode:0, compatible:0, kms_info:"", kms_encrypt:false, concurrency:50, passwd_array:"", multi_restore_path_list:{backup_set_path_list:[“file:///data/ob_backup/data_backup/backup_set_1_full”, “file:///data/ob_backup/data_backup/backup_set_2_inc”], backup_piece_path_list:[“file:///data/ob_backup/piece_d1001r2p2”, “file:///data/ob_backup/piece_d1001r2p3”, “file:///data/ob_backup/piece_d1001r2p4”, “file:///data/ob_backup/piece_d1001r2p5”], log_path_list:[“file:///data/ob_backup”]}, white_list:{table_items:[]}, recover_table:false, using_complement_log:false, backup_compatible:4})
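When sifting through entries like the three above, it can help to pull out the timestamp, level, trace ID, and errcode mechanically. The following is a minimal sketch, assuming the header layout seen in the quoted lines; the regular expression and the `parse_log_line` helper are illustrative and are not part of any OceanBase tool.

```python
import re

# Assumed header layout, inferred only from the log lines quoted in this
# thread; this is not an official format specification.
LOG_HEADER = re.compile(
    r"\[(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{6})\]\s+"
    r"(?P<level>[A-Z]+)\s+"
    r"\[(?P<module>[^\]]+)\]\s+"
    r"(?P<func>\w+)\s+"
    r"\((?P<src>[^)]+)\)\s+"
    r"\[(?P<tid>\d+)\]\[(?P<thread>[^\]]+)\]\[(?P<tenant>[^\]]+)\]"
    r"\[(?P<trace_id>[^\]]+)\]"
)
ERRCODE = re.compile(r"\[errcode=(?P<errcode>-?\d+)\]")


def parse_log_line(line):
    """Return the header fields of one log line, or None if it does not match."""
    m = LOG_HEADER.search(line)
    if m is None:
        return None
    fields = m.groupdict()
    err = ERRCODE.search(line)
    # INFO lines carry no errcode tag, so this stays None for them.
    fields["errcode"] = int(err.group("errcode")) if err else None
    return fields


# Shortened copy of the first WDIAG line quoted above.
sample = (
    "rootservice.log.20241204184732969:[2024-12-04 18:07:45.632135] WDIAG "
    "[RS.RESTORE] do_work (ob_restore_scheduler.cpp:117) "
    "[31604][T1_REST_SER][T1][YB42C0A801CB-0006272E633FA2B9-0-0] "
    "[lt=136][errcode=-4000] failed to process sys restore job(ret=-4000)"
)
parsed = parse_log_line(sample)
print(parsed["ts"], parsed["level"], parsed["trace_id"], parsed["errcode"])
```

Filtering on `errcode is not None` is one way to separate the WDIAG failure entry from the two INFO entries that only echo the job state.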

I am rerunning the restore to see whether the problem reproduces. If it does, I will capture the relevant logs.

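OK. Are the OB versions of the backed-up tenant and the restored tenant the same?

Also, please post the layout of the backup directory:

tree backup-directory

Yes, they are the same. Both are OceanBase_CE 4.3.3.0.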

├── check_file

│ └── 1014_connect_file_20241129T170256.obbak

├── data_backup

│ ├── backup_set_1_full

│ │ ├── backup_set_1_full_20241129T172104_20241129T173229.obbak

│ │ ├── infos

│ │ │ ├── diagnose_info.obbak

│ │ │ ├── locality_info.obbak

│ │ │ ├── major_data_info_turn_1

│ │ │ │ ├── tenant_major_data_macro_block_index.0.obbak

│ │ │ │ └── tenant_major_data_meta_index.0.obbak

│ │ │ ├── meta_info

│ │ │ │ ├── ls_attr_info.1.obbak

│ │ │ │ └── ls_meta_infos.obbak

│ │ │ ├── table_list

│ │ │ │ ├── table_list.1732872749889697000.1.obbak

│ │ │ │ └── table_list_meta_info.1732872749889697000.obbak

│ │ │ └── user_data_info_turn_1

│ │ │ └── tablet_log_stream_info.obbak

│ │ ├── logstream_1

│ │ │ ├── fused_meta_info_turn_1_retry_0

│ │ │ │ └── tablet_info.1.obbak

│ │ │ ├── meta_info_turn_1_retry_0

│ │ │ │ ├── ls_meta_info.obbak

│ │ │ │ └── tablet_info.1.obbak

│ │ │ ├── sys_data_turn_1_retry_0

│ │ │ │ ├── index_tree.0.obbak

│ │ │ │ ├── macro_block_data.0.obbak

│ │ │ │ ├── macro_block_index.obbak

│ │ │ │ ├── meta_index.obbak

│ │ │ │ └── meta_tree.0.obbak

│ │ │ └── user_data_turn_1_retry_1

│ │ │ ├── index_tree.0.obbak

│ │ │ ├── macro_block_data.0.obbak

│ │ │ ├── macro_block_index.obbak

│ │ │ ├── meta_index.obbak

│ │ │ └── meta_tree.0.obbak

│ │ ├── logstream_1001

│ │ │ ├── fused_meta_info_turn_1_retry_0

│ │ │ │ └── tablet_info.1.obbak

│ │ │ ├── meta_info_turn_1_retry_1

│ │ │ │ ├── ls_meta_info.obbak

│ │ │ │ └── tablet_info.1.obbak

│ │ │ ├── sys_data_turn_1_retry_1

│ │ │ │ ├── index_tree.0.obbak

│ │ │ │ ├── macro_block_data.0.obbak

│ │ │ │ ├── macro_block_index.obbak

│ │ │ │ ├── meta_index.obbak

│ │ │ │ └── meta_tree.0.obbak

│ │ │ └── user_data_turn_1_retry_0

│ │ │ ├── index_tree.0.obbak

│ │ │ ├── index_tree.1.obbak

│ │ │ ├── index_tree.2.obbak

│ │ │ ├── index_tree.3.obbak

│ │ │ ├── index_tree.4.obbak

│ │ │ ├── index_tree.5.obbak

│ │ │ ├── macro_block_data.0.obbak

│ │ │ ├── macro_block_data.1.obbak

│ │ │ ├── macro_block_data.2.obbak

│ │ │ ├── macro_block_data.3.obbak

│ │ │ ├── macro_block_data.4.obbak

│ │ │ ├── macro_block_data.5.obbak

│ │ │ ├── macro_block_index.obbak

│ │ │ ├── meta_index.obbak

│ │ │ ├── meta_tree.0.obbak

│ │ │ ├── meta_tree.1.obbak

│ │ │ ├── meta_tree.2.obbak

│ │ │ ├── meta_tree.3.obbak

│ │ │ ├── meta_tree.4.obbak

│ │ │ └── meta_tree.5.obbak

│ │ ├── single_backup_set_info.obbak

│ │ └── tenant_backup_set_infos.obbak

│ ├── backup_set_2_inc

│ │ ├── backup_set_2_inc_20241129T185507_20241129T190504.obbak

│ │ ├── infos

│ │ │ ├── diagnose_info.obbak

│ │ │ ├── locality_info.obbak

│ │ │ ├── major_data_info_turn_1

│ │ │ │ ├── tenant_major_data_macro_block_index.0.obbak

│ │ │ │ └── tenant_major_data_meta_index.0.obbak

│ │ │ ├── meta_info

│ │ │ │ ├── ls_attr_info.1.obbak

│ │ │ │ └── ls_meta_infos.obbak

│ │ │ ├── table_list

│ │ │ │ ├── table_list.1732878304451166000.1.obbak

│ │ │ │ └── table_list_meta_info.1732878304451166000.obbak

│ │ │ └── user_data_info_turn_1

│ │ │ └── tablet_log_stream_info.obbak

│ │ ├── logstream_1

│ │ │ ├── fused_meta_info_turn_1_retry_0

│ │ │ │ └── tablet_info.1.obbak

│ │ │ ├── meta_info_turn_1_retry_0

│ │ │ │ ├── ls_meta_info.obbak

│ │ │ │ └── tablet_info.1.obbak

│ │ │ ├── sys_data_turn_1_retry_0

│ │ │ │ ├── index_tree.0.obbak

│ │ │ │ ├── macro_block_data.0.obbak

│ │ │ │ ├── macro_block_index.obbak

│ │ │ │ ├── meta_index.obbak

│ │ │ │ └── meta_tree.0.obbak

│ │ │ └── user_data_turn_1_retry_0

│ │ │ ├── index_tree.0.obbak

│ │ │ ├── macro_block_data.0.obbak

│ │ │ ├── macro_block_index.obbak

│ │ │ ├── meta_index.obbak

│ │ │ └── meta_tree.0.obbak

│ │ ├── logstream_1001

│ │ │ ├── fused_meta_info_turn_1_retry_0

│ │ │ │ └── tablet_info.1.obbak

│ │ │ ├── meta_info_turn_1_retry_1

│ │ │ │ ├── ls_meta_info.obbak

│ │ │ │ └── tablet_info.1.obbak

│ │ │ ├── sys_data_turn_1_retry_1

│ │ │ │ ├── index_tree.0.obbak

│ │ │ │ ├── macro_block_data.0.obbak

│ │ │ │ ├── macro_block_index.obbak

│ │ │ │ ├── meta_index.obbak

│ │ │ │ └── meta_tree.0.obbak

│ │ │ └── user_data_turn_1_retry_0

│ │ │ ├── index_tree.0.obbak

│ │ │ ├── index_tree.1.obbak

│ │ │ ├── index_tree.2.obbak

│ │ │ ├── index_tree.3.obbak

│ │ │ ├── index_tree.4.obbak

│ │ │ ├── index_tree.5.obbak

│ │ │ ├── macro_block_data.0.obbak

│ │ │ ├── macro_block_data.1.obbak

│ │ │ ├── macro_block_data.2.obbak

│ │ │ ├── macro_block_data.3.obbak

│ │ │ ├── macro_block_data.4.obbak

│ │ │ ├── macro_block_data.5.obbak

│ │ │ ├── macro_block_index.obbak

│ │ │ ├── meta_index.obbak

│ │ │ ├── meta_tree.0.obbak

│ │ │ ├── meta_tree.1.obbak

│ │ │ ├── meta_tree.2.obbak

│ │ │ ├── meta_tree.3.obbak

│ │ │ ├── meta_tree.4.obbak

│ │ │ └── meta_tree.5.obbak

│ │ ├── single_backup_set_info.obbak

│ │ └── tenant_backup_set_infos.obbak

│ ├── backup_sets

│ │ ├── backup_set_1_full_end_success_20241129T173229.obbak

│ │ ├── backup_set_1_full_start.obbak

│ │ ├── backup_set_2_inc_end_success_20241129T190504.obbak

│ │ └── backup_set_2_inc_start.obbak

│ ├── check_file

│ │ └── 1014_connect_file_20241129T171930.obbak

│ └── format.obbak

├── format.obbak

├── piece_d1001r1p1

│ ├── checkpoint

│ │ ├── checkpoint_info.0.obarc

│ │ └── checkpoint_info.1732871260130389000.obarc

│ ├── file_info.obarc

│ ├── logstream_1

│ │ ├── file_info.obarc

│ │ ├── log

│ │ │ └── 42.obarc

│ │ └── schema_meta

│ │ └── 1732871261969898000.obarc

│ ├── logstream_1001

│ │ ├── file_info.obarc

│ │ └── log

│ │ └── 2816.obarc

│ ├── piece_d1001r1p1_20241129T170739_20241129T170740.obarc

│ ├── single_piece_info.obarc

│ └── tenant_archive_piece_infos.obarc

├── piece_d1001r2p2

│ ├── checkpoint

│ │ ├── checkpoint_info.0.obarc

│ │ └── checkpoint_info.1732957790532878004.obarc

│ ├── file_info.obarc

│ ├── logstream_1

│ │ ├── file_info.obarc

│ │ ├── log

│ │ │ ├── 42.obarc

│ │ │ ├── 43.obarc

│ │ │ └── 44.obarc

│ │ └── schema_meta

│ │ └── 1732871391081499000.obarc

│ ├── logstream_1001

│ │ ├── file_info.obarc

│ │ └── log

│ │ ├── 2816.obarc

│ │ └── 2817.obarc

│ ├── piece_d1001r2p2_20241129T170950_20241130T170950.obarc

│ ├── single_piece_info.obarc

│ └── tenant_archive_piece_infos.obarc

├── piece_d1001r2p3

│ ├── checkpoint

│ │ ├── checkpoint_info.0.obarc

│ │ └── checkpoint_info.1733044190074656004.obarc

│ ├── file_info.obarc

│ ├── logstream_1

│ │ ├── file_info.obarc

│ │ ├── log

│ │ │ ├── 44.obarc

│ │ │ ├── 45.obarc

│ │ │ └── 46.obarc

│ │ └── schema_meta

│ │ └── 1732957791960758000.obarc

│ ├── logstream_1001

│ │ ├── file_info.obarc

│ │ └── log

│ │ ├── 2817.obarc

│ │ ├── 2818.obarc

│ │ └── 2819.obarc

│ ├── piece_d1001r2p3_20241130T170950_20241201T170950.obarc

│ ├── single_piece_info.obarc

│ └── tenant_archive_piece_infos.obarc

├── piece_d1001r2p4

│ ├── checkpoint

│ │ ├── checkpoint_info.0.obarc

│ │ └── checkpoint_info.1733130585674149001.obarc

│ ├── file_info.obarc

│ ├── logstream_1

│ │ ├── file_info.obarc

│ │ ├── log

│ │ │ ├── 46.obarc

│ │ │ ├── 47.obarc

│ │ │ └── 48.obarc

│ │ └── schema_meta

│ │ └── 1733044192650905000.obarc

│ ├── logstream_1001

│ │ ├── file_info.obarc

│ │ └── log

│ │ ├── 2819.obarc

│ │ └── 2820.obarc

│ ├── piece_d1001r2p4_20241201T170950_20241202T170950.obarc

│ ├── single_piece_info.obarc

│ └── tenant_archive_piece_infos.obarc

├── piece_d1001r2p5

│ ├── checkpoint

│ │ ├── checkpoint_info.0.obarc

│ │ └── checkpoint_info.1733133833390963000.obarc

│ ├── logstream_1

│ │ ├── log

│ │ │ └── 48.obarc

│ │ └── schema_meta

│ │ └── 1733130593391253000.obarc

│ ├── logstream_1001

│ │ └── log

│ │ └── 2820.obarc

│ └── tenant_archive_piece_infos.obarc

├── pieces

│ ├── piece_d1001r1p1_end_20241129T170740.obarc

│ ├── piece_d1001r1p1_start_20241129T170739.obarc

│ ├── piece_d1001r2p2_end_20241130T170950.obarc

│ ├── piece_d1001r2p2_start_20241129T170950.obarc

│ ├── piece_d1001r2p3_end_20241201T170950.obarc

│ ├── piece_d1001r2p3_start_20241130T170950.obarc

│ ├── piece_d1001r2p4_end_20241202T170950.obarc

│ ├── piece_d1001r2p4_start_20241201T170950.obarc

│ └── piece_d1001r2p5_start_20241202T170950.obarc

└── rounds

├── round_d1001r1_end.obarc

├── round_d1001r1_start.obarc

└── round_d1001r2_start.obarc
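The marker files under `pieces` encode each archive piece's start and end time, so the listing can be cross-checked against the `backup_piece_path_list` in the logs. Below is a rough sketch, assuming the timestamps in the file names are local times and that a piece is needed when its range overlaps the window from the end of `backup_set_2_inc` (20241129T190504, from its marker file name) to the restore target. This simple overlap rule is an illustration only, not OceanBase's actual piece-selection logic, and `pieces_needed` is a hypothetical helper.

```python
import re
from datetime import datetime

# Marker file names copied from the `pieces` directory in the listing above.
PIECE_MARKERS = [
    "piece_d1001r1p1_end_20241129T170740.obarc",
    "piece_d1001r1p1_start_20241129T170739.obarc",
    "piece_d1001r2p2_end_20241130T170950.obarc",
    "piece_d1001r2p2_start_20241129T170950.obarc",
    "piece_d1001r2p3_end_20241201T170950.obarc",
    "piece_d1001r2p3_start_20241130T170950.obarc",
    "piece_d1001r2p4_end_20241202T170950.obarc",
    "piece_d1001r2p4_start_20241201T170950.obarc",
    "piece_d1001r2p5_start_20241202T170950.obarc",
]
MARKER = re.compile(r"^(piece_d\d+r\d+p\d+)_(start|end)_(\d{8}T\d{6})\.obarc$")


def piece_ranges(markers):
    """Map piece name -> {'start': datetime or None, 'end': datetime or None}."""
    ranges = {}
    for name in markers:
        m = MARKER.match(name)
        if m is None:
            continue
        piece, kind, stamp = m.groups()
        entry = ranges.setdefault(piece, {"start": None, "end": None})
        entry[kind] = datetime.strptime(stamp, "%Y%m%dT%H%M%S")
    return ranges


def pieces_needed(markers, backup_end, restore_to):
    """Pieces whose time range overlaps [backup_end, restore_to]."""
    needed = []
    for piece, r in sorted(piece_ranges(markers).items(), key=lambda kv: kv[0]):
        if r["start"] is None:
            continue
        # A piece without an end marker is still open; treat it as unbounded.
        end = r["end"] or datetime.max
        if r["start"] <= restore_to and end >= backup_end:
            needed.append(piece)
    return needed


needed = pieces_needed(
    PIECE_MARKERS,
    backup_end=datetime(2024, 12, 2, 0, 0, 0).replace(month=11, day=29, hour=19, minute=5, second=4),
    restore_to=datetime(2024, 12, 2, 17, 35, 25),
)
print(needed)
```

Under these assumptions the result is pieces p2 through p5, which agrees with the `backup_piece_path_list` recorded in the restore job, so the piece set itself looks complete for the requested time.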

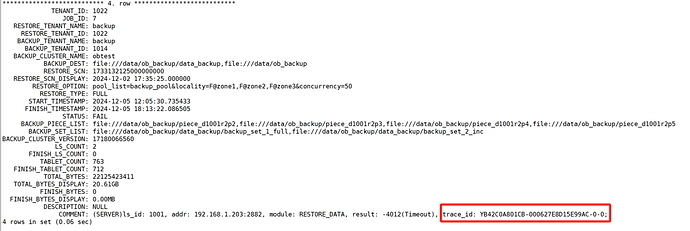

The error has reproduced:

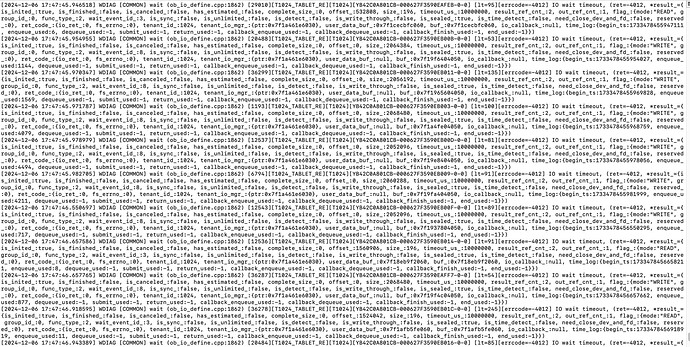

Error logs:

[root@ob203 log]# grep 'YB42C0A801CB-000627E8D15E99AC-0-0' observer.*

observer.log.20241205181502178:[2024-12-05 18:12:50.888127] INFO [SHARE] do_update_column_ (ob_inner_table_operator.cpp:906) [17899][T1022_HAService][T1022][YB42C0A801CB-000627E8CF7E9631-0-0] [lt=72] update one column(key={tenant_id:1022, job_id:7, ls_id:{id:1001}, addr:“192.168.1.203:2882”}, assignments=“status = 13, finish_tablet_count = 0, result = -4012, trace_id = ‘YB42C0A801CB-000627E8D15E99AC-0-0’, comment = ‘(SERVER)ls_id: 1001, addr: 192.168.1.203:2882, module: RESTORE_DATA, result: -4012(Timeout), trace_id: YB42C0A801CB-000627E8D15E99AC-0-0’”, affected_rows=1, sql=update __all_ls_restore_progress set status = 13, finish_tablet_count = 0, result = -4012, trace_id = ‘YB42C0A801CB-000627E8D15E99AC-0-0’, comment = ‘(SERVER)ls_id: 1001, addr: 192.168.1.203:2882, module: RESTORE_DATA, result: -4012(Timeout), trace_id: YB42C0A801CB-000627E8D15E99AC-0-0’ where tenant_id = 1022 AND job_id = 7 AND ls_id = 1001 AND svr_ip = ‘192.168.1.203’ AND svr_port = 2882)

[root@ob203 log]# grep 'YB42C0A801CB-000627E8D15E99AC-0-0' rootservice.*

rootservice.log:[2024-12-05 18:13:22.098239] INFO [SHARE] do_insert_row_ (ob_inner_table_operator.cpp:657) [17961][T1021_REST_SER][T1021][YB42C0A801CB-000627E8CD1E9B9C-0-0] [lt=27] insert one row(row={key:{tenant_id:1022, job_id:7}, start_time:1733371530735433, finish_time:1733393602086505, restore_type:{restore_type:“FULL”}, restore_tenant_name:“backup”, restore_tenant_id:1022, backup_tenant_id:1014, backup_dest:file:///data/ob_backup/data_backup,file:///data/ob_backup, restore_scn:{val:1733132125000000000, v:0}, restore_option:pool_list=backup_pool&locality=F@zone1,F@zone2,F@zone3&concurrency=50, table_list:, remap_table_list:, database_list:, remap_database_list:, backup_piece_list:file:///data/ob_backup/piece_d1001r2p2,file:///data/ob_backup/piece_d1001r2p3,file:///data/ob_backup/piece_d1001r2p4,file:///data/ob_backup/piece_d1001r2p5, backup_set_list:file:///data/ob_backup/data_backup/backup_set_1_full,file:///data/ob_backup/data_backup/backup_set_2_inc, backup_cluster_version:17180066560, ls_count:2, finish_ls_count:0, tablet_count:763, finish_tablet_count:712, total_bytes:22125423411, finish_bytes:0, status:1, description:, comment:"(SERVER)ls_id: 1001, addr: 192.168.1.203:2882, module: RESTORE_DATA, result: -4012(Timeout), trace_id: YB42C0A801CB-000627E8D15E99AC-0-0;", initiator_job_id:7, initiator_tenant_id:1}, affected_rows=1, sql=INSERT INTO _all_restore_job_history (tenant_id, job_id, start_time, finish_time, initiator_job_id, initiator_tenant_id, restore_tenant_name, backup_tenant_name, backup_cluster_name, restore_tenant_id, backup_tenant_id, backup_dest, restore_scn, restore_option, table_list, remap_table_list, database_list, remap_database_list, backup_piece_list, backup_set_list, backup_cluster_version, ls_count, finish_ls_count, tablet_count, finish_tablet_count, total_bytes, finish_bytes, status, restore_type, description, comment) VALUES (1022, 7, usec_to_time(1733371530735433), usec_to_time(1733393602086505), 7, 1, ‘backup’, ‘backup’, ‘obtest’, 
1022, 1014, ‘file:///data/ob_backup/data_backup,file:///data/ob_backup’, 1733132125000000000, ‘pool_list=backup_pool&locality=F@zone1,F@zone2,F@zone3&concurrency=50’, NULL, NULL, NULL, NULL, ‘file:///data/ob_backup/piece_d1001r2p2,file:///data/ob_backup/piece_d1001r2p3,file:///data/ob_backup/piece_d1001r2p4,file:///data/ob_backup/piece_d1001r2p5’, ‘file:///data/ob_backup/data_backup/backup_set_1_full,file:///data/ob_backup/data_backup/backup_set_2_inc’, 17180066560, 2, 0, 763, 712, 22125423411, 0, ‘FAIL’, ‘FULL’, NULL, ‘(SERVER)ls_id: 1001, addr: 192.168.1.203:2882, module: RESTORE_DATA, result: -4012(Timeout), trace_id: YB42C0A801CB-000627E8D15E99AC-0-0;’))

rootservice.log:[2024-12-05 18:13:29.665504] INFO [SHARE] do_insert_row (ob_inner_table_operator.cpp:657) [31604][T1_REST_SER][T1][YB42C0A801CB-0006272E633FB3B1-0-0] [lt=89] insert one row(row={key:{tenant_id:1, job_id:7}, start_time:1733371530735433, finish_time:1733393609506173, restore_type:{restore_type:“FULL”}, restore_tenant_name:“backup”, restore_tenant_id:1022, backup_tenant_id:1014, backup_dest:file:///data/ob_backup/data_backup,file:///data/ob_backup, restore_scn:{val:1733132125000000000, v:0}, restore_option:pool_list=backup_pool&locality=F@zone1,F@zone2,F@zone3&concurrency=50, table_list:, remap_table_list:, database_list:, remap_database_list:, backup_piece_list:file:///data/ob_backup/piece_d1001r2p2,file:///data/ob_backup/piece_d1001r2p3,file:///data/ob_backup/piece_d1001r2p4,file:///data/ob_backup/piece_d1001r2p5, backup_set_list:file:///data/ob_backup/data_backup/backup_set_1_full,file:///data/ob_backup/data_backup/backup_set_2_inc, backup_cluster_version:17180066560, ls_count:2, finish_ls_count:0, tablet_count:763, finish_tablet_count:712, total_bytes:22125423411, finish_bytes:0, status:1, description:, comment:"(SERVER)ls_id: 1001, addr: 192.168.1.203:2882, module: RESTORE_DATA, result: -4012(Timeout), trace_id: YB42C0A801CB-000627E8D15E99AC-0-0;", initiator_job_id:0, initiator_tenant_id:1}, affected_rows=1, sql=INSERT INTO __all_restore_job_history (tenant_id, job_id, start_time, finish_time, initiator_job_id, initiator_tenant_id, restore_tenant_name, backup_tenant_name, backup_cluster_name, restore_tenant_id, backup_tenant_id, backup_dest, restore_scn, restore_option, table_list, remap_table_list, database_list, remap_database_list, backup_piece_list, backup_set_list, backup_cluster_version, ls_count, finish_ls_count, tablet_count, finish_tablet_count, total_bytes, finish_bytes, status, restore_type, description, comment) VALUES (1, 7, usec_to_time(1733371530735433), usec_to_time(1733393609506173), 0, 1, ‘backup’, ‘backup’, ‘obtest’, 1022, 1014, 
‘file:///data/ob_backup/data_backup,file:///data/ob_backup’, 1733132125000000000, ‘pool_list=backup_pool&locality=F@zone1,F@zone2,F@zone3&concurrency=50’, NULL, NULL, NULL, NULL, ‘file:///data/ob_backup/piece_d1001r2p2,file:///data/ob_backup/piece_d1001r2p3,file:///data/ob_backup/piece_d1001r2p4,file:///data/ob_backup/piece_d1001r2p5’, ‘file:///data/ob_backup/data_backup/backup_set_1_full,file:///data/ob_backup/data_backup/backup_set_2_inc’, 17180066560, 2, 0, 763, 712, 22125423411, 0, ‘FAIL’, ‘FULL’, NULL, ‘(SERVER)ls_id: 1001, addr: 192.168.1.203:2882, module: RESTORE_DATA, result: -4012(Timeout), trace_id: YB42C0A801CB-000627E8D15E99AC-0-0;’))
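The history row for job 7 carries enough numbers to see how far the restore got before the timeout. Here is a small sketch of that arithmetic, assuming `start_time` and `finish_time` are microseconds since the Unix epoch (which is consistent with their being passed to `usec_to_time` in the SQL); the values are copied from the tenant 1022 row above and `summarize_job` is a hypothetical helper.

```python
from datetime import timedelta


def summarize_job(start_us, finish_us, tablet_count, finish_tablet_count):
    """Return (elapsed time, percentage of tablets restored) for one job row."""
    elapsed = timedelta(microseconds=finish_us - start_us)
    tablet_pct = 100 * finish_tablet_count / tablet_count
    return elapsed, tablet_pct


# Values from the tenant 1022, job_id 7 row quoted above.
elapsed, tablet_pct = summarize_job(
    start_us=1733371530735433,
    finish_us=1733393602086505,
    tablet_count=763,
    finish_tablet_count=712,
)
print(elapsed, round(tablet_pct, 1))
```

Read this way, the job ran for a little over six hours and had restored most tablets when log stream 1001 reported the timeout, while `finish_bytes` was still 0.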

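OK, we will analyze this. We expect to have progress by about tomorrow and will reply then.

Also, could you share the original observer.log and rootservice.log files?

Sure, no rush. There is also this error: [errcode=-4000] failed to process sys restore job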

rootservice.log:[2024-12-05 18:13:29.493548] WDIAG [RS.RESTORE] do_work (ob_restore_scheduler.cpp:117) [31604][T1_REST_SER][T1][YB42C0A801CB-0006272E633FB3AE-0-0] [lt=107][errcode=-4000] failed to process sys restore job(ret=-4000, ret=“OB_ERROR”, job_info={restore_key:{tenant_id:1, job_id:7}, initiator_job_id:0, initiator_tenant_id:1, tenant_id:1022, backup_tenant_id:1014, restore_type:{restore_type:“FULL”}, status:9, comment:"", restore_start_ts:1733371530735433, restoring_start_ts:0, restore_scn:{val:1733132125000000000, v:0}, consistent_scn:{val:1732877774627316000, v:0}, post_data_version:0, source_cluster_version:17180066560, source_data_version:17180066560, restore_option:“pool_list=backup_pool&locality=F@zone1,F@zone2,F@zone3&concurrency=50”, backup_dest:“file:///data/ob_backup/data_backup,file:///data/ob_backup”, description:"", tenant_name:“backup”, pool_list:“backup_pool”, locality:“F@zone1,F@zone2,F@zone3”, primary_zone:"", compat_mode:0, compatible:0, kms_info:"", kms_encrypt:false, concurrency:50, passwd_array:"", multi_restore_path_list:{backup_set_path_list:[“file:///data/ob_backup/data_backup/backup_set_1_full”, “file:///data/ob_backup/data_backup/backup_set_2_inc”], backup_piece_path_list:[“file:///data/ob_backup/piece_d1001r2p2”, “file:///data/ob_backup/piece_d1001r2p3”, “file:///data/ob_backup/piece_d1001r2p4”, “file:///data/ob_backup/piece_d1001r2p5”], log_path_list:[“file:///data/ob_backup”]}, white_list:{table_items:[]}, recover_table:false, using_complement_log:false, backup_compatible:4})

rootservice.log:[2024-12-05 18:13:29.650374] INFO [RS.RESTORE] check_is_concurrent_with_clean (ob_restore_util.cpp:2105) [31604][T1_REST_SER][T1][YB42C0A801CB-0006272E633FB3B1-0-0] [lt=18] [RESTORE_FAILURE_CHECKER]check is concurrent with clean(ret=0, is_concurrent_with_clean=false, job={restore_key:{tenant_id:1, job_id:7}, initiator_job_id:0, initiator_tenant_id:1, tenant_id:1022, backup_tenant_id:1014, restore_type:{restore_type:“FULL”}, status:8, comment:“ROOTSERVICE : OB_ERROR(-4000) on “192.168.1.203:2882” with traceid YB42C0A801CB-0006272E633FB3AE-0-0”, restore_start_ts:1733371530735433, restoring_start_ts:0, restore_scn:{val:1733132125000000000, v:0}, consistent_scn:{val:1732877774627316000, v:0}, post_data_version:0, source_cluster_version:17180066560, source_data_version:17180066560, restore_option:“pool_list=backup_pool&locality=F@zone1,F@zone2,F@zone3&concurrency=50”, backup_dest:“file:///data/ob_backup/data_backup,file:///data/ob_backup”, description:"", tenant_name:“backup”, pool_list:“backup_pool”, locality:“F@zone1,F@zone2,F@zone3”, primary_zone:"", compat_mode:0, compatible:0, kms_info:"", kms_encrypt:false, concurrency:50, passwd_array:"", multi_restore_path_list:{backup_set_path_list:[“file:///data/ob_backup/data_backup/backup_set_1_full”, “file:///data/ob_backup/data_backup/backup_set_2_inc”], backup_piece_path_list:[“file:///data/ob_backup/piece_d1001r2p2”, “file:///data/ob_backup/piece_d1001r2p3”, “file:///data/ob_backup/piece_d1001r2p4”, “file:///data/ob_backup/piece_d1001r2p5”], log_path_list:[“file:///data/ob_backup”]}, white_list:{table_items:[]}, recover_table:false, using_complement_log:false, backup_compatible:4})

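Please also compress and upload the original observer.log and rootservice.log files. The analysis may need the full logs.

Does this tenant have a standby tenant? Also, please send the full logs.

Hello, there is no standby tenant. There is only the primary tenant, just this one. I was busy with other work yesterday and did not get to back up the logs, and I also set a parameter incorrectly, so when I checked today the logs were gone again. I will reproduce the problem again today. Log retention is now set to permanent, and I will post the logs as soon as I have collected them.

OK.

Our understanding is that the cluster architecture is 1-1-1. We need the complete observer.log and rootservice.log for further analysis.

You can use obdiag to collect the logs: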

obdiag gather scene run --scene=observer.recovery [options]

https://www.oceanbase.com/docs/common-obdiag-cn-1000000001768250

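The logs have been collected with obdiag. Because the files are too large, I shared them through Quark Netdisk (夸克网盘). The logs were collected twice; see the Quark Netdisk share.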

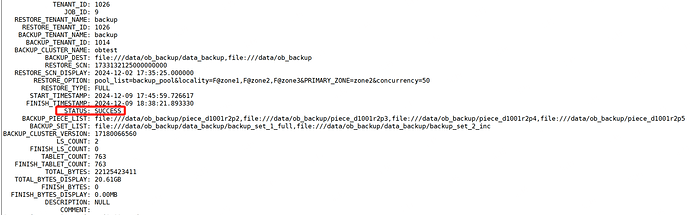

Error message: COMMENT: (SERVER)ls_id: 1001, addr: 192.168.1.203:2882, module: RESTORE_DATA, result: -4012(Timeout), trace_id: YB42C0A801CB-000627F3590E99AB-0-0;

Restore history:

TENANT_ID: 1024

JOB_ID: 8

RESTORE_TENANT_NAME: backup

RESTORE_TENANT_ID: 1024

BACKUP_TENANT_NAME: backup

BACKUP_TENANT_ID: 1014

BACKUP_CLUSTER_NAME: obtest

BACKUP_DEST: file:///data/ob_backup/data_backup,file:///data/ob_backup

RESTORE_SCN: 1733132125000000000

RESTORE_SCN_DISPLAY: 2024-12-02 17:35:25.000000

RESTORE_OPTION: pool_list=backup_pool&locality=F@zone1,F@zone2,F@zone3&concurrency=50

RESTORE_TYPE: FULL

START_TIMESTAMP: 2024-12-06 11:12:43.926982

FINISH_TIMESTAMP: 2024-12-06 19:23:10.040112

STATUS: FAIL

BACKUP_PIECE_LIST: file:///data/ob_backup/piece_d1001r2p2,file:///data/ob_backup/piece_d1001r2p3,file:///data/ob_backup/piece_d1001r2p4,file:///data/ob_backup/piece_d1001r2p5

BACKUP_SET_LIST: file:///data/ob_backup/data_backup/backup_set_1_full,file:///data/ob_backup/data_backup/backup_set_2_inc

BACKUP_CLUSTER_VERSION: 17180066560

LS_COUNT: 2

FINISH_LS_COUNT: 0

TABLET_COUNT: 763

FINISH_TABLET_COUNT: 716

TOTAL_BYTES: 22125423411

TOTAL_BYTES_DISPLAY: 20.61GB

FINISH_BYTES: 0

FINISH_BYTES_DISPLAY: 0.00MB

DESCRIPTION: NULL

COMMENT: (SERVER)ls_id: 1001, addr: 192.168.1.203:2882, module: RESTORE_DATA, result: -4012(Timeout), trace_id: YB42C0A801CB-000627F3590E99AB-0-0;
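The raw and `_DISPLAY` columns in this record can be checked against each other. The sketch below assumes that `RESTORE_SCN` is a nanosecond Unix timestamp and that the display columns use UTC+8; both assumptions are inferred from the values in this record rather than taken from documentation, and the helper names are made up for illustration.

```python
from datetime import datetime, timedelta, timezone

# Assumption: display times are rendered in UTC+8.
UTC8 = timezone(timedelta(hours=8))


def scn_to_display(scn_ns, tz=UTC8):
    """Render a nanosecond SCN as 'YYYY-MM-DD HH:MM:SS.ffffff' in the given zone."""
    seconds, nanos = divmod(scn_ns, 1_000_000_000)
    dt = datetime.fromtimestamp(seconds, tz)
    return dt.strftime("%Y-%m-%d %H:%M:%S") + ".%06d" % (nanos // 1000)


def bytes_to_gb(n):
    """Render a byte count in binary gigabytes with two decimals."""
    return "%.2fGB" % (n / 1024 ** 3)


print(scn_to_display(1733132125000000000))
print(bytes_to_gb(22125423411))
```

Both results match the record (`2024-12-02 17:35:25.000000` and `20.61GB`), so the job was targeting the time given in the `UNTIL TIME` clause and the data volume involved is only about 20 GB.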

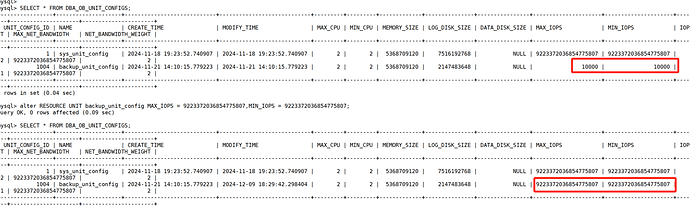

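This is related to the IOPS limit, and disk performance may also be a factor. It is fine to leave IOPS unlimited here. When there are multiple tenants, the system dynamically calculates each tenant's relative share of IOPS from its IOPS_WEIGHT value, so that the IOPS a tenant uses floats between MIN_IOPS and MAX_IOPS.

Thank you very much.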
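To make the weighting idea concrete, here is a toy model of weight-based sharing bounded by MIN_IOPS and MAX_IOPS. It is only an illustration of the description above: the one-pass allocation rule, the `allocate_iops` function, and all the numbers are assumptions, and OceanBase's real I/O scheduler may behave differently.

```python
def allocate_iops(total_iops, tenants):
    """Toy allocation: each tenant gets its MIN_IOPS, the remainder is split
    in proportion to IOPS_WEIGHT, and the result is capped at MAX_IOPS.

    tenants maps name -> (min_iops, max_iops, iops_weight).
    Capacity freed by a capped tenant is not redistributed in this sketch.
    """
    reserved = sum(min_iops for min_iops, _, _ in tenants.values())
    remaining = max(total_iops - reserved, 0)
    total_weight = sum(weight for _, _, weight in tenants.values())
    shares = {}
    for name, (min_iops, max_iops, weight) in tenants.items():
        extra = remaining * weight / total_weight if total_weight else 0
        shares[name] = min(min_iops + extra, max_iops)
    return shares


# Hypothetical numbers: a tight MAX_IOPS caps tenant "restore" well below
# what its weight alone would give it.
shares = allocate_iops(
    total_iops=10000,
    tenants={
        "restore": (1000, 2500, 1),
        "other": (1000, 20000, 3),
    },
)
print(shares)
```

In this model a tenant with a low MAX_IOPS stays capped no matter how idle the disk is, which is one way a restore of about 20 GB could crawl until it times out; raising or removing the cap lets the weight-based share apply instead.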