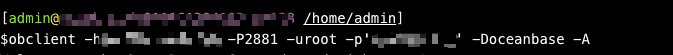

【 使用环境 】 测试环境

【 OB or 其他组件 】opproxy && opserver

【 使用版本 】 4.3.1

【问题描述】

建表语句:

CREATE TABLE t1 (

id bigint(30) unsigned NOT NULL AUTO_INCREMENT,

uniq_str varchar(64) NOT NULL DEFAULT ‘’ COMMENT ‘’,

name varchar(256) NOT NULL DEFAULT ‘’ COMMENT ‘名字’,

PRIMARY KEY (id),

KEY uniq_str (uniq_str),

KEY name (name)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT=‘t1’;

CREATE TABLE t2 (

id bigint(30) unsigned NOT NULL AUTO_INCREMENT,

uniq_str varchar(64) NOT NULL DEFAULT ‘’ COMMENT ‘’,

status smallint(5) NOT NULL DEFAULT ‘0’ COMMENT ‘’,

PRIMARY KEY (id),

KEY uniq_str (uniq_str),

KEY status_uniq_str (status,uniq_str)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT=‘t2’;

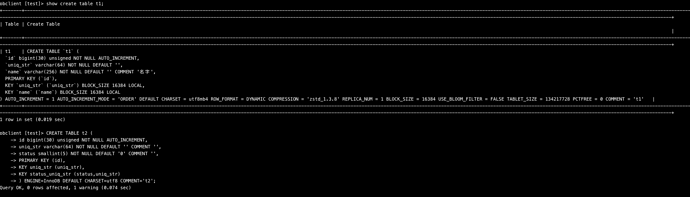

执行SQL:explain SELECT /*+ PARALLEL(10) */ name, count(t1.uniq_str) as num FROM t1 LEFT JOIN t2 ON t2.uniq_str = t1.uniq_str WHERE t2.status IN(0, 1) GROUP BY t1.name LIMIT 10;

OBPROXY 返回结果:

explain SELECT /*+ PARALLEL(10) */ name, count(t1.uniq_str) as num FROM t1 LEFT JOIN t2 ON t2.uniq_str = t1.uniq_str WHERE t2.status IN(0, 1) GROUP BY t1.name LIMIT 10;

±--------------------------------------------------------------------------------------------------------------+

| Query Plan |

±--------------------------------------------------------------------------------------------------------------+

| ========================================================================= |

| |ID|OPERATOR |NAME |EST.ROWS|EST.TIME(us)| |

| ------------------------------------------------------------------------- |

| |0 |LIMIT | |1 |10 | |

| |1 |±HASH GROUP BY | |1 |10 | |

| |2 | ±HASH JOIN | |1 |9 | |

| |3 | |-PX COORDINATOR | |1 |7 | |

| |4 | | ±EXCHANGE OUT DISTR|:EX10000 |1 |6 | |

| |5 | | ±TABLE RANGE SCAN|t2(status_uniq_str)|1 |5 | |

| |6 | ±TABLE FULL SCAN |t1 |1 |3 | |

| ========================================================================= |

| Outputs & filters: |

|---|

| 0 - output([t1.name], [T_FUN_COUNT(t1.uniq_str)]), filter(nil), rowset=16 |

| limit(10), offset(nil) |

| 1 - output([t1.name], [T_FUN_COUNT(t1.uniq_str)]), filter(nil), rowset=16 |

| group([t1.name]), agg_func([T_FUN_COUNT(t1.uniq_str)]) |

| 2 - output([t1.name], [t1.uniq_str]), filter(nil), rowset=16 |

| equal_conds([t2.uniq_str = t1.uniq_str]), other_conds(nil) |

| 3 - output([t2.uniq_str]), filter(nil), rowset=16 |

| 4 - output([t2.uniq_str]), filter(nil), rowset=16 |

| is_single, dop=1 |

| 5 - output([t2.uniq_str]), filter(nil), rowset=16 |

| access([t2.uniq_str]), partitions(p0) |

| is_index_back=false, is_global_index=false, |

| range_key([t2.status], [t2.uniq_str], [t2.id]), range(0,MIN,MIN ; 0,MAX,MAX), (1,MIN,MIN ; 1,MAX,MAX), |

| range_cond([t2.status IN (0, 1)]) |

| 6 - output([t1.uniq_str], [t1.name]), filter(nil), rowset=16 |

| access([t1.uniq_str], [t1.name]), partitions(p0) |

| is_index_back=false, is_global_index=false, |

| range_key([t1.id]), range(MIN ; MAX)always true |

±--------------------------------------------------------------------------------------------------------------+

31 rows in set (0.01 sec)

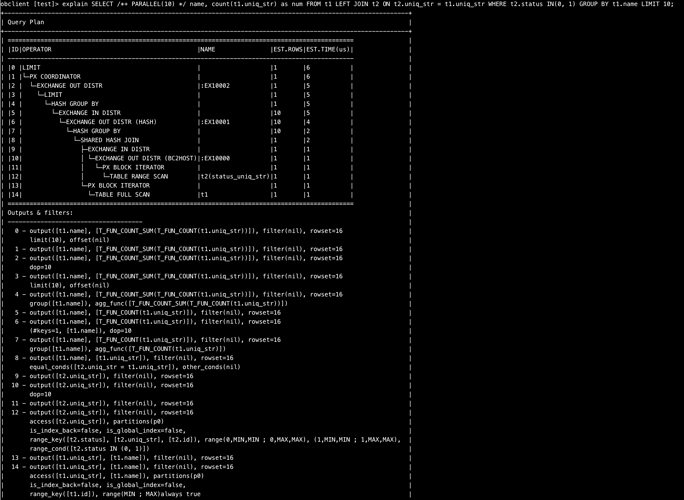

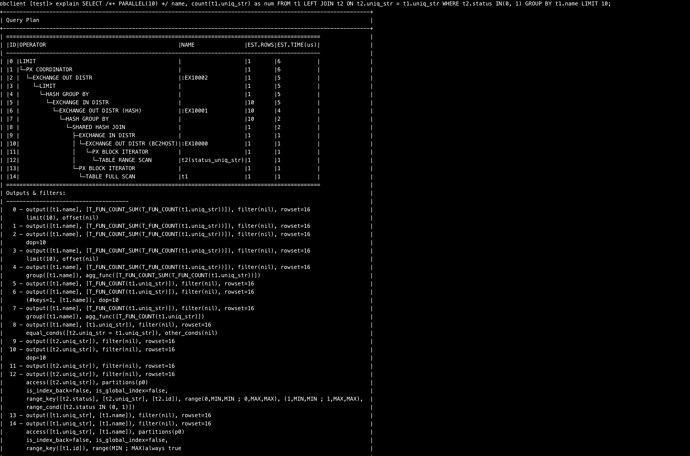

OBSERVER 返回结果:

explain SELECT /*+ PARALLEL(10) */ name, count(t1.uniq_str) as num FROM t1 LEFT JOIN t2 ON t2.uniq_str = t1.uniq_str WHERE t2.status IN(0, 1) GROUP BY t1.name LIMIT 10;

±--------------------------------------------------------------------------------------------------------------+

| Query Plan |

±--------------------------------------------------------------------------------------------------------------+

| =============================================================================================== |

| |ID|OPERATOR |NAME |EST.ROWS|EST.TIME(us)| |

| ----------------------------------------------------------------------------------------------- |

| |0 |LIMIT | |1 |3 | |

| |1 |└─PX COORDINATOR | |1 |3 | |

| |2 | └─EXCHANGE OUT DISTR |:EX10002 |1 |2 | |

| |3 | └─LIMIT | |1 |2 | |

| |4 | └─HASH GROUP BY | |1 |2 | |

| |5 | └─EXCHANGE IN DISTR | |1 |2 | |

| |6 | └─EXCHANGE OUT DISTR (HASH) |:EX10001 |1 |2 | |

| |7 | └─HASH GROUP BY | |1 |2 | |

| |8 | └─SHARED HASH JOIN | |1 |1 | |

| |9 | ├─EXCHANGE IN DISTR | |1 |1 | |

| |10| │ └─EXCHANGE OUT DISTR (BC2HOST)|:EX10000 |1 |1 | |

| |11| │ └─PX BLOCK ITERATOR | |1 |1 | |

| |12| │ └─TABLE RANGE SCAN |t2(status_uniq_str)|1 |1 | |

| |13| └─PX BLOCK ITERATOR | |1 |1 | |

| |14| └─TABLE FULL SCAN |t1 |1 |1 | |

| =============================================================================================== |

| Outputs & filters: |

|---|

| 0 - output([t1.name], [T_FUN_COUNT_SUM(T_FUN_COUNT(t1.uniq_str))]), filter(nil), rowset=16 |

| limit(10), offset(nil) |

| 1 - output([t1.name], [T_FUN_COUNT_SUM(T_FUN_COUNT(t1.uniq_str))]), filter(nil), rowset=16 |

| 2 - output([t1.name], [T_FUN_COUNT_SUM(T_FUN_COUNT(t1.uniq_str))]), filter(nil), rowset=16 |

| dop=10 |

| 3 - output([t1.name], [T_FUN_COUNT_SUM(T_FUN_COUNT(t1.uniq_str))]), filter(nil), rowset=16 |

| limit(10), offset(nil) |

| 4 - output([t1.name], [T_FUN_COUNT_SUM(T_FUN_COUNT(t1.uniq_str))]), filter(nil), rowset=16 |

| group([t1.name]), agg_func([T_FUN_COUNT_SUM(T_FUN_COUNT(t1.uniq_str))]) |

| 5 - output([t1.name], [T_FUN_COUNT(t1.uniq_str)]), filter(nil), rowset=16 |

| 6 - output([t1.name], [T_FUN_COUNT(t1.uniq_str)]), filter(nil), rowset=16 |

| (#keys=1, [t1.name]), dop=10 |

| 7 - output([t1.name], [T_FUN_COUNT(t1.uniq_str)]), filter(nil), rowset=16 |

| group([t1.name]), agg_func([T_FUN_COUNT(t1.uniq_str)]) |

| 8 - output([t1.name], [t1.uniq_str]), filter(nil), rowset=16 |

| equal_conds([t2.uniq_str = t1.uniq_str]), other_conds(nil) |

| 9 - output([t2.uniq_str]), filter(nil), rowset=16 |

| 10 - output([t2.uniq_str]), filter(nil), rowset=16 |

| dop=10 |

| 11 - output([t2.uniq_str]), filter(nil), rowset=16 |

| 12 - output([t2.uniq_str]), filter(nil), rowset=16 |

| access([t2.uniq_str]), partitions(p0) |

| is_index_back=false, is_global_index=false, |

| range_key([t2.status], [t2.uniq_str], [t2.id]), range(0,MIN,MIN ; 0,MAX,MAX), (1,MIN,MIN ; 1,MAX,MAX), |

| range_cond([t2.status IN (0, 1)]) |

| 13 - output([t1.uniq_str], [t1.name]), filter(nil), rowset=16 |

| 14 - output([t1.uniq_str], [t1.name]), filter(nil), rowset=16 |

| access([t1.uniq_str], [t1.name]), partitions(p0) |

| is_index_back=false, is_global_index=false, |

| range_key([t1.id]), range(MIN ; MAX)always true |

±--------------------------------------------------------------------------------------------------------------+

51 rows in set (0.027 sec)