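3-node OB cluster, version 4.2.4_CE. The first deployment started normally and all tests passed. After running destroy and redeploying, deploy succeeds but the cluster will not start: it keeps hanging during start observer.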

Check before start obagent ok

Check before start ocp-express ok

Start observer ok

observer program health check ok

Connect to observer 172.16.51.35:2881 ok

Initialize oceanbase-ce

It gets stuck at this step and never continues.

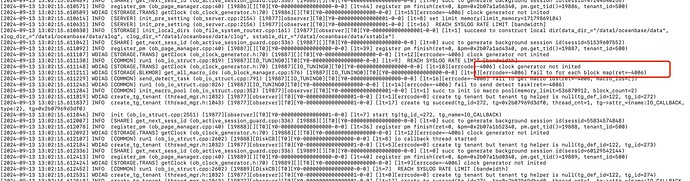

The log keeps repeating:

[2024-09-13 13:00:32.703915] WDIAG [STORAGE.TRANS] do_cluster_heartbeat_ (ob_tenant_weak_read_service.cpp:879) [3127][T1_TenantWeakRe][T1][Y0-0000000000000000-0-0] [lt=10][errcode=-4076] tenant weak read service do cluster heartbeat fail(ret=-4076, ret="OB_NEED_WAIT", tenant_id_=1, last_post_cluster_heartbeat_tstamp_=1726203632503804, cluster_heartbeat_interval_=1000000, cluster_service_tablet_id={id:226}, cluster_service_master="0.0.0.0:0")

[2024-09-13 13:00:32.735615] INFO [STORAGE.TRANS] check_all_readonly_tx_clean_up (ob_ls_tx_service.cpp:271) [3144][T1_TxLoopWorker][T1][Y0-0000000000000000-0-0] [lt=29] wait_all_readonly_tx_cleaned_up cleaned up success(ls_id={id:1})

[2024-09-13 13:00:32.736551] WDIAG load_file_to_string (utility.h:662) [2756][ServerGTimer][T0][Y0-0000000000000000-0-0] [lt=8][errcode=0] read /sys/class/net/eth0/speed failed, errno 22

[2024-09-13 13:00:32.736573] WDIAG get_ethernet_speed (utility.cpp:580) [2756][ServerGTimer][T0][Y0-0000000000000000-0-0] [lt=18][errcode=-4000] load file /sys/class/net/eth0/speed failed, ret -4000

[2024-09-13 13:00:32.736580] WDIAG [SERVER] get_network_speed_from_sysfs (ob_server.cpp:2807) [2756][ServerGTimer][T0][Y0-0000000000000000-0-0] [lt=5][errcode=-4000] cannot get Ethernet speed, use default(tmp_ret=0, devname="eth0")

[2024-09-13 13:00:32.736590] WDIAG [SERVER] runTimerTask (ob_server.cpp:3341) [2756][ServerGTimer][T0][Y0-0000000000000000-0-0] [lt=8][errcode=-4000] ObRefreshNetworkSpeedTask reload bandwidth throttle limit failed(ret=-4000, ret="OB_ERROR")

[2024-09-13 13:00:32.744994] INFO [COMMON] replace_map (ob_kv_storecache.cpp:746) [2788][KVCacheRep][T0][Y0-0000000
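The eth0/speed lines are WDIAG-level records: errno 22 is EINVAL, and the next line says the observer falls back to a default speed, so on their own they do not look like what blocks startup. To check what the kernel actually reports for the NIC, here is a minimal Python sketch of the same read-with-fallback, assuming the standard Linux sysfs layout. `read_link_speed_mbps` is a hypothetical helper, not an OceanBase function, and the idea that a virtual NIC is why the read fails here is an assumption the logs do not confirm.

```python
import errno
from typing import Optional


def read_link_speed_mbps(devname: str = "eth0",
                         sysfs_root: str = "/sys/class/net") -> Optional[int]:
    """Return the link speed in Mb/s, or None if it cannot be determined."""
    path = f"{sysfs_root}/{devname}/speed"
    try:
        with open(path) as f:
            raw = f.read().strip()
    except OSError as e:
        # EINVAL (errno 22) is what the observer log reports for eth0; it is
        # common when a driver does not expose a link speed. ENOENT/ENODEV
        # cover a missing or absent device.
        if e.errno in (errno.EINVAL, errno.ENOENT, errno.ENODEV):
            return None
        raise  # e.g. permission problems are real errors
    try:
        speed = int(raw)
    except ValueError:
        return None
    # Some drivers report -1 when the speed is unknown.
    return speed if speed > 0 else None
```

On a node, `print(read_link_speed_mbps("eth0"))` printing `None` would match the fallback path the observer logs.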

Logs:

alert.log (1.5 KB)

observer.log (9.7 MB)

rootservice.log (37.8 KB)

The 8004 error indicates a deployment error; the misdeployed cluster should be stopped as soon as possible.

Since this is a rebuild, the problem is most likely in the YAML configuration file.

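Time is synchronized. Initially chrony on all three machines pointed to the same time source and startup failed; I just changed nodes 2 and 3 to sync from node 1. redeploy still hangs at the same place: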

Check before start ocp-express ok

Start observer ok

observer program health check ok

Connect to observer 172.16.51.35:2881 ok

Initialize oceanbase-ce

The "clock generator not inited" error also still appears.
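Since "clock generator not inited" still shows up after re-pointing chrony, it may help to compare the measured offsets on each node rather than only the configured sources. The sketch below parses the "System time" line that `chronyc tracking` prints and takes the spread across nodes. Assumptions: the output format matched by the regex; the spread only approximates inter-node skew when nodes report against different references (here nodes 2 and 3 sync from node 1, which syncs upstream); and any acceptable threshold is yours to choose, not an OceanBase-documented value.

```python
import re
from typing import Dict

# Matches e.g. "System time     : 0.000012000 seconds slow of NTP time"
_SYSTEM_TIME = re.compile(
    r"^System time\s*:\s*([0-9.]+) seconds (fast|slow) of NTP time", re.M)


def parse_chrony_offset(tracking_output: str) -> float:
    """Signed offset in seconds from `chronyc tracking` output.

    Positive means the local clock is ahead of its reference ("fast").
    """
    m = _SYSTEM_TIME.search(tracking_output)
    if m is None:
        raise ValueError("no 'System time' line in chronyc tracking output")
    value = float(m.group(1))
    return value if m.group(2) == "fast" else -value


def max_pairwise_skew(offsets: Dict[str, float]) -> float:
    """Largest difference in seconds between any two nodes' offsets."""
    return max(offsets.values()) - min(offsets.values())
```

Collect `chronyc tracking` output on 172.16.51.35/36/37, run each through `parse_chrony_offset`, and pass the resulting `{node: offset}` dict to `max_pairwise_skew`.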

Some additional background:

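The first successful deployment was 4.2.4_CE. Migrating a MySQL database with OMS failed, which we later traced to a bug in 4.2.4_CE, so I wanted to deploy 4.2.1 instead. I destroyed the cluster, downloaded 4.2.1 and reinstalled. deploy succeeded, but start hangs at the steps above and never completes. I then went back to deploying 4.2.4, and start hangs at the same place. The topology YAML file was the same throughout.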

Try a new YAML file.

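3 machines, topology config unchanged. On the first deployment start worked fine, and we ran load tests with no problems at all. After destroy, a fresh deploy can no longer start.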

You didn't change the contents?

Please post your YAML config so we can take a look.

[root@tidb-1 ob]# obd cluster deploy ob-poc -c ob-poc.yaml

+--------------------------------------------------------------------------------------------+
|                                          Packages                                          |
+--------------+---------+------------------------+------------------------------------------+
| Repository   | Version | Release                | Md5                                      |
+--------------+---------+------------------------+------------------------------------------+
| oceanbase-ce | 4.2.4.0 | 100000082024070810.el7 | 7dc8b049b3283ef4660cdf6e3cfa24f81e9d2a78 |
| obproxy-ce   | 4.2.3.0 | 3.el7                  | 0490ebc04220def8d25cb9cac9ac61a4efa6d639 |
| obagent      | 4.2.2   | 100000042024011120.el7 | 19739a07a12eab736aff86ecf357b1ae660b554e |
| ocp-express  | 4.2.2   | 100000022024011120.el7 | 09ffcf156d1df9318a78af52656f499d2315e3f7 |
+--------------+---------+------------------------+------------------------------------------+

Repository integrity check ok

Load param plugin ok

Open ssh connection ok

Parameter check ok

Cluster status check ok

Initializes observer work home ok

Initializes obproxy work home ok

Initializes obagent work home ok

Initializes ocp-express work home ok

Remote oceanbase-ce-4.2.4.0-100000082024070810.el7-7dc8b049b3283ef4660cdf6e3cfa24f81e9d2a78 repository install ok

Remote oceanbase-ce-4.2.4.0-100000082024070810.el7-7dc8b049b3283ef4660cdf6e3cfa24f81e9d2a78 repository lib check !!

Remote obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639 repository install ok

Remote obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639 repository lib check ok

Remote obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e repository install ok

Remote obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e repository lib check ok

Remote ocp-express-4.2.2-100000022024011120.el7-09ffcf156d1df9318a78af52656f499d2315e3f7 repository install ok

Remote ocp-express-4.2.2-100000022024011120.el7-09ffcf156d1df9318a78af52656f499d2315e3f7 repository lib check !!

Try to get lib-repository

Remote oceanbase-ce-libs-4.2.4.0-100000082024070810.el7-eda524874ce5daff6685f114bd28965b8c1834ef repository install ok

Remote oceanbase-ce-4.2.4.0-100000082024070810.el7-7dc8b049b3283ef4660cdf6e3cfa24f81e9d2a78 repository lib check ok

Remote openjdk-jre-1.8.0_322-b09.el7-051aa69c5abb8697d15c2f0dcb1392b3f815f7ed repository install ok

Remote ocp-express-4.2.2-100000022024011120.el7-09ffcf156d1df9318a78af52656f499d2315e3f7 repository lib check ok

ob-poc deployed

Please execute obd cluster start ob-poc to start

Trace ID: 99ceca22-7198-11ef-89cb-fa163e45e664

If you want to view detailed obd logs, please run: obd display-trace 99ceca22-7198-11ef-89cb-fa163e45e664

Then start:

Check before start obagent ok

Check before start ocp-express ok

Start observer ok

observer program health check ok

Connect to observer 172.16.51.35:2881 ok

Initialize oceanbase-ce

It hangs here.

Node 1 gets as far as:

re_bootstrap|ob_bootstrap.cpp:243|"bootstrap prepare begin."

2024-09-13 14:26:34.651813|INFO|SERVER|OB_SERVER_INSTANCE_START_SUCCESS|0|0|893|observer|YB42AC103323-000621FA4C61B04B-0-0|start|ob_server.cpp:1044|"[server_start 9/18] observer instance start success."

2024-09-13 14:26:34.694227|INFO|SERVER|OB_SERVER_WAIT_MULTI_TENANT_SYNCED_BEGIN|0|0|893|observer|YB42AC103323-000621FA4C61B04B-0-0|start|ob_server.cpp:1070|"[server_start 10/18] wait multi tenant synced begin."

2024-09-13 14:26:34.697913|INFO|BOOTSTRAP|OB_BOOTSTRAP_PREPARE_SUCCESS|0|1|1313|T1_L0_G0|YB42AC103323-000621FA4CC1B04D-0-0|prepare_bootstrap|ob_bootstrap.cpp:275|"bootstrap prepare success."

2024-09-13 14:29:49.413416|ERROR|RS|OB_ROOTSERVICE_START_FAIL|-4012|0|987|RSAsyncTask1|YB42AC103323-000621FA4EB1B055-0-0|update_fail_count|ob_root_service.cpp:10743|"rootservice start()/do_restart() has failed 6 times. you may find solutions in previous error logs or seek help from official technicians."

2024-09-13 14:30:55.421680|ERROR|RS|OB_ROOTSERVICE_START_FAIL|-4012|0|989|RSAsyncTask3|YB42AC103323-000621FA4EC1B0BB-0-0|update_fail_count|ob_root_service.cpp:10743|"rootservice start()/do_restart() has failed 8 times. you may find solutions in previous error logs or seek help from official technicians."

2024-09-13 14:32:01.428336|ERROR|RS|OB_ROOTSERVICE_START_FAIL|-4012|0|986|RSAsyncTask0|YB42AC103323-000621FA4EA1B08A-0-0|update_fail_count|ob_root_service.cpp:10743|"rootservice start()/do_restart() has failed 10 times. you may find solutions in previous error logs or seek help from official technicians."

Nodes 2 and 3:

2024-09-13 14:25:53.611391|INFO|SERVER|OB_SERVER_START_BEGIN|0|0|16342|observer|Y0-0000000000000001-0-0|start|ob_server.cpp:854|"[server_start 5/18] observer start begin."

2024-09-13 14:25:53.611399|INFO|SERVER|OB_SERVER_INSTANCE_START_BEGIN|0|0|16342|observer|Y0-0000000000000001-0-0|start|ob_server.cpp:872|"[server_start 6/18] observer instance start begin."

2024-09-13 14:25:53.620318|INFO|STORAGE_BLKMGR|OB_SERVER_BLOCK_MANAGER_START_BEGIN|0|0|16342|observer|Y0-0000000000000001-0-0|start|ob_block_manager.cpp:199|"[server_start 7/18] block manager start begin."

2024-09-13 14:25:53.756049|INFO|STORAGE_BLKMGR|OB_SERVER_BLOCK_MANAGER_START_SUCCESS|0|0|16342|observer|Y0-0000000000000001-0-0|start|ob_block_manager.cpp:256|"[server_start 8/18] block manager start success."
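Comparing the furthest `[server_start N/18]` step each node reaches makes the difference between nodes easy to see: in these excerpts node 1 reaches 10/18 while nodes 2 and 3 show nothing past 8/18. A minimal Python sketch, assuming the pipe-delimited alert.log layout seen above (timestamp first, quoted message in the 12th field); `last_server_start_step` is a hypothetical helper and the field layout is inferred from the posted lines, not from documentation.

```python
import re
from typing import Iterable, Optional, Tuple

_STEP = re.compile(r"\[server_start (\d+)/(\d+)\]")


def last_server_start_step(lines: Iterable[str]) -> Optional[Tuple[int, int, str]]:
    """Return (step, total, timestamp) of the furthest server_start step seen."""
    best: Optional[Tuple[int, int, str]] = None
    for line in lines:
        # 12 fields: timestamp|level|module|event|errcode|...|file:line|"message"
        fields = line.rstrip("\n").split("|", 11)
        if len(fields) < 12:
            continue  # truncated or non-alert line
        m = _STEP.search(fields[11])
        if m is None:
            continue
        step, total = int(m.group(1)), int(m.group(2))
        if best is None or step > best[0]:
            best = (step, total, fields[0])
    return best
```

Run it once per node, e.g. `with open("alert.log") as f: print(last_server_start_step(f))`, to see where each observer stopped.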

Deployment YAML:

ob-poc.log (4.6 KB)

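system_memory is set too high; try 2G. Also set the ocp-express memory at the bottom of the file to 2G.

Alternatively, I'd suggest deploying through the obd web GUI.

After adjusting the parameters, same result: it hangs there and doesn't continue.

The GUI deployment behaves the same way:

The logs are the same as with the obd CLI deployment.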

Node 1 progress:

2024-09-13 14:59:32.711772|INFO|RS|OB_BOOTSTRAP_BEGIN|0|1|8527|T1_L0_G0|YB42AC103323-000621FAC3B06F1F-0-0|execute_bootstrap|ob_root_service.cpp:1962|"[bootstrap 1/10] cluster bootstrap begin."

2024-09-13 14:59:34.177216|INFO|SERVER|OB_SERVER_INSTANCE_START_SUCCESS|0|0|8068|observer|YB42AC103323-000621FAC3706F1D-0-0|start|ob_server.cpp:1044|"[server_start 9/18] observer instance start success."

2024-09-13 14:59:34.233970|INFO|SERVER|OB_SERVER_WAIT_MULTI_TENANT_SYNCED_BEGIN|0|0|8068|observer|YB42AC103323-000621FAC3706F1D-0-0|start|ob_server.cpp:1070|"[server_start 10/18] wait multi tenant synced begin."

2024-09-13 15:00:03.536012|ERROR|BOOTSTRAP|OB_BOOTSTRAP_CREATE_ALL_SCHEMA_FAIL|-5019|1|8527|T1_L0_G0|YB42AC103323-000621FAC3B06F1F-0-0|create_all_schema|ob_bootstrap.cpp:1052|"[bootstrap 3/10] bootstrap create all schema fail. you may find solutions in previous error logs or seek help from official technicians."

2024-09-13 15:00:03.543158|ERROR|RS|OB_BOOTSTRAP_FAIL|-5019|1|8527|T1_L0_G0|YB42AC103323-000621FAC3B06F1F-0-0|execute_bootstrap|ob_root_service.cpp:2049|"[bootstrap 4/10] cluster bootstrap fail. you may find solutions in previous error logs or seek help from official technicians."

2024-09-13 15:00:03.544208|INFO|BOOTSTRAP|OB_BOOTSTRAP_PREPARE_BEGIN|0|1|8613|T1_L0_G0|YB42AC103323-000621FAC3B06F1F-0-0|prepare_bootstrap|ob_bootstrap.cpp:243|"bootstrap prepare begin."

2024-09-13 15:00:03.547095|ERROR|BOOTSTRAP|OB_BOOTSTRAP_PREPARE_FAIL|-5156|1|8613|T1_L0_G0|YB42AC103323-000621FAC3B06F1F-0-0|prepare_bootstrap|ob_bootstrap.cpp:273|"bootstrap prepare fail. you may find solutions in previous error logs or seek help from official technicians."

2024-09-13 15:00:17.419080|ERROR|RS|OB_ROOTSERVICE_START_FAIL|-5019|0|8168|RSAsyncTask2|YB42AC103323-000621FAC5C06F27-0-0|update_fail_count|ob_root_service.cpp:10743|"rootservice start()/do_restart() has failed 6 times. you may find solutions in previous error logs or seek help from official technicians."

2024-09-13 15:01:17.738413|ERROR|RS|OB_ROOTSERVICE_START_FAIL|-5019|0|8166|RSAsyncTask0|YB42AC103323-000621FAC5A06F49-0-0|update_fail_count|ob_root_service.cpp:10743|"rootservice start()/do_restart() has failed 26 times. you may find solutions in previous error logs or seek help from official technicians."

2024-09-13 15:02:18.048099|ERROR|RS|OB_ROOTSERVICE_START_FAIL|-5019|0|8168|RSAsyncTask2|YB42AC103323-000621FAC5C06FA0-0-0|update_fail_count|ob_root_service.cpp:10743|"rootservice start()/do_restart() has failed 46 times. you may find solutions in previous error logs or seek help from official technicians."
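In this run, the repeated OB_ROOTSERVICE_START_FAIL records carry the same -5019 errcode as the earlier `[bootstrap 3/10] create all schema` failure, and every message points back to "previous error logs". That makes the earliest ERROR the natural place to start reading observer.log. A small Python sketch that tallies ERROR records by (event, errcode) and returns the earliest one, assuming the same pipe-delimited layout as the excerpts; `summarize_errors` is a hypothetical helper.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple


def summarize_errors(lines: Iterable[str]) -> Tuple[Counter, Optional[Tuple[str, str, int]]]:
    """Count ERROR records by (event, errcode) and return the earliest one."""
    counts: Counter = Counter()
    first: Optional[Tuple[str, str, int]] = None
    for line in lines:
        fields = line.rstrip("\n").split("|", 11)
        if len(fields) < 12 or fields[1] != "ERROR":
            continue  # skip truncated lines and non-ERROR levels
        event, errcode = fields[3], int(fields[4])
        counts[(event, errcode)] += 1
        # Timestamps are fixed-width "YYYY-MM-DD HH:MM:SS.ffffff", so string
        # comparison orders them chronologically.
        if first is None or fields[0] < first[0]:
            first = (fields[0], event, errcode)
    return counts, first
```

The `first` tuple gives a timestamp to search for in observer.log on node 1.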

Nodes 2 and 3 progress:

4|"[server_start 4/18] observer init success."

2024-09-13 14:59:11.085900|INFO|SERVER|OB_SERVER_START_BEGIN|0|0|18628|observer|Y0-0000000000000001-0-0|start|ob_server.cpp:854|"[server_start 5/18] observer start begin."

2024-09-13 14:59:11.085911|INFO|SERVER|OB_SERVER_INSTANCE_START_BEGIN|0|0|18628|observer|Y0-0000000000000001-0-0|start|ob_server.cpp:872|"[server_start 6/18] observer instance start begin."

2024-09-13 14:59:11.104250|INFO|STORAGE_BLKMGR|OB_SERVER_BLOCK_MANAGER_START_BEGIN|0|0|18628|observer|Y0-0000000000000001-0-0|start|ob_block_manager.cpp:199|"[server_start 7/18] block manager start begin."

2024-09-13 14:59:11.144096|INFO|STORAGE_BLKMGR|OB_SERVER_BLOCK_MANAGER_START_SUCCESS|0|0|18628|observer|Y0-0000000000000001-0-0|start|ob_block_manager.cpp:256|"[server_start 8/18] block manager start success."

2024-09-13 14:59:34.097384|INFO|SERVER|OB_SERVER_INSTANCE_START_SUCCESS|0|0|18628|observer|YB42AC103325-000621FAC3635C20-0-0|start|ob_server.cpp:1044|"[server_start 9/18] observer instance start success."

2024-09-13 14:59:34.103537|INFO|SERVER|OB_SERVER_WAIT_MULTI_TENANT_SYNCED_BEGIN|0|0|18628|observer|YB42AC103325-000621FAC3635C20-0-0|start|ob_server.cpp:1070|"[server_start 10/18] wait multi tenant synced begin."

The earlier observer logs also show log-disk errors. I suggest increasing log_disk_size to 3-4 times the memory size.
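A quick way to check the suggested 3-4x ratio against a config: convert both sizes to MB and divide. This Python sketch only handles plain M/G/T suffixes, which is a simplification and not OBD's actual size parser; `size_to_mb` and `log_disk_ratio` are hypothetical helpers.

```python
import re

_UNITS = {"M": 1, "G": 1024, "T": 1024 * 1024}


def size_to_mb(value: str) -> int:
    """Convert a size string such as '16G' or '7680M' to MB."""
    m = re.fullmatch(r"\s*(\d+)\s*([MGT])B?\s*", value, re.I)
    if m is None:
        raise ValueError(f"unsupported size: {value!r}")
    return int(m.group(1)) * _UNITS[m.group(2).upper()]


def log_disk_ratio(memory_limit: str, log_disk_size: str) -> float:
    """How many times memory_limit the configured log_disk_size is."""
    return size_to_mb(log_disk_size) / size_to_mb(memory_limit)
```

With memory_limit: 16G and log_disk_size: 64G, `log_disk_ratio("16G", "64G")` returns 4.0, the upper end of the suggested range.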

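I've now set it to 4x and it still fails. All warnings during deployment have been resolved, still no luck. I also switched to a different obd server, still no luck. It always hangs at the same place.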

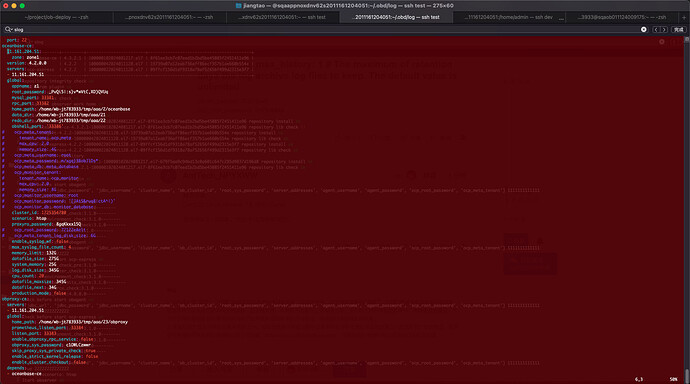

Run cat ~/.obd/cluster/ob-poc/config.yaml and let's look at the config file.

One moment, I'll have a colleague take a look.

## Only need to configure when remote login is required
user:
  username: root
  password: xxxx
#   key_file: your ssh-key file path if need
  port: 12722
#   timeout: ssh connection timeout (second), default 30
oceanbase-ce:
  servers:
    - name: server1
      # Please don't use hostname, only IP can be supported
      ip: 172.16.51.35
    - name: server2
      ip: 172.16.51.36
    - name: server3
      ip: 172.16.51.37
  global:
    # Starting from observer version 4.2, the network selection for the observer is based on the 'local_ip' parameter, and the 'devname' parameter is no longer mandatory.
    # If the 'local_ip' parameter is set, the observer will first use this parameter for the configuration, regardless of the 'devname' parameter.
    # If only the 'devname' parameter is set, the observer will use the 'devname' parameter for the configuration.
    # If neither the 'devname' nor the 'local_ip' parameters are set, the 'local_ip' parameter will be automatically assigned the IP address configured above.
    devname: eth0
    # if current hardware's memory capacity is smaller than 50G, please use the setting of "mini-single-example.yaml" and do a small adjustment.
    memory_limit: 16G # The maximum running memory for an observer
    # The reserved system memory. system_memory is reserved for general tenants. The default value is 30G.
    system_memory: 2G
    datafile_size: 64G # Size of the data file.
    log_disk_size: 64G # The size of disk space used by the clog files.
    enable_syslog_wf: true # Print system logs whose levels are higher than WARNING to a separate log file. The default value is true.
    enable_syslog_recycle: true # Enable auto system log recycling or not. The default value is false.
    max_syslog_file_count: 4 # The maximum number of reserved log files before enabling auto recycling. The default value is 0.
    # Cluster name for OceanBase Database. The default value is obcluster. When you deploy OceanBase Database and obproxy, this value must be the same as the cluster_name for obproxy.
    appname: ob_poc
    root_password: ob@poc.test
    proxyro_password: obp@poc.test
    observer_sys_password: obp@poc.test
    ocp_meta_db: ocp_express # The database name of ocp express meta
    ocp_meta_username: meta # The username of ocp express meta
    ocp_meta_password: '' # The password of ocp express meta
    ocp_agent_monitor_password: '' # The password for obagent monitor user
    ocp_meta_tenant: # The config for ocp express meta tenant
      tenant_name: ocp
      max_cpu: 1
      memory_size: 2G
      log_disk_size: 7680M # The recommend value is (4608 + (expect node num + expect tenant num) * 512) M.
    mysql_port: 2881
    rpc_port: 2882
    obshell_port: 2886
    home_path: /u01/app/observer
    data_dir: /data1/oceanbase/data
    redo_dir: /u01/oceanbase/redo
    cluster_id: 1726214411
    ocp_agent_monitor_password: reuq1AdaSj
    ocp_root_password: 5ZFR7h6MSH
    ocp_meta_password: Qq6HymAFkE
  server1:
    zone: zone1
  server2:
    zone: zone2
  server3:
    zone: zone3
obproxy-ce:
  depends:
    - oceanbase-ce
  servers:
    - 172.16.51.35
  global:
    listen_port: 2883
    prometheus_listen_port: 2884 # The Prometheus port. The default value is 2884.
    home_path: /u01/app/obproxy
    # oceanbase root server list
    # format: ip:mysql_port;ip:mysql_port. When a depends exists, OBD gets this value from the oceanbase-ce of the depends.
    rs_list: 192.168.1.2:2881;192.168.1.3:2881;192.168.1.4:2881
    enable_cluster_checkout: false
    # observer cluster name, consistent with oceanbase-ce's appname. When a depends exists, OBD gets this value from the oceanbase-ce of the depends.
    cluster_name: obcluster
    skip_proxy_sys_private_check: true
    enable_strict_kernel_release: false
    obproxy_sys_password: obproxy_sys_password@poc.test
  172.16.51.35:
    proxy_id: 6346
    client_session_id_version: 2
obagent:
  depends:
    - oceanbase-ce
  servers:
    - name: server1
      ip: 172.16.51.35
    - name: server2
      ip: 172.16.51.36
    - name: server3
      ip: 172.16.51.37
  global:
    home_path: /u01/app/obagent
    http_basic_auth_password: t79z6QAVf
ocp-express:
  depends:
    - oceanbase-ce
    - obproxy-ce
    - obagent
  servers:
    - 172.16.51.35
  global:
    # The working directory for prometheus. prometheus is started under this directory. This is a required field.
    home_path: /u01/app/ocp-express
    log_dir: /u01/oceanbase/ocp-express/log # The log directory of ocp express server. The default value is {home_path}/log.
    memory_size: 2G # The memory size of ocp-express server. The recommend value is 512MB * (expect node num + expect tenant num) * 60MB.
    logging_file_total_size_cap: 10G # The total log file size of ocp-express server
    logging_file_max_history: 1 # The maximum of retention days the log archive log files to keep. The default value is unlimited
    admin_passwd: 2Li3.%wZ
    ocp_root_password: 9tN%@9zA
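The obproxy comments in this config say cluster_name must be consistent with oceanbase-ce's appname, and that OBD takes rs_list and cluster_name from the dependency when `depends` is set. If those two lines are really active in the file (and not commented out with the `#` lost in the forum paste), they disagree with the oceanbase-ce section. Below is a Python sketch of a few consistency checks on a dict hand-transcribed from the YAML above; loading the file directly would need a YAML parser such as PyYAML, which is deliberately not used. `check_config` and `expected_tenants` are hypothetical names, and nothing here establishes that any of these findings causes the startup hang.

```python
import re
from typing import Dict, List

_UNITS = {"M": 1, "G": 1024, "T": 1024 * 1024}


def size_to_mb(value: str) -> int:
    """Convert a size string such as '16G' or '7680M' to MB (M/G/T only)."""
    m = re.fullmatch(r"\s*(\d+)\s*([MGT])B?\s*", value, re.I)
    if m is None:
        raise ValueError(f"unsupported size: {value!r}")
    return int(m.group(1)) * _UNITS[m.group(2).upper()]


def check_config(cfg: Dict, expected_tenants: int = 3) -> List[str]:
    """Return warnings for a hand-transcribed OBD config (nested dicts)."""
    warnings: List[str] = []
    ob = cfg["oceanbase-ce"]
    ob_global = ob["global"]
    ob_ips = {s["ip"] for s in ob["servers"]}
    appname = ob_global.get("appname")
    proxy_global = cfg.get("obproxy-ce", {}).get("global", {})

    # 1. The obproxy comment says cluster_name must match oceanbase-ce's appname.
    cluster_name = proxy_global.get("cluster_name")
    if cluster_name is not None and cluster_name != appname:
        warnings.append(f"obproxy cluster_name {cluster_name!r} != appname {appname!r}")

    # 2. Every rs_list entry (ip:mysql_port;...) should be an oceanbase-ce server.
    for entry in filter(None, proxy_global.get("rs_list", "").split(";")):
        host = entry.rsplit(":", 1)[0]
        if host not in ob_ips:
            warnings.append(f"rs_list host {host} is not an oceanbase-ce server")

    # 3. system_memory is reserved out of memory_limit, so it must be smaller.
    if size_to_mb(ob_global["system_memory"]) >= size_to_mb(ob_global["memory_limit"]):
        warnings.append("system_memory is not smaller than memory_limit")

    # 4. Formula from the config comment:
    #    (4608 + (expect node num + expect tenant num) * 512) M
    meta = ob_global.get("ocp_meta_tenant")
    if meta and "log_disk_size" in meta:
        recommended = 4608 + (len(ob["servers"]) + expected_tenants) * 512
        actual = size_to_mb(meta["log_disk_size"])
        if actual < recommended:
            warnings.append(f"ocp_meta_tenant log_disk_size {actual}M < recommended {recommended}M")
    return warnings
```

Fed the values posted above (3 servers, appname ob_poc, cluster_name obcluster, the three 192.168.1.x rs_list entries, memory_limit 16G, system_memory 2G, meta tenant log_disk_size 7680M), it reports the cluster_name/appname mismatch and the three rs_list hosts; the memory and log-disk checks pass, since 7680M equals the formula's value for 3 nodes plus 3 tenants.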

OS:

CentOS Linux release 7.6.1810 (Core)

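I've now switched to version 4.2.1.8, and it hangs at the same place too.

Apart from the configuration values, nothing much is different. deploy works fine; only start fails.

Could you cut out just the ob (oceanbase-ce) part so we can take a look?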