【 使用环境 】测试环境

【 OB or 其他组件 】 OceanBase_CE 4.2.1

我们在 测试环境 进行如下测试操作:

- 从 A 表查询数据,经过处理后,批量插入 B 表。

- 每批次处理的数据为 20000 条。通过原生 JDBC 进行批量插入( 20000条是在同一个事务中,每3500条调用一次

executeBatch()) - 开了7个线程并行执行上述流程。

- A 表是传统单表,有40亿行数据,查询耗时 0.12秒 左右。

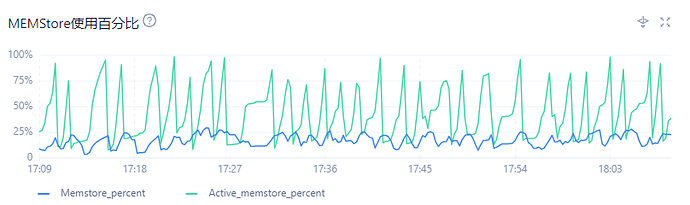

- B 表是分区表,每次插入的耗时,从 1.x 秒 ~ 70.x 秒不等(如下图所示)。

我们通过查询 oceanbase.v$OB_SQL_AUDIT 得到的慢查询如下所示( 为精简内容,只提供2条 ,批量插入的 SQL 太长已省略)

[

{

"SVR_IP": "172.31.46.6",

"SVR_PORT": 2882,

"REQUEST_ID": 424837637,

"SQL_EXEC_ID": 505109686,

"TRACE_ID": "YB42AC1F2E06-00061BAFD2ED7786-0-0",

"SID": 3221749360,

"CLIENT_IP": "172.31.45.237",

"CLIENT_PORT": 59728,

"TENANT_ID": 1002,

"TENANT_NAME": "test",

"EFFECTIVE_TENANT_ID": 1002,

"USER_ID": 200001,

"USER_NAME": "root",

"USER_GROUP": 0,

"USER_CLIENT_IP": "172.31.46.49",

"DB_ID": 503602,

"DB_NAME": "test",

"SQL_ID": "E12E769FAB3D9A8F06824C9B383377C8",

"QUERY_SQL": "",

"PLAN_ID": 48743,

"AFFECTED_ROWS": 3500,

"RETURN_ROWS": 0,

"PARTITION_CNT": 128,

"RET_CODE": 0,

"QC_ID": 0,

"DFO_ID": 0,

"SQC_ID": 0,

"WORKER_ID": 0,

"EVENT": "das wait remote response",

"P1TEXT": "",

"P1": 140505562599568,

"P2TEXT": "",

"P2": 0,

"P3TEXT": "",

"P3": 0,

"LEVEL": 0,

"WAIT_CLASS_ID": 107,

"WAIT_CLASS#": 7,

"WAIT_CLASS": "NETWORK",

"STATE": "WAITED KNOWN TIME",

"WAIT_TIME_MICRO": 51136536,

"TOTAL_WAIT_TIME_MICRO": 51136536,

"TOTAL_WAITS": 1,

"RPC_COUNT": 42,

"PLAN_TYPE": 1,

"IS_INNER_SQL": 0,

"IS_EXECUTOR_RPC": 0,

"IS_HIT_PLAN": 1,

"REQUEST_TIME": 1720771898387968,

"ELAPSED_TIME": 51284594,

"NET_TIME": 0,

"NET_WAIT_TIME": 2,

"QUEUE_TIME": 14,

"DECODE_TIME": 1,

"GET_PLAN_TIME": 11985,

"EXECUTE_TIME": 51272308,

"APPLICATION_WAIT_TIME": 0,

"CONCURRENCY_WAIT_TIME": 0,

"USER_IO_WAIT_TIME": 0,

"SCHEDULE_TIME": 0,

"ROW_CACHE_HIT": 0,

"BLOOM_FILTER_CACHE_HIT": 0,

"BLOCK_CACHE_HIT": 9408,

"DISK_READS": 0,

"RETRY_CNT": 0,

"TABLE_SCAN": 0,

"CONSISTENCY_LEVEL": 3,

"MEMSTORE_READ_ROW_COUNT": 0,

"SSSTORE_READ_ROW_COUNT": 0,

"DATA_BLOCK_READ_CNT": 4702,

"DATA_BLOCK_CACHE_HIT": 4702,

"INDEX_BLOCK_READ_CNT": 9412,

"INDEX_BLOCK_CACHE_HIT": 4706,

"BLOCKSCAN_BLOCK_CNT": 0,

"BLOCKSCAN_ROW_CNT": 0,

"PUSHDOWN_STORAGE_FILTER_ROW_CNT": 0,

"REQUEST_MEMORY_USED": 31426529,

"EXPECTED_WORKER_COUNT": 0,

"USED_WORKER_COUNT": 0,

"SCHED_INFO": null,

"FUSE_ROW_CACHE_HIT": 0,

"PS_CLIENT_STMT_ID": -1,

"PS_INNER_STMT_ID": -1,

"TX_ID": 506214539,

"SNAPSHOT_VERSION": 0,

"REQUEST_TYPE": 2,

"IS_BATCHED_MULTI_STMT": 0,

"OB_TRACE_INFO": null,

"PLAN_HASH": 3290318591324336132,

"LOCK_FOR_READ_TIME": 0,

"PARAMS_VALUE": "",

"RULE_NAME": "",

"PARTITION_HIT": 1,

"TX_INTERNAL_ROUTING": 1,

"TX_STATE_VERSION": 417,

"FLT_TRACE_ID": "",

"NETWORK_WAIT_TIME": 51136536

},

{

"SVR_IP": "172.31.46.6",

"SVR_PORT": 2882,

"REQUEST_ID": 424837464,

"SQL_EXEC_ID": 505109566,

"TRACE_ID": "YB42AC1F2E06-00061BAFD7F9056B-0-0",

"SID": 3221749256,

"CLIENT_IP": "172.31.46.6",

"CLIENT_PORT": 54212,

"TENANT_ID": 1002,

"TENANT_NAME": "test",

"EFFECTIVE_TENANT_ID": 1002,

"USER_ID": 200001,

"USER_NAME": "root",

"USER_GROUP": 0,

"USER_CLIENT_IP": "172.31.46.49",

"DB_ID": 503602,

"DB_NAME": "test",

"SQL_ID": "E12E769FAB3D9A8F06824C9B383377C8",

"QUERY_SQL": "*",

"PLAN_ID": 48743,

"AFFECTED_ROWS": 3500,

"RETURN_ROWS": 0,

"PARTITION_CNT": 128,

"RET_CODE": 0,

"QC_ID": 0,

"DFO_ID": 0,

"SQC_ID": 0,

"WORKER_ID": 0,

"EVENT": "das wait remote response",

"P1TEXT": "",

"P1": 140467300063936,

"P2TEXT": "",

"P2": 0,

"P3TEXT": "",

"P3": 0,

"LEVEL": 0,

"WAIT_CLASS_ID": 107,

"WAIT_CLASS#": 7,

"WAIT_CLASS": "NETWORK",

"STATE": "WAITED KNOWN TIME",

"WAIT_TIME_MICRO": 44044948,

"TOTAL_WAIT_TIME_MICRO": 44044948,

"TOTAL_WAITS": 1,

"RPC_COUNT": 36,

"PLAN_TYPE": 1,

"IS_INNER_SQL": 0,

"IS_EXECUTOR_RPC": 0,

"IS_HIT_PLAN": 1,

"REQUEST_TIME": 1720771896652022,

"ELAPSED_TIME": 44160137,

"NET_TIME": 0,

"NET_WAIT_TIME": 2,

"QUEUE_TIME": 14,

"DECODE_TIME": 1,

"GET_PLAN_TIME": 9008,

"EXECUTE_TIME": 44150790,

"APPLICATION_WAIT_TIME": 0,

"CONCURRENCY_WAIT_TIME": 0,

"USER_IO_WAIT_TIME": 0,

"SCHEDULE_TIME": 0,

"ROW_CACHE_HIT": 0,

"BLOOM_FILTER_CACHE_HIT": 0,

"BLOCK_CACHE_HIT": 9684,

"DISK_READS": 0,

"RETRY_CNT": 0,

"TABLE_SCAN": 0,

"CONSISTENCY_LEVEL": 3,

"MEMSTORE_READ_ROW_COUNT": 0,

"SSSTORE_READ_ROW_COUNT": 0,

"DATA_BLOCK_READ_CNT": 4558,

"DATA_BLOCK_CACHE_HIT": 4558,

"INDEX_BLOCK_READ_CNT": 10252,

"INDEX_BLOCK_CACHE_HIT": 5126,

"BLOCKSCAN_BLOCK_CNT": 0,

"BLOCKSCAN_ROW_CNT": 0,

"PUSHDOWN_STORAGE_FILTER_ROW_CNT": 0,

"REQUEST_MEMORY_USED": 30858687,

"EXPECTED_WORKER_COUNT": 0,

"USED_WORKER_COUNT": 0,

"SCHED_INFO": null,

"FUSE_ROW_CACHE_HIT": 0,

"PS_CLIENT_STMT_ID": -1,

"PS_INNER_STMT_ID": -1,

"TX_ID": 506214534,

"SNAPSHOT_VERSION": 0,

"REQUEST_TYPE": 2,

"IS_BATCHED_MULTI_STMT": 0,

"OB_TRACE_INFO": null,

"PLAN_HASH": 3290318591324336132,

"LOCK_FOR_READ_TIME": 0,

"PARAMS_VALUE": "",

"RULE_NAME": "",

"PARTITION_HIT": 1,

"TX_INTERNAL_ROUTING": 1,

"TX_STATE_VERSION": 228,

"FLT_TRACE_ID": "",

"NETWORK_WAIT_TIME": 44044948

}

]

B 表的建表分区情况大致如下:

CREATE TABLE `table_b` (

`id` bigint unsigned NOT NULL,

`merchant_id` int unsigned NOT NULL,

`user_id` int unsigned NOT NULL,

`local_time` datetime NOT NULL,

`days` SMALLINT UNSIGNED GENERATED ALWAYS AS ( (id & 65504) >> 5 ) VIRTUAL,

`uid` TINYINT UNSIGNED GENERATED ALWAYS AS ( id & 31 ) VIRTUAL,

PRIMARY KEY (`id`) USING BTREE

) DEFAULT CHARSET=utf8mb4

PARTITION BY LIST (days)

SUBPARTITION BY HASH (uid) SUBPARTITIONS 32

(

PARTITION P20240611_0 VALUES IN ( 1632, 1633, 1634, 1635, 1636, 1637, 1638, 1639, 1640, 1641, 1642, 1643, 1644, 1645, 1646, 1647 ),

PARTITION P20240612_0 VALUES IN ( 1648, 1649, 1650, 1651, 1652, 1653, 1654, 1655, 1656, 1657, 1658, 1659, 1660, 1661, 1662, 1663 )

);

同一批次插入的数据一般都是同一天的(最多在凌晨跨天),因此一次插入最多分散在32个分区中。

请问各位大神,这种抖动得如此厉害的情况该如何避免呢 ?