[2024-05-31 11:26:08.934825] WDIAG [RPC] send (ob_poc_rpc_proxy.h:173) [25579][T1002_TntShared][T1002][YB427F000001-00061908C2BDEF9E-0-0] [lt=0][errcode=-4023] REACH SYSLOG RATE LIMIT [bandwidth]

[2024-05-31 11:26:08.934852] INFO [SQL.DAS] fetch_new_range (ob_das_id_rpc.cpp:114) [25579][T1002_TntShared][T1002][YB427F000001-00061908C2BDEF9E-0-0] [lt=0] fetch new DAS ID range finish(ret=-4023, ret=“OB_EAGAIN”, msg={tenant_id:1002, range:1000000}, res={tenant_id:0, status:0, start_id:0, end_id:0})

[2024-05-31 11:26:08.934877] INFO [SERVER] sleep_before_local_retry (ob_query_retry_ctrl.cpp:91) [25579][T1002_TntShared][T1002][YB427F000001-00061908C2BDEF9E-0-0] [lt=0] will sleep(sleep_us=33000, remain_us=20538559, base_sleep_us=1000, retry_sleep_type=1, v.stmt_retry_times_=33, v.err_=-4383, timeout_timestamp=1717125989473435)

[2024-05-31 11:26:08.936625] INFO [SQL.EXE] start_stmt (ob_sql_trans_control.cpp:636) [30386][T1015_ReqMemEvi][T1015][YB427F000001-000619085BDFFF95-0-0] [lt=0] start stmt(ret=-4383, auto_commit=true, session_id=1, snapshot={this:0x7f4d91053108, valid:false, source:0, core:{version:{val:18446744073709551615, v:3}, tx_id:{txid:0}, scn:0}, uncertain_bound:0, snapshot_lsid:{id:-1}, snapshot_ls_role:0, snapshot_acquire_addr:“0.0.0.0:0”, parts:[], committed:false}, savepoint=0, tx_desc={this:0x7f4f3b811cb0, tx_id:{txid:0}, state:1, addr:“127.0.0.1:2882”, tenant_id:1015, session_id:1, assoc_session_id:1, xid:NULL, xa_mode:"", xa_start_addr:“0.0.0.0:0”, access_mode:0, tx_consistency_type:0, isolation:1, snapshot_version:{val:18446744073709551615, v:3}, snapshot_scn:0, active_scn:0, op_sn:2, alloc_ts:1717125968881577, active_ts:-1, commit_ts:-1, finish_ts:-1, timeout_us:29999988, lock_timeout_us:-1, expire_ts:9223372036854775807, coord_id:{id:-1}, parts:[], exec_info_reap_ts:0, commit_version:{val:18446744073709551615, v:3}, commit_times:0, commit_cb:null, cluster_id:1716372876, cluster_version:17180065793, seq_base:1717125968880464, flags_.SHADOW:true, flags_.INTERRUPTED:false, flags_.BLOCK:false, flags_.REPLICA:false, can_elr:true, cflict_txs:[], abort_cause:0, commit_expire_ts:-1, commit_task_.is_registered():false, ref:1}, plan_type=1, stmt_type=1, has_for_update=true, query_start_time=1717125944540899, use_das=false, nested_level=0, session={this:0x7f4d576a61a0, id:1, deser:false, tenant:“sys”, tenant_id:1, effective_tenant:“sys”, effective_tenant_id:1015, database:“oceanbase”, user:“root@%”, consistency_level:3, session_state:0, autocommit:true, tx:0x7f4f3b811cb0}, plan=0x7f4d7ace6050, consistency_level_in_plan_ctx=3, trans_result={incomplete:false, parts:[], touched_ls_list:[], cflict_txs:[]})

[2024-05-31 11:26:08.936697] INFO [SERVER] sleep_before_local_retry (ob_query_retry_ctrl.cpp:91) [30386][T1015_ReqMemEvi][T1015][YB427F000001-000619085BDFFF95-0-0] [lt=0] will sleep(sleep_us=100000, remain_us=5604190, base_sleep_us=1000, retry_sleep_type=1, v.stmt_retry_times_=190, v.err_=-4383, timeout_timestamp=1717125974540887)

[2024-05-31 11:26:08.937162] INFO [SQL.EXE] end_stmt (ob_sql_trans_control.cpp:1139) [16628][TimezoneMgr][T1002][YB427F000001-00061908C76E0CC5-0-0] [lt=1] end stmt(ret=0, tx_id=0, plain_select=true, stmt_type=1, savepoint=0, tx_desc={this:0x7f4effcd3230, tx_id:{txid:0}, state:1, addr:“127.0.0.1:2882”, tenant_id:1002, session_id:1, assoc_session_id:1, xid:NULL, xa_mode:"", xa_start_addr:“0.0.0.0:0”, access_mode:-1, tx_consistency_type:0, isolation:-1, snapshot_version:{val:18446744073709551615, v:3}, snapshot_scn:0, active_scn:0, op_sn:1, alloc_ts:1717125968669068, active_ts:-1, commit_ts:-1, finish_ts:-1, timeout_us:-1, lock_timeout_us:-1, expire_ts:9223372036854775807, coord_id:{id:-1}, parts:[], exec_info_reap_ts:0, commit_version:{val:18446744073709551615, v:3}, commit_times:0, commit_cb:null, cluster_id:-1, cluster_version:17180065793, seq_base:1717125968666941, flags_.SHADOW:true, flags_.INTERRUPTED:false, flags_.BLOCK:false, flags_.REPLICA:false, can_elr:true, cflict_txs:[], abort_cause:0, commit_expire_ts:-1, commit_task_.is_registered():false, ref:1}, trans_result={incomplete:false, parts:[], touched_ls_list:[], cflict_txs:[]}, rollback=true, session={this:0x7f4f00ecc1a0, id:1, deser:false, tenant:“sys”, tenant_id:1, effective_tenant:“sys”, effective_tenant_id:1002, database:“oceanbase”, user:“root@%”, consistency_level:3, session_state:0, autocommit:true, tx:0x7f4effcd3230}, exec_ctx.get_errcode()=-4383)

[2024-05-31 11:26:08.937531] INFO [SQL.DAS] fetch_new_range (ob_das_id_rpc.cpp:114) [16628][TimezoneMgr][T1002][YB427F000001-00061908C76E0CC5-0-0] [lt=0] fetch new DAS ID range finish(ret=-4023, ret=“OB_EAGAIN”, msg={tenant_id:1002, range:1000000}, res={tenant_id:0, status:0, start_id:0, end_id:0})

[2024-05-31 11:26:08.938518] INFO [SQL.EXE] start_stmt (ob_sql_trans_control.cpp:636) [16070][EvtHisUpdTask][T1][YB427F000001-00061908C15DE35D-0-0] [lt=0] start stmt(ret=-4383, auto_commit=true, session_id=1, snapshot={this:0x7f4f7c0d3388, valid:false, source:0, core:{version:{val:18446744073709551615, v:3}, tx_id:{txid:0}, scn:0}, uncertain_bound:0, snapshot_lsid:{id:-1}, snapshot_ls_role:0, snapshot_acquire_addr:“0.0.0.0:0”, parts:[], committed:false}, savepoint=0, tx_desc={this:0x7f4f72fccc10, tx_id:{txid:0}, state:1, addr:“127.0.0.1:2882”, tenant_id:1, session_id:1, assoc_session_id:1, xid:NULL, xa_mode:"", xa_start_addr:“0.0.0.0:0”, access_mode:0, tx_consistency_type:0, isolation:1, snapshot_version:{val:18446744073709551615, v:3}, snapshot_scn:0, active_scn:0, op_sn:2, alloc_ts:1717125968883726, active_ts:-1, commit_ts:-1, finish_ts:-1, timeout_us:29999993, lock_timeout_us:-1, expire_ts:9223372036854775807, coord_id:{id:-1}, parts:[], exec_info_reap_ts:0, commit_version:{val:18446744073709551615, v:3}, commit_times:0, commit_cb:null, cluster_id:1716372876, cluster_version:17180065793, seq_base:1717125968881577, flags_.SHADOW:true, flags_.INTERRUPTED:false, flags_.BLOCK:false, flags_.REPLICA:false, can_elr:false, cflict_txs:[], abort_cause:0, commit_expire_ts:-1, commit_task_.is_registered():false, ref:1}, plan_type=4, stmt_type=2, has_for_update=false, query_start_time=1717125954907776, use_das=false, nested_level=0, session={this:0x7f4cd62fa1a0, id:1, deser:false, tenant:“sys”, tenant_id:1, effective_tenant:“sys”, effective_tenant_id:1, database:“oceanbase”, user:“root@%”, consistency_level:3, session_state:0, autocommit:true, tx:0x7f4f72fccc10}, plan=0x7f4f43258050, consistency_level_in_plan_ctx=3, trans_result={incomplete:false, parts:[], touched_ls_list:[], cflict_txs:[]})

[2024-05-31 11:26:08.938586] INFO [SERVER] sleep_before_local_retry (ob_query_retry_ctrl.cpp:91) [16070][EvtHisUpdTask][T1][YB427F000001-00061908C15DE35D-0-0] [lt=0] will sleep(sleep_us=100000, remain_us=15969183, base_sleep_us=1000, retry_sleep_type=1, v.stmt_retry_times_=123, v.err_=-4383, timeout_timestamp=1717125984907769)

[2024-05-31 11:26:08.938818] INFO [STORAGE.TRANS] get_number (ob_id_service.cpp:389) [25864][T1001_L0_G5][T1001][YB427F000001-00061908ABEFFA98-0-0] [lt=2] get number(ret=-4023, service_type_=2, range=1000000, base_id=0, start_id=0, end_id=0)
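A side note on the `sleep_before_local_retry` lines above: the numbers look like a linear backoff with a cap. With `base_sleep_us=1000`, retry 33 sleeps 33000 us, while retries 123 and 190 both sleep 100000 us. The sketch below only reproduces that arithmetic. The 100000 us cap and the function name are assumptions inferred from these three log lines, not taken from the OceanBase source.

```python
def retry_sleep_us(retry_times, base_sleep_us=1000, max_sleep_us=100_000, remain_us=None):
    """Linear backoff with a cap (cap value inferred from the log, an assumption)."""
    sleep_us = min(base_sleep_us * retry_times, max_sleep_us)
    if remain_us is not None:
        # Never sleep past the statement timeout (assumed behaviour).
        sleep_us = min(sleep_us, max(remain_us, 0))
    return sleep_us


if __name__ == "__main__":
    for times in (33, 123, 190):
        print(times, retry_sleep_us(times))
```

Retry counts of 123 and 190 against the same error (`v.err_=-4383`) mean the statements keep failing for many seconds rather than hitting a short blip.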

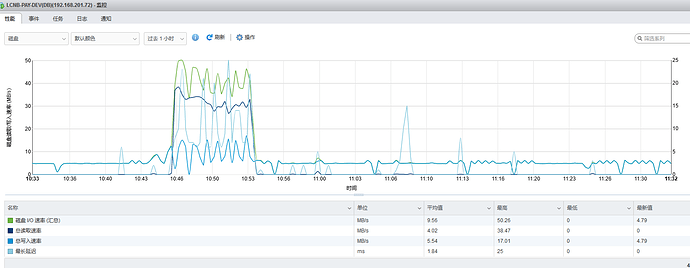

What type of disk is this? For OceanBase, SSDs are recommended; otherwise, please check the disk I/O.
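If it helps to answer the disk-type question: on Linux, each block device exposes `/sys/block/<dev>/queue/rotational`, where `1` means a rotational disk (HDD) and `0` means non-rotational (SSD/NVMe). A minimal sketch that reads the flag is below; the function name is made up for illustration. On virtual machines the flag may just reflect what the hypervisor reports, so treat the result as a hint.

```python
from pathlib import Path


def list_rotational(sys_block="/sys/block"):
    """Map each block device name to True (rotational/HDD) or False (SSD-like)."""
    result = {}
    root = Path(sys_block)
    if not root.is_dir():
        return result
    for dev in sorted(root.iterdir()):
        flag = dev / "queue" / "rotational"
        if flag.is_file():
            result[dev.name] = flag.read_text().strip() == "1"
    return result


if __name__ == "__main__":
    for name, rotational in list_rotational().items():
        print(name, "HDD (rotational)" if rotational else "SSD/NVMe (non-rotational)")
```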

[2024-05-31 11:17:54.916942] ERROR inner_aio (ob_io_manager.cpp:883) [26479][T1004_OB_SLOG][T1004][Y0-0000000000000000-0-0] [lt=0][errcode=-4392] disk is hung(msg=“disk has fatal error”)

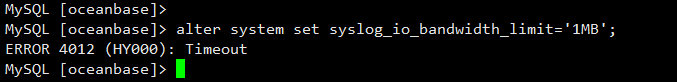

It timed out, so I can't modify it.

The disk may have a performance problem, or it may be faulty. SSDs are recommended.
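For a rough first look at disk write latency, something like the following can be run against the data directory. It is only a sketch: it times a handful of small write-plus-`fsync` calls and is not a substitute for a proper benchmark tool. The function name, write count, and block size are arbitrary choices for illustration, and no official threshold is implied.

```python
import os
import tempfile
import time


def fsync_latencies_ms(directory, writes=20, size=4096):
    """Time `writes` small synchronous writes in `directory`; returns milliseconds."""
    latencies = []
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        block = b"\0" * size
        for _ in range(writes):
            start = time.perf_counter()
            os.write(fd, block)
            os.fsync(fd)  # force the data to the device, like a log write would
            latencies.append((time.perf_counter() - start) * 1000.0)
    finally:
        os.close(fd)
        os.unlink(path)
    return latencies


if __name__ == "__main__":
    result = fsync_latencies_ms(".")
    print(f"max={max(result):.2f} ms, avg={sum(result) / len(result):.2f} ms")
```

If individual syncs take a very long time or stall, that would be consistent with the `disk is hung` error reported on the `T1004_OB_SLOG` thread.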

This is resolved now; the problem went away after the user rebooted the machine.

[2024-05-31 12:04:17.054] [DEBUG] - cmd: []

[2024-05-31 12:04:17.054] [DEBUG] - opts: {}

[2024-05-31 12:04:17.054] [DEBUG] - mkdir /root/.obd/lock/

[2024-05-31 12:04:17.054] [DEBUG] - set lock mode to NO_LOCK(0)

[2024-05-31 12:04:17.054] [DEBUG] - Get deploy list

[2024-05-31 12:04:17.055] [DEBUG] - mkdir /root/.obd/cluster/

[2024-05-31 12:04:17.055] [DEBUG] - mkdir /root/.obd/config_parser/

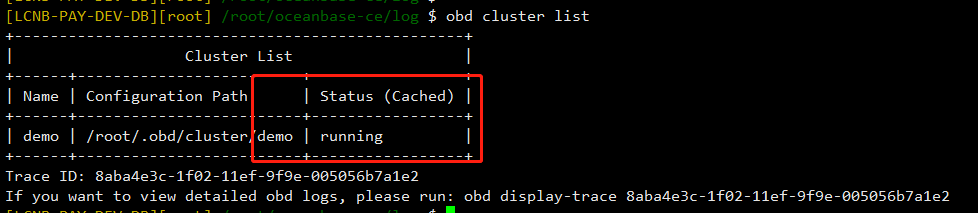

[2024-05-31 12:04:17.063] [INFO] +--------------------------------------------------+

[2024-05-31 12:04:17.063] [INFO] |                   Cluster List                   |

[2024-05-31 12:04:17.063] [INFO] +------+-------------------------+-----------------+

[2024-05-31 12:04:17.063] [INFO] | Name | Configuration Path      | Status (Cached) |

[2024-05-31 12:04:17.063] [INFO] +------+-------------------------+-----------------+

[2024-05-31 12:04:17.063] [INFO] | demo | /root/.obd/cluster/demo | running         |

[2024-05-31 12:04:17.063] [INFO] +------+-------------------------+-----------------+

[2024-05-31 12:04:17.063] [INFO] Trace ID: d975ac1a-1f02-11ef-aa3b-005056b7a1e2

[2024-05-31 12:04:17.063] [INFO] If you want to view detailed obd logs, please run: obd display-trace d975ac1a-1f02-11ef-aa3b-005056b7a1e2

After a reinstall, the previous configuration and data are all wiped. This is already a new cluster.

How would you restart it without passing the cluster name? The documentation explains this in detail.
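To make the point about the name concrete: `obd cluster start`, `stop` and `restart` each take the deployment name as an argument, and in this thread that name is `demo` (see the cluster list above). The helper below is a hypothetical wrapper that only builds the argument list and refuses an empty name; it is not part of obd.

```python
import subprocess


def obd_cluster_command(action, deploy_name):
    """Build the argv for `obd cluster <action> <deploy_name>` (helper is illustrative)."""
    allowed = {"start", "stop", "restart"}
    if action not in allowed:
        raise ValueError(f"unsupported action: {action!r}")
    if not deploy_name or not deploy_name.strip():
        raise ValueError("a deployment name is required, e.g. 'demo'")
    return ["obd", "cluster", action, deploy_name.strip()]


if __name__ == "__main__":
    argv = obd_cluster_command("restart", "demo")
    print(" ".join(argv))
    # Uncomment to actually run it on a host where obd is installed:
    # subprocess.run(argv, check=True)
```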

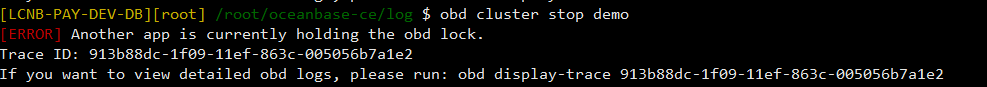

It won't stop.

[2024-05-31 12:52:22.354] [DEBUG] - cmd: ['demo']

[2024-05-31 12:52:22.354] [DEBUG] - opts: {'servers': None, 'components': None}

[2024-05-31 12:52:22.355] [DEBUG] - mkdir /root/.obd/lock/

[2024-05-31 12:52:22.355] [DEBUG] - unknown lock mode

[2024-05-31 12:52:22.355] [DEBUG] - try to get share lock /root/.obd/lock/global

[2024-05-31 12:52:22.355] [DEBUG] - share lock /root/.obd/lock/global, count 1

[2024-05-31 12:52:22.355] [DEBUG] - Get Deploy by name

[2024-05-31 12:52:22.355] [DEBUG] - mkdir /root/.obd/cluster/

[2024-05-31 12:52:22.356] [DEBUG] - mkdir /root/.obd/config_parser/

[2024-05-31 12:52:22.356] [DEBUG] - try to get exclusive lock /root/.obd/lock/deploy_demo

[2024-05-31 12:52:22.358] [ERROR] Another app is currently holding the obd lock.

[2024-05-31 12:52:22.358] [ERROR] Traceback (most recent call last):

[2024-05-31 12:52:22.358] [ERROR] File "_lock.py", line 64, in _ex_lock

[2024-05-31 12:52:22.358] [ERROR] File "tool.py", line 499, in exclusive_lock_obj

[2024-05-31 12:52:22.358] [ERROR] BlockingIOError: [Errno 11] Resource temporarily unavailable

[2024-05-31 12:52:22.359] [ERROR]

[2024-05-31 12:52:22.359] [ERROR] During handling of the above exception, another exception occurred:

[2024-05-31 12:52:22.359] [ERROR]

[2024-05-31 12:52:22.359] [ERROR] Traceback (most recent call last):

[2024-05-31 12:52:22.359] [ERROR] File "_lock.py", line 85, in ex_lock

[2024-05-31 12:52:22.359] [ERROR] File "_lock.py", line 66, in _ex_lock

[2024-05-31 12:52:22.359] [ERROR] _errno.LockError: [Errno 11] Resource temporarily unavailable

[2024-05-31 12:52:22.359] [ERROR]

[2024-05-31 12:52:22.359] [ERROR] During handling of the above exception, another exception occurred:

[2024-05-31 12:52:22.359] [ERROR]

[2024-05-31 12:52:22.359] [ERROR] Traceback (most recent call last):

[2024-05-31 12:52:22.359] [ERROR] File "obd.py", line 244, in do_command

[2024-05-31 12:52:22.359] [ERROR] File "obd.py", line 912, in _do_command

[2024-05-31 12:52:22.359] [ERROR] File "core.py", line 2654, in stop_cluster

[2024-05-31 12:52:22.359] [ERROR] File "_deploy.py", line 1831, in get_deploy_config

[2024-05-31 12:52:22.359] [ERROR] File "_deploy.py", line 1818, in _lock

[2024-05-31 12:52:22.359] [ERROR] File "_lock.py", line 283, in deploy_ex_lock

[2024-05-31 12:52:22.359] [ERROR] File "_lock.py", line 262, in _ex_lock

[2024-05-31 12:52:22.359] [ERROR] File "_lock.py", line 254, in _lock

[2024-05-31 12:52:22.359] [ERROR] File "_lock.py", line 185, in lock

[2024-05-31 12:52:22.359] [ERROR] File "_lock.py", line 90, in ex_lock

[2024-05-31 12:52:22.359] [ERROR] _errno.LockError: [Errno 11] Resource temporarily unavailable

[2024-05-31 12:52:22.360] [ERROR]

[2024-05-31 12:52:22.360] [INFO] Trace ID: 913b88dc-1f09-11ef-863c-005056b7a1e2

[2024-05-31 12:52:22.360] [INFO] If you want to view detailed obd logs, please run: obd display-trace 913b88dc-1f09-11ef-863c-005056b7a1e2

[2024-05-31 12:52:22.360] [DEBUG] - share lock /root/.obd/lock/global release, count 0

[2024-05-31 12:52:22.360] [DEBUG] - unlock /root/.obd/lock/global

[2024-05-31 12:52:22.360] [DEBUG] - unlock /root/.obd/lock/deploy_demo
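About the `BlockingIOError: [Errno 11]` in the traceback above: that is what Python raises when a non-blocking exclusive file lock is requested while something else already holds it. The sketch below shows the behaviour with `fcntl.flock`. Whether obd uses `flock` specifically is an assumption here; the point is only that a second holder of `/root/.obd/lock/deploy_demo` (for example another obd command that is still running or stuck) would produce this kind of error.

```python
import fcntl
import os


def try_exclusive_lock(path):
    """Try a non-blocking exclusive lock; return the fd on success, None if held."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        fcntl.flock(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
    except BlockingIOError:
        # Errno 11 (EAGAIN): another open file description holds the lock.
        os.close(fd)
        return None
    return fd


if __name__ == "__main__":
    first = try_exclusive_lock("/tmp/demo.lock")
    second = try_exclusive_lock("/tmp/demo.lock")
    print("first holder:", first is not None, "second holder:", second is not None)
    if first is not None:
        os.close(first)  # closing the descriptor releases the lock
```

Under that reading, it is worth checking for another obd process still running against the same deployment before retrying the stop.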

[2024-05-31 12:58:14.537764] INFO [SERVER.OMT] stop (ob_multi_tenant.cpp:626) [2730][observer][T0][Y0-0000000000000000-0-0] [lt=1] there’re some tenants need destroy(count=4)

[2024-05-31 12:58:14.537928] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2730][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1001)

[2024-05-31 12:58:14.537960] WDIAG [LIB] try_wait (threads.cpp:278) [2730][observer][T0][Y0-0000000000000000-0-0] [lt=0][errcode=-4023] ObPThread try_wait failed(ret=-4023)

[2024-05-31 12:58:14.538043] WDIAG [SERVER.OMT] try_wait (ob_tenant.cpp:1107) [2730][observer][T0][Y0-0000000000000000-0-0] [lt=0][errcode=-4023] tenant pthread_tryjoin_np failed(errno=11, id_=1)

[2024-05-31 12:58:14.538082] WDIAG [LIB] try_wait (thread.cpp:241) [2730][observer][T0][Y0-0000000000000000-0-0] [lt=0][errcode=-4023] pthread_tryjoin_np failed(pret=16, errno=11, ret=-4023)

[2024-05-31 12:58:14.538088] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1691) [2730][observer][T0][Y0-0000000000000000-0-0] [lt=1] removed_tenant begin to kill tenant session(tenant_id=1002)

[2024-05-31 12:58:14.538378] WDIAG [LIB] try_wait (threads.cpp:278) [2730][observer][T0][Y0-0000000000000000-0-0] [lt=1][errcode=-4023] ObPThread try_wait failed(ret=-4023)

[2024-05-31 12:58:14.538416] INFO [SERVER.OMT] stop (ob_multi_tenant.cpp:626) [2730][observer][T0][Y0-0000000000000000-0-0] [lt=0] there’re some tenants need destroy(count=4)

[2024-05-31 12:58:14.538470] WDIAG [SERVER.OMT] try_wait (ob_tenant.cpp:1107) [2730][observer][T0][Y0-0000000000000000-0-0] [lt=0][errcode=-4023] tenant pthread_tryjoin_np failed(errno=11, id_=1)

[2024-05-31 12:58:14.538509] WDIAG [LIB] try_wait (thread.cpp:241) [2730][observer][T0][Y0-0000000000000000-0-0] [lt=0][errcode=-4023] pthread_tryjoin_np failed(pret=16, errno=11, ret=-4023)

This is what observer.log reports.
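For reading those `try_wait` lines: `pret=16` and `errno=11` are Linux errno values. `pthread_tryjoin_np` returns `EBUSY` when the thread has not terminated yet, so the repeated warnings suggest tenant threads that are still running while the observer tries to stop. The sketch below decodes the numbers and shows a rough Python analogue of a non-blocking join. It is an analogy only, not how the observer is implemented, and the function names are made up.

```python
import errno
import threading


def errno_name(code):
    """Symbolic name for an errno value on this platform (Linux values assumed)."""
    return errno.errorcode.get(code, "UNKNOWN")


def try_join(thread):
    """Non-blocking join: True if the thread has finished, False if still running."""
    thread.join(timeout=0)
    return not thread.is_alive()


if __name__ == "__main__":
    print(16, errno_name(16))
    print(11, errno_name(11))
    stop = threading.Event()
    worker = threading.Thread(target=stop.wait)
    worker.start()
    print("finished before stop:", try_join(worker))
    stop.set()
    worker.join()
    print("finished after stop:", try_join(worker))
```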

[2024-05-31 13:56:39.932] [DEBUG] - cmd: ['demo']

[2024-05-31 13:56:39.933] [DEBUG] - opts: {'servers': None, 'components': None, 'force_delete': None, 'strict_check': None, 'without_parameter': None}

[2024-05-31 13:56:39.933] [DEBUG] - mkdir /root/.obd/lock/

[2024-05-31 13:56:39.933] [DEBUG] - unknown lock mode

[2024-05-31 13:56:39.933] [DEBUG] - try to get share lock /root/.obd/lock/global

[2024-05-31 13:56:39.933] [DEBUG] - share lock /root/.obd/lock/global, count 1

[2024-05-31 13:56:39.933] [DEBUG] - Get Deploy by name

[2024-05-31 13:56:39.933] [DEBUG] - mkdir /root/.obd/cluster/

[2024-05-31 13:56:39.933] [DEBUG] - mkdir /root/.obd/config_parser/

[2024-05-31 13:56:39.934] [DEBUG] - try to get exclusive lock /root/.obd/lock/deploy_demo

[2024-05-31 13:56:39.934] [DEBUG] - exclusive lock /root/.obd/lock/deploy_demo, count 1

[2024-05-31 13:56:39.941] [DEBUG] - Deploy status judge

[2024-05-31 13:56:39.974] [INFO] Get local repositories

[2024-05-31 13:56:39.975] [DEBUG] - mkdir /root/.obd/repository

[2024-05-31 13:56:39.975] [DEBUG] - Get local repository obagent-4.2.2-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:39.975] [DEBUG] - Search repository obagent version: 4.2.2, tag: 19739a07a12eab736aff86ecf357b1ae660b554e, release: None, package_hash: None

[2024-05-31 13:56:39.976] [DEBUG] - try to get share lock /root/.obd/lock/mirror_and_repo

[2024-05-31 13:56:39.976] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo, count 1

[2024-05-31 13:56:39.976] [DEBUG] - mkdir /root/.obd/repository/obagent

[2024-05-31 13:56:40.006] [DEBUG] - Found repository obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:40.006] [DEBUG] - Get local repository obproxy-ce-4.2.3.0-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:40.006] [DEBUG] - Search repository obproxy-ce version: 4.2.3.0, tag: 0490ebc04220def8d25cb9cac9ac61a4efa6d639, release: None, package_hash: None

[2024-05-31 13:56:40.006] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo, count 2

[2024-05-31 13:56:40.006] [DEBUG] - mkdir /root/.obd/repository/obproxy-ce

[2024-05-31 13:56:40.030] [DEBUG] - Found repository obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:40.030] [DEBUG] - Get local repository oceanbase-ce-4.3.0.1-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:40.030] [DEBUG] - Search repository oceanbase-ce version: 4.3.0.1, tag: c4a03c83614f50c99ddb1c37dda858fa5d9b14b7, release: None, package_hash: None

[2024-05-31 13:56:40.030] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo, count 3

[2024-05-31 13:56:40.030] [DEBUG] - mkdir /root/.obd/repository/oceanbase-ce

[2024-05-31 13:56:40.050] [DEBUG] - Found repository oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:40.051] [DEBUG] - Get local repository grafana-7.5.17-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:40.051] [DEBUG] - Search repository grafana version: 7.5.17, tag: 1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6, release: None, package_hash: None

[2024-05-31 13:56:40.051] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo, count 4

[2024-05-31 13:56:40.051] [DEBUG] - mkdir /root/.obd/repository/grafana

[2024-05-31 13:56:40.080] [DEBUG] - Found repository grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:40.080] [DEBUG] - Get local repository prometheus-2.37.1-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:40.080] [DEBUG] - Search repository prometheus version: 2.37.1, tag: 58913c7606f05feb01bc1c6410346e5fc31cf263, release: None, package_hash: None

[2024-05-31 13:56:40.080] [DEBUG] - share lock /root/.obd/lock/mirror_and_repo, count 5

[2024-05-31 13:56:40.081] [DEBUG] - mkdir /root/.obd/repository/prometheus

[2024-05-31 13:56:40.107] [DEBUG] - Found repository prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:40.236] [DEBUG] - Get deploy config

[2024-05-31 13:56:40.258] [INFO] Search plugins

[2024-05-31 13:56:40.258] [DEBUG] - Searching start_check plugin for components …

[2024-05-31 13:56:40.258] [DEBUG] - Searching start_check plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:40.259] [DEBUG] - mkdir /root/.obd/plugins

[2024-05-31 13:56:40.290] [DEBUG] - Found for obagent-py_script_start_check-1.3.0 for obagent-4.2.2

[2024-05-31 13:56:40.290] [DEBUG] - Searching start_check plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:40.302] [DEBUG] - Found for obproxy-ce-py_script_start_check-4.2.3 for obproxy-ce-4.2.3.0

[2024-05-31 13:56:40.302] [DEBUG] - Searching start_check plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:40.348] [DEBUG] - Found for oceanbase-ce-py_script_start_check-4.2.2.0 for oceanbase-ce-4.3.0.1

[2024-05-31 13:56:40.348] [DEBUG] - Searching start_check plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:40.357] [DEBUG] - Found for grafana-py_script_start_check-7.5.17 for grafana-7.5.17

[2024-05-31 13:56:40.357] [DEBUG] - Searching start_check plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:40.366] [DEBUG] - Found for prometheus-py_script_start_check-2.37.1 for prometheus-2.37.1

[2024-05-31 13:56:40.366] [DEBUG] - Searching create_tenant plugin for components …

[2024-05-31 13:56:40.366] [DEBUG] - Searching create_tenant plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:40.367] [DEBUG] - No such create_tenant plugin for obagent-4.2.2

[2024-05-31 13:56:40.367] [DEBUG] - Searching create_tenant plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:40.367] [DEBUG] - No such create_tenant plugin for obproxy-ce-4.2.3.0

[2024-05-31 13:56:40.367] [DEBUG] - Searching create_tenant plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:40.368] [DEBUG] - Found for oceanbase-ce-py_script_create_tenant-4.3.0.0 for oceanbase-ce-4.3.0.1

[2024-05-31 13:56:40.368] [DEBUG] - Searching create_tenant plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:40.368] [DEBUG] - No such create_tenant plugin for grafana-7.5.17

[2024-05-31 13:56:40.368] [DEBUG] - Searching create_tenant plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:40.368] [DEBUG] - No such create_tenant plugin for prometheus-2.37.1

[2024-05-31 13:56:40.369] [DEBUG] - Searching tenant_optimize plugin for components …

[2024-05-31 13:56:40.369] [DEBUG] - Searching tenant_optimize plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:40.369] [DEBUG] - No such tenant_optimize plugin for obagent-4.2.2

[2024-05-31 13:56:40.369] [DEBUG] - Searching tenant_optimize plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:40.369] [DEBUG] - No such tenant_optimize plugin for obproxy-ce-4.2.3.0

[2024-05-31 13:56:40.370] [DEBUG] - Searching tenant_optimize plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:40.370] [DEBUG] - Found for oceanbase-ce-py_script_tenant_optimize-4.3.0.0 for oceanbase-ce-4.3.0.1

[2024-05-31 13:56:40.370] [DEBUG] - Searching tenant_optimize plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:40.370] [DEBUG] - No such tenant_optimize plugin for grafana-7.5.17

[2024-05-31 13:56:40.370] [DEBUG] - Searching tenant_optimize plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:40.370] [DEBUG] - No such tenant_optimize plugin for prometheus-2.37.1

[2024-05-31 13:56:40.370] [DEBUG] - Searching start plugin for components …

[2024-05-31 13:56:40.371] [DEBUG] - Searching start plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:40.371] [DEBUG] - Found for obagent-py_script_start-1.3.0 for obagent-4.2.2

[2024-05-31 13:56:40.371] [DEBUG] - Searching start plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:40.372] [DEBUG] - Found for obproxy-ce-py_script_start-4.2.3 for obproxy-ce-4.2.3.0

[2024-05-31 13:56:40.372] [DEBUG] - Searching start plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:40.373] [DEBUG] - Found for oceanbase-ce-py_script_start-4.3.0.0 for oceanbase-ce-4.3.0.1

[2024-05-31 13:56:40.373] [DEBUG] - Searching start plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:40.373] [DEBUG] - Found for grafana-py_script_start-7.5.17 for grafana-7.5.17

[2024-05-31 13:56:40.373] [DEBUG] - Searching start plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:40.373] [DEBUG] - Found for prometheus-py_script_start-2.37.1 for prometheus-2.37.1

[2024-05-31 13:56:40.373] [DEBUG] - Searching connect plugin for components …

[2024-05-31 13:56:40.373] [DEBUG] - Searching connect plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:40.379] [DEBUG] - Found for obagent-py_script_connect-1.3.0 for obagent-4.2.2

[2024-05-31 13:56:40.379] [DEBUG] - Searching connect plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:40.379] [DEBUG] - Found for obproxy-ce-py_script_connect-3.1.0 for obproxy-ce-4.2.3.0

[2024-05-31 13:56:40.379] [DEBUG] - Searching connect plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:40.380] [DEBUG] - Found for oceanbase-ce-py_script_connect-4.2.2.0 for oceanbase-ce-4.3.0.1

[2024-05-31 13:56:40.380] [DEBUG] - Searching connect plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:40.380] [DEBUG] - Found for grafana-py_script_connect-7.5.17 for grafana-7.5.17

[2024-05-31 13:56:40.380] [DEBUG] - Searching connect plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:40.380] [DEBUG] - Found for prometheus-py_script_connect-2.37.1 for prometheus-2.37.1

[2024-05-31 13:56:40.380] [DEBUG] - Searching bootstrap plugin for components …

[2024-05-31 13:56:40.380] [DEBUG] - Searching bootstrap plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:40.381] [DEBUG] - Found for obagent-py_script_bootstrap-0.1 for obagent-4.2.2

[2024-05-31 13:56:40.381] [DEBUG] - Searching bootstrap plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:40.381] [DEBUG] - Found for obproxy-ce-py_script_bootstrap-3.1.0 for obproxy-ce-4.2.3.0

[2024-05-31 13:56:40.381] [DEBUG] - Searching bootstrap plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:40.381] [DEBUG] - Found for oceanbase-ce-py_script_bootstrap-4.2.2.0 for oceanbase-ce-4.3.0.1

[2024-05-31 13:56:40.381] [DEBUG] - Searching bootstrap plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:40.381] [DEBUG] - Found for grafana-py_script_bootstrap-7.5.17 for grafana-7.5.17

[2024-05-31 13:56:40.381] [DEBUG] - Searching bootstrap plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:40.382] [DEBUG] - Found for prometheus-py_script_bootstrap-2.37.1 for prometheus-2.37.1

[2024-05-31 13:56:40.382] [DEBUG] - Searching display plugin for components …

[2024-05-31 13:56:40.382] [DEBUG] - Searching display plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:40.382] [DEBUG] - Found for obagent-py_script_display-1.3.0 for obagent-4.2.2

[2024-05-31 13:56:40.382] [DEBUG] - Searching display plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:40.382] [DEBUG] - Found for obproxy-ce-py_script_display-3.1.0 for obproxy-ce-4.2.3.0

[2024-05-31 13:56:40.382] [DEBUG] - Searching display plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:40.383] [DEBUG] - Found for oceanbase-ce-py_script_display-3.1.0 for oceanbase-ce-4.3.0.1

[2024-05-31 13:56:40.383] [DEBUG] - Searching display plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:40.383] [DEBUG] - Found for grafana-py_script_display-7.5.17 for grafana-7.5.17

[2024-05-31 13:56:40.383] [DEBUG] - Searching display plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:40.383] [DEBUG] - Found for prometheus-py_script_display-2.37.1 for prometheus-2.37.1
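In the plugin search above, the plugin version found is often lower than the component version, for example `oceanbase-ce-py_script_connect-4.2.2.0` for `oceanbase-ce-4.3.0.1`. One reading that fits every line in this log is "pick the highest plugin version that does not exceed the component version". That rule is an inference from the log, not confirmed against the obd source, and the candidate list in the sketch is hypothetical.

```python
def parse_version(text):
    """Turn '4.3.0.1' into (4, 3, 0, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def pick_plugin_version(component_version, available_versions):
    """Highest available version <= component version, or None (assumed rule)."""
    target = parse_version(component_version)
    candidates = [v for v in available_versions if parse_version(v) <= target]
    if not candidates:
        return None
    return max(candidates, key=parse_version)


if __name__ == "__main__":
    # Hypothetical set of plugin versions on disk, for illustration only.
    available = ["3.1.0", "4.2.2.0", "4.3.0.0", "4.3.1.0"]
    print(pick_plugin_version("4.3.0.1", available))
```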

[2024-05-31 13:56:40.390] [INFO] Load cluster param plugin

[2024-05-31 13:56:40.390] [DEBUG] - Get local repository obagent-4.2.2-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:40.391] [DEBUG] - Get local repository obproxy-ce-4.2.3.0-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:40.391] [DEBUG] - Get local repository oceanbase-ce-4.3.0.1-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:40.391] [DEBUG] - Get local repository grafana-7.5.17-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:40.391] [DEBUG] - Get local repository prometheus-2.37.1-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:40.391] [DEBUG] - Searching param plugin for components …

[2024-05-31 13:56:40.391] [DEBUG] - Search param plugin for obagent

[2024-05-31 13:56:40.391] [DEBUG] - Found for obagent-param-1.3.0 for obagent-4.2.2

[2024-05-31 13:56:40.391] [DEBUG] - Applying obagent-param-1.3.0 for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:40.461] [DEBUG] - Search param plugin for obproxy-ce

[2024-05-31 13:56:40.462] [DEBUG] - Found for obproxy-ce-param-4.2.3 for obproxy-ce-4.2.3.0

[2024-05-31 13:56:40.462] [DEBUG] - Applying obproxy-ce-param-4.2.3 for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:40.590] [DEBUG] - Search param plugin for oceanbase-ce

[2024-05-31 13:56:40.590] [DEBUG] - Found for oceanbase-ce-param-4.3.0.0 for oceanbase-ce-4.3.0.1

[2024-05-31 13:56:40.590] [DEBUG] - Applying oceanbase-ce-param-4.3.0.0 for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:41.115] [DEBUG] - Search param plugin for grafana

[2024-05-31 13:56:41.115] [DEBUG] - Found for grafana-param-7.5.17 for grafana-7.5.17

[2024-05-31 13:56:41.115] [DEBUG] - Applying grafana-param-7.5.17 for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:41.155] [DEBUG] - Search param plugin for prometheus

[2024-05-31 13:56:41.155] [DEBUG] - Found for prometheus-param-2.37.1 for prometheus-2.37.1

[2024-05-31 13:56:41.155] [DEBUG] - Applying prometheus-param-2.37.1 for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:41.220] [INFO] Cluster status check

[2024-05-31 13:56:41.221] [DEBUG] - Searching status plugin for components …

[2024-05-31 13:56:41.221] [DEBUG] - Searching status plugin for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:41.222] [DEBUG] - Found for obagent-py_script_status-1.3.0 for obagent-4.2.2

[2024-05-31 13:56:41.222] [DEBUG] - Searching status plugin for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:41.222] [DEBUG] - Found for obproxy-ce-py_script_status-3.1.0 for obproxy-ce-4.2.3.0

[2024-05-31 13:56:41.222] [DEBUG] - Searching status plugin for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:41.222] [DEBUG] - Found for oceanbase-ce-py_script_status-3.1.0 for oceanbase-ce-4.3.0.1

[2024-05-31 13:56:41.223] [DEBUG] - Searching status plugin for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:41.223] [DEBUG] - Found for grafana-py_script_status-7.5.17 for grafana-7.5.17

[2024-05-31 13:56:41.223] [DEBUG] - Searching status plugin for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:41.223] [DEBUG] - Found for prometheus-py_script_status-2.37.1 for prometheus-2.37.1

[2024-05-31 13:56:41.352] [DEBUG] - Call obagent-py_script_status-1.3.0 for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:41.352] [DEBUG] - import status

[2024-05-31 13:56:41.367] [DEBUG] - add status ref count to 1

[2024-05-31 13:56:41.367] [DEBUG] – local execute: cat /root/obagent/run/ob_agentd.pid

[2024-05-31 13:56:41.372] [DEBUG] – exited code 0

[2024-05-31 13:56:41.373] [DEBUG] – local execute: ls /proc/16750

[2024-05-31 13:56:41.379] [DEBUG] – exited code 2, error output:

[2024-05-31 13:56:41.379] [DEBUG] ls: cannot access /proc/16750: No such file or directory

[2024-05-31 13:56:41.379] [DEBUG]

[2024-05-31 13:56:41.380] [DEBUG] - sub status ref count to 0

[2024-05-31 13:56:41.380] [DEBUG] - export status

[2024-05-31 13:56:41.380] [DEBUG] - Call obproxy-ce-py_script_status-3.1.0 for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:41.380] [DEBUG] - import status

[2024-05-31 13:56:41.387] [DEBUG] - add status ref count to 1

[2024-05-31 13:56:41.387] [DEBUG] – local execute: cat /root/obproxy-ce/run/obproxy-127.0.0.1-2883.pid

[2024-05-31 13:56:41.406] [DEBUG] – exited code 0

[2024-05-31 13:56:41.406] [DEBUG] – local execute: ls /proc/16911

[2024-05-31 13:56:41.412] [DEBUG] – exited code 2, error output:

[2024-05-31 13:56:41.412] [DEBUG] ls: cannot access /proc/16911: No such file or directory

[2024-05-31 13:56:41.412] [DEBUG]

[2024-05-31 13:56:41.412] [DEBUG] - sub status ref count to 0

[2024-05-31 13:56:41.412] [DEBUG] - export status

[2024-05-31 13:56:41.413] [DEBUG] - Call oceanbase-ce-py_script_status-3.1.0 for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:41.413] [DEBUG] - import status

[2024-05-31 13:56:41.423] [DEBUG] - add status ref count to 1

[2024-05-31 13:56:41.423] [DEBUG] – local execute: cat /root/oceanbase-ce/run/observer.pid

[2024-05-31 13:56:41.435] [DEBUG] – exited code 0

[2024-05-31 13:56:41.435] [DEBUG] – local execute: ls /proc/2730

[2024-05-31 13:56:41.440] [DEBUG] – exited code 2, error output:

[2024-05-31 13:56:41.441] [DEBUG] ls: cannot access /proc/2730: No such file or directory

[2024-05-31 13:56:41.441] [DEBUG]

[2024-05-31 13:56:41.441] [DEBUG] - sub status ref count to 0

[2024-05-31 13:56:41.441] [DEBUG] - export status

[2024-05-31 13:56:41.441] [DEBUG] - Call grafana-py_script_status-7.5.17 for grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6

[2024-05-31 13:56:41.441] [DEBUG] - import status

[2024-05-31 13:56:41.451] [DEBUG] - add status ref count to 1

[2024-05-31 13:56:41.452] [DEBUG] – local execute: cat /root/grafana/run/grafana.pid

[2024-05-31 13:56:41.471] [DEBUG] – exited code 0

[2024-05-31 13:56:41.471] [DEBUG] – local execute: ls /proc/17142

[2024-05-31 13:56:41.477] [DEBUG] – exited code 2, error output:

[2024-05-31 13:56:41.477] [DEBUG] ls: cannot access /proc/17142: No such file or directory

[2024-05-31 13:56:41.477] [DEBUG]

[2024-05-31 13:56:41.477] [DEBUG] - sub status ref count to 0

[2024-05-31 13:56:41.478] [DEBUG] - export status

[2024-05-31 13:56:41.478] [DEBUG] - Call prometheus-py_script_status-2.37.1 for prometheus-2.37.1-10000102022110211.el7-58913c7606f05feb01bc1c6410346e5fc31cf263

[2024-05-31 13:56:41.478] [DEBUG] - import status

[2024-05-31 13:56:41.486] [DEBUG] - add status ref count to 1

[2024-05-31 13:56:41.486] [DEBUG] – local execute: cat /root/prometheus/run/prometheus.pid

[2024-05-31 13:56:41.496] [DEBUG] – exited code 0

[2024-05-31 13:56:41.496] [DEBUG] – local execute: ls /proc/17075

[2024-05-31 13:56:41.502] [DEBUG] – exited code 2, error output:

[2024-05-31 13:56:41.502] [DEBUG] ls: cannot access /proc/17075: No such file or directory

[2024-05-31 13:56:41.502] [DEBUG]

[2024-05-31 13:56:41.502] [DEBUG] - sub status ref count to 0

[2024-05-31 13:56:41.502] [DEBUG] - export status

[2024-05-31 13:56:41.502] [DEBUG] - Call oceanbase-ce-py_script_start_check-4.2.2.0 for oceanbase-ce-4.3.0.1-100000242024032211.el7-c4a03c83614f50c99ddb1c37dda858fa5d9b14b7

[2024-05-31 13:56:41.502] [DEBUG] - import start_check

[2024-05-31 13:56:41.517] [DEBUG] - add start_check ref count to 1

[2024-05-31 13:56:41.518] [INFO] Check before start observer

[2024-05-31 13:56:41.519] [DEBUG] – local execute: ls /root/oceanbase-ce/store/clog/tenant_1/

[2024-05-31 13:56:41.532] [DEBUG] – exited code 0

[2024-05-31 13:56:41.532] [DEBUG] – local execute: cat /root/oceanbase-ce/run/observer.pid

[2024-05-31 13:56:41.536] [DEBUG] – exited code 0

[2024-05-31 13:56:41.536] [DEBUG] – local execute: ls /proc/2730

[2024-05-31 13:56:41.541] [DEBUG] – exited code 2, error output:

[2024-05-31 13:56:41.541] [DEBUG] ls: cannot access /proc/2730: No such file or directory

[2024-05-31 13:56:41.541] [DEBUG]

[2024-05-31 13:56:41.541] [DEBUG] – 127.0.0.1 port check

[2024-05-31 13:56:41.541] [DEBUG] – local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':0B41' | awk -F' ' '{print $3}' | uniq

[2024-05-31 13:56:41.553] [DEBUG] – exited code 0

[2024-05-31 13:56:41.554] [DEBUG] – local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':0B42' | awk -F' ' '{print $3}' | uniq

[2024-05-31 13:56:41.563] [DEBUG] – exited code 0

[2024-05-31 13:56:41.563] [DEBUG] – local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':0B46' | awk -F' ' '{print $3}' | uniq

[2024-05-31 13:56:41.572] [DEBUG] – exited code 0

[2024-05-31 13:56:41.573] [DEBUG] – local execute: ls /root/oceanbase-ce/store/sstable/block_file

[2024-05-31 13:56:41.589] [DEBUG] – exited code 0

[2024-05-31 13:56:41.589] [DEBUG] – local execute: [ -w /tmp/ ] || [ -w /tmp/obshell ]

[2024-05-31 13:56:41.592] [DEBUG] – exited code 0

[2024-05-31 13:56:41.593] [DEBUG] – local execute: cat /proc/sys/fs/aio-max-nr /proc/sys/fs/aio-nr

[2024-05-31 13:56:41.596] [DEBUG] – exited code 0

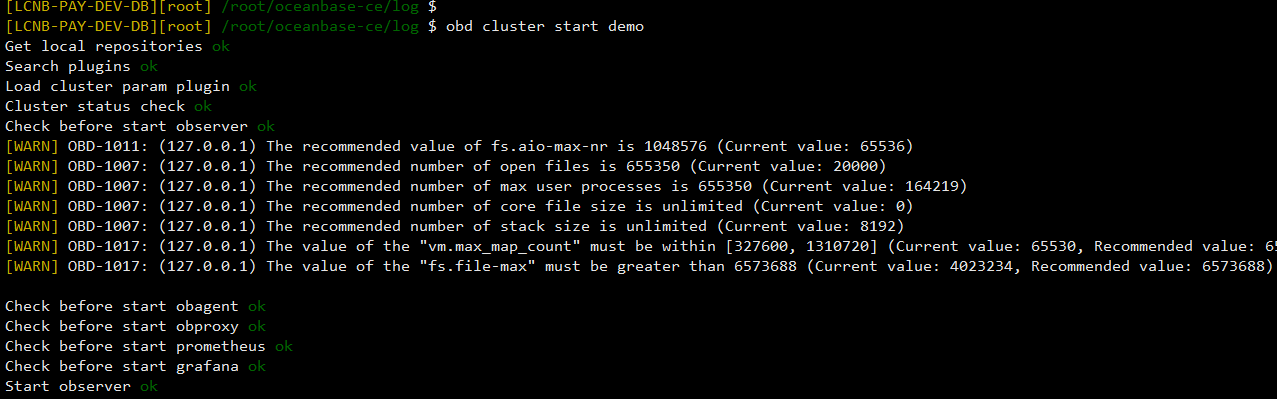

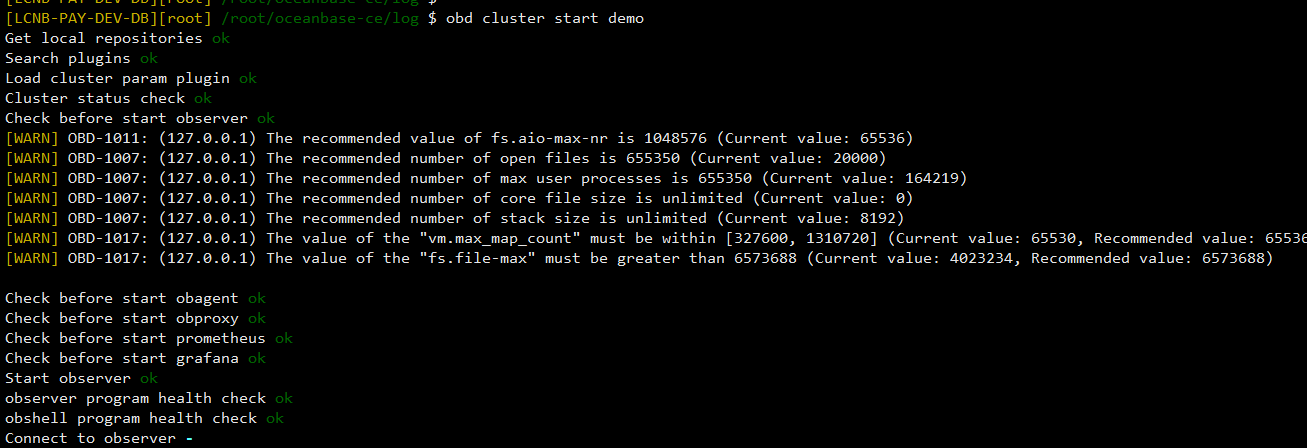

[2024-05-31 13:56:41.597] [WARNING] OBD-1011: (127.0.0.1) The recommended value of fs.aio-max-nr is 1048576 (Current value: 65536)

[2024-05-31 13:56:41.597] [DEBUG] – local execute: ulimit -a

[2024-05-31 13:56:41.599] [DEBUG] – exited code 0

[2024-05-31 13:56:41.600] [WARNING] OBD-1007: (127.0.0.1) The recommended number of open files is 655350 (Current value: 20000)

[2024-05-31 13:56:41.600] [WARNING] OBD-1007: (127.0.0.1) The recommended number of max user processes is 655350 (Current value: 164219)

[2024-05-31 13:56:41.600] [WARNING] OBD-1007: (127.0.0.1) The recommended number of core file size is unlimited (Current value: 0)

[2024-05-31 13:56:41.600] [WARNING] OBD-1007: (127.0.0.1) The recommended number of stack size is unlimited (Current value: 8192)

[2024-05-31 13:56:41.600] [DEBUG] – local execute: sysctl -a

[2024-05-31 13:56:41.696] [DEBUG] – exited code 0

[2024-05-31 13:56:41.700] [WARNING] OBD-1017: (127.0.0.1) The value of the "vm.max_map_count" must be within [327600, 1310720] (Current value: 65530, Recommended value: 655360)

[2024-05-31 13:56:41.700] [WARNING] OBD-1017: (127.0.0.1) The value of the "fs.file-max" must be greater than 6573688 (Current value: 4023216, Recommended value: 6573688)

[2024-05-31 13:56:41.700] [DEBUG] – local execute: cat /proc/meminfo

[2024-05-31 13:56:41.705] [DEBUG] – exited code 0

[2024-05-31 13:56:41.705] [DEBUG] – local execute: df --block-size=1024

[2024-05-31 13:56:41.710] [DEBUG] – exited code 0

[2024-05-31 13:56:41.710] [DEBUG] – get disk info for path /dev, total: 21524553728 avail: 21524553728

[2024-05-31 13:56:41.710] [DEBUG] – get disk info for path /dev/shm, total: 21536837632 avail: 21536837632

[2024-05-31 13:56:41.710] [DEBUG] – get disk info for path /run, total: 21536837632 avail: 21527420928

[2024-05-31 13:56:41.711] [DEBUG] – get disk info for path /sys/fs/cgroup, total: 21536837632 avail: 21536837632

[2024-05-31 13:56:41.711] [DEBUG] – get disk info for path /, total: 992102842368 avail: 418746372096

[2024-05-31 13:56:41.711] [DEBUG] – get disk info for path /boot, total: 1063256064 avail: 905920512

[2024-05-31 13:56:41.711] [DEBUG] – get disk info for path /home, total: 90146082816 avail: 90112221184

[2024-05-31 13:56:41.711] [DEBUG] – get disk info for path /run/user/0, total: 4307369984 avail: 4307369984

[2024-05-31 13:56:41.711] [DEBUG] – disk: {'/dev': {'total': 21524553728, 'avail': 21524553728, 'need': 0}, '/dev/shm': {'total': 21536837632, 'avail': 21536837632, 'need': 0}, '/run': {'total': 21536837632, 'avail': 21527420928, 'need': 0}, '/sys/fs/cgroup': {'total': 21536837632, 'avail': 21536837632, 'need': 0}, '/': {'total': 992102842368, 'avail': 418746372096, 'need': 0}, '/boot': {'total': 1063256064, 'avail': 905920512, 'need': 0}, '/home': {'total': 90146082816, 'avail': 90112221184, 'need': 0}, '/run/user/0': {'total': 4307369984, 'avail': 4307369984, 'need': 0}}

[2024-05-31 13:56:41.711] [DEBUG] – local execute: date +%s%N

[2024-05-31 13:56:41.714] [DEBUG] – exited code 0

[2024-05-31 13:56:41.715] [DEBUG] – 127.0.0.1 time delta -0.45751953125

[2024-05-31 13:56:41.781] [INFO] [WARN] OBD-1011: (127.0.0.1) The recommended value of fs.aio-max-nr is 1048576 (Current value: 65536)

[2024-05-31 13:56:41.781] [INFO] [WARN] OBD-1007: (127.0.0.1) The recommended number of open files is 655350 (Current value: 20000)

[2024-05-31 13:56:41.781] [INFO] [WARN] OBD-1007: (127.0.0.1) The recommended number of max user processes is 655350 (Current value: 164219)

[2024-05-31 13:56:41.781] [INFO] [WARN] OBD-1007: (127.0.0.1) The recommended number of core file size is unlimited (Current value: 0)

[2024-05-31 13:56:41.781] [INFO] [WARN] OBD-1007: (127.0.0.1) The recommended number of stack size is unlimited (Current value: 8192)

[2024-05-31 13:56:41.781] [INFO] [WARN] OBD-1017: (127.0.0.1) The value of the "vm.max_map_count" must be within [327600, 1310720] (Current value: 65530, Recommended value: 655360)

[2024-05-31 13:56:41.781] [INFO] [WARN] OBD-1017: (127.0.0.1) The value of the "fs.file-max" must be greater than 6573688 (Current value: 4023216, Recommended value: 6573688)

[2024-05-31 13:56:41.781] [INFO]

[2024-05-31 13:56:41.782] [DEBUG] - sub start_check ref count to 0

[2024-05-31 13:56:41.782] [DEBUG] - export start_check

[2024-05-31 13:56:41.782] [DEBUG] - Call obagent-py_script_start_check-1.3.0 for obagent-4.2.2-100000042024011120.el7-19739a07a12eab736aff86ecf357b1ae660b554e

[2024-05-31 13:56:41.782] [DEBUG] - import start_check

[2024-05-31 13:56:41.792] [DEBUG] - add start_check ref count to 1

[2024-05-31 13:56:41.793] [INFO] Check before start obagent

[2024-05-31 13:56:41.795] [DEBUG] – local execute: cat /root/obagent/run/ob_agentd.pid

[2024-05-31 13:56:41.799] [DEBUG] – exited code 0

[2024-05-31 13:56:41.799] [DEBUG] – local execute: ls /proc/16750

[2024-05-31 13:56:41.804] [DEBUG] – exited code 2, error output:

[2024-05-31 13:56:41.804] [DEBUG] ls: cannot access /proc/16750: No such file or directory

[2024-05-31 13:56:41.804] [DEBUG]

[2024-05-31 13:56:41.805] [DEBUG] – 127.0.0.1 port check

[2024-05-31 13:56:41.805] [DEBUG] – local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':1F99' | awk -F' ' '{print $3}' | uniq

[2024-05-31 13:56:41.815] [DEBUG] – exited code 0

[2024-05-31 13:56:41.815] [DEBUG] – local execute: bash -c 'cat /proc/net/{tcp*,udp*}' | awk -F' ' '{if($4=="0A") print $2,$4,$10}' | grep ':1F98' | awk -F' ' '{print $3}' | uniq

[2024-05-31 13:56:41.824] [DEBUG] – exited code 0

[2024-05-31 13:56:41.926] [DEBUG] - sub start_check ref count to 0

[2024-05-31 13:56:41.926] [DEBUG] - export start_check

[2024-05-31 13:56:41.926] [DEBUG] - Call obproxy-ce-py_script_start_check-4.2.3 for obproxy-ce-4.2.3.0-3.el7-0490ebc04220def8d25cb9cac9ac61a4efa6d639

[2024-05-31 13:56:41.926] [DEBUG] - import start_check

[2024-05-31 13:56:41.929] [DEBUG] - add start_check ref count to 1

[2024-05-31 13:56:41.929] [INFO] Check before start obproxy

[2024-05-31 13:56:41.930] [DEBUG] – local execute: cat /root/obproxy-ce/run/obproxy-127.0.0.1-2883.pid

[2024-05-31 13:56:41.935] [DEBUG] – exited code 0

[2024-05-31 13:56:41.935] [DEBUG] – local execute: ls /proc/16911/fd

[2024-05-31 13:56:41.940] [DEBUG] – exited code 2, error output:

[2024-05-31 13:56:41.940] [DEBUG] ls: cannot access /proc/16911/fd: No such file or directory

Please provide the observer.log file.

[2024-05-31 14:01:45.073785] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1006)

[2024-05-31 14:01:45.073947] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1691) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to kill tenant session(tenant_id=1003)

[2024-05-31 14:01:45.074111] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.074436] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1691) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=1] removed_tenant begin to kill tenant session(tenant_id=1003)

[2024-05-31 14:01:45.074600] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1006)

[2024-05-31 14:01:45.074763] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1011)

[2024-05-31 14:01:45.074927] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.075089] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1006)

[2024-05-31 14:01:45.075253] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1011)

[2024-05-31 14:01:45.075414] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1691) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to kill tenant session(tenant_id=1006)

[2024-05-31 14:01:45.075576] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1691) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to kill tenant session(tenant_id=1006)

[2024-05-31 14:01:45.075740] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1011)

[2024-05-31 14:01:45.075904] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1011)

[2024-05-31 14:01:45.076554] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.076718] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.076879] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1691) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=1] removed_tenant begin to kill tenant session(tenant_id=1015)

[2024-05-31 14:01:45.077047] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1003)

[2024-05-31 14:01:45.077206] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1003)

[2024-05-31 14:01:45.077369] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.077533] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1006)

[2024-05-31 14:01:45.077694] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.077858] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1011)

[2024-05-31 14:01:45.078183] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1006)

[2024-05-31 14:01:45.078345] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1011)

[2024-05-31 14:01:45.078510] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.078834] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1003)

[2024-05-31 14:01:45.079161] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1006)

[2024-05-31 14:01:45.079322] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.079485] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.079656] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1003)

[2024-05-31 14:01:45.079811] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1691) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to kill tenant session(tenant_id=1015)

[2024-05-31 14:01:45.080138] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.080301] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.080461] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.080790] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1006)

[2024-05-31 14:01:45.080953] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1003)

[2024-05-31 14:01:45.081116] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1015)

[2024-05-31 14:01:45.081605] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1003)

[2024-05-31 14:01:45.081765] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1691) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to kill tenant session(tenant_id=1015)

[2024-05-31 14:01:45.081929] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1011)

[2024-05-31 14:01:45.082091] INFO [SERVER.OMT] stop (ob_multi_tenant.cpp:626) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] there're some tenants need destroy(count=5)

[2024-05-31 14:01:45.082256] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1011)

[2024-05-31 14:01:45.082417] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=1] removed_tenant begin to stop(tenant_id=1011)

[2024-05-31 14:01:45.082581] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1003)

[2024-05-31 14:01:45.082744] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1006)

[2024-05-31 14:01:45.082905] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1006)

[2024-05-31 14:01:45.083233] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1006)

[2024-05-31 14:01:45.083558] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1011)

[2024-05-31 14:01:45.083719] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1011)

[2024-05-31 14:01:45.083883] INFO [SERVER.OMT] remove_tenant (ob_multi_tenant.cpp:1685) [2745][observer][T0][Y0-0000000000000000-0-0] [lt=0] removed_tenant begin to stop(tenant_id=1011)

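These are the last 50 lines. The file is still 17 MB even after compression, so it cannot be uploaded here; I already posted it this morning.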