【 使用环境 】生产环境

【 OB or 其他组件 】OceanBase

【 使用版本 】OceanBase-3.1.4 + OBProxy-3.2.3

【问题描述】使用key 对主键做分区,并创建有两个全区索引,单条记录1.4K左右,写入时延极度不稳定,从几毫秒跃升至数秒然后大量超时(超过100秒),最后集群进入异常状态。想咨询一下是什么原因导致的?通过Traceid捕捉到了一条超时SQL的observer日志,想请社区的专家帮忙分析一下什么阶段的耗时导致最终超时回滚?

【复现路径】

【附件及日志】

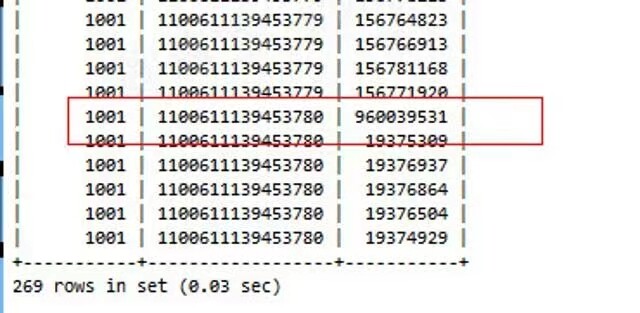

45192:[2024-04-28 09:56:44.591397] TRACE [TRACE]ob_trans_ctx.cpp:528 [981][1506][YB42AC15451F-000616FA064F13CA] [lt=16] [dc=0] [slow trans] (TRACE=begin_ts=1714269232842902 2024-04-28 01:53:52.842902|[init] u=0 arg1:140333578098768, ctx_type:"participant", trans_type:2, trans_id:{hash:4994038682938037139, inc:149347624, addr:{ip:"172.21.69.32", port:2882}, t:1714269232844655}, pkey:{tid:1100611139453777, partition_id:23, part_cnt:0}, arg1:false, uref:1073741824|[start_trans] u=5 ret:0, left_time:100001699, uref:1073741825|[snapshot] u=2 snapshot:1714269232814703, uref:1073741825|[update_gts] u=0 ret:0, sql_no:4294967297, uref:1073741825, snapshot:1714269232814703, tenant_id:1001|[start_task] u=1 ret:0, sql_no:4294967297, uref:1073741825, need_update_gts:true|[end_task] u=443 ret:0, is_rollback:false, uref:1073741825|[set_stc] u=579 stc:1714269232843917|[wait_get_gts] u=12 log_type:3|[alloc_logid_ts] u=0 ret:-4023, log_id:18446744073709551615, tenant_id:1001, uref:1073741826, used:12|[log_pending] u=1 logging:0, pending:1, uref:1073741826|[inc_submit_log_count] u=0 logging:1, pending:1, uref:1073741826|[handle_message] u=0 ret:0, msg_type:240, uref:1073741826|[log_pending_cb] u=103 logging:1, pending:0, uref:1073741825|[dec_submit_log_count] u=1 logging:0, pending:0, uref:1073741825|[alloc_logid_ts] u=0 ret:0, log_id:18446744073709551615, tenant_id:1001, uref:1073741825, used:0|[prepare] u=0 ret:0, trans_version:-1, uref:1073741825|[inc_submit_log_count] u=39 logging:1, pending:0, uref:1073741825|[submit_log] u=0 ret:0, uref:1073741825, timeguard:{click:[0, 11, 27]}|[retry_submit_log] u=1 ret:0, log_type:3, uref:1073741825|[get_gts_callback] u=0 ret:0, srr:1714269232843934, gts:1714269232845452|[dec_submit_log_count] u=230 logging:0, pending:0, uref:1073741825|[on_sync_log_succ] u=8 ret:0, log_type:3, log_id:20363742, uref:1073741825|[handle_timeout] u=102496 ret:0, used:{click:[0, 0, 19]}, uref:1073741825|[handle_timeout] u=206868 ret:0, used:{click:[0, 1, 21]}, uref:1073741825|[handle_timeout] u=403843 ret:0, used:{click:[1, 0, 14]}, uref:1073741825|[handle_timeout] u=803107 ret:0, used:{click:[0, 0, 12]}, uref:1073741825|[handle_timeout] u=1605895 ret:0, used:{click:[0, 0, 12]}, uref:1073741825|[update_trans_version] u=583556 trans_version:1714269236346115, uref:1073741825|[handle_message] u=1 ret:0, msg_type:260, uref:1073741825|[update_trans_version] u=403 trans_version:1714269236346115, uref:1073741825|[calc_checksum_by_commit] u=8 ret:0, checksum:37245167, checksum_log_ts:9223372036854775807|[inc_submit_log_count] u=20 logging:1, pending:0, uref:1073741825|[submit_log] u=0 ret:0, uref:1073741825, timeguard:{click:[1, 2, 17]}|[handle_message] u=1 ret:0, msg_type:280, uref:1073741825|[dec_submit_log_count] u=223 logging:0, pending:0, uref:1073741825|[on_sync_log_succ] u=15 ret:0, log_type:4, log_id:20363743, uref:1073741825|[handle_timeout] u=2421270 ret:0, used:{click:[0, 0, 17]}, uref:1073741825|[handle_timeout] u=3005506 ret:0, used:{click:[1, 0, 15]}, uref:1073741825|[handle_timeout] u=3006016 ret:0, used:{click:[1, 0, 17]}, uref:1073741825|[handle_timeout] u=3004335 ret:0, used:{click:[1, 0, 32]}, uref:1073741825|[handle_timeout] u=3005535 ret:0, used:{click:[0, 0, 20]}, uref:1073741825|[handle_timeout] u=3003326 ret:0, used:{click:[1, 0, 21]}, uref:1073741825|[handle_timeout] u=3007427 |[handle_timeout] u=3002124 |[handle_timeout] u=3007463 |[handle_timeout] u=3001826 |[handle_timeout] u=3005658 |[handle_timeout] u=3004132 |[handle_timeout] u=3005294 |[handle_timeout] u=3004780 |[handle_timeout] u=3009545 |[handle_timeout] u=3000906 |[handle_timeout] u=3004201 |[handle_timeout] u=3009490 |[handle_timeout] u=3004309 |[handle_timeout] u=3003531 |[handle_timeout] u=3004371 |[handle_timeout] u=3004409 |[handle_timeout] u=3005371 |[handle_timeout] u=3004178 |[handle_timeout] u=3008842 |[handle_timeout] u=3004316 |[handle_timeout] u=3003959 |[handle_timeout] u=3004834 |[handle_timeout] u=3004129 |[handle_timeout] u=3005264 |[handle_timeout] u=3003757 |[handle_timeout] u=3004236 |[handle_timeout] u=3005001 |[handle_timeout] u=3009475 |[handle_timeout] u=3004796 |[handle_timeout] u=3004877 |[handle_timeout] u=3005358 |[handle_timeout] u=3005361 |[handle_timeout] u=3004563 |[handle_timeout] u=3005136 |[handle_timeout] u=3004391 |[handle_timeout] u=3005077 |[handle_timeout] u=3005876 |[handle_timeout] u=3009352 |[handle_timeout] u=3004577 |[handle_timeout] u=3004552 |[handle_timeout] u=3004975 |[handle_timeout] u=3004929 |[handle_timeout] u=3004093 |[handle_timeout] u=3004518 |[handle_timeout] u=3004643 |[handle_timeout] u=3004716 |[handle_timeout] u=3005369 |[handle_timeout] u=3004559 |[handle_timeout] u=3004271 |[handle_timeout] u=3009517 |[inc_submit_log_count] u=335831 logging:1, pending:0, uref:1073741825|[submit_log] u=1 |[handle_message] u=1 |[dec_submit_log_count] u=359 |[exiting] u=3 |[on_sync_log_succ] u=18 |[destroy] u=15 arg1:140333578100144|total_timeu=171748411)

48602:[2024-04-28 09:59:45.444684] TRACE [TRACE]ob_trans_ctx.cpp:528 [1303][2150][YB42AC15451E-000616FB2A1E140A] [lt=9] [dc=0] [slow trans] (TRACE=begin_ts=1714269232843914 2024-04-28 01:53:52.843914|[init] u=0 arg1:140334620860352, ctx_type:"coordinator", trans_type:2, trans_id:{hash:4994038682938037139, inc:149347624, addr:{ip:"172.21.69.32", port:2882}, t:1714269232844655}, pkey:{tid:1100611139453777, partition_id:23, part_cnt:0}, arg1:false, uref:1073741824|[cons_context] u=3 ret:0, read_only:false, uref:1073741825|[set_stc] u=0 stc:1714269232843917|[handle_message] u=12 ret:0, id1:-1, id2:-1, msg_type:0, sender:{tid:1000, partition_id:1000, part_cnt:1000}, uref:1073741825|[update_trans_version] u=401 trans_version:1714269232845452, uref:1073741825|[handle_message] u=1 ret:0, msg_type:250, uref:1073741825|[update_trans_version] u=93 trans_version:1714269232836542, uref:1073741825|[handle_message] u=1 ret:0, msg_type:250, uref:1073741825|[handle_timeout] u=102376 ret:0, total_count:1, uref:1073741825|[handle_message] u=27 ret:0, msg_type:250, uref:1073741825|[handle_message] u=2161 ret:0, msg_type:250, uref:1073741825|[handle_timeout] u=204677 ret:0, total_count:1, uref:1073741825|[handle_message] u=32 ret:0, msg_type:250, uref:1073741825|[handle_message] u=2340 ret:0, msg_type:250, uref:1073741825|[handle_timeout] u=401478 ret:0, total_count:1, uref:1073741825|[handle_message] u=22 ret:0, msg_type:250, uref:1073741825|[handle_message] u=2178 ret:0, msg_type:250, uref:1073741825|[handle_timeout] u=800912 ret:0, total_count:1, uref:1073741825|[handle_message] u=17 ret:0, msg_type:250, uref:1073741825|[handle_message] u=6131 ret:0, msg_type:250, uref:1073741825|[handle_message] u=1483265 ret:0, id1:1714269234359979, id2:-1, msg_type:0, sender:{tid:1000, partition_id:1000, part_cnt:1000}, uref:1073741825|[handle_timeout] u=116481 ret:0, total_count:1, uref:1073741825|[handle_message] u=17 ret:0, msg_type:250, uref:1073741825|[handle_message] u=5380 ret:0, msg_type:250, uref:1073741825|[update_trans_version] u=578164 trans_version:1714269236346115, uref:1073741825|[handle_message] u=7 ret:0, msg_type:250, uref:1073741825|[handle_message] u=4 ret:0, msg_type:270, uref:1073741826|[response_scheduler] u=386 ret:0, scheduler:{ip:"172.21.69.32", port:2882}, version:1714269236346115, publish_version:1714269236493473, msg_type:1, uref:1073741825|[handle_message] u=13 ret:0, msg_type:270, uref:1073741825|[handle_message] u=273 ret:0, msg_type:290, uref:1073741825|[handle_message] u=569 ret:0, msg_type:290, uref:1073741825|[handle_timeout] u=107195 ret:0, total_count:1, uref:1073741825|[handle_timeout] u=201944 ret:0, total_count:1, uref:1073741825|[handle_timeout] u=409124 ret:0, total_count:1, uref:1073741825|[handle_timeout] u=803164 ret:0, total_count:1, uref:1073741825|[handle_message] u=899277 ret:0, msg_type:290, uref:1073741825|[handle_message] u=4907 ret:0, msg_type:290, uref:1073741825|[handle_timeout] u=702375 ret:0, total_count:1, uref:1073741825|[handle_message] u=2298222 ret:0, msg_type:290, uref:1073741825|[handle_message] u=3578 ret:0, msg_type:290, uref:1073741825|[handle_timeout] u=703379 ret:0, total_count:1, uref:1073741825|[handle_message] u=2299061 ret:0, msg_type:290, uref:1073741825|[handle_message] u=773 ret:0, msg_type:290, uref:1073741825|[handle_timeout] u=701208 ret:0, total_count:1, uref:1073741825|[handle_message] u=2302361 ret:0, msg_type:290, uref:1073741825|[handle_message] u=5732 ret:0, msg_type:290, uref:1073741825|[handle_timeout] u=696691 ret:0, total_count:1, uref:1073741825|[handle_message] u=2303109 ret:0, msg_type:290, uref:1073741825|[handle_message] u=4128 ret:0, msg_type:290, uref:1073741825|[handle_timeout] u=697562 ret:0, total_count:1, uref:1073741825|[handle_message] u=2301639 ret:0, msg_type:290, uref:1073741825|[handle_message] u=3557 ret:0, msg_type:290, uref:1073741825|[handle_timeout] u=702794 ret:0, total_count:1, uref:1073741825|[handle_message] u=2300946 ret:0, msg_type:290, uref:1073741825|[handle_message] u=129 ret:0, msg_type:290, uref:1073741825|[handle_timeout] u=701421 |[handle_message] u=2300707 |[handle_message] u=2608 |[handle_timeout] u=703760 |[handle_message] u=2301093 |[handle_message] u=3857 |[handle_timeout] u=702246 |[handle_message] u=2295722 |[handle_message] u=5632 |[handle_timeout] u=700858 |[handle_message] u=2299163 |[handle_message] u=4992 |[handle_timeout] u=701805 |[handle_message] u=2297338 |[handle_message] u=5628 |[handle_timeout] u=701496 |[handle_message] u=2298168 |[handle_message] u=5100 |[handle_timeout] u=701988 |[handle_message] u=2297693 |[handle_message] u=4510 |[handle_timeout] u=702429 |[handle_message] u=2301283 |[handle_message] u=1319 |[handle_timeout] u=701781 |[handle_message] u=2299133 |[handle_message] u=6539 |[handle_timeout] u=700524 |[handle_message] u=2297133 |[handle_message] u=6747 |[handle_timeout] u=699850 |[handle_message] u=2302893 |[handle_message] u=2120 |[handle_timeout] u=699850 |[handle_message] u=2302342 |[handle_message] u=1894 |[handle_timeout] u=704226 |[handle_message] u=2297408 |[handle_message] u=2952 |[handle_timeout] u=708627 |[handle_message] u=2292792 |[handle_message] u=4255 |[handle_timeout] u=707771 |[handle_message] u=2292384 |[destroy] u=283368347 |total_timeu=352600660 DROPPED_EVENTS=168)