【Environment】 Test environment, Oracle Linux el9

【OB or other component】 OB

【Version】 4.2.2

【Problem description】 I'm trying to start observer on a single machine. The command prints no error, but the service does not start.

【Steps to reproduce】 Command and parameters: cd /home/admin/oceanbase && bin/observer -o cluster_id=1,datafile_size=20G,memory_limit=2G,cache_wash_threshold=1G,__min_full_resource_pool_memory=108435456,system_memory=2G,stack_size=512K,net_thread_count=2,cpu_quota_concurrency=2 -z zone4
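
As a quick sanity check on the -o string above, here is a minimal sketch that parses the option string and compares memory_limit with system_memory. It assumes that system_memory is reserved out of memory_limit, so the limit should be the larger of the two. The thread does not confirm that this was what triggered OB_INVALID_CONFIG here. The helper names (to_bytes, get_opt, check_memory_opts) are made up for illustration and are not part of OceanBase.

```shell
# Hypothetical helpers, not part of OceanBase.

# Convert a size such as 512K, 2G or 108435456 to bytes.
to_bytes() {
  local v="$1" num unit
  num="${v%[KkMmGgTt]}"      # strip one trailing unit letter, if any
  unit="${v#"$num"}"         # whatever was stripped is the unit
  case "$unit" in
    K|k) echo $(( $num * 1024 )) ;;
    M|m) echo $(( $num * 1024 * 1024 )) ;;
    G|g) echo $(( $num * 1024 * 1024 * 1024 )) ;;
    T|t) echo $(( $num * 1024 * 1024 * 1024 * 1024 )) ;;
    *)   echo "$num" ;;
  esac
}

# Look up one key in a comma-separated "k=v,k=v" option string.
get_opt() {
  local optstr="$1" key="$2" pair
  local IFS=','
  for pair in $optstr; do
    if [ "${pair%%=*}" = "$key" ]; then
      echo "${pair#*=}"
      return 0
    fi
  done
  return 1
}

# Assumption: memory_limit must be larger than system_memory.
check_memory_opts() {
  local optstr="$1" limit sys
  limit="$(get_opt "$optstr" memory_limit)" || return 0   # not set, nothing to check
  sys="$(get_opt "$optstr" system_memory)" || return 0
  if [ "$(to_bytes "$limit")" -le "$(to_bytes "$sys")" ]; then
    echo "memory_limit=$limit leaves nothing beyond system_memory=$sys"
    return 1
  fi
  echo "memory_limit=$limit is larger than system_memory=$sys"
}
```

With the original string (memory_limit=2G, system_memory=2G) check_memory_opts returns non-zero; with memory_limit=8G it passes, which matches the observation that raising the limit alone did not fix the startup.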

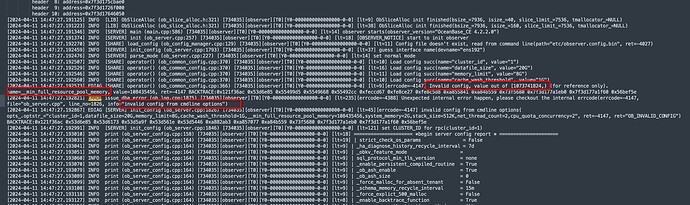

【Attachments and logs】 Error:

ERROR issue_dba_error (ob_log.cpp:1875) [733137][observer][T0][Y0-0000000000000000-0-0] [lt=21][errcode=-4388] Unexpected internal error happen, please checkout the internal errcode(errcode=-4147, file="ob_server.cpp", line_no=264, info="init config failed")

[2024-04-11 14:18:43.204378] EDIAG [SERVER] init (ob_server.cpp:264) [733137][observer][T0][Y0-0000000000000000-0-0] [lt=29][errcode=-4147] init config failed(ret=-4147, ret="OB_INVALID_CONFIG") BACKTRACE:0x121f36ac 0x53d6e85 0x540596a 0x540532b 0x540526a 0x5524257 0xa858210 0xa84dbfd 0x73f5680 0x7f85a0d94eb0 0x7f85a0d94f60 0x56bef5e

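Changing it to memory_limit=8G made no difference.

The machine has 8 GB of physical memory; I don't know whether that matters.

I have already installed a 3-node cluster successfully through the obd web GUI and want to add a new node to it, but the service won't start on the new node.

Then clear the log directory first, start it manually again, and see what error the new log reports.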
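
Once the log is fresh, it helps to read the diagnostics from the top rather than jumping to the last ERROR. A minimal sketch, assuming the log sits at log/observer.log under the observer home directory and uses the ERROR / EDIAG / WDIAG level tags seen in this thread. The function names are hypothetical.

```shell
# Hypothetical helpers for reading an observer log.

# Print the first N diagnostic lines (ERROR, EDIAG, WDIAG), oldest first,
# each prefixed with its line number in the file.
first_diag_lines() {
  local log_file="$1" count="${2:-20}"
  grep -n -E '(^|[[:space:]])(ERROR|EDIAG|WDIAG)[[:space:]]' "$log_file" | head -n "$count"
}

# Count how often each negative errcode appears, most frequent first.
errcode_summary() {
  local log_file="$1"
  grep -o -E 'errcode=-[0-9]+' "$log_file" | sort | uniq -c | sort -rn
}
```

For example, first_diag_lines /home/admin/oceanbase/log/observer.log 10 shows the earliest ten diagnostics, which is usually where the message that precedes "init config failed" is found.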

observer.log (142.0 KB)

OK, cleared. I then ran:

oceanbase]# cd /home/admin/oceanbase && bin/observer -o cluster_id=1,datafile_size=20G,memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=108435456,system_memory=2G,stack_size=512K,net_thread_count=2,cpu_quota_concurrency=2 -z zone1

bin/observer -o cluster_id=1,datafile_size=20G,memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=108435456,system_memory=2G,stack_size=512K,net_thread_count=2,cpu_quota_concurrency=2 -z zone1

optstr: cluster_id=1,datafile_size=20G,memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=108435456,system_memory=2G,stack_size=512K,net_thread_count=2,cpu_quota_concurrency=2

zone: zone1

[root@4 oceanbase]# ps -ef | grep observer

root 734042 731760 0 14:47 pts/0 00:00:00 grep --color=auto observer

The log is attached.

How was it deployed?

Which installation package did you use?

I deployed it directly from the RPMs:

rpm -ivh oceanbase-ce-libs-4.2.2.0-100010012024022719.el8.x86_64.rpm

Verifying… ################################# [100%]

Preparing… ################################# [100%]

Updating / installing…

1:oceanbase-ce-libs-4.2.2.0-1000100warning: user admin does not exist - using root

warning: user admin does not exist - using root

################################# [100%]

[root@ntgksqa04 ~]# rpm -ivh oceanbase-ce-4.2.2.0-100010012024022719.el8.x86_64.rpm

Verifying… ################################# [100%]

Preparing… ################################# [100%]

Updating / installing…

1:oceanbase-ce-4.2.2.0-100010012024warning: user admin does not exist - using root

warning: user admin does not exist - using root

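So it's a manual deployment?

It looks like initialization failed.

You could also deploy it using the method Hongbo (洪波) provided.

Thanks everyone, especially Hongbo. I was only looking at the ERROR part and missed what came before it. The service now starts. One more question: since the 3-node cluster was installed through the obd web GUI, I assume the new node can't also be added through the obd web GUI?

Right, that won't work. obd web deploys a standalone cluster and can't be used to add nodes. The simplest way to add a node is the OCP operations and management platform; doing it from the command line is more cumbersome.

OK. On the 3-node cluster I have now run ALTER SYSTEM ADD SERVER '10.215.65.202:2882' ZONE 'zone1'; to add the new node (IP ending in 202), and I also ran START SERVER, but it still shows INACTIVE. Is this the right procedure?

/root/.oceanbase-all-in-one/obclient/u01/obclient/bin/obclient -h10.215.65.166 -P2883 -uroot@sys -p'xxx' -Doceanbase -A

obclient [oceanbase]> ALTER SYSTEM START SERVER "10.215.65.202:2882";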

Query OK, 0 rows affected (0.008 sec)

obclient [oceanbase]> SELECT * FROM oceanbase.DBA_OB_SERVERS;

+---------------+----------+-----+-------+----------+-----------------+----------+----------------------------+-----------+-----------------------+----------------------------+----------------------------+-------------------------------------------------------------------------------------------+----------------------------+

| SVR_IP        | SVR_PORT | ID  | ZONE  | SQL_PORT | WITH_ROOTSERVER | STATUS   | START_SERVICE_TIME         | STOP_TIME | BLOCK_MIGRATE_IN_TIME | CREATE_TIME                | MODIFY_TIME                | BUILD_VERSION                                                                             | LAST_OFFLINE_TIME          |

+---------------+----------+-----+-------+----------+-----------------+----------+----------------------------+-----------+-----------------------+----------------------------+----------------------------+-------------------------------------------------------------------------------------------+----------------------------+

| 10.215.65.166 |     2882 |   1 | zone1 |     2881 | YES             | ACTIVE   | 2024-04-09 14:28:38.677142 | NULL      | NULL                  | 2024-04-09 14:28:36.614960 | 2024-04-09 14:28:39.463393 | 4.2.2.1_101000012024030709-083a68a2907b6a1a12138c4a9e0994949166bfba(Mar 7 2024 10:09:35)  | NULL                       |

...

| 10.215.65.202 |     2882 | 197 | zone1 |     2881 | NO              | INACTIVE | NULL                       | NULL      | NULL                  | 2024-04-11 16:31:36.030072 | 2024-04-11 16:31:47.154914 | 4.2.2.0_100010012024022719-c984fe7cb7a4cef85a40323a0d073f0c9b7b8235(Feb 27 2024 19:21:00) | 2024-04-11 16:31:47.152705 |

+---------------+----------+-----+-------+----------+-----------------+----------+----------------------------+-----------+-----------------------+----------------------------+----------------------------+-------------------------------------------------------------------------------------------+----------------------------+

4 rows in set (0.001 sec)
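
The wide table is hard to read by eye, so here is a small sketch that reduces it to two things: which servers are not ACTIVE, and whether the BUILD_VERSION prefixes differ (the output above shows 4.2.2.1 on 166 and 4.2.2.0 on 202). Whether that difference is related to the INACTIVE status is not established in this thread. The function name report_servers is hypothetical, and it expects tab-separated rows of SVR_IP, SVR_PORT, STATUS, BUILD_VERSION on stdin.

```shell
# Hypothetical helper: summarize DBA_OB_SERVERS rows read from stdin.
# Input columns (tab-separated): SVR_IP, SVR_PORT, STATUS, BUILD_VERSION.
report_servers() {
  awk -F'\t' '
    # Flag every server whose status is anything other than ACTIVE.
    $3 != "ACTIVE" { printf "not active: %s:%s (%s)\n", $1, $2, $3 }
    # Remember the version part before the first underscore, e.g. 4.2.2.1.
    { split($4, v, "_"); seen[v[1]] = 1 }
    END {
      n = 0
      for (k in seen) n++
      if (n > 1) print "mixed build versions in cluster"
    }'
}
```

One way to feed it, assuming obclient accepts the usual MySQL-client batch flags -N -B -e: run the same obclient connection command as above with -N -B -e "SELECT SVR_IP, SVR_PORT, STATUS, BUILD_VERSION FROM oceanbase.DBA_OB_SERVERS" and pipe the result into report_servers.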

Error log:

[2024-04-11 17:22:45.374912] WDIAG [SHARE.LOCATION] nonblock_get_leader (ob_location_service.cpp:150) [739716][TsMgr][T0][Y0-0000000000000000-0-0] [lt=15][errcode=-4721] fail to nonblock get log stream location leader(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, cluster_id=11, tenant_id=1, ls_id={id:1}, leader=“0.0.0.0:0”)

[2024-04-11 17:22:45.374930] WDIAG [STORAGE.TRANS] get_gts_leader_ (ob_gts_source.cpp:535) [739716][TsMgr][T0][Y0-0000000000000000-0-0] [lt=7][errcode=-4721] gts nonblock get leader failed(ret=-4721, tenant_id=1, GTS_LS={id:1})

[2024-04-11 17:22:45.374935] WDIAG [STORAGE.TRANS] refresh_gts_ (ob_gts_source.cpp:593) [739716][TsMgr][T0][Y0-0000000000000000-0-0] [lt=5][errcode=-4721] get gts leader failed(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, tenant_id=1)

[2024-04-11 17:22:45.374939] WDIAG [STORAGE.TRANS] operator() (ob_ts_mgr.h:173) [739716][TsMgr][T0][Y0-0000000000000000-0-0] [lt=3][errcode=-4721] refresh gts failed(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, gts_tenant_info={v:1})

[2024-04-11 17:22:45.374944] INFO [STORAGE.TRANS] operator() (ob_ts_mgr.h:177) [739716][TsMgr][T0][Y0-0000000000000000-0-0] [lt=5] refresh gts functor(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, gts_tenant_info={v:1})

[2024-04-11 17:22:45.374945] WDIAG [SHARE.LOCATION] batch_process_tasks (ob_ls_location_service.cpp:549) [739673][SysLocAsyncUp0][T0][YB420AD741CA-000615CE9D50D54F-0-0] [lt=14][errcode=0] tenant schema is not ready, need wait(ret=0, ret=“OB_SUCCESS”, superior_tenant_id=1, task={cluster_id:11, tenant_id:1, ls_id:{id:1}, renew_for_tenant:false, add_timestamp:1712827365374919})

[2024-04-11 17:22:45.377844] WDIAG [SHARE.LOCATION] nonblock_get_leader (ob_ls_location_service.cpp:448) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=4][errcode=-4721] nonblock get location failed(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, cluster_id=11, tenant_id=1, ls_id={id:1})

[2024-04-11 17:22:45.377858] WDIAG [SHARE.LOCATION] nonblock_get_leader (ob_location_service.cpp:150) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=13][errcode=-4721] fail to nonblock get log stream location leader(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, cluster_id=11, tenant_id=1, ls_id={id:1}, leader=“0.0.0.0:0”)

[2024-04-11 17:22:45.377874] WDIAG [STORAGE.TRANS] get_gts_leader_ (ob_gts_source.cpp:535) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=6][errcode=-4721] gts nonblock get leader failed(ret=-4721, tenant_id=1, GTS_LS={id:1})

[2024-04-11 17:22:45.377877] WDIAG [STORAGE.TRANS] get_gts (ob_gts_source.cpp:224) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=3][errcode=-4023] get gts leader fail(tmp_ret=-4721, tenant_id=1)

[2024-04-11 17:22:45.387950] WDIAG [SHARE.LOCATION] nonblock_get_leader (ob_ls_location_service.cpp:448) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=4][errcode=-4721] nonblock get location failed(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, cluster_id=11, tenant_id=1, ls_id={id:1})

[2024-04-11 17:22:45.387970] WDIAG [SHARE.LOCATION] nonblock_get_leader (ob_location_service.cpp:150) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=19][errcode=-4721] fail to nonblock get log stream location leader(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, cluster_id=11, tenant_id=1, ls_id={id:1}, leader=“0.0.0.0:0”)

[2024-04-11 17:22:45.387988] WDIAG [STORAGE.TRANS] get_gts_leader_ (ob_gts_source.cpp:535) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=8][errcode=-4721] gts nonblock get leader failed(ret=-4721, tenant_id=1, GTS_LS={id:1})

[2024-04-11 17:22:45.387993] WDIAG [STORAGE.TRANS] get_gts (ob_gts_source.cpp:224) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=4][errcode=-4023] get gts leader fail(tmp_ret=-4721, tenant_id=1)

[2024-04-11 17:22:45.398066] WDIAG [SHARE.LOCATION] nonblock_get_leader (ob_ls_location_service.cpp:448) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=3][errcode=-4721] nonblock get location failed(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, cluster_id=11, tenant_id=1, ls_id={id:1})

[2024-04-11 17:22:45.398084] WDIAG [SHARE.LOCATION] nonblock_get_leader (ob_location_service.cpp:150) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=17][errcode=-4721] fail to nonblock get log stream location leader(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, cluster_id=11, tenant_id=1, ls_id={id:1}, leader=“0.0.0.0:0”)

[2024-04-11 17:22:45.398104] WDIAG [STORAGE.TRANS] get_gts_leader_ (ob_gts_source.cpp:535) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=7][errcode=-4721] gts nonblock get leader failed(ret=-4721, tenant_id=1, GTS_LS={id:1})

[2024-04-11 17:22:45.398109] WDIAG [STORAGE.TRANS] get_gts (ob_gts_source.cpp:224) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=4][errcode=-4023] get gts leader fail(tmp_ret=-4721, tenant_id=1)

[2024-04-11 17:22:45.408181] WDIAG [SHARE.LOCATION] nonblock_get_leader (ob_ls_location_service.cpp:448) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=3][errcode=-4721] nonblock get location failed(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, cluster_id=11, tenant_id=1, ls_id={id:1})

[2024-04-11 17:22:45.408198] WDIAG [SHARE.LOCATION] nonblock_get_leader (ob_location_service.cpp:150) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=16][errcode=-4721] fail to nonblock get log stream location leader(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, cluster_id=11, tenant_id=1, ls_id={id:1}, leader=“0.0.0.0:0”)

[2024-04-11 17:22:45.408216] WDIAG [STORAGE.TRANS] get_gts_leader_ (ob_gts_source.cpp:535) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=6][errcode=-4721] gts nonblock get leader failed(ret=-4721, tenant_id=1, GTS_LS={id:1})

[2024-04-11 17:22:45.408221] WDIAG [STORAGE.TRANS] get_gts (ob_gts_source.cpp:224) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=4][errcode=-4023] get gts leader fail(tmp_ret=-4721, tenant_id=1)

[2024-04-11 17:22:45.418310] WDIAG [SHARE.LOCATION] nonblock_get_leader (ob_ls_location_service.cpp:448) [739903][T1_Occam][T1][Y0-0000000000000000-0-0] [lt=3][errcode=-4721] nonblock get location failed(ret=-4721, ret=“OB_LS_LOCATION_NOT_EXIST”, cluster_id=11, tenant_id=1, ls_id={id:1})

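I checked the services on this standalone machine and there is no ob_agentd. I don't know whether that is related.

For manually deploying an OB cluster, see https://open.oceanbase.com/blog/10900138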