[2024-03-27T14:09:38.994+0800] INFO - Dependencies all met for dep_context=non-requeueable deps ti=<TaskInstance: init_metadb.start_metadb manual__2024-03-27T06:09:28.532045+00:00 map_index=0 [queued]>
[2024-03-27T14:09:39.007+0800] INFO - Dependencies all met for dep_context=requeueable deps ti=<TaskInstance: init_metadb.start_metadb manual__2024-03-27T06:09:28.532045+00:00 map_index=0 [queued]>
[2024-03-27T14:09:39.007+0800] INFO -
[2024-03-27T14:09:39.007+0800] INFO - Starting attempt 1 of 1
[2024-03-27T14:09:39.007+0800] INFO -
[2024-03-27T14:09:39.024+0800] INFO - Executing <Mapped(_PythonDecoratedOperator): start_metadb> on 2024-03-27 06:09:28.532045+00:00
[2024-03-27T14:09:39.031+0800] INFO - Started process 102724 to run task
[2024-03-27T14:09:39.034+0800] INFO - Running: ['airflow', 'tasks', 'run', 'init_metadb', 'start_metadb', 'manual__2024-03-27T06:09:28.532045+00:00', '--job-id', '2896', '--raw', '--subdir', 'DAGS_FOLDER/init_metadb.py', '--cfg-path', '/tmp/tmpkyhqjyiz', '--map-index', '0']
[2024-03-27T14:09:39.035+0800] INFO - Job 2896: Subtask start_metadb
[2024-03-27T14:09:39.102+0800] INFO - Running <TaskInstance: init_metadb.start_metadb manual__2024-03-27T06:09:28.532045+00:00 map_index=0 [running]> on host localhost.localdomain

[2024-03-27T14:09:39.207+0800] INFO - Exporting the following env vars:
AIRFLOW_CTX_DAG_OWNER=airflow
AIRFLOW_CTX_DAG_ID=init_metadb
AIRFLOW_CTX_TASK_ID=start_metadb
AIRFLOW_CTX_EXECUTION_DATE=2024-03-27T06:09:28.532045+00:00
AIRFLOW_CTX_TRY_NUMBER=1
AIRFLOW_CTX_DAG_RUN_ID=manual__2024-03-27T06:09:28.532045+00:00
[2024-03-27T14:09:39.209+0800] INFO - Running statement: select id, ip from oat_server where id=%s, parameters: [2]
[2024-03-27T14:09:39.210+0800] INFO - Rows affected: 1
[2024-03-27T14:09:39.211+0800] INFO - Running statement: select * from oat_image where id=%s, parameters: [1]
[2024-03-27T14:09:39.212+0800] INFO - Rows affected: 1
[2024-03-27T14:09:39.213+0800] INFO - Running statement: select a.id, a.ip, a.hardware, b.name as idc, b.region from oat_server a, oat_idc b where a.idc_id=b.id and a.id in (%s), parameters: [2]
[2024-03-27T14:09:39.214+0800] INFO - Rows affected: 1
[2024-03-27T14:09:39.216+0800] INFO - Running statement: select oat_server.id, oat_credential.id as credential_id, ip, ssh_port, username, password, auth_type, key_data, passphrase from oat_server, oat_credential where oat_server.credential_id=oat_credential.id and oat_server.id=%s, parameters: [2]
[2024-03-27T14:09:39.216+0800] INFO - Rows affected: 1

[2024-03-27T14:09:39.231+0800] INFO - execute command on 10.17.30.235:
ob_version="4.x"
data_dir_full="/data/1/metadb_hy"
log_dir_full="/data/log1/metadb_hy"
for d in "/home/admin/oceanbase" "$data_dir_full" "$log_dir_full";
do
    if [ -e "$d" ]; then
        [ "$(ls -A $d | grep -vw lost+found)" ] && { echo "$d is not empty. Please clean it and retry!" > /dev/stderr; exit 2; }
    else
        mkdir -p "$d"
    fi
    chown -R 500:500 $d
done
data_used_percentage=$(df --output=pcent "/data/1"| tail -n 1 | sed 's/%//')
log_used_percentage=$(df --output=pcent "/data/log1"| tail -n 1 | sed 's/%//')
if [[ $(df --output=target "/data/1" | tail -1) = $(df --output=target "/data/log1" | tail -1) ]]; then
    share_disk=yes
fi

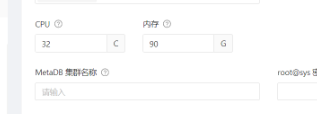

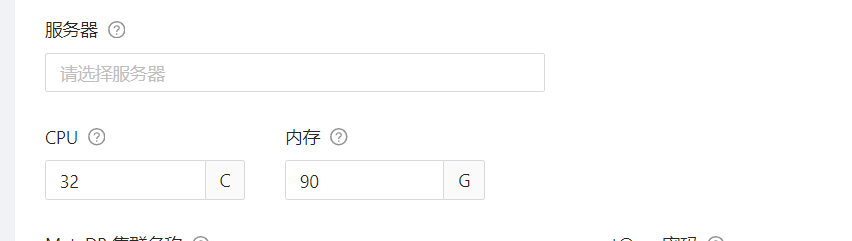

if [[ "$ob_version" == "4.x" ]]; then
    real_data_percentage=90
    real_log_percentage=90
    if [[ -n $share_disk ]]; then

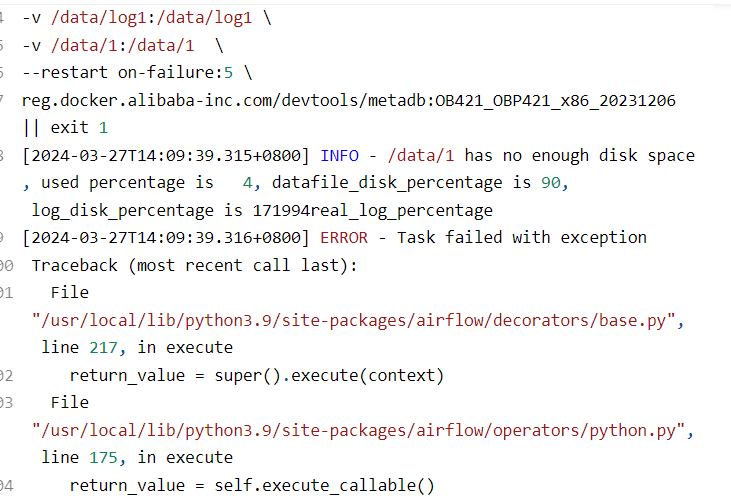

        [[ $real_data_percentage = 0 ]] && real_data_percentage=60
        [[ $real_log_percentage = 0 ]] && real_log_percentage=30

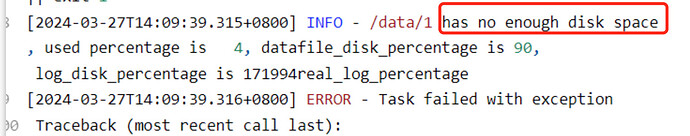

        if [[ $(($data_used_percentage + $real_data_percentage + $real_log_percentage)) -gt 99 ]]; then
            echo "/data/1 has no enough disk space, used percentage is $data_used_percentage, datafile_disk_percentage is $real_data_percentage, log_disk_percentage is $$real_log_percentage"; exit 1
        fi
    else
        if [[ $(($data_used_percentage + $real_data_percentage)) -gt 99 ]]; then
            echo "/data/1 has no enough disk space, used percentage is $data_used_percentage, datafile_disk_percentage is $real_data_percentage"; exit 1
        fi
        if [[ $(($log_used_percentage + $real_log_percentage)) -gt 99 ]]; then
            echo "/data/log1 has no enough disk space, used percentage is $log_used_percentage, log_disk_percentage is $real_log_percentage"; exit 1
        fi
    fi
else
    if [[ $(($data_used_percentage + 90)) -gt 99 ]]; then
        echo "/data/1 has no enough disk space, used percentage is $data_used_percentage, datafile_disk_percentage is 90"; exit 1
    fi
fi

docker run --net host --cap-add SYS_RESOURCE --rm -v /home/admin/oceanbase:/home/admin/oceanbase -v /data/log1:/data/log1 -v /data/1:/data/1 \
    --entrypoint su reg.docker.alibaba-inc.com/devtools/metadb:OB421_OBP421_x86_20231206 - admin -c 'for d in /home/admin/oceanbase /data/1/metadb_hy /data/log1/metadb_hy; do touch $d/test_file_oat && rm -f $d/test_file_oat || exit 1; done'
[ $? -ne 0 ] && { echo "mount dir check failed, admin user in metadb container must has write permission" > /dev/stderr; exit 3; }

dev_name=$(ip a | grep -w 10.17.30.235 | grep inet | awk '{print $NF}')
[ -z "$dev_name" ] && { echo 'can not get nic dev name!'; exit 1; }

docker run -d -it --cap-add SYS_RESOURCE --name metadb --net=host \
    -e OBCLUSTER_NAME=metadb_hy \
    -e DEV_NAME=$dev_name \
    -e ROOTSERVICE_LIST=10.17.30.235:2882:2881 \
    -e DATAFILE_DISK_PERCENTAGE=90 \
    -e CLUSTER_ID=1711519768 \
    -e ZONE_NAME=META_ZONE_1 \
    -e OBPROXY_PORT=2883 \
    -e MYSQL_PORT=2881 \
    -e RPC_PORT=2882 \
    -e app.password_root='***' \
    -e OBPROXY_OPTSTR=obproxy_sys_password=9dc6c63145152b1a3953fe32ba606be5b08ecbf1,observer_sys_password=74a66feae6ac65a96030746384d820c8664946cb,automatic_match_work_thread=false,enable_strict_kernel_release=false,work_thread_num=16,proxy_mem_limited=3G,client_max_connections=16384,log_dir_size_threshold=10G \
    -e OPTSTR=cpu_count=32,memory_limit=87G,system_memory=10G,__min_full_resource_pool_memory=1073741824,log_disk_percentage=90 \
    -e SSHD_PORT=2022 \
    --cpu-period 100000 \
    --cpu-quota 3200000 \
    --cpuset-cpus "0-31" \
    --memory 90G \
    -v /home/admin/oceanbase:/home/admin/oceanbase \
    -v /data/log1:/data/log1 \
    -v /data/1:/data/1 \
    --restart on-failure:5 \
    reg.docker.alibaba-inc.com/devtools/metadb:OB421_OBP421_x86_20231206 || exit 1

[2024-03-27T14:09:39.315+0800] INFO - /data/1 has no enough disk space, used percentage is 4, datafile_disk_percentage is 90, log_disk_percentage is 171994real_log_percentage
[2024-03-27T14:09:39.316+0800] ERROR - Task failed with exception
Traceback (most recent call last):
  File "/usr/local/lib/python3.9/site-packages/airflow/decorators/base.py", line 217, in execute
    return_value = super().execute(context)
  File "/usr/local/lib/python3.9/site-packages/airflow/operators/python.py", line 175, in execute
    return_value = self.execute_callable()
  File "/usr/local/lib/python3.9/site-packages/airflow/operators/python.py", line 192, in execute_callable
    return self.python_callable(*self.op_args, **self.op_kwargs)
  File "/oat/task_engine/dags/init_metadb.py", line 120, in start_metadb
    raise RuntimeError(f'start metadb on {server_ip} failed')
RuntimeError: start metadb on 10.17.30.235 failed
[2024-03-27T14:09:39.325+0800] INFO - Marking task as FAILED. dag_id=init_metadb, task_id=start_metadb, map_index=0, execution_date=20240327T060928, start_date=20240327T060938, end_date=20240327T060939
[2024-03-27T14:09:39.343+0800] ERROR - Failed to execute job 2896 for task start_metadb (start metadb on 10.17.30.235 failed; 102724)
[2024-03-27T14:09:39.365+0800] INFO - Task exited with return code 1
[2024-03-27T14:09:39.399+0800] INFO - 0 downstream tasks scheduled from follow-on schedule check
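
The failure follows from the arithmetic of the disk check in the echoed script. `/data/1` and `/data/log1` resolve to the same mount target, so the shared-disk branch adds the used percentage and both reservations: 4 + 90 + 90 = 184, which is greater than 99. The odd `171994real_log_percentage` in the message comes from `$$real_log_percentage` in the `echo`: the shell expands `$$` to its own process ID and prints the rest literally. The sketch below is only an illustration of that check for the `4.x` branch, not the code the task runs; the function name `check_disk_budget` and its parameters are hypothetical.

```python
from typing import Optional


def check_disk_budget(
    data_used: int,
    log_used: int,
    data_pct: int = 90,
    log_pct: int = 90,
    share_disk: bool = False,
    limit: int = 99,
) -> Optional[str]:
    """Return a message if the requested percentages do not fit, else None.

    Hypothetical helper mirroring the 4.x branch of the shell check in the log.
    """
    if share_disk:
        # Data and log sit on one filesystem, so both reservations
        # count against the same disk.
        total = data_used + data_pct + log_pct
        if total > limit:
            return (f"/data/1: used {data_used} + datafile {data_pct} "
                    f"+ log {log_pct} = {total} > {limit}")
        return None
    # Separate filesystems: each reservation is checked on its own disk.
    if data_used + data_pct > limit:
        return f"/data/1: used {data_used} + datafile {data_pct} > {limit}"
    if log_used + log_pct > limit:
        return f"/data/log1: used {log_used} + log {log_pct} > {limit}"
    return None


# Values taken from the log: 4% used, shared disk, both percentages set to 90.
print(check_disk_budget(4, 4, share_disk=True))
```

Under the same check, a shared disk at 4% used would pass with the script's fallback values of 60 and 30, since 4 + 60 + 30 = 94; with separate disks, 90 and 90 would also pass at 4% used on each.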