Hello, after I switched to 2.0.0, logproxy starts up, but there is no response at all when a client tries to connect.

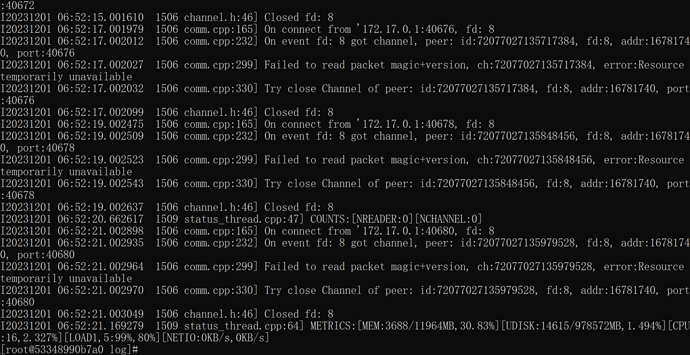

[root@53c58b2a588a log]# cat logproxy.log

[2023-12-01 08:22:52] [info] environmental.cpp(27): Max file descriptors: 1048576

[2023-12-01 08:22:52] [info] environmental.cpp(34): Max processes/threads: 18446744073709551615

[2023-12-01 08:22:52] [info] environmental.cpp(41): Core dump size: 0

[2023-12-01 08:22:52] [info] environmental.cpp(48): Maximum number of pending signals: 47831

[2023-12-01 08:22:52] [info] channel_factory.cpp(49): ChannelFactory init with server mode

[2023-12-01 08:22:52] [info] comm.cpp(105): +++ Listen on port: 2983, fd: 7

[2023-12-01 08:22:52] [info] file_gc.cpp(180): file gc size quota MB: 5242880

[2023-12-01 08:22:52] [info] file_gc.cpp(181): file gc time quota day: 7

[2023-12-01 08:22:52] [info] file_gc.cpp(182): file gc path: log

[2023-12-01 08:22:52] [info] file_gc.cpp(183): file gc oblogreader path: ./run

[2023-12-01 08:22:52] [info] file_gc.cpp(184): file gc oblogreader path retain hour: 168

[2023-12-01 08:22:52] [info] file_gc.cpp(186): file gc prefixs: binlog.converter

[2023-12-01 08:22:52] [info] file_gc.cpp(186): file gc prefixs: binlog_converter.2

[2023-12-01 08:22:52] [info] file_gc.cpp(186): file gc prefixs: liboblog.log.2

[2023-12-01 08:22:52] [info] file_gc.cpp(186): file gc prefixs: logproxy.2

[2023-12-01 08:22:52] [info] file_gc.cpp(186): file gc prefixs: logproxy_

[2023-12-01 08:22:52] [info] file_gc.cpp(186): file gc prefixs: trace_record_read.2

[2023-12-01 08:22:52] [info] comm.cpp(111): +++ Communicator about to start

[2023-12-01 08:23:02] [info] status_thread.cpp(44): COUNTS:[NREADER:0][NCHANNEL:0]

[2023-12-01 08:23:04] [info] status_thread.cpp(62): METRICS:[MEM:3559/11964MB,29.75%][UDISK:67736/1031018MB,6.57%][CPU:16,6.995%][LOAD1,5:163%,172%][NETIO:0KB/s,0KB/s]

[2023-12-01 08:23:04] [info] status_thread.cpp(81): METRICS:[CLIENT_ID:/usr/local/oblogproxy][PID:1902][MEM:7/11964MB,0%][UDISK:1490/1031018MB,6.57%][CPU:16,0%][NETIO:0KB/s,0KB/s]

[2023-12-01 08:23:14] [info] status_thread.cpp(44): COUNTS:[NREADER:0][NCHANNEL:0]

[2023-12-01 08:23:15] [info] status_thread.cpp(62): METRICS:[MEM:3560/11964MB,29.76%][UDISK:67738/1031018MB,6.57%][CPU:16,6.389%][LOAD1,5:138%,166%][NETIO:22KB/s,22KB/s]

[2023-12-01 08:23:15] [info] status_thread.cpp(81): METRICS:[CLIENT_ID:/usr/local/oblogproxy][PID:1902][MEM:7/11964MB,0%][UDISK:1490/1031018MB,6.57%][CPU:16,0%][NETIO:0KB/s,22KB/s]

[2023-12-01 08:23:25] [info] status_thread.cpp(44): COUNTS:[NREADER:0][NCHANNEL:0]

[2023-12-01 08:23:27] [info] status_thread.cpp(62): METRICS:[MEM:3560/11964MB,29.76%][UDISK:67739/1031018MB,6.57%][CPU:16,5.705%][LOAD1,5:117%,161%][NETIO:18KB/s,18KB/s]

[2023-12-01 08:23:27] [info] status_thread.cpp(81): METRICS:[CLIENT_ID:/usr/local/oblogproxy][PID:1902][MEM:7/11964MB,0%][UDISK:1490/1031018MB,6.57%][CPU:16,0%][NETIO:0KB/s,18KB/s]

[2023-12-01 08:23:37] [info] status_thread.cpp(44): COUNTS:[NREADER:0][NCHANNEL:0]

[2023-12-01 08:23:38] [info] status_thread.cpp(62): METRICS:[MEM:3561/11964MB,29.76%][UDISK:67741/1031018MB,6.57%][CPU:16,6.643%][LOAD1,5:153%,166%][NETIO:21KB/s,21KB/s]

[2023-12-01 08:23:38] [info] status_thread.cpp(81): METRICS:[CLIENT_ID:/usr/local/oblogproxy][PID:1902][MEM:7/11964MB,0%][UDISK:1490/1031018MB,6.57%][CPU:16,0%][NETIO:0KB/s,21KB/s]
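
Every COUNTS line above stays at NREADER:0 and NCHANNEL:0, which suggests the proxy never registered a session for the client. To watch these counters without reading the log by eye, here is a minimal sketch that extracts them from a log line. It assumes the line format is exactly the one shown in the log above; the class name `CountsLine` is hypothetical and not part of oblogproxy.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class CountsLine {
    // Matches the counter portion of a status_thread.cpp COUNTS line,
    // assuming the format seen in the log excerpt above.
    private static final Pattern COUNTS =
            Pattern.compile("COUNTS:\\[NREADER:(\\d+)\\]\\[NCHANNEL:(\\d+)\\]");

    /** Returns {nreader, nchannel}, or null if the line is not a COUNTS line. */
    public static int[] parse(String line) {
        Matcher m = COUNTS.matcher(line);
        if (!m.find()) {
            return null;
        }
        return new int[] {
            Integer.parseInt(m.group(1)),
            Integer.parseInt(m.group(2))
        };
    }

    public static void main(String[] args) {
        String line = "[2023-12-01 08:23:02] [info] status_thread.cpp(44): "
                + "COUNTS:[NREADER:0][NCHANNEL:0]";
        int[] counts = parse(line);
        System.out.println("readers=" + counts[0] + " channels=" + counts[1]);
    }
}
```

If both values are still 0 while the client is running, the connection most likely never reached the handshake stage.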

Client code:

public static void main(String[] args) throws Exception {

ObReaderConfig config = new ObReaderConfig();

//config.setClusterUrl("http://10.201.69.20:8080/services?Action=ObRootServiceInfo&User_ID=alibaba&UID=ocpmaster&ObRegion=jzob42");

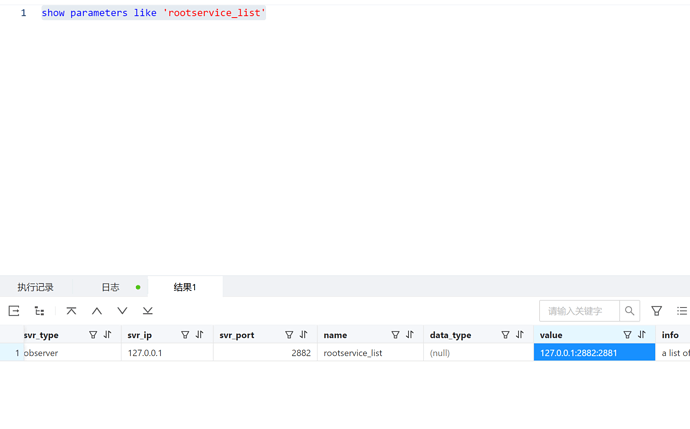

config.setRsList("127.0.0.1:2882:2881");

config.setUsername("proxyro@test");

config.setPassword("Root123@@Root123");

config.setStartTimestamp(0L);

config.setTableWhiteList("test.*.*");

config.setWorkingMode("memory");

ClientConf clientConf =

ClientConf.builder()

.transferQueueSize(1000)

.connectTimeoutMs(3000)

.maxReconnectTimes(100)

.ignoreUnknownRecordType(true)

.clientId("test")

.build();

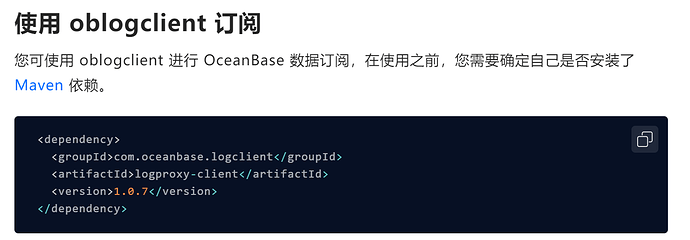

LogProxyClient client = new LogProxyClient("127.0.0.1", 2983, config,clientConf);

// Bind a RecordListener that handles the log data

client.addListener(new RecordListener() {

@Override

public void notify(LogMessage message){

// Add the data-processing logic here

System.out.println(message.getOpt());

}

@Override

public void onException(LogProxyClientException e) {

System.out.println(e.getMessage());

}

});

client.start();

client.join();

}
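
The client connects to 127.0.0.1:2983, and the proxy log shows it listening on port 2983 inside the container, so one thing worth ruling out is whether that port is reachable from where the client runs. A minimal sketch of a plain TCP check follows. The class name `PortCheck` is hypothetical, it uses only `java.net.Socket`, and it says nothing about the logproxy handshake itself. The 3000 ms timeout simply mirrors `connectTimeoutMs(3000)` from the client code.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class PortCheck {
    /** Returns true if a TCP connection to host:port opens within timeoutMs. */
    public static boolean canConnect(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or unreachable: treat all as "not reachable".
            return false;
        }
    }

    public static void main(String[] args) {
        // Host and port are taken from the LogProxyClient constructor above.
        System.out.println("reachable=" + canConnect("127.0.0.1", 2983, 3000));
    }
}
```

A `false` result would point to port mapping or networking rather than the client configuration; a `true` result only confirms that the TCP layer is fine.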

I'm not sure what I missed earlier. The commands I ran, in order, were:

docker exec -it ob ob-mysql sys

create user proxyro identified by 'Root123@@Root123';

grant select on test.* to proxyro;

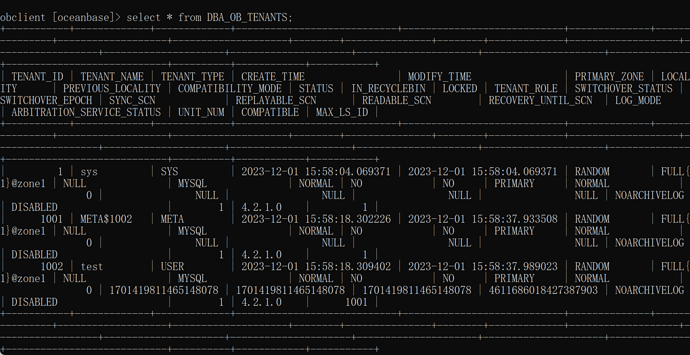

I saw that the Docker image already had a test tenant, so I just used it.