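OceanBase Flink CDC cannot capture any data after a restart. After I switched to different Flink and CDC versions, synchronization worked normally, but once Flink was restarted it stopped receiving data again. How should I troubleshoot this?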

No data is captured, and after waiting for a while the job reports a timeout.

The current versions are as follows:

oblogproxy-ce-for-4x-1.1.3-20230804144645

OceanBase_CE 4.1.0.0 (r100000202023040520-0765e69043c31bf86e83b5d618db0530cf31b707)

flink-1.17.0

flink-sql-connector-oceanbase-cdc-2.4.0.jar

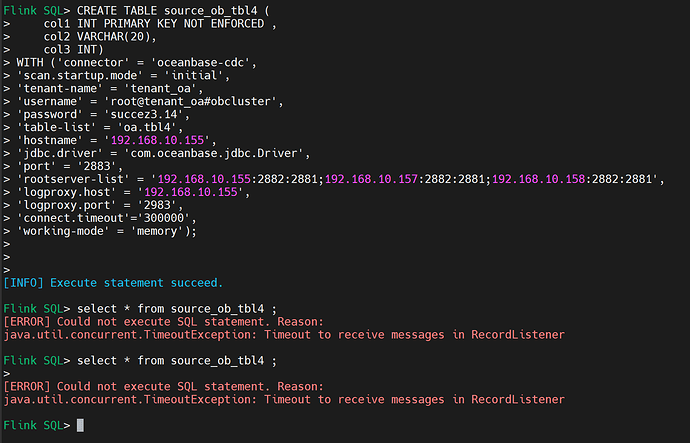

The sync job is created in Flink SQL:

CREATE TABLE source_ob_tbl4 (
    col1 INT PRIMARY KEY NOT ENFORCED,
    col2 VARCHAR(20),
    col3 INT
) WITH (
    'connector' = 'oceanbase-cdc',
    'scan.startup.mode' = 'initial',
    'tenant-name' = 'tenant**',
    'username' = 'root@tenant**#obcluster',
    'password' = '**',
    'table-list' = 'oa.tbl4',
    'hostname' = '192.168.10.155',
    'jdbc.driver' = 'com.oceanbase.jdbc.Driver',
    'port' = '2883',
    'rootserver-list' = '192.168.10.155:2882:2881;192.168.10.157:2882:2881;192.168.10.158:2882:2881',
    'logproxy.host' = '192.168.10.155',
    'logproxy.port' = '2983',
    'connect.timeout' = '300000',
    'working-mode' = 'memory'
);

Log information

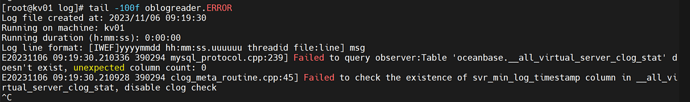

oblogproxy log:

Flink log:

2023-11-06 09:19:29,479 INFO org.apache.flink.runtime.dispatcher.StandaloneDispatcher [] - Received JobGraph submission 'collect' (80b32cfc9550315fc526758d2f0889ee).

2023-11-06 09:19:29,479 INFO org.apache.flink.runtime.dispatcher.StandaloneDispatcher [] - Submitting job 'collect' (80b32cfc9550315fc526758d2f0889ee).

2023-11-06 09:19:29,484 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcService [] - Starting RPC endpoint for org.apache.flink.runtime.jobmaster.JobMaster at akka://flink/user/rpc/jobmanager_9 .

2023-11-06 09:19:29,485 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Initializing job 'collect' (80b32cfc9550315fc526758d2f0889ee).

2023-11-06 09:19:29,486 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Using restart back off time strategy NoRestartBackoffTimeStrategy for collect (80b32cfc9550315fc526758d2f0889ee).

2023-11-06 09:19:29,489 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Created execution graph ada295705f8c7b03a59bac7ed0dbdf28 for job 80b32cfc9550315fc526758d2f0889ee.

2023-11-06 09:19:29,490 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Running initialization on master for job collect (80b32cfc9550315fc526758d2f0889ee).

2023-11-06 09:19:29,490 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Successfully ran initialization on master in 0 ms.

2023-11-06 09:19:29,491 INFO org.apache.flink.runtime.scheduler.adapter.DefaultExecutionTopology [] - Built 1 new pipelined regions in 0 ms, total 1 pipelined regions currently.

2023-11-06 09:19:29,492 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - No state backend has been configured, using default (HashMap) org.apache.flink.runtime.state.hashmap.HashMapStateBackend@27a182c9

2023-11-06 09:19:29,492 INFO org.apache.flink.runtime.state.StateBackendLoader [] - State backend loader loads the state backend as HashMapStateBackend

2023-11-06 09:19:29,492 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Checkpoint storage is set to 'jobmanager'

2023-11-06 09:19:29,493 INFO org.apache.flink.runtime.checkpoint.CheckpointCoordinator [] - No checkpoint found during restore.

2023-11-06 09:19:29,494 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Using failover strategy org.apache.flink.runtime.executiongraph.failover.flip1.RestartPipelinedRegionFailoverStrategy@ab8314d for collect (80b32cfc9550315fc526758d2f0889ee).

2023-11-06 09:19:29,495 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Starting execution of job 'collect' (80b32cfc9550315fc526758d2f0889ee) under job master id 00000000000000000000000000000000.

2023-11-06 09:19:29,495 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Starting scheduling with scheduling strategy [org.apache.flink.runtime.scheduler.strategy.PipelinedRegionSchedulingStrategy]

2023-11-06 09:19:29,495 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Job collect (80b32cfc9550315fc526758d2f0889ee) switched from state CREATED to RUNNING.

2023-11-06 09:19:29,496 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Source: source_ob_tbl4[1] -> ConstraintEnforcer[2] -> Sink: Collect table sink (1/1) (ada295705f8c7b03a59bac7ed0dbdf28_cbc357ccb763df2852fee8c4fc7d55f2_0_0) switched from CREATED to SCHEDULED.

2023-11-06 09:19:29,497 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Connecting to ResourceManager akka.tcp://flink@localhost:6123/user/rpc/resourcemanager_*(00000000000000000000000000000000)

2023-11-06 09:19:29,498 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Resolved ResourceManager address, beginning registration

2023-11-06 09:19:29,499 INFO org.apache.flink.runtime.resourcemanager.StandaloneResourceManager [] - Registering job manager 00000000000000000000000000000000@akka.tcp://flink@localhost:6123/user/rpc/jobmanager_9 for job 80b32cfc9550315fc526758d2f0889ee.

2023-11-06 09:19:29,499 INFO org.apache.flink.runtime.resourcemanager.StandaloneResourceManager [] - Registered job manager 00000000000000000000000000000000@akka.tcp://flink@localhost:6123/user/rpc/jobmanager_9 for job 80b32cfc9550315fc526758d2f0889ee.

2023-11-06 09:19:29,500 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - JobManager successfully registered at ResourceManager, leader id: 00000000000000000000000000000000.

2023-11-06 09:19:29,500 INFO org.apache.flink.runtime.resourcemanager.slotmanager.DeclarativeSlotManager [] - Received resource requirements from job 80b32cfc9550315fc526758d2f0889ee: [ResourceRequirement{resourceProfile=ResourceProfile{UNKNOWN}, numberOfRequiredSlots=1}]

2023-11-06 09:19:29,597 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Source: source_ob_tbl4[1] -> ConstraintEnforcer[2] -> Sink: Collect table sink (1/1) (ada295705f8c7b03a59bac7ed0dbdf28_cbc357ccb763df2852fee8c4fc7d55f2_0_0) switched from SCHEDULED to DEPLOYING.

2023-11-06 09:19:29,598 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Deploying Source: source_ob_tbl4[1] -> ConstraintEnforcer[2] -> Sink: Collect table sink (1/1) (attempt #0) with attempt id ada295705f8c7b03a59bac7ed0dbdf28_cbc357ccb763df2852fee8c4fc7d55f2_0_0 and vertex id cbc357ccb763df2852fee8c4fc7d55f2_0 to localhost:6704-895fc4 @ localhost (dataPort=26708) with allocation id 37866fdec06ab581c002006d4adbe2e7

2023-11-06 09:19:29,834 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Source: source_ob_tbl4[1] -> ConstraintEnforcer[2] -> Sink: Collect table sink (1/1) (ada295705f8c7b03a59bac7ed0dbdf28_cbc357ccb763df2852fee8c4fc7d55f2_0_0) switched from DEPLOYING to INITIALIZING.

2023-11-06 09:19:29,870 INFO org.apache.flink.streaming.api.operators.collect.CollectSinkOperatorCoordinator [] - Received sink socket server address: localhost/127.0.0.1:21863

2023-11-06 09:19:29,870 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Source: source_ob_tbl4[1] -> ConstraintEnforcer[2] -> Sink: Collect table sink (1/1) (ada295705f8c7b03a59bac7ed0dbdf28_cbc357ccb763df2852fee8c4fc7d55f2_0_0) switched from INITIALIZING to RUNNING.

2023-11-06 09:19:29,931 INFO org.apache.flink.streaming.api.operators.collect.CollectSinkOperatorCoordinator [] - Sink connection established

2023-11-06 09:24:29,899 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Source: source_ob_tbl4[1] -> ConstraintEnforcer[2] -> Sink: Collect table sink (1/1) (ada295705f8c7b03a59bac7ed0dbdf28_cbc357ccb763df2852fee8c4fc7d55f2_0_0) switched from RUNNING to FAILED on localhost:6704-895fc4 @ localhost (dataPort=26708).

java.util.concurrent.TimeoutException: Timeout to receive messages in RecordListener

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.readChangeRecords(OceanBaseRichSourceFunction.java:374) ~[flink-sql-connector-oceanbase-cdc-2.4.0.jar:2.4.0]

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.run(OceanBaseRichSourceFunction.java:158) ~[flink-sql-connector-oceanbase-cdc-2.4.0.jar:2.4.0]

at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:110) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:67) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.streaming.runtime.tasks.SourceStreamTask$LegacySourceFunctionThread.run(SourceStreamTask.java:333) ~[flink-dist-1.17.0.jar:1.17.0]

2023-11-06 09:24:29,901 INFO org.apache.flink.runtime.resourcemanager.slotmanager.DeclarativeSlotManager [] - Clearing resource requirements of job 80b32cfc9550315fc526758d2f0889ee

2023-11-06 09:24:29,901 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Job collect (80b32cfc9550315fc526758d2f0889ee) switched from state RUNNING to FAILING.

org.apache.flink.runtime.JobException: Recovery is suppressed by NoRestartBackoffTimeStrategy

at org.apache.flink.runtime.executiongraph.failover.flip1.ExecutionFailureHandler.handleFailure(ExecutionFailureHandler.java:139) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.executiongraph.failover.flip1.ExecutionFailureHandler.getFailureHandlingResult(ExecutionFailureHandler.java:83) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.scheduler.DefaultScheduler.recordTaskFailure(DefaultScheduler.java:258) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.scheduler.DefaultScheduler.handleTaskFailure(DefaultScheduler.java:249) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.scheduler.DefaultScheduler.onTaskFailed(DefaultScheduler.java:242) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.scheduler.SchedulerBase.onTaskExecutionStateUpdate(SchedulerBase.java:748) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.scheduler.SchedulerBase.updateTaskExecutionState(SchedulerBase.java:725) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.scheduler.SchedulerNG.updateTaskExecutionState(SchedulerNG.java:80) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.jobmaster.JobMaster.updateTaskExecutionState(JobMaster.java:479) ~[flink-dist-1.17.0.jar:1.17.0]

at sun.reflect.GeneratedMethodAccessor63.invoke(Unknown Source) ~[?:?]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_242]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_242]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.lambda$handleRpcInvocation$1(AkkaRpcActor.java:309) ~[flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at org.apache.flink.runtime.concurrent.akka.ClassLoadingUtils.runWithContextClassLoader(ClassLoadingUtils.java:83) ~[flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcInvocation(AkkaRpcActor.java:307) ~[flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcMessage(AkkaRpcActor.java:222) ~[flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at org.apache.flink.runtime.rpc.akka.FencedAkkaRpcActor.handleRpcMessage(FencedAkkaRpcActor.java:84) ~[flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleMessage(AkkaRpcActor.java:168) ~[flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:24) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:20) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at scala.PartialFunction.applyOrElse(PartialFunction.scala:127) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at scala.PartialFunction.applyOrElse$(PartialFunction.scala:126) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:20) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:175) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:176) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:176) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.actor.Actor.aroundReceive(Actor.scala:537) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.actor.Actor.aroundReceive$(Actor.scala:535) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.actor.AbstractActor.aroundReceive(AbstractActor.scala:220) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.actor.ActorCell.receiveMessage(ActorCell.scala:579) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.actor.ActorCell.invoke(ActorCell.scala:547) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:270) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.dispatch.Mailbox.run(Mailbox.scala:231) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.dispatch.Mailbox.exec(Mailbox.scala:243) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289) [?:1.8.0_242]

at java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1056) [?:1.8.0_242]

at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1692) [?:1.8.0_242]

at java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:157) [?:1.8.0_242]

Caused by: java.util.concurrent.TimeoutException: Timeout to receive messages in RecordListener

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.readChangeRecords(OceanBaseRichSourceFunction.java:374) ~[flink-sql-connector-oceanbase-cdc-2.4.0.jar:2.4.0]

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.run(OceanBaseRichSourceFunction.java:158) ~[flink-sql-connector-oceanbase-cdc-2.4.0.jar:2.4.0]

at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:110) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:67) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.streaming.runtime.tasks.SourceStreamTask$LegacySourceFunctionThread.run(SourceStreamTask.java:333) ~[flink-dist-1.17.0.jar:1.17.0]

2023-11-06 09:24:29,914 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Job collect (80b32cfc9550315fc526758d2f0889ee) switched from state FAILING to FAILED.

org.apache.flink.runtime.JobException: Recovery is suppressed by NoRestartBackoffTimeStrategy

at org.apache.flink.runtime.executiongraph.failover.flip1.ExecutionFailureHandler.handleFailure(ExecutionFailureHandler.java:139) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.executiongraph.failover.flip1.ExecutionFailureHandler.getFailureHandlingResult(ExecutionFailureHandler.java:83) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.scheduler.DefaultScheduler.recordTaskFailure(DefaultScheduler.java:258) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.scheduler.DefaultScheduler.handleTaskFailure(DefaultScheduler.java:249) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.scheduler.DefaultScheduler.onTaskFailed(DefaultScheduler.java:242) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.scheduler.SchedulerBase.onTaskExecutionStateUpdate(SchedulerBase.java:748) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.scheduler.SchedulerBase.updateTaskExecutionState(SchedulerBase.java:725) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.scheduler.SchedulerNG.updateTaskExecutionState(SchedulerNG.java:80) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.runtime.jobmaster.JobMaster.updateTaskExecutionState(JobMaster.java:479) ~[flink-dist-1.17.0.jar:1.17.0]

at sun.reflect.GeneratedMethodAccessor63.invoke(Unknown Source) ~[?:?]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_242]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_242]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.lambda$handleRpcInvocation$1(AkkaRpcActor.java:309) ~[flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at org.apache.flink.runtime.concurrent.akka.ClassLoadingUtils.runWithContextClassLoader(ClassLoadingUtils.java:83) ~[flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcInvocation(AkkaRpcActor.java:307) ~[flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcMessage(AkkaRpcActor.java:222) ~[flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at org.apache.flink.runtime.rpc.akka.FencedAkkaRpcActor.handleRpcMessage(FencedAkkaRpcActor.java:84) ~[flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleMessage(AkkaRpcActor.java:168) ~[flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:24) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:20) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at scala.PartialFunction.applyOrElse(PartialFunction.scala:127) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at scala.PartialFunction.applyOrElse$(PartialFunction.scala:126) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:20) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:175) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:176) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:176) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.actor.Actor.aroundReceive(Actor.scala:537) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.actor.Actor.aroundReceive$(Actor.scala:535) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.actor.AbstractActor.aroundReceive(AbstractActor.scala:220) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.actor.ActorCell.receiveMessage(ActorCell.scala:579) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.actor.ActorCell.invoke(ActorCell.scala:547) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:270) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.dispatch.Mailbox.run(Mailbox.scala:231) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at akka.dispatch.Mailbox.exec(Mailbox.scala:243) [flink-rpc-akka_bd3f1ada-d4d4-4d4c-a73a-a05ea3417c2f.jar:1.17.0]

at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289) [?:1.8.0_242]

at java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1056) [?:1.8.0_242]

at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1692) [?:1.8.0_242]

at java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:157) [?:1.8.0_242]

Caused by: java.util.concurrent.TimeoutException: Timeout to receive messages in RecordListener

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.readChangeRecords(OceanBaseRichSourceFunction.java:374) ~[flink-sql-connector-oceanbase-cdc-2.4.0.jar:2.4.0]

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.run(OceanBaseRichSourceFunction.java:158) ~[flink-sql-connector-oceanbase-cdc-2.4.0.jar:2.4.0]

at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:110) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:67) ~[flink-dist-1.17.0.jar:1.17.0]

at org.apache.flink.streaming.runtime.tasks.SourceStreamTask$LegacySourceFunctionThread.run(SourceStreamTask.java:333) ~[flink-dist-1.17.0.jar:1.17.0]

2023-11-06 09:24:29,914 INFO org.apache.flink.runtime.checkpoint.CheckpointCoordinator [] - Stopping checkpoint coordinator for job 80b32cfc9550315fc526758d2f0889ee.

2023-11-06 09:24:29,915 INFO org.apache.flink.runtime.dispatcher.StandaloneDispatcher [] - Job 80b32cfc9550315fc526758d2f0889ee reached terminal state FAILED.

org.apache.flink.runtime.JobException: Recovery is suppressed by NoRestartBackoffTimeStrategy

at org.apache.flink.runtime.executiongraph.failover.flip1.ExecutionFailureHandler.handleFailure(ExecutionFailureHandler.java:139)

at org.apache.flink.runtime.executiongraph.failover.flip1.ExecutionFailureHandler.getFailureHandlingResult(ExecutionFailureHandler.java:83)

at org.apache.flink.runtime.scheduler.DefaultScheduler.recordTaskFailure(DefaultScheduler.java:258)

at org.apache.flink.runtime.scheduler.DefaultScheduler.handleTaskFailure(DefaultScheduler.java:249)

at org.apache.flink.runtime.scheduler.DefaultScheduler.onTaskFailed(DefaultScheduler.java:242)

at org.apache.flink.runtime.scheduler.SchedulerBase.onTaskExecutionStateUpdate(SchedulerBase.java:748)

at org.apache.flink.runtime.scheduler.SchedulerBase.updateTaskExecutionState(SchedulerBase.java:725)

at org.apache.flink.runtime.scheduler.SchedulerNG.updateTaskExecutionState(SchedulerNG.java:80)

at org.apache.flink.runtime.jobmaster.JobMaster.updateTaskExecutionState(JobMaster.java:479)

at sun.reflect.GeneratedMethodAccessor63.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.lambda$handleRpcInvocation$1(AkkaRpcActor.java:309)

at org.apache.flink.runtime.concurrent.akka.ClassLoadingUtils.runWithContextClassLoader(ClassLoadingUtils.java:83)

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcInvocation(AkkaRpcActor.java:307)

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcMessage(AkkaRpcActor.java:222)

at org.apache.flink.runtime.rpc.akka.FencedAkkaRpcActor.handleRpcMessage(FencedAkkaRpcActor.java:84)

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleMessage(AkkaRpcActor.java:168)

at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:24)

at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:20)

at scala.PartialFunction.applyOrElse(PartialFunction.scala:127)

at scala.PartialFunction.applyOrElse$(PartialFunction.scala:126)

at akka.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:20)

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:175)

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:176)

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:176)

at akka.actor.Actor.aroundReceive(Actor.scala:537)

at akka.actor.Actor.aroundReceive$(Actor.scala:535)

at akka.actor.AbstractActor.aroundReceive(AbstractActor.scala:220)

at akka.actor.ActorCell.receiveMessage(ActorCell.scala:579)

at akka.actor.ActorCell.invoke(ActorCell.scala:547)

at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:270)

at akka.dispatch.Mailbox.run(Mailbox.scala:231)

at akka.dispatch.Mailbox.exec(Mailbox.scala:243)

at java.util.concurrent.ForkJoinTask.doExec(ForkJoinTask.java:289)

at java.util.concurrent.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1056)

at java.util.concurrent.ForkJoinPool.runWorker(ForkJoinPool.java:1692)

at java.util.concurrent.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:157)

Caused by: java.util.concurrent.TimeoutException: Timeout to receive messages in RecordListener

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.readChangeRecords(OceanBaseRichSourceFunction.java:374)

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.run(OceanBaseRichSourceFunction.java:158)

at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:110)

at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:67)

at org.apache.flink.streaming.runtime.tasks.SourceStreamTask$LegacySourceFunctionThread.run(SourceStreamTask.java:333)

2023-11-06 09:24:29,917 INFO org.apache.flink.runtime.dispatcher.StandaloneDispatcher [] - Job 80b32cfc9550315fc526758d2f0889ee has been registered for cleanup in the JobResultStore after reaching a terminal state.

2023-11-06 09:24:29,918 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Stopping the JobMaster for job 'collect' (80b32cfc9550315fc526758d2f0889ee).

2023-11-06 09:24:29,921 INFO org.apache.flink.runtime.checkpoint.StandaloneCompletedCheckpointStore [] - Shutting down

2023-11-06 09:24:29,921 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Disconnect TaskExecutor localhost:6704-895fc4 because: Stopping JobMaster for job 'collect' (80b32cfc9550315fc526758d2f0889ee).

2023-11-06 09:24:29,921 INFO org.apache.flink.runtime.jobmaster.slotpool.DefaultDeclarativeSlotPool [] - Releasing slot [37866fdec06ab581c002006d4adbe2e7].

2023-11-06 09:24:29,922 INFO org.apache.flink.runtime.jobmaster.JobMaster [] - Close ResourceManager connection 5534fcf9e4e5b659aeb6cb8ddcc2608c: Stopping JobMaster for job 'collect' (80b32cfc9550315fc526758d2f0889ee).

2023-11-06 09:24:29,922 INFO org.apache.flink.runtime.resourcemanager.StandaloneResourceManager [] - Disconnect job manager
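
One thing that may help narrow this down is comparing the timestamps in the Flink log with the 'connect.timeout' value in the table definition. The small Python sketch below does that. The timestamps are copied from the log lines where the source task switched to RUNNING and then to FAILED. It assumes that '300000' is interpreted as milliseconds. The helper name `elapsed_ms` is made up for this illustration.

```python
from datetime import datetime, timedelta

# Timestamps copied from the Flink log in this post.
RUNNING_AT = "2023-11-06 09:19:29,870"  # task switched from INITIALIZING to RUNNING
FAILED_AT = "2023-11-06 09:24:29,899"   # task switched from RUNNING to FAILED

# 'connect.timeout' from the CREATE TABLE statement (assumed to be milliseconds).
CONNECT_TIMEOUT_MS = 300000


def elapsed_ms(start: str, end: str) -> int:
    """Return the whole milliseconds between two Flink log timestamps."""
    fmt = "%Y-%m-%d %H:%M:%S,%f"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return delta // timedelta(milliseconds=1)


gap = elapsed_ms(RUNNING_AT, FAILED_AT)
print(f"RUNNING -> FAILED: {gap} ms")
print(f"difference from connect.timeout: {gap - CONNECT_TIMEOUT_MS} ms")
```

The gap is 300029 ms, only 29 ms more than the configured timeout. That looks consistent with the source receiving no message at all from oblogproxy for the whole timeout window, rather than the connection dropping partway through. If so, the oblogproxy side is probably the more informative place to look for the matching time range.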