17:09:51.480 [Legacy Source Thread - Source: flight_info (1/1)#0] ERROR com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction - Read snapshot from table `ob_db_psg_data_center_test`.`market_flight_info` failed

java.sql.SQLException: Operation not allowed after ResultSet closed

at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:129) ~[mysql-connector-java-8.0.28.jar:8.0.28]

at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:97) ~[mysql-connector-java-8.0.28.jar:8.0.28]

at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:89) ~[mysql-connector-java-8.0.28.jar:8.0.28]

at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:63) ~[mysql-connector-java-8.0.28.jar:8.0.28]

at com.mysql.cj.jdbc.result.ResultSetImpl.checkClosed(ResultSetImpl.java:485) ~[mysql-connector-java-8.0.28.jar:8.0.28]

at com.mysql.cj.jdbc.result.ResultSetImpl.next(ResultSetImpl.java:1802) ~[mysql-connector-java-8.0.28.jar:8.0.28]

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.lambda$readSnapshotRecordsByTable$1(OceanBaseRichSourceFunction.java:273) ~[flink-connector-oceanbase-cdc-2.4.0.jar:2.4.0]

at io.debezium.jdbc.JdbcConnection.query(JdbcConnection.java:555) ~[debezium-core-1.9.7.Final.jar:1.9.7.Final]

at io.debezium.jdbc.JdbcConnection.query(JdbcConnection.java:496) ~[debezium-core-1.9.7.Final.jar:1.9.7.Final]

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.readSnapshotRecordsByTable(OceanBaseRichSourceFunction.java:269) ~[flink-connector-oceanbase-cdc-2.4.0.jar:2.4.0]

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.lambda$readSnapshotRecords$0(OceanBaseRichSourceFunction.java:250) ~[flink-connector-oceanbase-cdc-2.4.0.jar:2.4.0]

at java.lang.Iterable.forEach(Iterable.java:75) ~[?:1.8.0_291]

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.readSnapshotRecords(OceanBaseRichSourceFunction.java:247) ~[flink-connector-oceanbase-cdc-2.4.0.jar:2.4.0]

at com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction.run(OceanBaseRichSourceFunction.java:162) ~[flink-connector-oceanbase-cdc-2.4.0.jar:2.4.0]

at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:116) ~[flink-streaming-java_2.12-1.14.2.jar:1.14.2]

at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:73) ~[flink-streaming-java_2.12-1.14.2.jar:1.14.2]

at org.apache.flink.streaming.runtime.tasks.SourceStreamTask$LegacySourceFunctionThread.run(SourceStreamTask.java:323) ~[flink-streaming-java_2.12-1.14.2.jar:1.14.2]

17:09:53.084 [log-proxy-client-worker-1-thread-1] ERROR com.oceanbase.clogproxy.client.connection.ClientHandler - LogProxy refused handshake request: code: 1

message: "Failed to create oblogreader"

17:09:53.085 [log-proxy-client-worker-1-thread-1] ERROR com.oceanbase.clogproxy.client.connection.ClientHandler - Exception occurred ClientId: 10.1.37.174_58468_1691658568_1_loongair_ob_cx: rootserver_list=10.1.129.154:2882:2881;10.1.129.155:2882:2881;10.1.129.156:2882:2881, cluster_id=, cluster_user=uathsd@loongair_ob_cx#loongair_ssd:3, cluster_password=******, , sys_user=, sys_password=******, tb_white_list=loongair_ob_cx.*.*, tb_black_list=|, start_timestamp=0, start_timestamp_us=0, timezone=+00:00, working_mode=memory, with LogProxy: 10.1.129.157:2983

com.oceanbase.clogproxy.client.exception.LogProxyClientException: LogProxy refused handshake request: code: 1

message: "Failed to create oblogreader"

at com.oceanbase.clogproxy.client.connection.ClientHandler.handleErrorResponse(ClientHandler.java:228) ~[oblogclient-logproxy-1.1.0.jar:?]

at com.oceanbase.clogproxy.client.connection.ClientHandler.channelRead(ClientHandler.java:158) ~[oblogclient-logproxy-1.1.0.jar:?]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:286) ~[netty-handler-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:166) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:722) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:658) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:584) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:496) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:995) ~[netty-common-4.1.77.Final.jar:4.1.77.Final]

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74) ~[netty-common-4.1.77.Final.jar:4.1.77.Final]

at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_291]

17:09:54.585 [Thread-15] ERROR com.ververica.cdc.connectors.oceanbase.source.OceanBaseRichSourceFunction - LogProxyClient exception

com.oceanbase.clogproxy.client.exception.LogProxyClientException: LogProxy refused handshake request: code: 1

message: "Failed to create oblogreader"

at com.oceanbase.clogproxy.client.connection.ClientHandler.handleErrorResponse(ClientHandler.java:228) ~[oblogclient-logproxy-1.1.0.jar:?]

at com.oceanbase.clogproxy.client.connection.ClientHandler.channelRead(ClientHandler.java:158) ~[oblogclient-logproxy-1.1.0.jar:?]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:286) ~[netty-handler-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:166) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:722) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:658) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:584) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:496) ~[netty-transport-4.1.77.Final.jar:4.1.77.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:995) ~[netty-common-4.1.77.Final.jar:4.1.77.Final]

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74) ~[netty-common-4.1.77.Final.jar:4.1.77.Final]

at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_291]

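The `Operation not allowed after ResultSet closed` error above is something the current abnormal-exit logic triggers, so it can be ignored for now. The real problem is the `Failed to create oblogreader` error below it. First confirm that your logproxy and OB versions are compatible; if they are, check the logproxy run directory for error messages.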

For the version compatibility matrix, see the release notes here: Releases · oceanbase/oblogproxy · GitHub

Am I opening it the wrong way? Why isn't it a text file after I extract it?

I'll ask the DBA to export it again. Sorry, I hadn't noticed that either.

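The logproxy log doesn't show the problem. Please also provide the liboblog and logreader logs. They are under `run/{client-id}/` in the logproxy directory, where the client id is the one reported in the Flink log, something like `10.1.37.174_58468_1691658568_1_loongair_ob_cx`.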
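
To make the lookup concrete, here is a minimal sketch that pulls the client id out of a Flink log line and builds the matching `run/{client-id}/` path. The regex is based only on the `ClientId: ...:` fragment visible in the log above. The function names and the install path `/usr/local/oblogproxy` are assumptions for illustration, so substitute your own logproxy directory.

```python
import re
from pathlib import Path
from typing import Optional

# Matches the "ClientId: <id>:" fragment printed by the LogProxy client in the Flink log.
CLIENT_ID_RE = re.compile(r"ClientId:\s*([^\s:]+):")


def extract_client_id(log_line: str) -> Optional[str]:
    """Return the client id found in a Flink log line, or None if there is none."""
    match = CLIENT_ID_RE.search(log_line)
    return match.group(1) if match else None


def client_run_dir(logproxy_home: str, client_id: str) -> Path:
    """Build <logproxy_home>/run/<client_id>, the directory named in the reply above."""
    return Path(logproxy_home) / "run" / client_id


flink_line = (
    "ERROR com.oceanbase.clogproxy.client.connection.ClientHandler - "
    "Exception occurred ClientId: 10.1.37.174_58468_1691658568_1_loongair_ob_cx: "
    "rootserver_list=10.1.129.154:2882:2881"
)
client_id = extract_client_id(flink_line)
if client_id is not None:
    # "/usr/local/oblogproxy" is a hypothetical install location.
    print(client_run_dir("/usr/local/oblogproxy", client_id))
```

The liboblog and logreader logs for that session should then be somewhere under the printed directory.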

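One more thing to watch for while testing: the client must actively close its connection every time it exits, otherwise the logreader process will not terminate. I suggest first checking the machine that hosts logproxy for stale processes on port 2983, and cleaning them up if there are any.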
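
One way to do that check is to run `ss -tnp` on the logproxy host and filter for port 2983. The sketch below only parses text that has already been captured, and it assumes the usual iproute2 column layout (State, Recv-Q, Send-Q, Local Address:Port, Peer Address:Port, Process). The sample input is made up for illustration and is not output from this cluster.

```python
from typing import List, NamedTuple


class PortUser(NamedTuple):
    state: str
    local: str
    peer: str
    process: str


def connections_on_port(ss_output: str, port: int = 2983) -> List[PortUser]:
    """Return the connections whose local port equals `port` from `ss -tnp` text."""
    found = []
    for line in ss_output.splitlines():
        fields = line.split()
        # Data rows look like: State Recv-Q Send-Q Local:Port Peer:Port [Process]
        if len(fields) < 5 or not fields[1].isdigit():
            continue  # header row or unrelated line
        local, peer = fields[3], fields[4]
        if local.rsplit(":", 1)[-1] == str(port):
            found.append(PortUser(fields[0], local, peer, " ".join(fields[5:])))
    return found


# Made-up sample; process names and pids are placeholders.
sample = """State Recv-Q Send-Q Local Address:Port Peer Address:Port Process
ESTAB 0 0 10.1.129.157:2983 10.1.37.174:58468 users:(("example-proc",pid=1111,fd=8))
ESTAB 0 0 10.1.129.157:22 10.1.37.174:50022 users:(("sshd",pid=1200,fd=4))
"""
for conn in connections_on_port(sample):
    print(conn.state, conn.peer, conn.process)
```

Any connection listed for a test client that has already exited is a candidate for cleanup.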

Log file created at: 2023/08/10 17:18:52

Running on machine: ob-oms

Running duration (h:mm:ss): 0:00:00

Log line format: [IWEF]yyyymmdd hh:mm:ss.uuuuuu threadid file:line] msg

E20230810 17:18:52.336222 47119 mysql_protocol.cpp:239] Failed to query observer:Table 'oceanbase.__all_virtual_server_clog_stat' doesn't exist, unexpected column count: 0

E20230810 17:18:52.336532 47119 clog_meta_routine.cpp:45] Failed to check the existence of svr_min_log_timestamp column in __all_virtual_server_clog_stat, disable clog check

E20230810 17:18:59.682936 47324 comm.cpp:357] Not found channel of peer:id:12548437079696343048, fd:8, addr:2921660682, port:7588, just close it

E20230810 17:18:59.683066 47324 sender_routine.cpp:171] Failed to write LogMessage to client: id:12548437079696343048, fd:8, addr:2921660682, port:7588

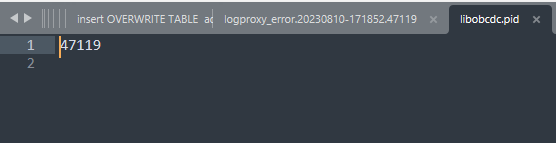

-------- The id under the run folder is 47119. The above is the complete content of logproxy_error.20230810-171852.47119.
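
For anyone filtering these files by level or thread, the header line `Log line format: [IWEF]yyyymmdd hh:mm:ss.uuuuuu threadid file:line] msg` can be turned into a small parser. This is a rough sketch based only on that header and the lines shown here; `GlogEntry` and `parse_glog_line` are names made up for the example.

```python
import re
from datetime import datetime
from typing import NamedTuple, Optional

# [IWEF]yyyymmdd hh:mm:ss.uuuuuu threadid file:line] msg
GLOG_RE = re.compile(
    r"^(?P<level>[IWEF])(?P<date>\d{8}) (?P<time>\d{2}:\d{2}:\d{2}\.\d{6})\s+"
    r"(?P<thread>\d+) (?P<file>[^:\s]+):(?P<line>\d+)\] ?(?P<msg>.*)$"
)


class GlogEntry(NamedTuple):
    level: str
    timestamp: datetime
    thread_id: int
    source: str
    message: str


def parse_glog_line(line: str) -> Optional[GlogEntry]:
    """Parse one log line; return None for headers and continuation lines."""
    m = GLOG_RE.match(line.strip())
    if m is None:
        return None
    ts = datetime.strptime(m["date"] + " " + m["time"], "%Y%m%d %H:%M:%S.%f")
    source = m["file"] + ":" + m["line"]
    return GlogEntry(m["level"], ts, int(m["thread"]), source, m["msg"])


entry = parse_glog_line(
    "E20230810 17:18:59.683066 47324 sender_routine.cpp:171] "
    "Failed to write LogMessage to client: id:12548437079696343048, "
    "fd:8, addr:2921660682, port:7588"
)
if entry is not None:
    print(entry.level, entry.thread_id, entry.source)
```

Lines that do not match, such as `Log file created at: ...`, come back as `None`, so they are easy to skip.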

All of the logs are here:

Link: Baidu Netdisk, extraction code: pkep


We can't access Baidu Netdisk... Could you paste the most recent error log file here instead?
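
If there are many error logs, the file name itself can be used to pick the newest one. The sketch below assumes every error log follows the `logproxy_error.<yyyymmdd-hhmmss>.<pid>` pattern seen in `logproxy_error.20230810-171852.47119`. The helper name and the second file name in the demo are made up.

```python
import re
from typing import Iterable, Optional

# logproxy_error.<yyyymmdd-hhmmss>.<pid>, as in logproxy_error.20230810-171852.47119
NAME_RE = re.compile(r"^logproxy_error\.(\d{8}-\d{6})\.(\d+)$")


def latest_error_log(names: Iterable[str]) -> Optional[str]:
    """Return the file name with the newest embedded timestamp, or None."""
    stamped = []
    for name in names:
        m = NAME_RE.match(name)
        if m:
            # Fixed-width timestamps sort correctly as plain strings.
            stamped.append((m.group(1), name))
    return max(stamped)[1] if stamped else None


# In practice the names would come from os.listdir() on the logproxy log directory.
names = [
    "logproxy_error.20230810-171852.47119",
    "logproxy_error.20230809-090000.40001",  # made-up older file
    "unrelated.txt",
]
print(latest_error_log(names))
```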