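If possible, try restarting. We suspect the problem is related to the storage device; please send us the storage device details for this environment.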

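Restarting fixes it, but the problem seems to come back after a while. The current environment is as follows.

It is a three-node OceanBase (OB) cluster; the environment details are below:

The operating system runs on x86: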

uname -a

Linux obdb1 3.10.0-693.el7.x86_64 #1 SMP Thu Jul 6 19:56:57 EDT 2017 x86_64 x86_64 x86_64 GNU/Linux

cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.4 (Maipo)

lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 6.6T 0 disk

├─sda1 8:1 0 200M 0 part /boot/efi

├─sda2 8:2 0 200M 0 part /boot

└─sda3 8:3 0 6.6T 0 part

├─rhel-root 253:0 0 300G 0 lvm /

├─rhel-swap 253:1 0 128G 0 lvm [SWAP]

└─rhel-gpdata 253:5 0 6.1T 0 lvm /data/soft

sdb 8:16 0 1.8T 0 disk

├─vg_ob-lv_pro 253:2 0 300G 0 lvm /data/oceanbase/product

├─vg_ob-lv_red 253:3 0 200G 0 lvm /data/oceanbase/redolog

└─vg_ob-lv_sto 253:4 0 1.2T 0 lvm /data/oceanbase/storage

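The log disk (/data/oceanbase/redolog) and the data disk (/data/oceanbase/storage) are LVM volumes built on IBM SSDs; per the lsblk output above, the vg_ob volume group is split into three logical volumes (lv_pro, lv_red, lv_sto).

Disk usage per mount point: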

[admin@obdb1 ~]$ df -mh

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/rhel-root 300G 68G 233G 23% /

devtmpfs 63G 0 63G 0% /dev

tmpfs 63G 0 63G 0% /dev/shm

tmpfs 63G 114M 63G 1% /run

tmpfs 63G 0 63G 0% /sys/fs/cgroup

/dev/sda2 194M 113M 81M 59% /boot

/dev/sda1 200M 9.8M 191M 5% /boot/efi

/dev/mapper/vg_ob-lv_pro 300G 228G 73G 76% /data/oceanbase/product

/dev/mapper/vg_ob-lv_red 200G 180G 20G 91% /data/oceanbase/redolog

/dev/mapper/vg_ob-lv_sto 1.2T 1.1T 120G 91% /data/oceanbase/storage

/dev/mapper/rhel-gpdata 6.2T 34M 6.2T 1% /data/soft

tmpfs 13G 0 13G 0% /run/user/6100

[admin@obdb1 log]$ cd /data/oceanbase/redolog/ob/obcluster/clog/

[admin@obdb1 clog]$ du -sh *

52G log_pool

13G tenant_1

12G tenant_1001

104G tenant_1002

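Please verify with the C++ code below. Usage:

- Copy the code below into a file named test.cpp; test.cpp must be located under /data/oceanbase/redolog/;

- Compile with g++ (C++11 support is required): g++ -g -O2 test.cpp -lpthread -std=c++11. When the command completes, it produces the executable a.out;

- Run ulimit -c unlimited;

- Run ./a.out > /tmp/test.log. If the run fails, a core file is generated; its expected location is shown by 'cat /proc/sys/kernel/core_pattern';

- If the program runs to completion without errors, please contact the support team.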

#include <stdint.h>

#include <stdlib.h>

#include <stdio.h>

#include <fcntl.h>

#include <string.h>

#include <unistd.h>

#include <iostream>

#include <errno.h>

#include <pthread.h>

#include <string>

#include <assert.h>

using namespace std;

int64_t FILE_SIZE = 100ul * 1024 * 1024 * 1024; // 100G

int64_t WRITE_SIZE = 128; // must evenly divide 4 KiB (ALIGN_SIZE)

int64_t ALIGN_SIZE = 4096;

int log_write_flag = 0;

int log_read_flag = 0;

pthread_mutex_t mutex;

void lock()

{

pthread_mutex_lock(&mutex);

}

void unlock()

{

pthread_mutex_unlock(&mutex);

}

class Writer {

public:

void init(const char *file_name);

void append(int64_t write_offset);

void close();

int64_t curr_offset_;

int fd_;

std::string file_name_;

char *write_buf_;

};

void Writer::init(const char *file_name)

{

write_buf_ = static_cast<char*>(aligned_alloc(ALIGN_SIZE, ALIGN_SIZE));

curr_offset_ = 0;

file_name_ = file_name;

fd_ = ::open(file_name_.c_str(), log_write_flag, 0666);

fallocate(fd_, 0, 0, FILE_SIZE);

int64_t remined_size = FILE_SIZE;

}

void Writer::append(int64_t write_offset)

{

int64_t curr_write_size = WRITE_SIZE;

int64_t start_offset = write_offset / ALIGN_SIZE * ALIGN_SIZE;

int64_t valid_buff_len = (write_offset + curr_write_size) % ALIGN_SIZE;

if (valid_buff_len == 0) {

valid_buff_len = ALIGN_SIZE;

}

memset(write_buf_, 'x', valid_buff_len);

if (valid_buff_len != ALIGN_SIZE) {

memset(write_buf_ + valid_buff_len, 'y', 4096 - valid_buff_len);

}

printf("pwrite begin, write_offset:%ld, start_offset:%ld, valid_buff_len:%ld\n", write_offset, start_offset, valid_buff_len);

usleep(10);

::pwrite(fd_, write_buf_, ALIGN_SIZE, start_offset);

printf("pwrite success , write_offset:%ld, start_offset:%ld, valid_buff_len:%ld\n", write_offset, start_offset, valid_buff_len);

lock();

curr_offset_ += curr_write_size;

unlock();

}

void Writer::close()

{

::close(fd_);

}

Writer writer;

void write_func()

{

while (1) {

lock();

int64_t curr_offset = writer.curr_offset_;

unlock();

if (curr_offset < FILE_SIZE - 4096) {

writer.append(curr_offset);

} else {

break;

}

}

writer.close();

}

class Reader {

public:

void init(const char *file_name);

void read(int64_t read_offset);

int fd_;

char *read_buf_;

char *right_buf_;

int64_t last_read_offset_;

std::string file_name_;

char *retry_buf_;

};

void Reader::init(const char *file_name)

{

read_buf_ = static_cast<char*>(aligned_alloc(ALIGN_SIZE, ALIGN_SIZE));

right_buf_ = static_cast<char*>(aligned_alloc(ALIGN_SIZE, ALIGN_SIZE));

retry_buf_ = static_cast<char*>(aligned_alloc(ALIGN_SIZE, ALIGN_SIZE));

memset(right_buf_, 'x', ALIGN_SIZE);

memset(retry_buf_, 'r', ALIGN_SIZE);

file_name_ = file_name;

last_read_offset_ = 0;

fd_ = ::open(file_name_.c_str(), log_read_flag);

}

void Reader::read(int64_t read_offset)

{

int64_t start_offset = (read_offset) / ALIGN_SIZE * ALIGN_SIZE;

int64_t valid_buff_len = read_offset % ALIGN_SIZE;

// memset(read_buf_, 'b', ALIGN_SIZE);

printf("read begin, read_offset:%ld, start_offset:%ld, valid_buff_len:%ld\n", read_offset, start_offset, valid_buff_len);

usleep(3);

::pread(fd_, read_buf_, ALIGN_SIZE, start_offset);

printf("read success, read_offset:%ld, start_offset:%ld, valid_buff_len:%ld\n", read_offset, start_offset, valid_buff_len);

if (0 != memcmp(read_buf_, right_buf_, valid_buff_len)) {

::pread(fd_, retry_buf_, ALIGN_SIZE, start_offset);

int i = 1;

while (0 != memcmp(retry_buf_, right_buf_, valid_buff_len)) {

{

printf("second read failed, offset:%ld, ptr:%p, retry_count: %d\n", start_offset, retry_buf_, i);

::pread(fd_, retry_buf_, ALIGN_SIZE, start_offset);

i++;

}

}

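printf("memcmp failed, offset:%ld, read_buf:%p, retry_buf:%p\n", start_offset, read_buf_, retry_buf_);

fflush(stdout); // stdout is redirected to a file and buffered; flush so the last lines are not lost on abort()

assert(false);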

} else {

last_read_offset_ += valid_buff_len;

}

}

Reader reader;

void* read_func(void *)

{

while (1) {

lock();

int64_t curr_offset = writer.curr_offset_;

unlock();

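if (curr_offset >= FILE_SIZE - 4096) {

break; // the writer stops at this offset, so there is nothing left to verify

} else if (curr_offset > 4096) {

reader.read(curr_offset);

}

}

return NULL; // a void* thread function must return a value

}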

int main(int argc, char **argv)

{

pthread_mutex_init(&mutex, NULL);

std::string log_dir_str = "ob_unittest";

std::string rm_cmd = "rm -rf " + log_dir_str;

std::string mk_cmd = "mkdir " + log_dir_str;

system(rm_cmd.c_str());

system(mk_cmd.c_str());

std::string testfile = log_dir_str + "/" + "testfile";

log_read_flag = O_RDONLY | O_DIRECT | O_SYNC;

log_write_flag = O_RDWR | O_CREAT | O_DIRECT | O_SYNC;

writer.init(testfile.c_str());

reader.init(testfile.c_str());

pthread_t ntid;

pthread_create(&ntid, NULL, read_func, NULL);

write_func();

pthread_join(ntid, NULL);

return 0;

}

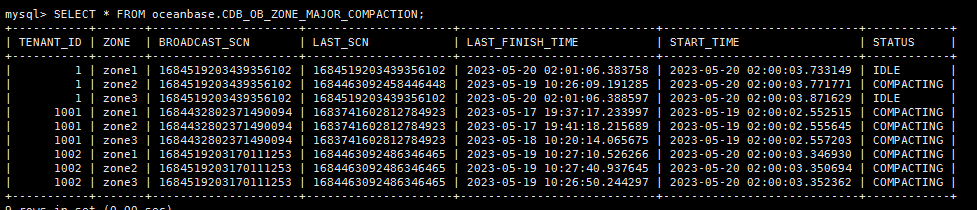

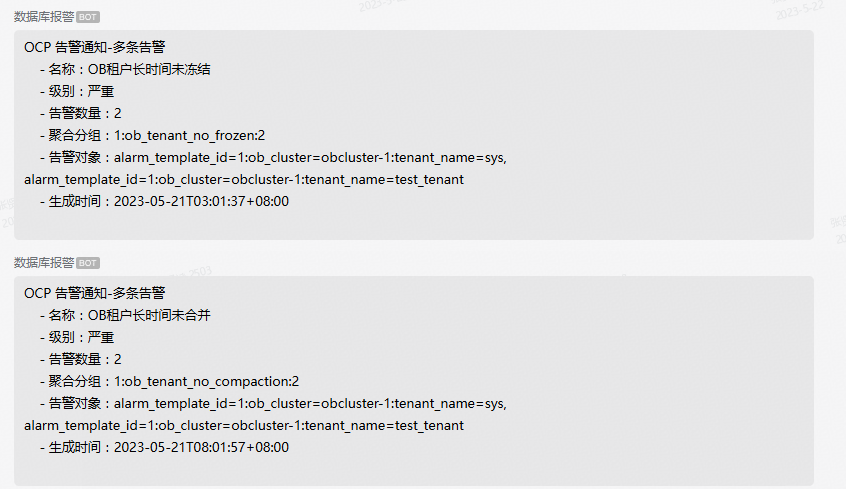

This may be a replica that hit a data checksum error.

The test steps and results are below; could you please help analyze them further:

[admin@obdb2 redolog]$ pwd

/data/oceanbase/redolog

[admin@obdb2 redolog]$ ll

total 8

drwxr-xr-x 3 admin admin 23 May 10 16:46 ob

-rw-r--r-- 1 admin admin 4891 May 19 21:21 test.cpp

[admin@obdb2 redolog]$ g++ -g -O2 test.cpp -lpthread -std=c++11

[admin@obdb2 redolog]$ ulimit -c unlimited

[admin@obdb2 redolog]$ ./a.out >/tmp/test.log

a.out: test.cpp:153: void Reader::read(int64_t): Assertion `false' failed.

Aborted (core dumped)

[admin@obdb2 redolog]$ ll

total 584

-rwxrwxr-x 1 admin admin 71800 May 19 21:32 a.out

-rw------- 1 admin admin 21704704 May 19 21:34 core.194044

drwxr-xr-x 3 admin admin 23 May 10 16:46 ob

drwxrwxr-x 2 admin admin 22 May 19 21:34 ob_unittest

-rw-r--r-- 1 admin admin 4891 May 19 21:21 test.cpp

[admin@obdb2 redolog]$ export TMOUT=0

[admin@obdb2 redolog]$ cat /proc/sys/kernel/core_pattern

core

[admin@obdb2 redolog]$ tar -zcvf test_result.tar.gz a.out core.194044 ob_unittest test.cpp /tmp/test.log

a.out

core.194044

ob_unittest/

ob_unittest/testfile

test.cpp

tar: Removing leading `/' from member names

/tmp/test.log

test_result.tar.gz (99.8 KB)

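The unit test uses minimal C++ code to simulate the log persistence and consumption logic of OceanBase 4.1. Based on the results, we are fairly confident that the problem lies in the storage device itself.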

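Temporary workarounds:

- Only a restart resolves it, but judging from the logs and core file you sent back, this IBM SSD is likely to hit the problem very frequently;

- Try an SSD from a different vendor.

Could the high usage of the /data/oceanbase/redolog mount point have anything to do with it?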

/dev/mapper/vg_ob-lv_red 200G 180G 20G 91% /data/oceanbase/redolog

Test results on another machine with identical hardware and lower disk usage:

-bash-4.2$ ./a.out >/tmp/test.log

a.out: test.cpp:153: void Reader::read(int64_t): Assertion `false' failed.

Aborted (core dumped)

-bash-4.2$ du -sh *

72K a.out

500K core.413914

101G ob_unittest

8.0K test.cpp

-bash-4.2$ vi /tmp/test.log

-bash-4.2$ cd ob_unittest/

-bash-4.2$ ll

total 104857604

-rw-rw-r-- 1 admin admin 107374182400 May 22 11:25 testfile

test_result.tar (2).gz (96.2 KB)

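Also, is there a way to check in advance whether an SSD is compatible?

Use this unit test: if it runs for a long time without the assertion failing, the disk is basically fine. You can also report the problem to IBM.

Are there any SSD vendors or models you recommend?

You could try the Intel P4510 or the SAMSUNG PM9A3.

Hello, we ran some more tests on this issue. We found that the test fails when the disks are configured as RAID 1 or RAID 10, but passes in JBOD mode. Do you have a recommended disk redundancy mode?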

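Test results so far:

1. With multiple disks configured as RAID 0, RAID 10, or RAID 1, the test program fails every time with the following error:

a.out: test.cpp:153: void Reader::read(int64_t): Assertion `false' failed.

Aborted (core dumped)

2. On a physical machine with the same hardware configuration, the program exits abnormally with the same error. In a virtual machine created on that physical machine, the behavior is as expected (the program keeps running for a long time without failing).

3. With a single disk in JBOD mode, the behavior is as expected (the program keeps running for a long time without failing).

4. All of our hosts currently attach their disks through a RAID controller card. In our tests the problem occurs whenever the RAID controller is used (a.out: test.cpp:153: void Reader::read(int64_t): Assertion `false' failed. Aborted (core dumped)) and does not occur without it. So we would like to ask: does the product support RAID controller cards, are there requirements on how the controller is configured, and can you provide configuration documentation or a recommended server configuration?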

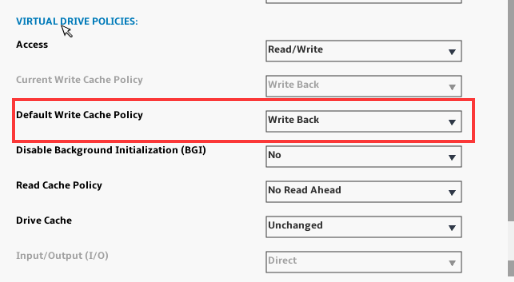

Check the RAID controller's cache mode: is it write back or write through? If it is write through, try switching to write back.

In our most recent RAID 0 test the cache mode was write back, and it still failed.

Sorry, I had it backwards: it should be changed to write through. Write back means the write returns as soon as the data reaches the cache.

OK, we'll test that.

After switching to write through, test.cpp runs normally. Thanks!