[Environment] Production

[OB or other component] OceanBase Community Edition

[Version] OceanBase Community Edition 4.1.0

[Problem description]

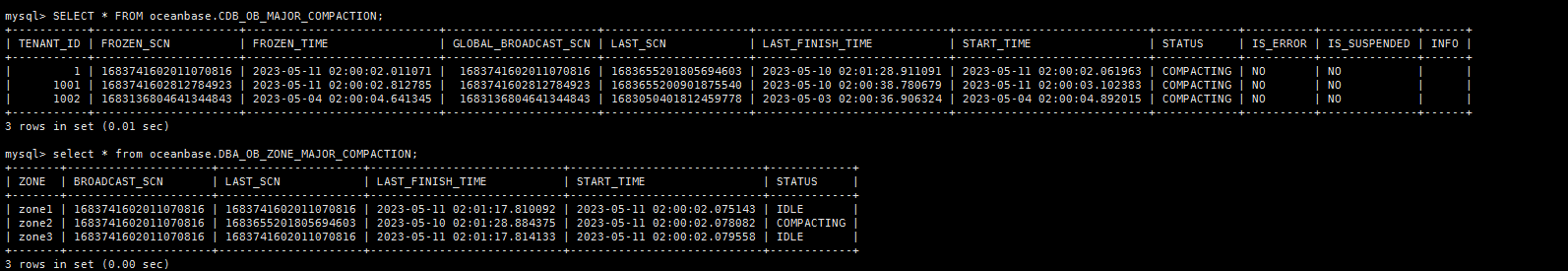

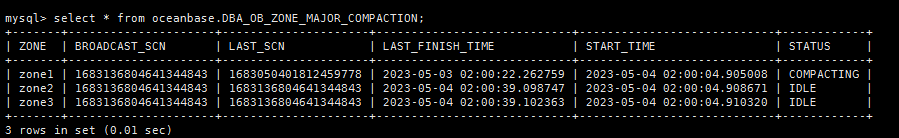

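The automatic merge (major compaction) that the sys tenant started at 02:00 on May 11 has not completed, and the automatic merge that the regular tenant started at 02:00 on May 4 has not completed either.

We have received your question; a colleague will reply shortly.

Please check the logs:

grep "check merge progress success" rootservice.log | tail -10

grep 'WARN ' observer.log | grep merge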

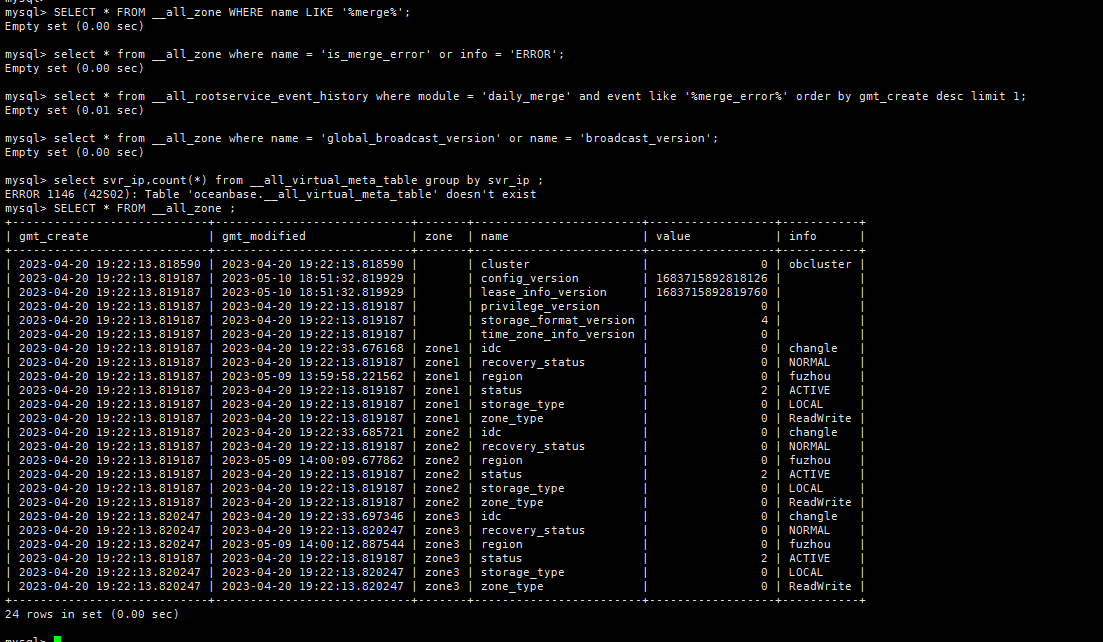

Then run the following queries and share the results:

SELECT * FROM __all_zone WHERE name LIKE '%merge%';

select * from __all_zone where name = "is_merge_error" or info = "ERROR";

select * from __all_rootservice_event_history where module = "daily_merge" and event like "%merge_error%" order by gmt_create desc limit 1;

select * from __all_zone where name = "global_broadcast_version" or name = "broadcast_version";

select svr_ip,count(*) from __all_virtual_meta_table group by svr_ip ;

[admin@obdb1 log]$ grep "check merge progress success" rootservice.log* | tail -10

[admin@obdb1 log]$ grep "WARN" observer.log* | grep merge

[admin@obdb2 log]$ grep "check merge progress success" rootservice.log* | tail -10

[admin@obdb2 log]$ grep "WARN" observer.log* | grep merge

[admin@obdb3 log]$ grep "check merge progress success" rootservice.log* | tail -10

[admin@obdb3 log]$ grep "WARN" observer.log* | grep merge

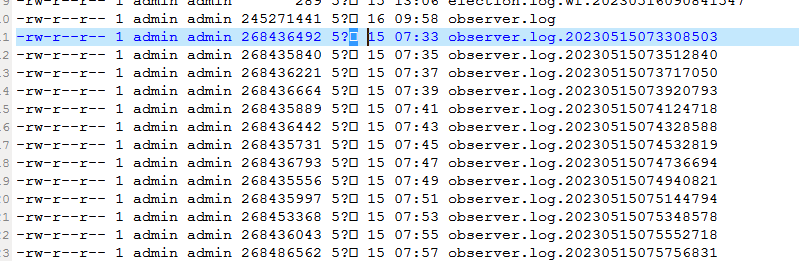

Note: observer.log currently only contains entries from May 15 onward.

Please run the following and share the results:

select * from GV$OB_COMPACTION_DIAGNOSE_INFO;

select * from GV$OB_COMPACTION_PROGRESS;

select * from __all_virtual_tablet_meta_table where tenant_id = 1002 and compaction_scn < 1683136804641344843;
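For context on the threshold in the last query: assuming SCN values in OceanBase 4.x are nanosecond Unix timestamps (an assumption, not something stated in this thread), the value 1683136804641344843 can be decoded into a wall-clock time. A minimal sketch; the function name `scn_to_datetime` and the UTC+8 offset are illustrative choices, not part of OceanBase:

```python
from datetime import datetime, timedelta, timezone

# Assumption: the cluster runs in China Standard Time (UTC+8).
CST = timezone(timedelta(hours=8))

def scn_to_datetime(scn, tz=CST):
    """Decode an SCN, assumed to be nanoseconds since the Unix epoch."""
    seconds, nanos = divmod(scn, 1_000_000_000)
    # datetime only resolves microseconds, so sub-microsecond digits are dropped.
    return datetime.fromtimestamp(seconds, tz).replace(microsecond=nanos // 1000)

if __name__ == "__main__":
    # compaction_scn threshold used in the query above
    print(scn_to_datetime(1683136804641344843).isoformat())
```

Under that assumption the value decodes to 02:00:04 on May 4 (UTC+8), which lines up with the regular tenant's merge reported as started at 02:00 on May 4. The query would therefore list the tablets of tenant 1002 that have not yet reached that merge's snapshot.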

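It looks like the standby-read (weak-read) timestamp has not advanced, so the minor compaction (dump) that must run before the merge cannot execute.

We will ask a colleague from the transaction team to confirm.

1.

SELECT gmt_create,svr_ip,svr_port,event,name3,value3 FROM __all_server_event_history WHERE module="ELECTION" AND value1=1002 AND value2=1 ORDER BY gmt_create;

Use the leader shown in the result to identify which node it is, and please send back the query result as well.

Then collect rootservice.log from that node.

2. Collect the observer logs from these two servers: 10.168.89.11 and 10.168.89.12.

3. select * from __all_virtual_dag_warning_history where tenant_id=1002;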

查询结果.txt (query results; 4.4 KB)

election_89.13.tar.gz (2.6 MB)

election_89.11.tar.gz (1.4 MB)

observer_89_11.tar.gz (3.1 MB)

election_89.12.tar.gz (240.2 KB)

observer_89_12.tar.gz (2.7 MB)

observer.log is large, so for now I am providing the latest 50,000 lines. It currently contains quite a few errors:

Unexpected internal error happen, please checkout the internal errcode(errcode=-4103, file="log_iterator_impl.h", line_no=700, info="verify accumlate checksum failed")

Log out of disk space(msg="log disk space is almost full", ret=-4264, total_size(MB)=14745, used_size(MB)=12945, used_percent(%)=87, warn_size(MB)=11796, warn_percent(%)=80, limit_size(MB)=14008, limit_percent(%)=95, maximum_used_size(MB)=12945, maximum_log_stream=1, oldest_log_stream=1, oldest_scn={val:1683731979634087599})
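The figures in the "log disk space is almost full" message can be cross-checked with a little arithmetic. The sketch below only uses numbers copied from that message; the truncating integer division is an assumption that happens to reproduce the 87, 80, and 95 shown in the log:

```python
# Figures copied from the "log disk space is almost full" message (all in MB).
total_mb = 14745
used_mb = 12945
warn_mb = 11796
limit_mb = 14008

def percent_of_total(size_mb, total=total_mb):
    """Integer percentage, truncated (assumed to match how the log prints it)."""
    return size_mb * 100 // total

if __name__ == "__main__":
    print("used  %:", percent_of_total(used_mb))
    print("warn  %:", percent_of_total(warn_mb))
    print("limit %:", percent_of_total(limit_mb))
    print("MB above the warning line:", used_mb - warn_mb)
    print("MB left before the limit:", limit_mb - used_mb)
```

Usage is already 1149 MB past the 80% warning line and 1063 MB short of the 95% limit. Note that the total here is about 14.4 GB, so this looks like a per-tenant log disk quota rather than the size of the underlying file system.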

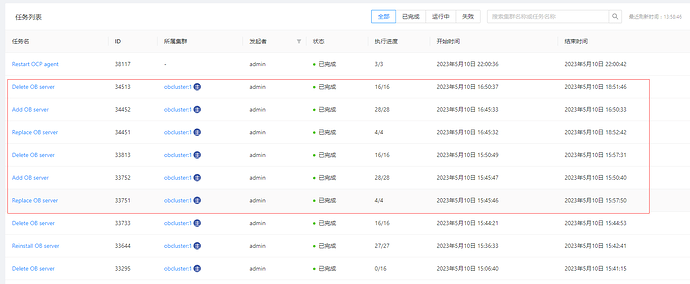

On May 10 we performed an observer replacement: first 89.12 was replaced with 89.14, and then 89.14 was replaced back with 89.12.

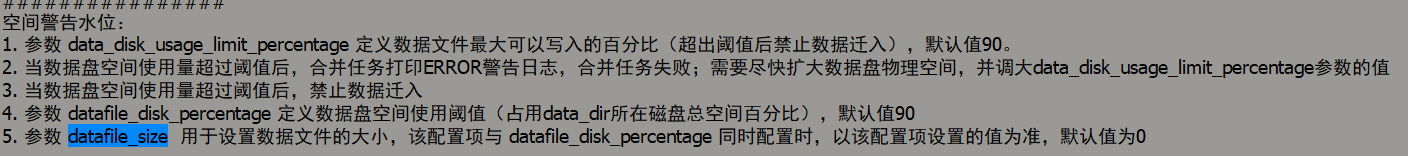

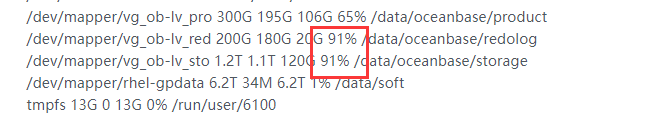

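Please check whether the log disk on node 12 is full; run df -h and take a look.

Please also run select * from __all_server;

If the directory is full, check whether anything other than OceanBase files is taking up space.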

[admin@obdb2 log]$ df -mh

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/rhel-root 300G 7.6G 293G 3% /

devtmpfs 63G 0 63G 0% /dev

tmpfs 63G 0 63G 0% /dev/shm

tmpfs 63G 98M 63G 1% /run

tmpfs 63G 0 63G 0% /sys/fs/cgroup

/dev/sda2 194M 113M 81M 59% /boot

/dev/sda1 200M 9.8M 191M 5% /boot/efi

/dev/mapper/vg_ob-lv_pro 300G 195G 106G 65% /data/oceanbase/product

/dev/mapper/vg_ob-lv_red 200G 180G 20G 91% /data/oceanbase/redolog

/dev/mapper/vg_ob-lv_sto 1.2T 1.1T 120G 91% /data/oceanbase/storage

/dev/mapper/rhel-gpdata 6.2T 34M 6.2T 1% /data/soft

tmpfs 13G 0 13G 0% /run/user/6100

tmpfs 13G 0 13G 0% /run/user/0

[admin@obdb2 log]$ cd /data/oceanbase/redolog/ob/obcluster/

[admin@obdb2 obcluster]$ ls

clog etc2

[admin@obdb2 obcluster]$ du -sh *

180G clog

8.0K etc2

[admin@obdb2 obcluster]$ cd clog/

[admin@obdb2 clog]$ du -sh *

97G log_pool

3.7G tenant_1

13G tenant_1001

67G tenant_1002
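To see at a glance where the space in the clog directory goes, the `du -sh *` figures above can be turned into shares of the total. A small sketch using only those four numbers; the helper name `usage_shares` is made up for illustration:

```python
# Sizes in GB as printed by `du -sh *` in the clog directory above.
clog_usage_gb = {
    "log_pool": 97.0,
    "tenant_1": 3.7,
    "tenant_1001": 13.0,
    "tenant_1002": 67.0,
}

def usage_shares(usage):
    """Return each entry's share of the summed usage, in percent."""
    total = sum(usage.values())
    return {name: round(size * 100 / total, 1) for name, size in usage.items()}

if __name__ == "__main__":
    print("sum (GB):", round(sum(clog_usage_gb.values()), 1))
    shares = usage_shares(clog_usage_gb)
    for name in sorted(shares, key=shares.get, reverse=True):
        print(f"{name:12s} {shares[name]:5.1f}%")
```

The entries add up to roughly the 180G that `du` reports for clog as a whole. log_pool accounts for a bit over half of it, and tenant_1002 is by far the largest of the tenant directories.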

mysql> select * from __all_server;

+----------------------------+----------------------------+--------------+----------+----+-------+------------+-----------------+--------+-----------------------+-------------------------------------------------------------------------------------------+-----------+--------------------+--------------+----------------+

| gmt_create | gmt_modified | svr_ip | svr_port | id | zone | inner_port | with_rootserver | status | block_migrate_in_time | build_version | stop_time | start_service_time | first_sessid | with_partition |

+----------------------------+----------------------------+--------------+----------+----+-------+------------+-----------------+--------+-----------------------+-------------------------------------------------------------------------------------------+-----------+--------------------+--------------+----------------+

| 2023-04-20 19:22:06.756554 | 2023-04-20 19:25:52.897573 | 10.168.89.11 | 2982 | 1 | zone1 | 2981 | 1 | ACTIVE | 0 | 4.1.0.0_100000202023040520-0765e69043c31bf86e83b5d618db0530cf31b707(Apr 5 2023 20:26:14) | 0 | 1681989951900812 | 0 | 1 |

| 2023-05-10 16:47:28.757269 | 2023-05-10 16:50:42.757071 | 10.168.89.12 | 2982 | 7 | zone2 | 2981 | 0 | ACTIVE | 0 | 4.1.0.0_100000202023040520-0765e69043c31bf86e83b5d618db0530cf31b707(Apr 5 2023 20:26:14) | 0 | 1683708604272665 | 0 | 1 |

| 2023-04-20 19:22:06.793627 | 2023-04-20 19:25:53.293155 | 10.168.89.13 | 2982 | 3 | zone3 | 2981 | 0 | ACTIVE | 0 | 4.1.0.0_100000202023040520-0765e69043c31bf86e83b5d618db0530cf31b707(Apr 5 2023 20:26:14) | 0 | 1681989952296456 | 0 | 1 |

+----------------------------+----------------------------+--------------+----------+----+-------+------------+-----------------+--------+-----------------------+-------------------------------------------------------------------------------------------+-----------+--------------------+--------------+----------------+

3 rows in set (0.00 sec)
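The start_service_time column in this output is easier to read as a date. The sketch below assumes the values are microseconds since the Unix epoch and that the servers run in UTC+8; both are assumptions, although the decoded times agree with the gmt_modified column to within a minute:

```python
from datetime import datetime, timedelta, timezone

CST = timezone(timedelta(hours=8))  # assumption: servers run in UTC+8

# start_service_time values copied from the __all_server output above.
start_service_time_us = {
    "10.168.89.11": 1681989951900812,
    "10.168.89.12": 1683708604272665,
    "10.168.89.13": 1681989952296456,
}

def usec_to_datetime(usec, tz=CST):
    """Convert microseconds since the Unix epoch to a timezone-aware datetime."""
    seconds, micros = divmod(usec, 1_000_000)
    return datetime.fromtimestamp(seconds, tz).replace(microsecond=micros)

if __name__ == "__main__":
    for ip, usec in start_service_time_us.items():
        print(ip, usec_to_datetime(usec).strftime("%Y-%m-%d %H:%M:%S"))
```

Read this way, 10.168.89.11 and 10.168.89.13 have been in service since April 20, while 10.168.89.12 started serving at about 16:50 on May 10, which matches the replacement performed that day.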

Please collect the rootservice.log from node 10.168.89.11.

Also run this query: select * from __all_virtual_disk_stat;

mysql> select * from __all_virtual_disk_stat;

+--------------+----------+---------------+-------------+---------------+---------------+---------------------+

| svr_ip | svr_port | total_size | used_size | free_size | is_disk_valid | disk_error_begin_ts |

+--------------+----------+---------------+-------------+---------------+---------------+---------------------+

| 10.168.89.12 | 2982 | 1159066550272 | 7851737088 | 1151210618880 | 1 | 0 |

| 10.168.89.11 | 2982 | 1159066550272 | 10045358080 | 1149016997888 | 1 | 0 |

| 10.168.89.13 | 2982 | 1159066550272 | 9208594432 | 1149853761536 | 1 | 0 |

+--------------+----------+---------------+-------------+---------------+---------------+---------------------+

3 rows in set (0.05 sec)
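The byte counts from __all_virtual_disk_stat are hard to compare by eye, so here is a rough conversion to GiB and to a usage percentage. It uses only the values in the table above; the helper name `summarize` is illustrative:

```python
GIB = 1024 ** 3

# (total_size, used_size) in bytes, copied from __all_virtual_disk_stat above.
disk_stat = {
    "10.168.89.12": (1159066550272, 7851737088),
    "10.168.89.11": (1159066550272, 10045358080),
    "10.168.89.13": (1159066550272, 9208594432),
}

def summarize(total_bytes, used_bytes):
    """Return (total GiB, used GiB, used percent), rounded for display."""
    return (
        round(total_bytes / GIB, 1),
        round(used_bytes / GIB, 1),
        round(used_bytes * 100 / total_bytes, 2),
    )

if __name__ == "__main__":
    for ip, (total, used) in disk_stat.items():
        total_gib, used_gib, pct = summarize(total, used)
        print(f"{ip}: {used_gib} GiB used of {total_gib} GiB ({pct}%)")
```

On every node the data file is less than 1% used, so the data disk itself is not under pressure. The 91% that `df` shows for /data/oceanbase/storage presumably reflects the pre-allocated data file rather than actual data, which would point back to the log disk as the constrained resource.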

rootservice89_11.tar.gz (4.6 MB)

Hello, could you tell us whether the operating system is x86 or ARM?

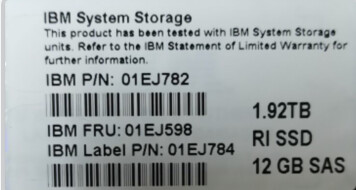

Also, please tell us the models of the log disk and the data disk.

Then run lsblk.

The operating system is x86.

[root@obdb1 ~]# uname -a

Linux obdb1 3.10.0-693.el7.x86_64 #1 SMP Thu Jul 6 19:56:57 EDT 2017 x86_64 x86_64 x86_64 GNU/Linux

[root@obdb1 ~]# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.4 (Maipo)

[root@obdb1 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 6.6T 0 disk

├─sda1 8:1 0 200M 0 part /boot/efi

├─sda2 8:2 0 200M 0 part /boot

└─sda3 8:3 0 6.6T 0 part

├─rhel-root 253:0 0 300G 0 lvm /

├─rhel-swap 253:1 0 128G 0 lvm [SWAP]

└─rhel-gpdata 253:5 0 6.1T 0 lvm /data/soft

sdb 8:16 0 1.8T 0 disk

├─vg_ob-lv_pro 253:2 0 300G 0 lvm /data/oceanbase/product

├─vg_ob-lv_red 253:3 0 200G 0 lvm /data/oceanbase/redolog

└─vg_ob-lv_sto 253:4 0 1.2T 0 lvm /data/oceanbase/storage

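The log disk and the data disk are LVM volumes built on IBM SSDs.

Please take another look when you have time.

Has the disk been expanded? Is the problem resolved now?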