从 ocp-server.log 里提取到任务重试失败附近的 日志如下:

2025-04-01 08:13:32.794 WARN 5479 --- [manual-subtask-executor4,a0327b7a330a68f8,42fea0700a7acce3] com.zaxxer.hikari.pool.ProxyConnection : metadb-connect-pool - Connection com.oceanbase.jdbc.OceanBaseConnection@405b9708 marked as broken because of SQLSTATE(08000), ErrorCode(0)

java.sql.SQLNonTransientConnectionException: (conn=655429) Connection timed out at com.oceanbase.jdbc.internal.util.exceptions.ExceptionFactory.createException(ExceptionFactory.java:122) at com.oceanbase.jdbc.internal.util.exceptions.ExceptionFactory.create(ExceptionFactory.java:202) at com.oceanbase.jdbc.OceanBaseStatement.executeExceptionEpilogue(OceanBaseStatement.java:320)

at com.oceanbase.jdbc.JDBC4PreparedStatement.executeInternal(JDBC4PreparedStatement.java:257) at com.oceanbase.jdbc.JDBC4PreparedStatement.execute(JDBC4PreparedStatement.java:171)

这个日志里有个 cs_id=655429 。这是一个 OCP 到 metadb 的链接,不是到业务OB 的链接。

然后到 OCP 服务器的 obproxy.log 里查找这个链接相关日志。

[admin@RS-OBOPC-P1 log]$ grep 655429 obproxy.log

[2025-04-01 07:02:26.655429] INFO [PROXY.TXN] ob_mysql_transact.cpp:5761 [4835][Y0-00002B9910CA2BA0] [lt=4] [dc=0] [ObMysqlTransact::handle_server_connection_break](client_ip={10.10.10.37:15248}, server_ip={10.10.10.39:2881}, cs_id=917549, proxy_sessid=723401852955330437, ss_id=0, server_sessid=0, sm_id=4868, proxy_user_name=root@ocp_monitor#ocpcluster, database_name=monitor_database, server_state="INTERNAL_ERROR", request_cmd="Quit", sql_cmd="Quit", sql=)[2025-04-01 07:48:17.228104] INFO [PROXY.CS] ob_mysql_client_session.cpp:370 [4833][Y0-00002B9910CA2D20] [lt=7] [dc=0] client session born(cs_id=655429, proxy_sessid=0, is_local_connection=false, client_vc=0x2b9910c94580, client_fd=188, client_addr="10.10.10.39:48578")[2025-04-01 07:48:17.228124] INFO [PROXY.CS] ob_mysql_client_session.cpp:241 [4833][Y0-00002B9910CA2D20] [lt=9] [dc=0] Starting new transaction using sm(cs_id=655429, get_transact_count()=0, sm_id=5632)[2025-04-01 07:48:17.228659] INFO [PROXY.SS] ob_mysql_server_session.cpp:106 [4833][Y0-00002B9910CA2D20] [lt=6] [dc=0] server session born(ss_id=5640, server_ip={10.10.10.39:2881}, cs_id=655429, proxy_sessid=0, server_type=1)[2025-04-01 07:48:17.228720] INFO [PROXY.TXN] ob_mysql_transact.cpp:4726 [4833][Y0-00002B9910CA2D20] [lt=8] [dc=0] succ to set proxy_sessid(cs_id=655429, proxy_sessid=723401852955330709, server_ip={10.10.10.39:2881}, ss_id=5640, server_sessid=3221745283, is_proxy_mysql_client=false, ss_fd=195, client_addr="10.10.10.39:48578")[2025-04-01 08:13:32.793781] WARN [PROXY.SM] set_client_abort (ob_mysql_sm.cpp:8124) [4833][Y0-00002B9910CA2D20] [lt=45] [dc=0] client will abort soon(sm_id=5632, cs_id=655429, proxy_sessid=723401852955330709, ss_id=5640, server_sessid=3221745283, client_ip={10.10.10.39:48578}, server_ip={10.10.10.39:2881}, cluster_name=ocpcluster, tenant_name=ocp_meta, user_name=root, db=meta_database, event="VC_EVENT_EOS", request_cmd="Query", sql_cmd="Query", sql=update `subtask_instance` set `state`='PAUSED' where `id` in (9171685))[2025-04-01 08:13:32.793910] WARN [PROXY.SM] update_cmd_stats (ob_mysql_sm.cpp:10239) [4833][Y0-00002B9910CA2D20] [lt=5] [dc=0] Slow Query: ((client_ip={10.10.10.39:48578}, server_ip={10.10.10.39:2881}, obproxy_client_port={10.10.10.39:62722}, server_trace_id=, route_type=ROUTE_TYPE_LEADER, user_name=root, tenant_name=ocp_meta, cluster_name=ocpcluster, logic_database_name=, logic_tenant_name=, ob_proxy_protocol=2, cs_id=655429, proxy_sessid=723401852955330709, ss_id=5640, server_sessid=3221745283, sm_id=5632, cmd_size_stats={client_request_bytes:75, server_request_bytes:191, server_response_bytes:0, client_response_bytes:0}, cmd_time_stats={client_transaction_idle_time_us=0, client_request_read_time_us=7, client_request_analyze_time_us=6, cluster_resource_create_time_us=0, pl_lookup_time_us=3, pl_process_time_us=2, bl_lookup_time_us=0, bl_process_time_us=0, congestion_control_time_us=13, congestion_process_time_us=0, do_observer_open_time_us=1, server_connect_time_us=0, server_sync_session_variable_time_us=0, server_send_saved_login_time_us=0, server_send_use_database_time_us=0, server_send_session_variable_time_us=0, server_send_session_user_variable_time_us=0, server_send_all_session_variable_time_us=0, server_send_start_trans_time_us=0, server_send_xa_start_time_us=0, server_send_init_sql_time_us=0, build_server_request_time_us=4, plugin_compress_request_time_us=0, prepare_send_request_to_server_time_us=37, server_request_write_time_us=14, server_process_request_time_us=0, server_response_read_time_us=0, plugin_decompress_response_time_us=0, server_response_analyze_time_us=0, ok_packet_trim_time_us=0, client_response_write_time_us=0, request_total_time_us=30029398}, sql=update `subtask_instance` set `state`='PAUSED' where `id` in (9171685), trans_internal_routing_state=not in trans)[2025-04-01 08:13:32.794009] WARN [PROXY.SM] update_stats (ob_mysql_sm.cpp:10366) [4833][Y0-00002B9910CA2D20] [lt=16] [dc=0] Slow transaction: ((client_ip={10.10.10.39:48578}, server_ip={10.10.10.39:2881}, server_trace_id=, route_type=ROUTE_TYPE_LEADER, ob_proxy_protocol=2, cs_id=655429, proxy_sessid=723401852955330709, ss_id=5640, server_sessid=3221745283, sm_id=5632, state=1, trans_stats_={client_requests:1, server_responses:0, pl_lookup_retries:0, server_retries:0, client_request_bytes:75, server_request_bytes:191, server_response_bytes:0, client_response_bytes:0, client_transaction_idle_time_us=0, client_process_request_time_us=21, client_request_read_time_us=7, client_request_analyze_time_us=6, cluster_resource_create_time_us=0, pl_lookup_time_us=3, pl_process_time_us=2, congestion_control_time_us=13, congestion_process_time_us=0, do_observer_open_time_us=1, server_connect_time_us=0, sync_session_variable_time_us=0, send_saved_login_time_us=0, send_use_database_time_us=0, send_session_vars_time_us=0, send_session_user_vars_time_us=0, send_all_session_vars_time_us=0, send_start_trans_time_us=0, send_xa_start_time_us=0, build_server_request_time_us=4, plugin_compress_request_time_us=0, prepare_send_request_to_server_time_us=37, server_request_write_time_us=14, server_process_request_time_us=0, server_response_read_time_us=0, plugin_decompress_response_time_us=0, server_response_analyze_time_us=0, ok_packet_trim_time_us=0, client_response_write_time_us=0, trans_time_us=30029630}, last_sql=update `subtask_instance` set `state`='PAUSED' where `id` in (9171685))[2025-04-01 08:13:32.794063] INFO [PROXY.SS] ob_mysql_server_session.cpp:153 [4833][Y0-00002B9910CA2D20] [lt=21] [dc=0] server session do_io_close((*this={ss_id:5640, server_sessid:3221745283, server_ip:{10.10.10.39:2881}, is_inited:true, magic:19136237, state:1, server_vc:0x2b991246bbd0, client_session:{this:0x2b9912089120, is_proxy_mysql_client:false, is_waiting_trans_first_request:false, need_delete_cluster:false, is_first_dml_sql_got:false, vc_ready_killed:false, active:true, magic:19132429, conn_decrease:true, current_tid:4833, cs_id:655429, proxy_sessid:723401852955330709, session_info:{is_inited:true, priv_info:{has_all_privilege:false, cs_id:655429, user_priv_set:133009965054, cluster_name:"ocpcluster", tenant_name:"ocp_meta", user_name:"root"}, version:{common_hot_sys_var_version:0, common_sys_var_version:0, mysql_hot_sys_var_version:0, mysql_sys_var_version:0, hot_sys_var_version:6968, sys_var_version:6801, user_var_version:1, db_name_version:1, last_insert_id_version:6, sess_info_version:11099}, hash_version:{common_hot_sys_var_version:0, common_sys_var_version:0, mysql_hot_sys_var_version:0, mysql_sys_var_version:0, hot_sys_var_version:0, sys_var_version:0, user_var_version:0, db_name_version:0, last_insert_id_version:0, sess_info_version:0}, val_hash:{common_hot_sys_var_hash:0, common_cold_sys_var_hash:0, mysql_hot_sys_var_hash:0, mysql_cold_sys_var_hash:0, hot_sys_var_hash:0, cold_sys_var_hash:0, user_var_hash:0}, global_vars_version:0, is_global_vars_changed:false, is_trans_specified:false, is_user_idc_name_set:false, is_read_consistency_set:false, idc_name:"", cluster_id:0, real_meta_cluster_name:"", safe_read_snapshot:1, syncing_safe_read_snapshot:0, route_policy:1, proxy_route_policy:3, user_identity:0, global_vars_version:0, is_read_only_user:false, is_request_follower_user:false, obproxy_force_parallel_query_dop:1, ob20_request:{remain_payload_len:0, ob20_request_received_done:false, ob20_header:{ob 20 protocol header:{compressed_len:0, seq:0, non_compressed_len:0}, magic_num:0, header_checksum:0, connection_id:0, request_id:0, pkt_seq:0, payload_len:0, version:0, flag_.flags:0, reserved:0}}, client_cap:72, server_cap:916303, last_server_addr:{*Not IP address [0]*:0}, last_server_sess_id:0, init_sql:""}, dummy_ldc:{use_ldc:false, idc_name:"", item_count:1, site_start_index_array:[[0]0, [1]1, [2]1, [3]1], item_array:[[0]{idc_type:"SAME_IDC", zone_type:"ReadWrite", replica:{server:"10.10.10.39:2881", is_dup_replica:false, role:"FOLLOWER", type:"FULL"}, is_merging:false, is_partition_server:false, is_force_congested:false, is_used:false}], pl:null, ts:0x2b9903f00030, readonly_exist_status:"READONLY_ZONE_NOT_EXIST"}, dummy_entry:0x2b9903f9bbe0, server_state_version:3, cur_ss:0x2b9912204be0, bound_ss:null, lii_ss:0x2b9912204be0, cluster_resource:{this:0x2b9910023080, ref_count:182, is_inited:true, cluster_info_key:{cluster_name:{config_string:"ocpcluster"}, cluster_id:0}, cr_state:"CR_AVAIL", version:2, last_access_time_ns:1743466412669165417, deleting_completed_thread_num:0, fetch_rslist_task_count:0, fetch_idc_list_task_count:0, last_idc_list_refresh_time_ns:1743437552565904769, last_rslist_refresh_time_ns:0, server_state_version:3}, client_vc:0x2b9910c94580, using_ldg:false, trace_stats:NULL}, transact_count:26716, server_trans_stat:1, session_info:{cap:916303, ob_server:, version:{common_hot_sys_var_version:0, common_sys_var_version:0, mysql_hot_sys_var_version:0, mysql_sys_var_version:0, hot_sys_var_version:6968, sys_var_version:6801, user_var_version:1, db_name_version:1, last_insert_id_version:6, sess_info_version:11099}, val_hash:{common_hot_sys_var_hash:0, common_cold_sys_var_hash:0, mysql_hot_sys_var_hash:0, mysql_cold_sys_var_hash:0, hot_sys_var_hash:0, cold_sys_var_hash:0, user_var_hash:0}, checksum_switch:1, server_type:1}}, server_vc_=0x2b991246bbd0, this=0x2b9912204be0)

[2025-04-01 08:13:32.794176] INFO [PROXY.SS] ob_mysql_server_session.cpp:190 [4833][Y0-00002B9910CA2D20] [lt=84] [dc=0] server session is closing(ss_id=5640, server_sessid=3221745283, server_ip={10.10.10.39:2881}, cs_id=655429, proxy_sessid=723401852955330709)

[2025-04-01 08:13:32.794206] INFO [PROXY.SS] ob_mysql_client_session.cpp:652 [4833][Y0-00002B9910CA2D20] [lt=8] [dc=0] client session do_io_close((*this={this:0x2b9912089120, is_proxy_mysql_client:false, is_waiting_trans_first_request:false, need_delete_cluster:false, is_first_dml_sql_got:false, vc_ready_killed:false, active:true, magic:19132429, conn_decrease:true, current_tid:4833, cs_id:655429, proxy_sessid:723401852955330709, session_info:{is_inited:true, priv_info:{has_all_privilege:false, cs_id:655429, user_priv_set:133009965054, cluster_name:"ocpcluster", tenant_name:"ocp_meta", user_name:"root"}, version:{common_hot_sys_var_version:0, common_sys_var_version:0, mysql_hot_sys_var_version:0, mysql_sys_var_version:0, hot_sys_var_version:6968, sys_var_version:6801, user_var_version:1, db_name_version:1, last_insert_id_version:6, sess_info_version:11099}, hash_version:{common_hot_sys_var_version:0, common_sys_var_version:0, mysql_hot_sys_var_version:0, mysql_sys_var_version:0, hot_sys_var_version:0, sys_var_version:0, user_var_version:0, db_name_version:0, last_insert_id_version:0, sess_info_version:0}, val_hash:{common_hot_sys_var_hash:0, common_cold_sys_var_hash:0, mysql_hot_sys_var_hash:0, mysql_cold_sys_var_hash:0, hot_sys_var_hash:0, cold_sys_var_hash:0, user_var_hash:0}, global_vars_version:0, is_global_vars_changed:false, is_trans_specified:false, is_user_idc_name_set:false, is_read_consistency_set:false, idc_name:"", cluster_id:0, real_meta_cluster_name:"", safe_read_snapshot:1, syncing_safe_read_snapshot:0, route_policy:1, proxy_route_policy:3, user_identity:0, global_vars_version:0, is_read_only_user:false, is_request_follower_user:false, obproxy_force_parallel_query_dop:1, ob20_request:{remain_payload_len:0, ob20_request_received_done:false, ob20_header:{ob 20 protocol header:{compressed_len:0, seq:0, non_compressed_len:0}, magic_num:0, header_checksum:0, connection_id:0, request_id:0, pkt_seq:0, payload_len:0, version:0, flag_.flags:0, reserved:0}}, client_cap:72, server_cap:916303, last_server_addr:{*Not IP address [0]*:0}, last_server_sess_id:0, init_sql:""}, dummy_ldc:{use_ldc:false, idc_name:"", item_count:1, site_start_index_array:[[0]0, [1]1, [2]1, [3]1], item_array:[[0]{idc_type:"SAME_IDC", zone_type:"ReadWrite", replica:{server:"10.10.10.39:2881", is_dup_replica:false, role:"FOLLOWER", type:"FULL"}, is_merging:false, is_partition_server:false, is_force_congested:false, is_used:false}], pl:null, ts:0x2b9903f00030, readonly_exist_status:"READONLY_ZONE_NOT_EXIST"}, dummy_entry:0x2b9903f9bbe0, server_state_version:3, cur_ss:null, bound_ss:null, lii_ss:null, cluster_resource:{this:0x2b9910023080, ref_count:182, is_inited:true, cluster_info_key:{cluster_name:{config_string:"ocpcluster"}, cluster_id:0}, cr_state:"CR_AVAIL", version:2, last_access_time_ns:1743466412669165417, deleting_completed_thread_num:0, fetch_rslist_task_count:0, fetch_idc_list_task_count:0, last_idc_list_refresh_time_ns:1743437552565904769, last_rslist_refresh_time_ns:0, server_state_version:3}, client_vc:0x2b9910c94580, using_ldg:false, trace_stats:NULL}, client_vc_=0x2b9910c94580, this=0x2b9912089120)

[2025-04-01 08:13:32.794257] INFO [PROXY.CS] ob_mysql_client_session.cpp:94 [4833][Y0-00002B9910CA2D20] [lt=44] [dc=0] client session destroy(cs_id=655429, proxy_sessid=723401852955330709, client_vc=NULL)

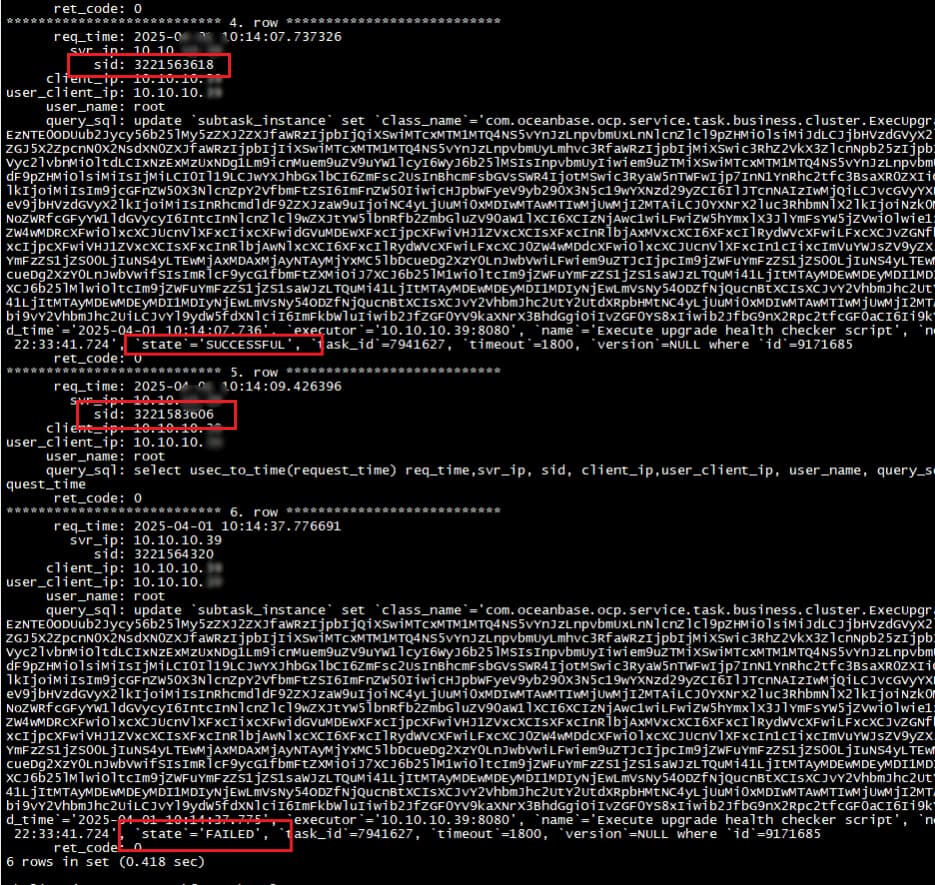

从 ocp的 obproxy.log 里可以看出,这个链接在任务重试之前就已经建好(2025-04-01 07:48:17.228104),任务重试在 2025-04-01 08:12:47.581,链接异常断开在 2025-04-01 08:12:47.581,断开时正在执行的SQL是 :

sql_cmd="Query", sql=update `subtask_instance` set `state`='PAUSED' where `id` in (9171685))

并且紧接着这句 SQL 还被标识为 slow query,执行时间约 request_total_time_us=30029398 。

metadb 的 sql 超时时间是 100s

obclient(root@ocp_meta)[meta_database]> show global variables like '%timeout%';

+---------------------+------------------+

| Variable_name | Value |

+---------------------+------------------+

| connect_timeout | 10 |

| interactive_timeout | 28800 |

| lock_wait_timeout | 31536000 |

| net_read_timeout | 30 |

| net_write_timeout | 60 |

| ob_pl_block_timeout | 3216672000000000 |

| ob_query_timeout | 100000000 |

| ob_trx_idle_timeout | 86400000000 |

| ob_trx_lock_timeout | -1 |

| ob_trx_timeout | 86400000000 |

| wait_timeout | 28800 |

+---------------------+------------------+

11 rows in set (0.003 sec)

metadb 压力并不大,这个 SQL 也不可能是慢 SQL。30s 恰好是 net_read_timeout 。ocp跟metadb 是同一台服务器。 很可能是 ocp 自身哪里问题。 @论坛小助手