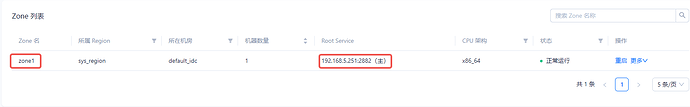

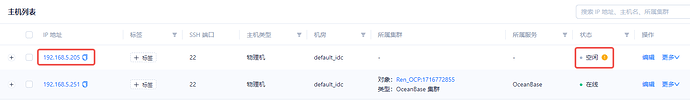

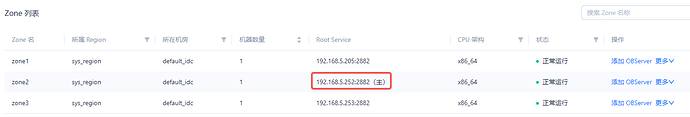

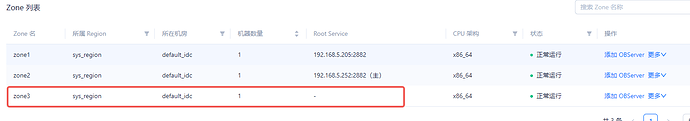

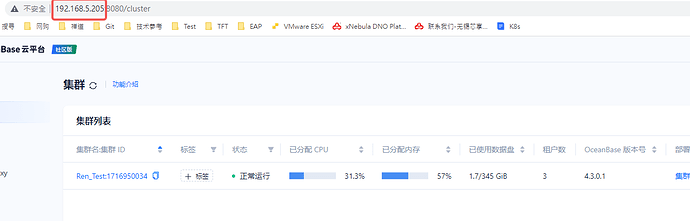

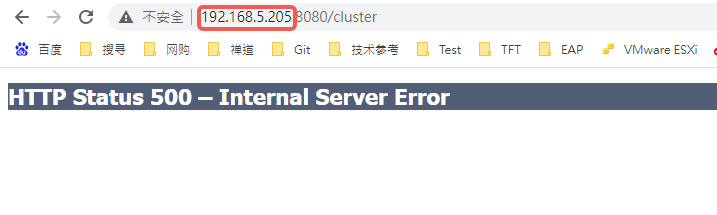

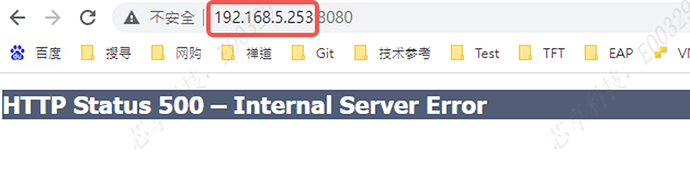

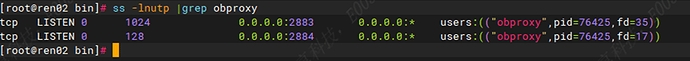

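I first cleared the logs on the secondary nodes, then stopped the primary node (192.168.5.205). The management page on a secondary node now shows HTTP Status 500 – Internal Server Error. No OCP logs are generated under `/home/root/logs/`; only `/root/oceanbase/log/election.log` keeps being written. Excerpt from `election.log`: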

```
[2024-05-29 16:30:45.167388] INFO [ELECT] operator() (election_proposer.cpp:241) [3907][T1004_Occam][T1004][Y0-0000000000000000-0-0] [lt=69] dump proposer info(*this={ls_id:{id:1}, addr:"192.168.5.253:2882", role:Follower, ballot_number:2, lease_interval:-0.00s, memberlist_with_states:{member_list:{addr_list:["192.168.5.205:2882", "192.168.5.252:2882", "192.168.5.253:2882"], membership_version:{proposal_id:10, config_seq:11}, replica_num:3}, prepare_ok:False, accept_ok_promised_ts:invalid, follower_promise_membership_version:{proposal_id:9223372036854775807, config_seq:-1}}, priority_seed:0x1000, restart_counter:1, last_do_prepare_ts:2024-05-29 16:21:02.2812, self_priority:{priority:{is_valid:true, is_observer_stopped:false, is_server_stopped:false, is_zone_stopped:false, fatal_failures:[], is_primary_region:true, serious_failures:[], is_in_blacklist:false, in_blacklist_reason:, scn:{val:1716971444729641472, v:0}, is_manual_leader:false, zone_priority:1}}, p_election:0x7ff89fdf9230})
[2024-05-29 16:30:45.197575] INFO [ELECT] operator() (election_proposer.cpp:241) [3907][T1004_Occam][T1004][Y0-0000000000000000-0-0] [lt=74] dump proposer info(*this={ls_id:{id:1003}, addr:"192.168.5.253:2882", role:Leader, ballot_number:3, prepare_success_ballot:3, lease_interval:4.00s, memberlist_with_states:{member_list:{addr_list:["192.168.5.205:2882", "192.168.5.252:2882", "192.168.5.253:2882"], membership_version:{proposal_id:14, config_seq:18}, replica_num:3}, prepare_ok:[false, true, true], accept_ok_promised_ts:[16:21:03.194, 16:30:48.692, 16:30:48.692]follower_promise_membership_version:{proposal_id:14, config_seq:18}}, lease_and_epoch:{leader_lease:{span_from_now:3.496s, expired_time_point:16:30:48.693}, epoch:3}, priority_seed:0x1000, restart_counter:1, last_do_prepare_ts:2024-05-29 13:12:46.501809, self_priority:{priority:{is_valid:true, is_observer_stopped:false, is_server_stopped:false, is_zone_stopped:false, fatal_failures:[], is_primary_region:true, serious_failures:[], is_in_blacklist:false, in_blacklist_reason:, scn:{val:1716971444297306999, v:0}, is_manual_leader:false, zone_priority:0}}, p_election:0x7ff89aa0d230})
[2024-05-29 16:30:45.308278] INFO [ELECT] operator() (election_proposer.cpp:252) [3907][T1004_Occam][T1004][Y0-0000000000000000-0-0] [lt=18] dump message count(ls_id="{id:1002}", self_addr="192.168.5.253:2882", state=| match:[Prepare Request, Prepare Response, Accept Request, Accept Response, Change Leader]| "192.168.5.205:2882":send:[1, 1, 0, 241, 0], rec:[1, 0, 241, 0, 0], last_send:2024-05-29 12:02:29.127697, last_rec:2024-05-29 12:02:29.127697| "192.168.5.252:2882":send:[1, 0, 0, (32195), 0], rec:[1, 0, (32195), 0, 0], last_send:2024-05-29 16:30:45.231949, last_rec:2024-05-29 16:30:45.231949| "192.168.5.253:2882":send:[1, 0, 0, 0, 0], rec:[1, 0, 0, 0, 0], last_send:2024-05-29 12:00:27.800338, last_rec:2024-05-29 12:00:27.800338)
[2024-05-29 16:30:45.499232] INFO [ELECT] operator() (election_acceptor.cpp:137) [3501][T1002_Occam][T1002][Y0-0000000000000000-0-0] [lt=35] dump acceptor info(*this={ls_id:{id:1001}, addr:"192.168.5.253:2882", ballot_number:2, ballot_of_time_window:2, lease:{owner:"192.168.5.252:2882", lease_end_ts:{span_from_now:3.817s, expired_time_point:16:30:49.316}, ballot_number:2}, is_time_window_opened:False, vote_reason:IP-PORT(priority equal), last_time_window_open_ts:2024-05-29 16:21:02.486613, highest_priority_prepare_req:{this:0x7ff8da51fab0, BASE:{msg_type:"Prepare Request", id:1001, sender:"192.168.5.252:2882", receiver:"192.168.5.253:2882", restart_counter:1, ballot_number:2, debug_ts:{src_construct_ts:"21:02.490497", src_serialize_ts:"21:02.490535", dest_deserialize_ts:"21:02.487041", dest_process_ts:"21:02.487049", process_delay:-3448}, biggest_min_cluster_version_ever_seen:4.3.0.1}, role:"Follower", is_buffer_valid:true, inner_priority_seed:4096, membership_version:{proposal_id:9, config_seq:10}}, p_election:0x7ff8da51f230})
[2024-05-29 16:30:45.499291] INFO [ELECT] operator() (election_acceptor.cpp:137) [3501][T1002_Occam][T1002][Y0-0000000000000000-0-0] [lt=55] dump acceptor info(*this={ls_id:{id:1003}, addr:"192.168.5.253:2882", ballot_number:3, ballot_of_time_window:3, lease:{owner:"192.168.5.253:2882", lease_end_ts:{span_from_now:3.889s, expired_time_point:16:30:49.388}, ballot_number:3}, is_time_window_opened:False, vote_reason:IP-PORT(priority equal), last_time_window_open_ts:2024-05-29 12:00:19.486030, highest_priority_prepare_req:{this:0x7ff8da5b3ab0, BASE:{msg_type:"Prepare Request", id:1003, sender:"192.168.5.205:2882", receiver:"192.168.5.253:2882", restart_counter:1, ballot_number:0, debug_ts:{src_construct_ts:"00:19.483126", src_serialize_ts:"00:19.483174", dest_deserialize_ts:"00:19.486492", dest_process_ts:"00:19.486495", process_delay:3369}, biggest_min_cluster_version_ever_seen:4.3.0.1}, role:"Follower", is_buffer_valid:true, inner_priority_seed:4096, membership_version:{proposal_id:10, config_seq:10}}, p_election:0x7ff8da5b3230})
```
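To see at a glance which replica each log stream currently treats as leader after stopping 192.168.5.205, the periodic `dump proposer info` / `dump acceptor info` lines can be summarized with a short script. This is a minimal sketch, not an OceanBase tool: `summarize`, `ProposerView`, `SAMPLE` and the regexes are hypothetical, and they assume the field order seen in the log lines in this post (cluster version 4.3.0.1); other versions may print the fields differently. Results are keyed by (tenant, ls_id) because the same ls_id (e.g. 1001) exists in several tenants.

```python
import re
from typing import Dict, Iterable, NamedTuple, Tuple

# Log text copied through a web page may contain typographic quotes (“ ”)
# instead of straight ones, so accept all three characters.
QUOTES = "\"\u201c\u201d"
Q = f"[{QUOTES}]"
ADDR = f"([^{QUOTES}]+)"

# Assumed layout: ...[T1004_Occam][T1004]... -> tenant id 1004
TENANT_RE = re.compile(r"\[T(\d+)\]")
# Assumed layout: dump proposer info(*this={ls_id:{id:N}, addr:"ip:port", role:R, ballot_number:B, ...
PROPOSER_RE = re.compile(
    r"dump proposer info\(\*this=\{ls_id:\{id:(\d+)\}, addr:"
    + Q + ADDR + Q
    + r", role:(\w+), ballot_number:(\d+)"
)
# Assumed layout: dump acceptor info(*this={ls_id:{id:N}, ... lease:{owner:"ip:port", ...
ACCEPTOR_RE = re.compile(
    r"dump acceptor info\(\*this=\{ls_id:\{id:(\d+)\}.*?lease:\{owner:" + Q + ADDR + Q
)

Key = Tuple[int, int]  # (tenant id, ls_id); tenant is -1 if not found


class ProposerView(NamedTuple):
    addr: str    # replica that printed the line
    role: str    # Leader / Follower as reported by that replica
    ballot: int  # ballot_number field


def summarize(lines: Iterable[str]) -> Tuple[Dict[Key, ProposerView], Dict[Key, str]]:
    """Return the latest proposer view and lease owner per (tenant, ls_id).

    Later lines overwrite earlier ones, so reading the log in chronological
    order leaves the most recent dump for each key.
    """
    proposers: Dict[Key, ProposerView] = {}
    lease_owners: Dict[Key, str] = {}
    for line in lines:
        t = TENANT_RE.search(line)
        tenant = int(t.group(1)) if t else -1
        m = PROPOSER_RE.search(line)
        if m:
            key = (tenant, int(m.group(1)))
            proposers[key] = ProposerView(m.group(2), m.group(3), int(m.group(4)))
            continue
        m = ACCEPTOR_RE.search(line)
        if m:
            lease_owners[(tenant, int(m.group(1)))] = m.group(2)
    return proposers, lease_owners


# Shortened fragments of the log lines in this post (one with typographic quotes).
SAMPLE = [
    '[T1004_Occam][T1004] dump proposer info(*this={ls_id:{id:1}, '
    'addr:\u201c192.168.5.253:2882\u201d, role:Follower, ballot_number:2, lease_interval:-0.00s})',
    '[T1004_Occam][T1004] dump proposer info(*this={ls_id:{id:1003}, '
    'addr:"192.168.5.253:2882", role:Leader, ballot_number:3, prepare_success_ballot:3})',
    '[T1002_Occam][T1002] dump acceptor info(*this={ls_id:{id:1001}, addr:"192.168.5.253:2882", '
    'ballot_number:2, ballot_of_time_window:2, lease:{owner:"192.168.5.252:2882", ballot_number:2}})',
]

if __name__ == "__main__":
    proposers, owners = summarize(SAMPLE)
    for (tenant, ls_id), view in sorted(proposers.items()):
        print(f"tenant {tenant} ls {ls_id}: {view.addr} is {view.role} (ballot {view.ballot})")
    for (tenant, ls_id), owner in sorted(owners.items()):
        print(f"tenant {tenant} ls {ls_id}: lease owner {owner}")
```

To run it against the real file on a node, pass an open file instead of `SAMPLE`, e.g. `summarize(open("/root/oceanbase/log/election.log", encoding="utf-8", errors="replace"))`. A log stream whose newest entries still name 192.168.5.205 as leader or lease owner would be worth checking first.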