Please run the following queries and take a look:

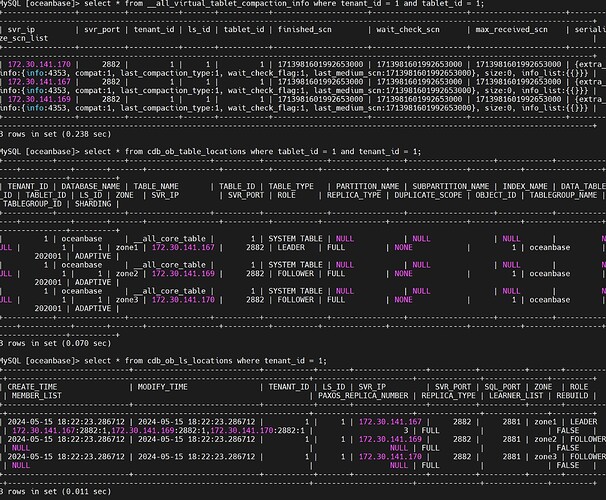

select * from __all_virtual_tablet_compaction_info where tenant_id = 1004 and tablet_id = 248954;

select * from cdb_ob_table_locations where tablet_id = 248954 and tenant_id = 1004;

select * from cdb_ob_ls_locations where tenant_id = 1004 and ls_id in (1001,1003);

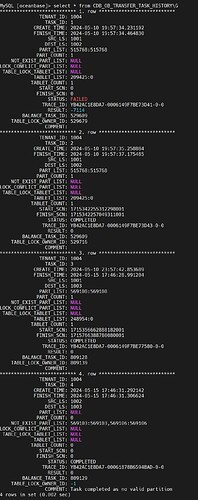

Could a transfer task be stuck?

How do I check that?

https://www.oceanbase.com/docs/common-oceanbase-database-cn-1000000000750427

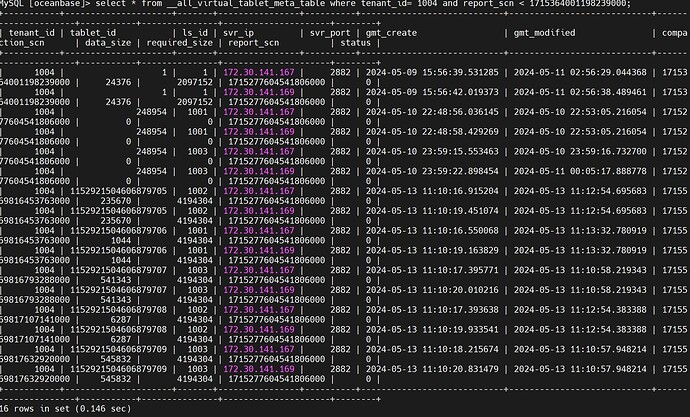

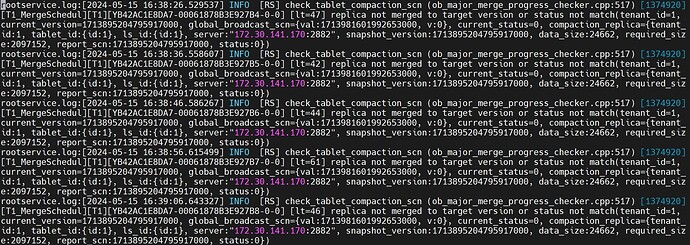

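No need to look into that for now, though. In the log I saw that tablet 248954 of tenant 1004 was in the transfer state, which is why it was not merged. Since it recovered after the restart, you can leave it alone. After the restart, partition 1 of the sys tenant also appears to have finished merging. Could you check __all_tablet_meta_table again?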

select * from __all_tablet_meta_table where tenant_id = 1 and compaction_scn < 1713981601992653000;

select * from __all_tablet_meta_table where tenant_id = 1 and report_scn < 1713981601992653000;
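To make the SCN literal in the two queries above easier to read, here is a minimal Python sketch that converts it to a wall-clock time. It assumes the SCN is a count of nanoseconds since the Unix epoch; that reading is an assumption, not something confirmed in this thread. `scn_to_utc` is a hypothetical helper name.

```python
from datetime import datetime, timezone

NS_PER_SECOND = 1_000_000_000


def scn_to_utc(scn: int) -> datetime:
    """Interpret an SCN as nanoseconds since the Unix epoch (assumption)."""
    seconds, nanos = divmod(scn, NS_PER_SECOND)
    # datetime only stores microseconds, so the last three digits are dropped.
    return datetime.fromtimestamp(seconds, tz=timezone.utc).replace(
        microsecond=nanos // 1000
    )


# Threshold used in the two __all_tablet_meta_table queries.
print(scn_to_utc(1713981601992653000))  # 2024-04-24 18:00:01.992653+00:00
```

Under that assumption the threshold corresponds to 2024-04-25 02:00:01 in UTC+8, so the two queries look for replicas whose compaction or report SCN is older than that point.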

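After we tried switching the leader yesterday, the sys tenant returned to normal as well. Both statements now return empty results. The only conclusion so far is a workaround: find which zone is still in the merging state and switch the leader to it, and that zone then returns to normal. The root cause is still unknown.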

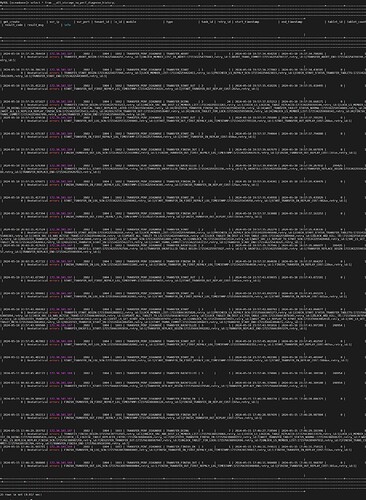

NFO [BALANCE.TRANSFER] unlock_and_clear_task_ (ob_tenant_transfer_service.cpp:1292) [1952023][T1004_BalanceEx][T1004][YB42AC1E8DA7-0006149F7BE77580-0-0] [lt=30] [TENANT_TRANSFER] task is not in finish status, can’t clear(ret=-4036, ret=“OB_NEED_RETRY”, task={task_id:{id:3}, src_ls:{id:1001}, dest_ls:{id:1003}, part_list:[{table_id:569108, part_object_id:569108}], not_exist_part_list:[], lock_conflict_part_list:[], table_lock_tablet_list:[], tablet_list:[{tablet_id:{id:248954}, transfer_seq:0}], start_scn:{val:1715356662888182001, v:0}, finish_scn:{val:0, v:0}, status:{status:2, status:“DOING”}, trace_id:YB42AC1E8DA7-0006149F7BE77580-0-0, result:0, comment:0, balance_task_id:{id:809128}, table_lock_owner_id:{id:809139}}) [2024-05-15 12:23:21.413652] WDIAG [STORAGE.TRANS] do_local_abort_tx_ (ob_trans_part_ctx.cpp:7860) [1952023][T1004_BalanceEx][T1004][YB42AC1E8DA7-0006149F7BE77580-0-0] [lt=23][errcode=0] do_local_abort_tx_(ret=0, ret=“OB_SUCCESS”, *this={this:0x7f38fcad9050, ref:2, trans_id:{txid:3421798}, tenant_id:1004, is_exiting:false, trans_expired_time:1715747131411379, cluster_version:17180000769, trans_need_wait_wrap:{receive_gts_ts_:[mts=0], need_wait_interval_us:0}, stc:[mts=0], ctx_create_time:1715747001413221}{ls_id:{id:1}, session_id:3221712468, part_trans_action:2, pending_write:0, exec_info:{state:10, upstream:{id:-1}, participants:[], incremental_participants:[], prev_record_lsn:{lsn:18446744073709551615}, redo_lsns:[], redo_log_no:0, multi_data_source:[], scheduler:“172.30.141.167:2882”, prepare_version:{val:18446744073709551615, v:3}, trans_type:0, next_log_entry_no:0, max_applied_log_ts:{val:18446744073709551615, v:3}, max_applying_log_ts:{val:18446744073709551615, v:3}, max_applying_part_log_no:9223372036854775807, max_submitted_seq_no:0, checksum:0, checksum_scn:{val:0, v:0}, max_durable_lsn:{lsn:18446744073709551615}, data_complete:false, is_dup_tx:false, prepare_log_info_arr:[], xid:{gtrid_str:"", bqual_str:"", format_id:1, 
gtrid_str_.ptr():“data_size:0, data:”, bqual_str_.ptr():“data_size:0, data:”, g_hv:0, b_hv:0}, need_checksum:true, is_sub2pc:false}, sub_state:{flag:0}, is_leaf():false, is_root():false, busy_cbs_.get_size():0, final_log_cb_:{ObTxBaseLogCb:{base_ts:{val:18446744073709551615, v:3}, log_ts:{val:18446744073709551615, v:3}, lsn:{lsn:18446744073709551615}, submit_ts:0}, this:0x7f38fcadbfc0, is_inited_:true, trans_id:{txid:3421798}, ls_id:{id:1}, ctx:0x7f38fcad9050, tx_data_guard:{tx_data:NULL}, is_callbacked_:false, is_dynamic_:false, mds_range_:{range_array_.count():0, range_array_:[]}, cb_arg_array_:[], first_part_scn_:{val:18446744073709551615, v:3}}, ctx_tx_data_:{ctx_mgr_:0x7f33de404030, tx_data_guard_:{tx_data:{tx_id:{txid:3421798}, ref_cnt:1, state:“RUNNING”, commit_version:{val:18446744073709551615, v:3}, start_scn:{val:18446744073709551615, v:3}, end_scn:{val:18446744073709551615, v:3}, undo_status_list:{head:null, undo_node_cnt:0{}}}}, read_only_:false}, role_state_:0, start_replay_ts_:{val:18446744073709551615, v:3}, start_recover_ts_:{val:18446744073709551615, v:3}, is_incomplete_replay_ctx_:false, mt_ctx_:{ObIMvccCtx={alloc_type=0 ctx_descriptor=0 min_table_version=1715241401789224 max_table_version=1715241401789224 trans_version={val:4611686018427387903, v:0} commit_version={val:0, v:0} lock_wait_start_ts=0 replay_compact_version={val:0, v:0}} end_code=0 tx_status=0 is_readonly=false ref=0 trans_id={txid:3421798} ls_id=1 callback_alloc_count=1 callback_free_count=0 checksum=0 tmp_checksum=0 checksum_scn={val:0, v:0} redo_filled_count=0 redo_sync_succ_count=0 redo_sync_fail_count=0 main_list_length=2 unsynced_cnt=1 unsubmitted_cnt_=1 cb_statistics:[main=2, slave=0, merge=0, tx_end=0, rollback_to=0, fast_commit=0, remove_memtable=0, ext_info_log_cb=0]}, coord_prepare_info_arr_:[], upstream_state:10, retain_cause:-1, 2pc_role:-1, collected:[], rec_log_ts:{val:18446744073709551615, v:3}, prev_rec_log_ts:{val:18446744073709551615, v:3}, 
lastest_snapshot:{val:18446744073709551615, v:3}, state_info_array:[], last_request_ts:1715747001413221, block_frozen_memtable:null}) [2024-05-15 12:23:21.413737] INFO [STORAGE.TRANS] abort_ (ob_trans_part_ctx.cpp:1870) [1952023][T1004_BalanceEx][T1004][YB42AC1E8DA7-0006149F7BE77580-0-0] [lt=74] tx abort(ret=0, reason=-6002, reason_str=“OB_TRANS_ROLLBACKED”, this={this:0x7f38fcad9050, ref:1, trans_id:{txid:3421798}, tenant_id:1004, is_exiting:true, trans_expired_time:1715747131411379, cluster_version:17180000769, trans_need_wait_wrap:{receive_gts_ts_:[mts=0], need_wait_interval_us:0}, stc:[mts=0], ctx_create_time:1715747001413221}{ls_id:{id:1}, session_id:3221712468, part_trans_action:4, pending_write:0, exec_info:{state:60, upstream:{id:-1}, participants:[], incremental_participants:[], prev_record_lsn:{lsn:18446744073709551615}, redo_lsns:[], redo_log_no:0, multi_data_source:[], scheduler:“172.30.141.167:2882”, prepare_version:{val:18446744073709551615, v:3}, trans_type:0, next_log_entry_no:0, max_applied_log_ts:{val:18446744073709551615, v:3}, max_applying_log_ts:{val:18446744073709551615, v:3}, max_applying_part_log_no:9223372036854775807, max_submitted_seq_no:0, checksum:0, checksum_scn:{val:0, v:0}, max_durable_lsn:{lsn:18446744073709551615}, data_complete:false, is_dup_tx:false, prepare_log_info_arr:[], xid:{gtrid_str:"", bqual_str:"", format_id:1, gtrid_str_.ptr():“data_size:0, data:”, bqual_str_.ptr():“data_size:0, data:”, g_hv:0, b_hv:0}, need_checksum:true, is_sub2pc:false}, sub_state:{flag:64}, is_leaf():false, is_root():false, busy_cbs_.get_size():0, final_log_cb_:{ObTxBaseLogCb:{base_ts:{val:18446744073709551615, v:3}, log_ts:{val:18446744073709551615, v:3}, lsn:{lsn:18446744073709551615}, submit_ts:0}, this:0x7f38fcadbfc0, is_inited_:true, trans_id:{txid:3421798}, ls_id:{id:1}, ctx:0x7f38fcad9050, tx_data_guard:{tx_data:NULL}, is_callbacked_:false, is_dynamic_:false, mds_range_:{range_array_.count():0, range_array_:[]}, cb_arg_array_:[], 
first_part_scn_:{val:18446744073709551615, v:3}}, ctx_tx_data_:{ctx_mgr_:0x7f33de404030, tx_data_guard_:{tx_data:{tx_id:{txid:3421798}, ref_cnt:1, state:“ABORT”, commit_version:{val:18446744073709551615, v:3}, start_scn:{val:18446744073709551615, v:3}, end_scn:{val:18446744073709551615, v:3}, undo_status_list:{head:null, undo_node_cnt:0{}}}}, read_only_:false}, role_state_:0, start_replay_ts_:{val:18446744073709551615, v:3}, start_recover_ts_:{val:18446744073709551615, v:3}, is_incomplete_replay_ctx_:false, mt_ctx_:{ObIMvccCtx={alloc_type=0 ctx_descriptor=0 min_table_version=1715241401789224 max_table_version=1715241401789224 trans_version={val:4611686018427387903, v:0} commit_version={val:18446744073709551615, v:3} lock_wait_start_ts=0 replay_compact_version={val:0, v:0}} end_code=-6002 tx_status=1 is_readonly=false ref=0 trans_id={txid:3421798} ls_id=1 callback_alloc_count=1 callback_free_count=1 checksum=0 tmp_checksum=0 checksum_scn={val:0, v:0} redo_filled_count=0 redo_sync_succ_count=0 redo_sync_fail_count=0 main_list_length=0 unsynced_cnt=0 unsubmitted_cnt_=0 cb_statistics:[main=2, slave=0, merge=0, tx_end=2, rollback_to=0, fast_commit=0, remove_memtable=0, ext_info_log_cb=0]}, coord_prepare_info_arr_:[], upstream_state:10, retain_cause:-1, 2pc_role:-1, collected:[], rec_log_ts:{val:18446744073709551615, v:3}, prev_rec_log_ts:{val:18446744073709551615, v:3}, lastest_snapshot:{val:18446744073709551615, v:3}, state_info_array:[], last_request_ts:1715747001413221, block_frozen_memtable:null}) [2024-05-15 12:23:21.413803] INFO [STORAGE.TRANS] handle_trans_abort_request (ob_trans_service_v4.cpp:2019) [1952023][T1004_BalanceEx][T1004][YB42AC1E8DA7-0006149F7BE77580-0-0] [lt=60] handle trans abort request(ret=0, abort_req={txMsg:{type:22, cluster_version:17180000769, tenant_id:1004, tx_id:{txid:3421798}, receiver:{id:1}, sender:{id:9223372036854775807}, sender_addr:“172.30.141.167:2882”, epoch:-1, request_id:4, timestamp:1715747001413648, cluster_id:1711516104}, 
reason:-6002}) [2024-05-15 12:23:21.413819] INFO [STORAGE.TRANS] rollback_tx (ob_tx_api.cpp:339) [1952023][T1004_BalanceEx][T1004][YB42AC1E8DA7-0006149F7BE77580-0-0] [lt=13] rollback tx(ret=0, *this={is_inited_:true, tenant_id_:1004, this:0x7f30af804030}, tx={this:0x7f392c6375c0, tx_id:{txid:3421798}, state:7, addr:“172.30.141.167:2882”, tenant_id:1004, session_id:3221712468, assoc_session_id:3221712468, xid:NULL, xa_mode:"", xa_start_addr:“0.0.0.0:0”, access_mode:0, tx_consistency_type:0, isolation:1, snapshot_version:{val:18446744073709551615, v:3}, snapshot_scn:0, active_scn:1715747004231762, op_sn:4, alloc_ts:1715747001412155, active_ts:1715747001412155, commit_ts:-1, finish_ts:1715747001413221, timeout_us:129999224, lock_timeout_us:-1, expire_ts:1715747131411379, coord_id:{id:-1}, parts:[{id:{id:1}, addr:“172.30.141.167:2882”, epoch:438493338572096, first_scn:1715747004231764, last_scn:1715747004231764, last_touch_ts:1715747004231765}], exec_info_reap_ts:1715747004231764, commit_version:{val:18446744073709551615, v:3}, commit_times:0, commit_cb:null, cluster_id:1711516104, cluster_version:17180000769, flags_.SHADOW:false, flags_.INTERRUPTED:false, flags_.BLOCK:false, flags_.REPLICA:false, can_elr:true, cflict_txs:[], abort_cause:-6002, commit_expire_ts:0, commit_task_.is_registered():false, ref:2}) [2024-05-15 12:23:21.413983] INFO [STORAGE.TRANS] get_number (ob_id_service.cpp:390) [1952023][T1004_BalanceEx][T1004][YB42AC1E8DA7-0006149F7BE77580-0-0] [lt=31] get number(ret=0, service_type_=0, range=1, base_id=1715747001413221000, start_id=1715747001413221000, end_id=1715747001413221001) [2024-05-15 12:23:21.414150] INFO [PALF] handle_next_submit_log_ (log_sliding_window.cpp:1091) [1952023][T1004_BalanceEx][T1004][YB42AC1E8DA7-0006149F7BE77580-0-0] [lt=11] [PALF STAT GROUP LOG INFO](palf_id=1, self=“172.30.141.167:2882”, role=“LEADER”, total_group_log_cnt=10, avg_log_batch_cnt=1, total_group_log_size=2042, avg_group_log_size=204) [2024-05-15 12:23:21.414172] 
INFO [PALF] submit_log (palf_handle_impl.cpp:456) [1952023][T1004_BalanceEx][T1004][YB42AC1E8DA7-0006149F7BE77580-0-0] [lt=19] [PALF STAT APPEND DATA SIZE](this={palf_id:1, self:“172.30.141.167:2882”, has_set_deleted:false}, append size=2042)
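The key fields of the [TENANT_TRANSFER] entry in the log above can be pulled out with a few regular expressions. The following is a rough sketch, not an official parser: `parse_transfer_task` and `time_in_progress` are hypothetical helpers, the excerpt is a shortened copy of the `task={...}` payload, `start_scn` is assumed to be nanoseconds since the Unix epoch, and the log timestamps are assumed to be UTC+8 server time.

```python
import re
from datetime import datetime, timedelta, timezone

# Shortened copy of the task payload from the [TENANT_TRANSFER] log entry.
LOG_EXCERPT = (
    'task={task_id:{id:3}, src_ls:{id:1001}, dest_ls:{id:1003}, '
    'tablet_list:[{tablet_id:{id:248954}, transfer_seq:0}], '
    'start_scn:{val:1715356662888182001, v:0}, finish_scn:{val:0, v:0}, '
    'status:{status:2, status:"DOING"}, balance_task_id:{id:809128}}'
)

FIELD_PATTERNS = {
    # The lookbehind keeps balance_task_id from matching as task_id.
    "task_id": r"(?<!_)task_id:\{id:(\d+)\}",
    "src_ls": r"src_ls:\{id:(\d+)\}",
    "dest_ls": r"dest_ls:\{id:(\d+)\}",
    "tablet_id": r"tablet_id:\{id:(\d+)\}",
    "start_scn": r"start_scn:\{val:(\d+)",
    "finish_scn": r"finish_scn:\{val:(\d+)",
}


def parse_transfer_task(entry: str) -> dict:
    """Extract numeric task fields and the textual status from a log entry."""
    task = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = re.search(pattern, entry)
        if match is None:
            raise ValueError(f"field {name!r} not found in log entry")
        task[name] = int(match.group(1))
    # Accept straight or typographic quotes, since forum pastes often convert them.
    status = re.search(r'status:["\u201c]([A-Z_]+)["\u201d]', entry)
    task["status"] = status.group(1) if status else None
    return task


def time_in_progress(task: dict, observed_at: datetime) -> timedelta:
    """Time between start_scn (assumed epoch nanoseconds) and observed_at."""
    seconds, nanos = divmod(task["start_scn"], 1_000_000_000)
    started = datetime.fromtimestamp(seconds, tz=timezone.utc).replace(
        microsecond=nanos // 1000
    )
    return observed_at - started


task = parse_transfer_task(LOG_EXCERPT)
# Timestamp of the next entry in the log, read as UTC+8 (assumption).
observed_at = datetime(2024, 5, 15, 12, 23, 21, tzinfo=timezone(timedelta(hours=8)))
print(task["status"], time_in_progress(task, observed_at))
```

If those assumptions hold, task 3 (tablet 248954, LS 1001 to LS 1003) had been in the DOING state for roughly four and a half days when this entry was written, with finish_scn still 0.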

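Yesterday's logs are still available, and the trace ID YB42AC1E8DA7-0006149F7BE77580-0-0 can be used to pull more information from them. Could you check whether the cause can be found?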
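One way to pull every entry for that trace ID out of a collected log is a simple line filter. This is only a sketch: `entries_for_trace` is a hypothetical helper, the sample lines are shortened from the log pasted in this thread, and the second trace ID is invented purely to show a non-matching line.

```python
from typing import Iterable, Iterator

TRACE_ID = "YB42AC1E8DA7-0006149F7BE77580-0-0"


def entries_for_trace(lines: Iterable[str], trace_id: str) -> Iterator[str]:
    """Yield log lines that carry the given trace ID in square brackets."""
    needle = f"[{trace_id}]"
    for line in lines:
        if needle in line:
            yield line.rstrip("\n")


# Shortened sample lines; the last one uses a made-up trace ID.
SAMPLE_LINES = [
    "[2024-05-15 12:23:21.413737] INFO [STORAGE.TRANS] abort_ "
    "(ob_trans_part_ctx.cpp:1870) [1952023][T1004_BalanceEx][T1004]"
    "[YB42AC1E8DA7-0006149F7BE77580-0-0] [lt=74] tx abort\n",
    "[2024-05-15 12:23:21.413983] INFO [STORAGE.TRANS] get_number "
    "(ob_id_service.cpp:390) [1952023][T1004_BalanceEx][T1004]"
    "[YB42AC1E8DA7-0006149F7BE77580-0-0] [lt=31] get number\n",
    "[2024-05-15 12:23:21.500000] INFO [PALF] example "
    "[1][T1004_Other][T1004][Y0-0000000000000000-0-0] [lt=1] unrelated\n",
]

matches = list(entries_for_trace(SAMPLE_LINES, TRACE_ID))
print(len(matches))
```

For a real log, the same function can be fed an open file object, for example `entries_for_trace(open(path, encoding="utf-8", errors="replace"), TRACE_ID)`, where `path` points to wherever the observer logs were collected.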

Please collect the logs and send them over.

Also, please run select * from __all_storage_ha_error_diagnose_history where task_id = 3 and tenant_id = 1004; and see what it returns.

Then check this table as well: __all_storage_ha_perf_diagnose_history

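There is no obvious cause for the anomaly. I am not very familiar with transfer, so please open a new post; the on-call staff will route it to the right people, who can look into why this transfer task took so long (it appears to have gotten stuck partway through).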

OK, thank you.