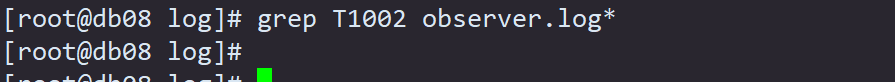

Is the keyword wrong?

On 3.1, skip filtering by tenant and just grep for those strings directly.

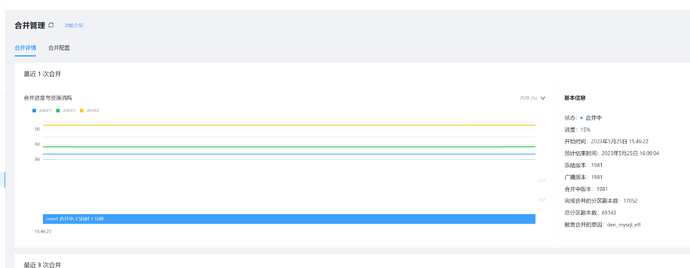

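For this tenant, __all_virtual_tenant_memstore_info shows the memstore running out of memory, and __all_virtual_table_mgr shows several frozen memtables that have not been dumped (minor-compacted). Why they were not dumped has not been pinned down yet. Insufficient resources is one possible cause, and if that is the cause, it makes sense that the problem cleared up after scaling out.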
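As a rough sketch of what "running out of memory" means when reading __all_virtual_tenant_memstore_info, the helper below compares memstore usage with the memstore limit. The `MemstoreRow` type, the column names `total_memstore_used` and `memstore_limit`, and the 0.95 threshold are all assumptions for illustration. Check the actual columns of the virtual table in your OceanBase version before relying on them.

```python
from dataclasses import dataclass


@dataclass
class MemstoreRow:
    # Hypothetical subset of a __all_virtual_tenant_memstore_info row.
    # Column names are assumptions; verify them against your version.
    tenant_id: int
    total_memstore_used: int  # bytes
    memstore_limit: int       # bytes


def memstore_usage_ratio(row: MemstoreRow) -> float:
    """Fraction of the memstore limit currently in use."""
    if row.memstore_limit <= 0:
        raise ValueError("memstore_limit must be positive")
    return row.total_memstore_used / row.memstore_limit


def is_memstore_full(row: MemstoreRow, threshold: float = 0.95) -> bool:
    """Flag a tenant whose memstore usage is at or above `threshold` of its limit.

    The default threshold is an arbitrary illustrative value.
    """
    return memstore_usage_ratio(row) >= threshold
```

A tenant that stays flagged while frozen memtables keep piling up in __all_virtual_table_mgr is consistent with dumps not keeping up, though it does not by itself say why.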

grep "try minor merge all" observer.log*

observer.log.20230525100646:[2023-05-25 10:06:44.604174] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:941 [49397][954][Y0-0000000000000000] [lt=20] [dc=0] start try minor merge all

observer.log.20230525100646:[2023-05-25 10:06:45.356322] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:996 [49397][954][Y0-0000000000000000] [lt=5] [dc=0] finish try minor merge all(cost_ts=762142)

observer.log.20230525100750:[2023-05-25 10:07:05.516910] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:941 [49397][954][Y0-0000000000000000] [lt=4] [dc=0] start try minor merge all

observer.log.20230525100750:[2023-05-25 10:07:06.279405] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:996 [49397][954][Y0-0000000000000000] [lt=6] [dc=0] finish try minor merge all(cost_ts=773257)

observer.log.20230525100750:[2023-05-25 10:07:26.435940] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:941 [49397][954][Y0-0000000000000000] [lt=20] [dc=0] start try minor merge all

observer.log.20230525100750:[2023-05-25 10:07:47.291295] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:941 [49397][954][Y0-0000000000000000] [lt=19] [dc=0] start try minor merge all

observer.log.20230525100750:[2023-05-25 10:07:48.083496] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:996 [49397][954][Y0-0000000000000000] [lt=6] [dc=0] finish try minor merge all(cost_ts=804475)

observer.log.20230525100849:[2023-05-25 10:08:08.243794] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:941 [49397][954][Y0-0000000000000000] [lt=8] [dc=0] start try minor merge all

observer.log.20230525100849:[2023-05-25 10:08:08.995058] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:996 [49397][954][Y0-0000000000000000] [lt=6] [dc=0] finish try minor merge all(cost_ts=761895)

observer.log.20230525100849:[2023-05-25 10:08:29.163674] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:941 [49397][954][Y0-0000000000000000] [lt=22] [dc=0] start try minor merge all

observer.log.20230525100849:[2023-05-25 10:08:29.860295] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:996 [49397][954][Y0-0000000000000000] [lt=5] [dc=0] finish try minor merge all(cost_ts=706915)

observer.log.20230525100950:[2023-05-25 10:09:10.881318] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:941 [49397][954][Y0-0000000000000000] [lt=17] [dc=0] start try minor merge all

observer.log.20230525100950:[2023-05-25 10:09:11.592607] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:996 [49397][954][Y0-0000000000000000] [lt=6] [dc=0] finish try minor merge all(cost_ts=721250)

observer.log.20230525100950:[2023-05-25 10:09:31.756746] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:941 [49397][954][Y0-0000000000000000] [lt=21] [dc=0] start try minor merge all

observer.log.20230525100950:[2023-05-25 10:09:32.454625] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:996 [49397][954][Y0-0000000000000000] [lt=5] [dc=0] finish try minor merge all(cost_ts=708756)

observer.log.20230525101052:[2023-05-25 10:09:52.614567] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:941 [49397][954][Y0-0000000000000000] [lt=23] [dc=0] start try minor merge all

observer.log.20230525101052:[2023-05-25 10:09:53.316633] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:996 [49397][954][Y0-0000000000000000] [lt=5] [dc=0] finish try minor merge all(cost_ts=712809)

observer.log.20230525101052:[2023-05-25 10:10:13.476189] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:941 [49397][954][Y0-0000000000000000] [lt=3] [dc=0] start try minor merge all

observer.log.20230525101052:[2023-05-25 10:10:14.153452] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:996 [49397][954][Y0-0000000000000000] [lt=7] [dc=0] finish try minor merge all(cost_ts=686418)

observer.log.20230525101052:[2023-05-25 10:10:34.307712] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:941 [49397][954][Y0-0000000000000000] [lt=9] [dc=0] start try minor merge all

observer.log.20230525101052:[2023-05-25 10:10:35.046329] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:996 [49397][954][Y0-0000000000000000] [lt=5] [dc=0] finish try minor merge all(cost_ts=748325)

observer.log.20230525101201:[2023-05-25 10:10:55.193882] INFO [STORAGE.COMPACTION] ob_partition_scheduler.cpp:941 [49397][954][Y0-0000000000000000] [lt=24] [dc=0] start try minor merge all
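Reading start/finish pairs by eye is error-prone; for example, the start at 10:07:26.435940 above has no matching finish before the next start at 10:07:47. The sketch below pairs each `start try minor merge all` with the following `finish` line and reports rounds that never finished. It assumes the lines come from a single scheduler thread in chronological order, as in the output above; `pair_rounds` is a hypothetical helper name. `cost_ts` appears to be in microseconds, judging by the timestamps.

```python
import re
from datetime import datetime

# Matches the bracketed timestamp and the start/finish marker, e.g.
# ...:[2023-05-25 10:06:45.356322] INFO ... finish try minor merge all(cost_ts=762142)
LINE_RE = re.compile(
    r"\[(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{6})\].*?"
    r"(?P<kind>start|finish) try minor merge all"
    r"(?:\(cost_ts=(?P<cost>\d+)\))?"
)


def pair_rounds(lines):
    """Pair each 'start' with the next 'finish'.

    Returns a list of (start_time, cost_ts or None). None means the start was
    followed by another start, or by the end of input, without a finish.
    """
    rounds = []
    pending = None
    for line in lines:
        m = LINE_RE.search(line)
        if not m:
            continue
        ts = datetime.strptime(m.group("ts"), "%Y-%m-%d %H:%M:%S.%f")
        if m.group("kind") == "start":
            if pending is not None:
                rounds.append((pending, None))  # previous round never finished
            pending = ts
        elif pending is not None:
            rounds.append((pending, int(m.group("cost"))))
            pending = None
    if pending is not None:
        rounds.append((pending, None))  # input ended mid-round
    return rounds
```

A trailing `None` may only mean the captured output was cut off (as with the last start above); a `None` in the middle is the more interesting signal.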

grep "schedule merge sstable dag finish.pkey" observer.log

5807, partition:null}, is_queuing_table=false, dag=0x7e3a7ca0ac80)

observer.log.20230525095126:[2023-05-25 09:51:24.091454] INFO [STORAGE] ob_partition_scheduler.cpp:1669 [49397][954][Y0-0000000000000000] [lt=5] [dc=1] schedule merge sstable dag finish(pkey={tid:1105009185977873, partition_id:0, part_cnt:0}, pg_key={tid:1105009185977873, partition_id:0, part_cnt:0}, index_id=1105009185977873, merge_type=3, frozen_version="0-0-0", info={tenant_id:1005, last_freeze_timestamp:1676357816577646, handle_id:9223372036854775807, emergency:false, protection_clock:9223372036854775807, partition:null}, is_queuing_table=false, dag=0x7e3a7ca10e00)

observer.log.20230525095126:[2023-05-25 09:51:24.091504] INFO [STORAGE] ob_partition_scheduler.cpp:1669 [49397][954][Y0-0000000000000000] [lt=5] [dc=1] schedule merge sstable dag finish(pkey={tid:1105009185977873, partition_id:0, part_cnt:0}, pg_key={tid:1105009185977873, partition_id:0, part_cnt:0}, index_id=1105009185977876, merge_type=3, frozen_version="0-0-0", info={tenant_id:1005, last_freeze_timestamp:1676357816577646, handle_id:9223372036854775807, emergency:false, protection_clock:9223372036854775807, partition:null}, is_queuing_table=false, dag=0x7fb5fafd4180)

observer.log.20230525095126:[2023-05-25 09:51:24.091564] INFO [STORAGE] ob_partition_scheduler.cpp:1669 [49397][954][Y0-0000000000000000] [lt=6] [dc=0] schedule merge sstable dag finish(pkey={tid:1105009185977873, partition_id:0, part_cnt:0}, pg_key={tid:1105009185977873, partition_id:0, part_cnt:0}, index_id=1105009185977878, merge_type=3, frozen_version="0-0-0", info={tenant_id:1005, last_freeze_timestamp:1676357816577646, handle_id:9223372036854775807, emergency:false, protection_clock:9223372036854775807, partition:null}, is_queuing_table=false, dag=0x7fb5fafda300)

observer.log.20230525095126:[2023-05-25 09:51:24.091612] INFO [STORAGE] ob_partition_scheduler.cpp:1669 [49397][954][Y0-0000000000000000] [lt=3] [dc=1] schedule merge sstable dag finish(pkey={tid:1105009185971965, partition_id:0, part_cnt:0}, pg_key={tid:1105009185971965, partition_id:0, part_cnt:0}, index_id=1105009185971965, merge_type=3, frozen_version="0-0-0", info={tenant_id:1005, last_freeze_timestamp:1676357816577646, handle_id:9223372036854775807, emergency:false, protection_clock:9223372036854775807, partition:null}, is_queuing_table=false, dag=0x7fb5fafe0480)

observer.log.20230525095126:[2023-05-25 09:51:24.091661] INFO [STORAGE] ob_partition_scheduler.cpp:1669 [49397][954][Y0-0000000000000000] [lt=5] [dc=1] schedule merge sstable dag finish(pkey={tid:1105009185977234, partition_id:0, part_cnt:0}, pg_key={tid:1105009185977234, partition_id:0, part_cnt:0}, index_id=1105009185977234, merge_type=3, frozen_version="0-0-0", info={tenant_id:1005, last_freeze_timestamp:1676357816577646, handle_id:9223372036854775807, emergency:false, protection_clock:9223372036854775807, partition:null}, is_queuing_table=false, dag=0x7fb5fafe6600)

observer.log.20230525095126:[2023-05-25 09:51:24.091711] INFO [STORAGE] ob_partition_scheduler.cpp:1669 [49397][954][Y0-0000000000000000] [lt=5] [dc=1] schedule merge sstable dag finish(pkey={tid:1105009185977234, partition_id:0, part_cnt:0}, pg_key={tid:1105009185977234, partition_id:0, part_cnt:0}, index_id=1105009185977241, merge_type=3, frozen_version="0-0-0", info={tenant_id:1005, last_freeze_timestamp:1676357816577646, handle_id:9223372036854775807, emergency:false, protection_clock:9223372036854775807, partition:null}, is_queuing_table=false, dag=0x7fb5fafec780)

observer.log.20230525095126:[2023-05-25 09:51:24.091763] INFO [STORAGE] ob_partition_scheduler.cpp:1669 [49397][954][Y0-0000000000000000] [lt=5] [dc=1] schedule merge sstable dag finish(pkey={tid:1105009185972044, partition_id:0, part_cnt:0}, pg_key={tid:1105009185972044, partition_id:0, part_cnt:0}, index_id=1105009185972044, merge_type=3, frozen_version="0-0-0", info={tenant_id:1005, last_freeze_timestamp:1676357816577646, handle_id:9223372036854775807, emergency:false, protection_clock:9223372036854775807, partition:null}, is_queuing_table=false, dag=0x7fb5faff2900)

observer.log.20230525095126:[2023-05-25 09:51:24.091810] INFO [STORAGE] ob_partition_scheduler.cpp:1669 [49397][954][Y0-0000000000000000] [lt=5] [dc=1] schedule merge sstable dag finish(pkey={tid:1105009185972044, partition_id:0, part_cnt:0}, pg_key={tid:1105009185972044, partition_id:0, part_cnt:0}, index_id=1105009185972045, merge_type=3, frozen_version="0-0-0", info={tenant_id:1005, last_freeze_timestamp:1676357816577646, handle_id:9223372036854775807, emergency:false, protection_clock:9223372036854775807, partition:null}, is_queuing_table=false, dag=0x7fb5faff8a80)

observer.log.20230525095126:[2023-05-25 09:51:24.091859] INFO [STORAGE] ob_partition_scheduler.cpp:1669 [49397][954][Y0-0000000000000000] [lt=5] [dc=1] schedule merge sstable dag finish(pkey={tid:1105009185970722, partition_id:0, part_cnt:0}, pg_key={tid:1105009185970722, partition_id:0, part_cnt:0}, index_id=1105009185970722, merge_type=3, frozen_version="0-0-0", info={tenant_id:1005, last_freeze_timestamp:1676357816577646, handle_id:9223372036854775807, emergency:false, protection_clock:9223372036854775807, partition:null}, is_queuing_table=false, dag=0x7fb5faffec00)
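To get an overview of this output instead of scrolling through it, the sketch below extracts `tid`, `partition_id`, `index_id`, `merge_type` and `tenant_id` from each `schedule merge sstable dag finish` line and counts scheduled dags per tenant and table. The regex is written against the line layout shown above and may need adjusting for other versions; the function names are hypothetical. It does not interpret `merge_type`, whose meaning is not given here.

```python
import re
from collections import Counter

# Pulls a few fields out of a "schedule merge sstable dag finish(...)" line.
DAG_RE = re.compile(
    r"schedule merge sstable dag finish\(pkey=\{tid:(?P<tid>\d+), partition_id:(?P<pid>\d+),"
    r".*?index_id=(?P<index_id>\d+), merge_type=(?P<merge_type>\d+)"
    r".*?tenant_id:(?P<tenant_id>\d+)"
)


def parse_dag_finish(line):
    """Return the extracted fields as ints, or None if the line does not match."""
    m = DAG_RE.search(line)
    if not m:
        return None
    return {k: int(v) for k, v in m.groupdict().items()}


def count_by_tenant_and_tid(lines):
    """Count scheduled dags per (tenant_id, tid); truncated lines are skipped."""
    counts = Counter()
    for line in lines:
        rec = parse_dag_finish(line)
        if rec:
            counts[(rec["tenant_id"], rec["tid"])] += 1
    return counts
```

This makes it easy to check whether the tenant under investigation shows up in the scheduling output at all.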

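The task that hung last time got stuck on May 6. Before May 6 this tenant still had plenty of resources, though I no longer remember exactly how much. Then on the 16th I saw that merges might be failing because of insufficient resources, and at first I took that to mean the overall resources of the observer cluster. So I reclaimed resources from some idle tenants, including this one, and it was still stuck. Today I saw another memstore alert for this tenant, and you have also been investigating it all along, so I scaled this tenant up again to see what would happen, and that seems to have worked.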

Can we confirm that the dump failed because of insufficient resources?

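These logs contain too little information. To simplify the investigation we look at a single partition of a single table, namely tid:1101710651031555, partition_id:0 (the one we have been looking at all along). The goal is to confirm whether a dump was scheduled for it. On 3.1 this can only be checked through the logs, which is rather tedious.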

Judging by the outcome, insufficient resources in tenant 1002 is the most likely cause.

For now this can only be inferred from the outcome.

To investigate from the logs, start with the partition tid:1101710651031555, partition_id:0 and use the `schedule merge sstable dag finish` string to determine whether a dump was scheduled for it.
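A minimal sketch of that check, assuming the scheduling lines use the same `pkey={tid:..., partition_id:...,` layout as the output shown earlier: it returns the timestamps at which a dag was scheduled for one partition. `schedule_times` is a hypothetical helper; an empty result only means no scheduling was found in the lines scanned, not that none ever happened.

```python
import re
from datetime import datetime

TS_RE = re.compile(r"\[(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{6})\]")
MARKER = "schedule merge sstable dag finish"


def schedule_times(lines, tid, partition_id):
    """Timestamps at which a dag was scheduled for pkey (tid, partition_id)."""
    # Trailing comma keeps partition_id:0 from matching partition_id:01, etc.
    key = f"pkey={{tid:{tid}, partition_id:{partition_id},"
    times = []
    for line in lines:
        if MARKER in line and key in line:
            m = TS_RE.search(line)
            if m:
                times.append(datetime.strptime(m.group(1), "%Y-%m-%d %H:%M:%S.%f"))
    return times
```

For the partition discussed here, the call would be `schedule_times(lines, 1101710651031555, 0)`.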

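If it was scheduled, check the following strings, each printed at a different stage of the dump task's execution, to see whether the task ran into anything abnormal (a failure usually comes with an error code):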

- `add dag success`
- `schedule one task`
- `task finish process`
- `dag finish`
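Once the lines for one partition have been collected, the stage check can be sketched as below: it reports which of the four stage strings appeared, which are missing, and any error codes. Two assumptions here: that error codes appear in the form `ret=-NNNN`, and that the input lines have already been narrowed down to one partition or dag. `stage_report` is a hypothetical helper. Because `dag finish` is also a substring of `schedule merge sstable dag finish`, scheduling lines are skipped explicitly.

```python
import re

# Stage strings from the discussion, in the order a dump task is expected to print them.
STAGES = ["add dag success", "schedule one task", "task finish process", "dag finish"]
SCHEDULE_MARKER = "schedule merge sstable dag finish"
# Assumption: error codes appear as "ret=-NNNN" in the log line.
RET_RE = re.compile(r"ret=(-\d+)")


def stage_report(lines):
    """Return (stages seen, stages missing, error codes) for pre-filtered lines."""
    seen = set()
    errors = []
    for line in lines:
        if SCHEDULE_MARKER in line:
            continue  # would otherwise be counted as "dag finish"
        for stage in STAGES:
            if stage in line:
                seen.add(stage)
        errors.extend(int(code) for code in RET_RE.findall(line))
    present = [s for s in STAGES if s in seen]
    missing = [s for s in STAGES if s not in seen]
    return present, missing, errors
```

A task that repeatedly reaches `task finish process` with a negative `ret` but never `dag finish` would fit the "keeps failing during execution" hypothesis below.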

My guess is that the dump task kept failing because it ran out of resources during execution.

But the evidence has to come from the logs.

Finding these entries in a huge volume of observer logs is like looking for a needle in a haystack.
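One way to make the search less painful is a single streaming pass over all rotated files that keeps only lines containing the partition key and at least one of the stage strings, instead of chaining several greps. This is a sketch assuming the rotated logs are uncompressed text named `observer.log*`; `scan_logs` is a hypothetical helper. Files are visited in sorted name order, so the current `observer.log` comes before the timestamped rotations.

```python
import glob


def scan_logs(pattern, required, any_of):
    """Yield (path, line) for lines that contain every string in `required`
    and at least one string in `any_of` (no constraint if `any_of` is empty),
    across all files matching the glob `pattern`.
    """
    for path in sorted(glob.glob(pattern)):
        # errors="replace" keeps a stray undecodable byte from aborting the scan
        with open(path, encoding="utf-8", errors="replace") as f:
            for line in f:
                if all(s in line for s in required) and (
                    not any_of or any(s in line for s in any_of)
                ):
                    yield path, line.rstrip("\n")
```

For example, `scan_logs("observer.log*", ["tid:1101710651031555, partition_id:0"], ["add dag success", "schedule one task", "task finish process", "dag finish"])` assumes the stage lines carry the partition key in that form, which should be confirmed on a real log first.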

That is why 3.2 and later add a set of internal tables and virtual tables to help with troubleshooting.